Data Testing Tools: Key Capabilities and 6 Tools You Should Know

Databand.ai

AUGUST 30, 2023

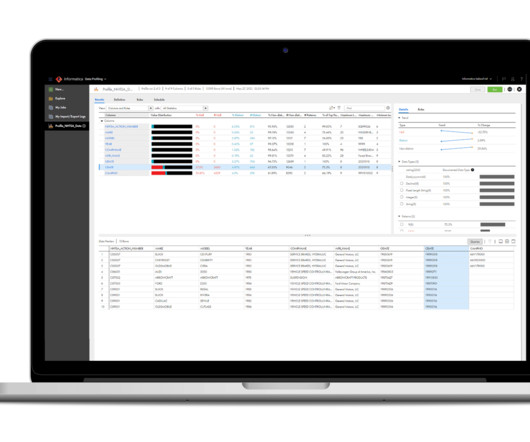

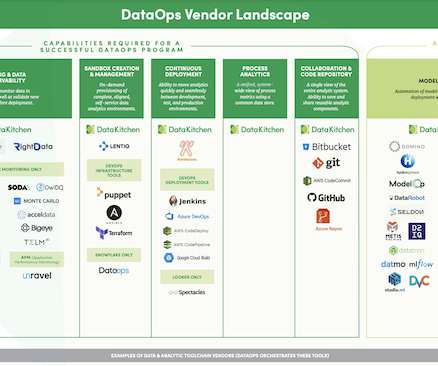

Data testing tools play a vital role in data preparation, which involves cleaning, transforming, and enriching raw data before it is used for analysis or machine learning models. There are several types of data testing tools. This is part of a series of articles about data quality.
