DataOps Tools: Key Capabilities & 5 Tools You Must Know About

Databand.ai

AUGUST 30, 2023

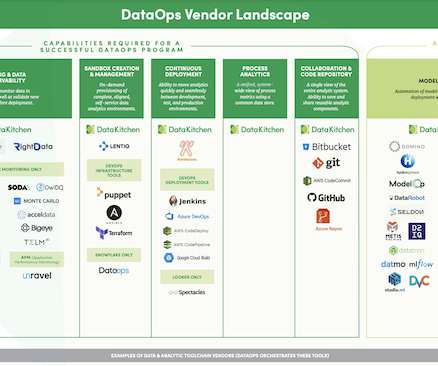

DataOps tools help organizations put DataOps practices into action by giving data teams a unified platform to collaborate on, share, and manage their data assets. With these tools, organizations can break down silos, shorten time-to-insight, and improve the overall quality of their data analytics processes.
