Data Lake Explained: A Comprehensive Guide to Its Architecture and Use Cases

AltexSoft

AUGUST 29, 2023

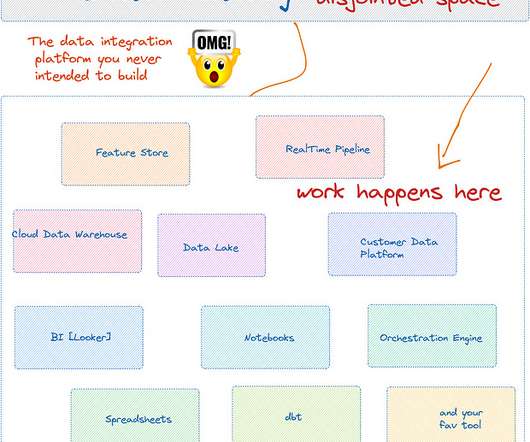

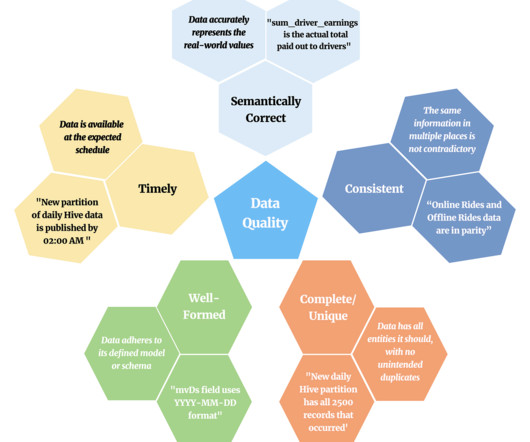

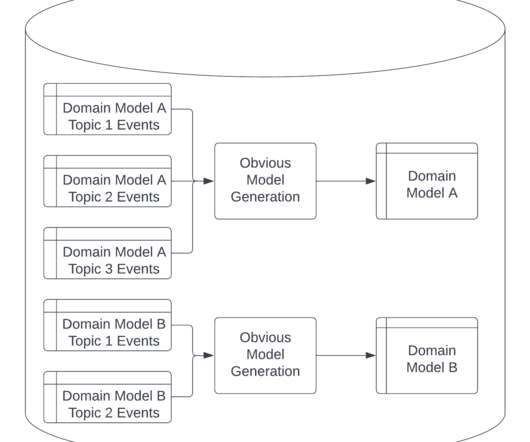

Data lakes emerged as expansive reservoirs where raw data of any kind can sit side by side in its native format, offering considerable flexibility and scalability. This article explains what a data lake is, how its architecture is organized, and what it is used for. It also covers, in a nutshell, how a data lake differs from a data warehouse.
