Data Pipeline: Definition, Architecture, Examples, and Use Cases

ProjectPro

DECEMBER 7, 2021

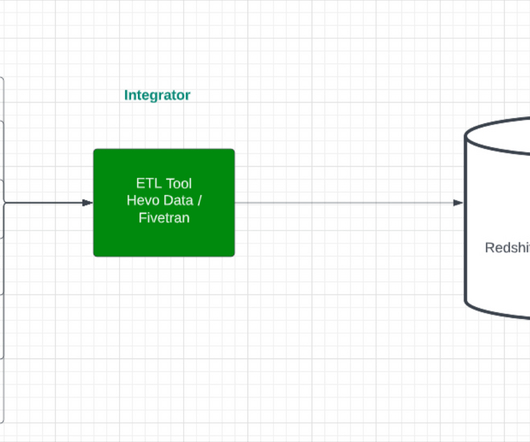

A pipeline may filter, normalize, and consolidate data so that it arrives in the form the destination needs. In broad terms, two types of data flow through a data pipeline: structured and unstructured. The transformed data is then loaded into the destination data warehouse or data lake.
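To make the transformation steps concrete, here is a minimal Python sketch of a pipeline that filters, normalizes, and consolidates records before loading them. It is an illustration under stated assumptions, not a production design. The record fields, the function names, and the `customer_totals` table are all hypothetical. An in-memory SQLite database from the standard library stands in for the destination warehouse, and the sketch handles only structured records.

```python
import sqlite3
from collections import defaultdict

# Hypothetical raw records from two sources; field names are illustrative only.
RAW_RECORDS = [
    {"source": "web", "customer": " Alice ", "amount": "19.50"},
    {"source": "store", "customer": "alice", "amount": "5.25"},
    {"source": "web", "customer": "Bob", "amount": ""},  # missing amount
    {"source": "store", "customer": "BOB ", "amount": "12.75"},
]


def filter_records(records):
    """Filtering: drop records that lack a usable amount."""
    return [r for r in records if r["amount"].strip()]


def normalize(records):
    """Normalizing: trim and lower-case names, convert amounts to floats."""
    return [
        {"customer": r["customer"].strip().lower(), "amount": float(r["amount"])}
        for r in records
    ]


def consolidate(records):
    """Consolidation: merge records from all sources into one total per customer."""
    totals = defaultdict(float)
    for r in records:
        totals[r["customer"]] += r["amount"]
    return dict(totals)


def load(totals, conn):
    """Load: write the transformed rows into the (hypothetical) destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_totals "
        "(customer TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO customer_totals VALUES (?, ?)",
        sorted(totals.items()),
    )
    conn.commit()


def run_pipeline(records, conn):
    """Chain the stages: filter -> normalize -> consolidate -> load."""
    load(consolidate(normalize(filter_records(records))), conn)


if __name__ == "__main__":
    # An in-memory SQLite database stands in for a real data warehouse.
    conn = sqlite3.connect(":memory:")
    run_pipeline(RAW_RECORDS, conn)
    for row in conn.execute(
        "SELECT customer, total FROM customer_totals ORDER BY customer"
    ):
        print(row)
```

Running it prints one consolidated row per customer, `('alice', 24.75)` and `('bob', 12.75)`. The record with the empty amount is dropped during filtering. Keeping each stage as a separate function makes it easy to test a stage on its own or to swap in a different destination in `load`.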
