Organizations often manage operational data using open-source databases like MySQL, frequently deployed on local machines. To enhance data management and security, many organizations prefer deploying these databases on cloud providers like AWS, Azure, or Google Cloud Platform (GCP).

Organizations that host their MySQL database on GCP through Google Cloud SQL can run SQL queries against historical data for transactional purposes. However, GCP MySQL may lack robust monitoring capabilities for real-time analytics and ongoing operations.

In scenarios that require advanced analytics and enhanced monitoring, migrating to a data warehouse like BigQuery is essential, since it provides more robust monitoring and metrics logging features.

Let’s get started with the details of migrating from GCP MySQL to BigQuery!

Why Transfer GCP MySQL Data to BigQuery?

Transferring data from MySQL databases on GCP to BigQuery offers several benefits, including:

- Unstructured Data Analysis: BigQuery object tables provide a structured record interface for analyzing unstructured data stored in Cloud Storage. You can analyze this data using remote functions and derive insights using BigQuery ML.

- Reduced Query Time: BigQuery BI Engine, an in-memory analysis service, caches frequently used BigQuery data to reduce query latency. As a result, BI Engine minimizes the time it takes to derive valuable insights from your real-time data.

Google Cloud Platform (GCP) for MySQL: A Brief Overview

GCP MySQL, or Cloud SQL for MySQL, is a fully managed relational database service on Google Cloud. It allows you to configure, manage, and deploy major versions of MySQL on the Google Cloud Platform. With GCP MySQL, you can focus on building applications with your data rather than managing the underlying infrastructure.

For business continuity, GCP MySQL facilitates seamless recovery with zero data loss. It also safeguards sensitive data by employing automatic encryption at rest and in transit.

Additionally, with Cloud SQL Insights on GCP, you can quickly identify and fix performance issues related to the MySQL databases.

BigQuery: A Brief Overview

BigQuery is a fully managed, serverless data warehouse offered by Google Cloud. It provides a unified analytics platform for storing and analyzing massive amounts of operational data within an organization using simple SQL queries.

BigQuery lets you process petabytes of data without managing complex infrastructure, and you pay only for the resources you use. It also offers real-time analytics, built-in business intelligence, and BigQuery ML, which lets you develop and deploy machine learning models quickly.

You can connect to BigQuery in several ways, including the BigQuery REST API, the Google Cloud console, the bq command-line tool, and client libraries for languages such as Python and Java.

Methods to Connect GCP MySQL to BigQuery

To move data from GCP MySQL to BigQuery, you can use Hevo Data or a manual CSV Export/Import method.

Method 1: Load GCP MySQL Data into BigQuery Tables Using Hevo Data

Hevo Data is a real-time, no-code ELT pipeline platform that lets you automate data pipelines cost-effectively, based on your preferences. With integrations for 150+ data sources, you can extract data from different sources, load it into your desired destinations, and transform it for detailed analysis.

Here are a few key features of Hevo Data:

- Data Transformation: Hevo Data provides analyst-friendly options, such as Python-based Transformation scripts or Drag-and-Drop Transformation blocks, to simplify the data transformation. These options will help you clean, prepare, and transform data before loading it to your desired destination.

- Incremental Data Load: Hevo Data transfers only the data that has been modified, in real time. This ensures efficient bandwidth usage on both ends of the pipeline.

- Auto-Schema Mapping: Hevo Data’s Auto Mapping feature eliminates the tedious task of manually managing the schema. It automatically identifies the structure of incoming data and replicates it to the desired destination schema. You can choose full or incremental mappings based on your data replication needs.
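Incremental loading is commonly built around a "high-watermark" column. The minimal Python sketch below illustrates that general technique. It is not Hevo Data's implementation. It assumes each row carries a hypothetical `updated_at` timestamp, and that the watermark from the previous run is stored somewhere between runs.

```python
from __future__ import annotations

from datetime import datetime


def incremental_batch(rows: list[dict], watermark: datetime) -> tuple[list[dict], datetime]:
    """Return rows modified after `watermark`, plus the watermark to store for the next run.

    Assumes every row has a hypothetical `updated_at` datetime column.
    """
    changed = [row for row in rows if row["updated_at"] > watermark]
    # Advance to the newest change seen; keep the old watermark if nothing changed.
    new_watermark = max((row["updated_at"] for row in changed), default=watermark)
    return changed, new_watermark


if __name__ == "__main__":
    source_rows = [
        {"id": 1, "name": "alpha", "updated_at": datetime(2024, 1, 1, 9, 0)},
        {"id": 2, "name": "beta", "updated_at": datetime(2024, 1, 2, 9, 0)},
        {"id": 3, "name": "gamma", "updated_at": datetime(2024, 1, 3, 9, 0)},
    ]
    last_sync = datetime(2024, 1, 1, 12, 0)  # hypothetical value saved by the previous run
    batch, last_sync = incremental_batch(source_rows, last_sync)
    print([row["id"] for row in batch], last_sync)
```

The strict `>` comparison avoids re-sending the last synced row. The trade-off is that it can miss rows committed later with exactly the same timestamp. A watermark alone also cannot detect deleted rows.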

Let’s see how to connect GCP MySQL to BigQuery using Hevo Data.

Step 1: Configure GCP MySQL as Your Source Connector

Before you begin the setup, review the prerequisites listed in Hevo’s Google Cloud MySQL documentation.

Here are the steps to configure Google Cloud MySQL as your source in Hevo:

- Log into your Hevo account.

- From the Navigation Bar, click PIPELINES.

- Navigate to the Pipelines List View page and click the + CREATE button.

- Select Google Cloud MySQL as the source type in the Select Source Type page.

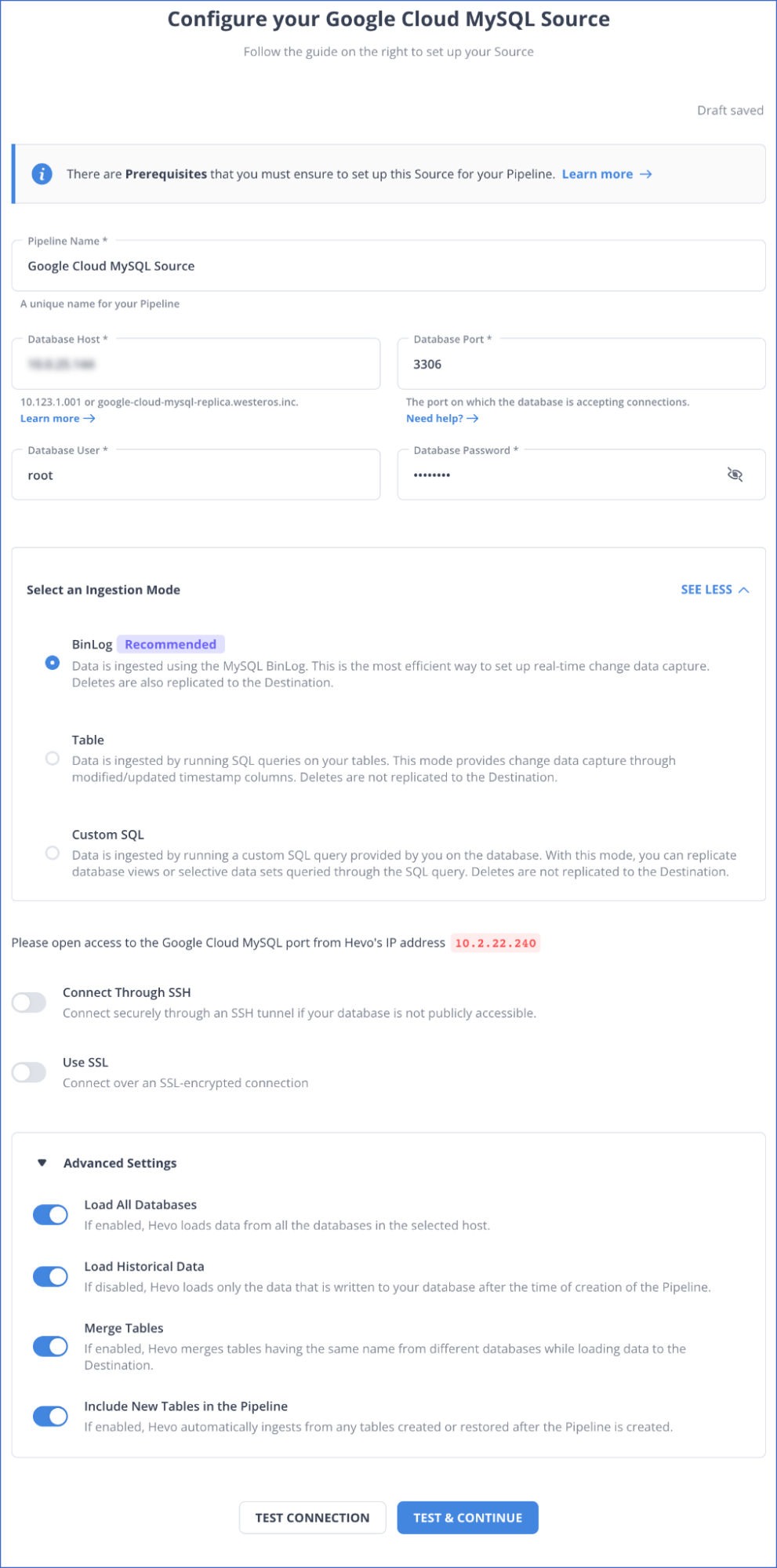

- Specify the mandatory fields in the Configure your Google Cloud MySQL Source page.

GCP MySQL to BigQuery: Configuring Your Google Cloud MySQL Source Page

- Click TEST CONNECTION > TEST & CONTINUE to complete the source configuration.

For more information about source configuration, read Hevo’s Google Cloud MySQL documentation.

Step 2: Configure BigQuery as Your Destination Connector

Before you start the configuration, ensure the following prerequisites are met:

- Access to a Google Cloud Platform (GCP) project with BigQuery. If you don't have one, create it.

- The required roles for the GCP project assigned to the linked Google account.

- An active billing account linked to your GCP project.

- A Team Collaborator role, or any administrator role except Billing Administrator, in Hevo.

Here are the steps to configure your BigQuery destination in Hevo:

- Click the DESTINATIONS option in the Navigation Bar.

- From the Destinations List View page, click the + CREATE button.

- Go to the Add Destination page and choose Google BigQuery as your destination type.

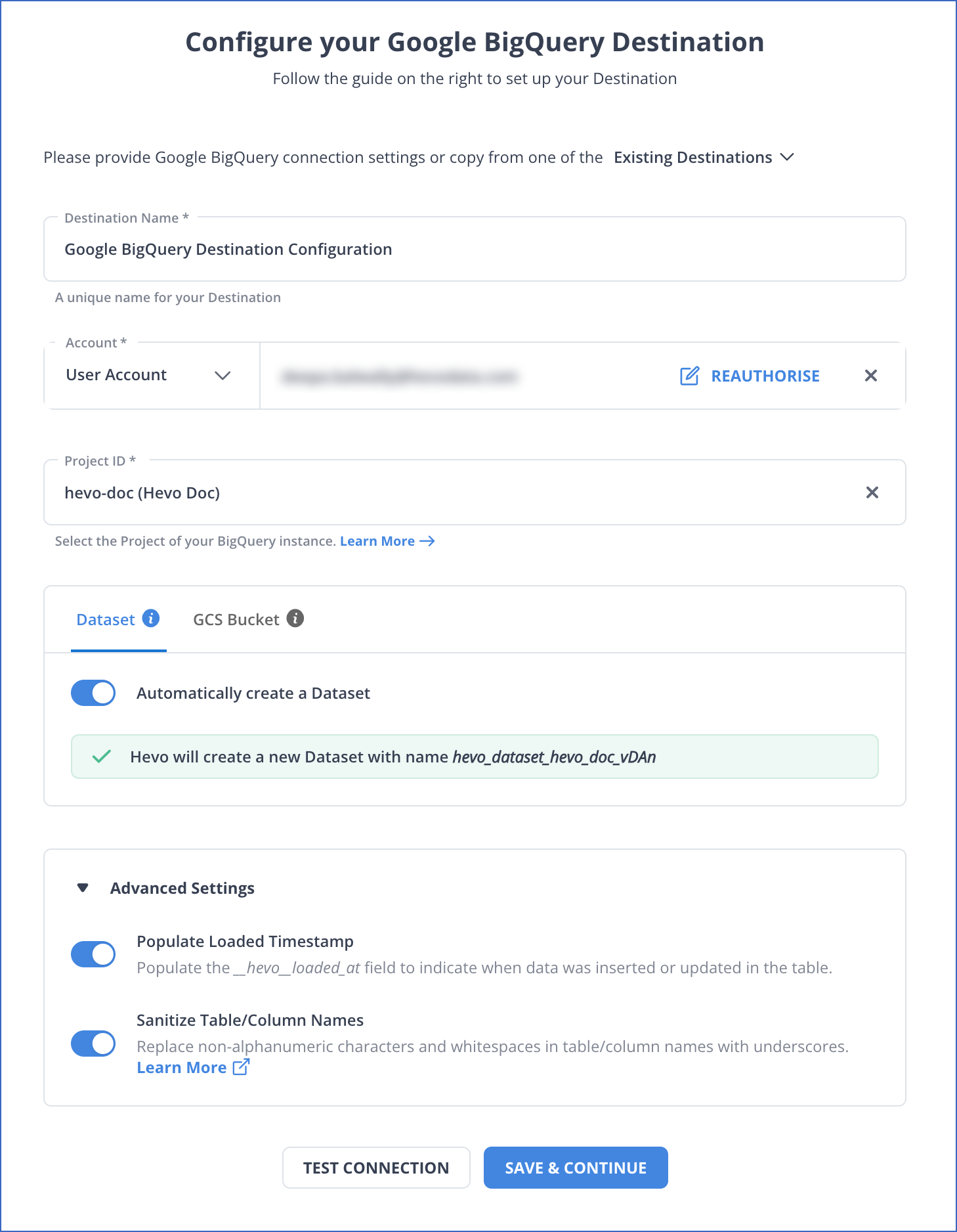

- Provide the required information on the Configure your Google BigQuery Destination page.

- Click TEST CONNECTION > SAVE & CONTINUE to finish the destination configuration.

GCP MySQL to BigQuery: Configuring Your Google BigQuery Destination Page

To learn more about destination configuration, read Hevo’s Google BigQuery documentation.

Method 2: Copy Data from GCP MySQL to BigQuery Using CSV Export/Import Method

For this migration, you export data from Cloud SQL for MySQL to a CSV file in a Cloud Storage (GCS) bucket. You then load the CSV file from the bucket into a BigQuery table.

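Before starting the migration, make sure you have the following in place:

- A Cloud SQL for MySQL instance to export from.

- A Cloud Storage bucket to hold the CSV file.

- A BigQuery dataset to load the data into.

- Permissions to export from the instance and to create tables in the dataset.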

Let’s look into the steps to copy data from GCP MySQL to BigQuery using the CSV Export/Import method:

Step 1: Export Data from GCP MySQL to a CSV File Using the gcloud CLI

- Open the Google Cloud console and go to the Cloud Storage Buckets page.

- Select the bucket that will receive the exported CSV file, or create a new one. You don't need to upload anything manually, because the export command writes the file directly to this bucket.

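- Open Cloud Shell (or any terminal with the gcloud CLI installed). Find the service account of the Cloud SQL instance you are exporting from using the following command:

```shell
gcloud sql instances describe cloudSQL_InstanceName \
  --format="value(serviceAccountEmailAddress)"
```

- Grant the storage.objectAdmin IAM role on your bucket to that service account using the following command. Replace SERVICE_ACCOUNT_EMAIL with the address returned by the previous command:

```shell
gsutil iam ch serviceAccount:SERVICE_ACCOUNT_EMAIL:objectAdmin gs://bucket_name
```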

To learn more about IAM roles, see IAM Permissions.

- Export the data as a CSV file by executing the following command:

```shell
gcloud sql export csv cloudSQL_InstanceName gs://bucket_name/file_name \
  --database=database_name \
  --offload \
  --query="select_query_statement"
```
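If you repeat this export regularly, for example on a schedule, it can help to script it. The minimal Python sketch below does the following:

- It assembles the gsutil grant command and the gcloud export command from this step as argument lists and prints them.
- It executes them through `subprocess` only when `dry_run=False`, which requires the CLIs to be installed and authenticated.

The instance, bucket, database, file, query, and service-account values are hypothetical placeholders.

```python
from __future__ import annotations

import shlex
import subprocess


def export_csv_command(instance: str, bucket: str, file_name: str,
                       database: str, query: str, offload: bool = True) -> list[str]:
    """Assemble the `gcloud sql export csv` command as an argument list."""
    cmd = [
        "gcloud", "sql", "export", "csv", instance, f"gs://{bucket}/{file_name}",
        f"--database={database}",
        f"--query={query}",  # one argv entry, so no extra shell quoting is needed
    ]
    if offload:
        # Runs the export on a temporary instance to reduce load on the source instance.
        cmd.append("--offload")
    return cmd


def grant_bucket_access_command(service_account: str, bucket: str) -> list[str]:
    """Assemble the gsutil command that grants objectAdmin on the bucket to a service account."""
    return ["gsutil", "iam", "ch", f"serviceAccount:{service_account}:objectAdmin", f"gs://{bucket}"]


def run(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command in shell form; execute it only when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical placeholder values; replace them with your own.
    run(grant_bucket_access_command("SERVICE_ACCOUNT_EMAIL", "my-bucket"))
    run(export_csv_command("my-instance", "my-bucket", "orders.csv", "shop", "SELECT * FROM orders"))
```

Passing the command as a list rather than a single shell string avoids quoting problems with queries that contain spaces or quotes.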

Step 2: Import CSV Data into a BigQuery Table Using the SQL Query Editor

- Open the BigQuery page in the Google Cloud console.

- Enter the following SQL statement in the query editor. OVERWRITE replaces any existing data in the table; use LOAD DATA INTO to append instead.

```sql
LOAD DATA OVERWRITE MyDataset.MyTable(variable1 INT64, variable2 STRING)
FROM FILES (
  format = 'CSV',
  uris = ['gs://bucket_name/path_to_file/file_name.csv']);
```

- Click the Run button.
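The column list in LOAD DATA must use BigQuery types rather than MySQL types. The minimal Python sketch below generates a statement of that shape from MySQL column definitions.

Its type mapping is a simplified assumption, not an official conversion table, so review it against your schema. For example:

- Wide DECIMAL columns may need BIGNUMERIC.
- BIGINT UNSIGNED values above 2^63-1 do not fit in INT64.

```python
from __future__ import annotations

# Simplified MySQL -> BigQuery type mapping (an assumption, not an official table).
MYSQL_TO_BIGQUERY = {
    "tinyint": "INT64", "smallint": "INT64", "mediumint": "INT64",
    "int": "INT64", "integer": "INT64", "bigint": "INT64",
    "decimal": "NUMERIC",  # wide DECIMAL columns may need BIGNUMERIC
    "float": "FLOAT64", "double": "FLOAT64",
    "char": "STRING", "varchar": "STRING", "text": "STRING",
    "date": "DATE", "datetime": "DATETIME", "timestamp": "TIMESTAMP",
}


def bigquery_type(mysql_type: str) -> str:
    """Map a MySQL column type such as 'varchar(255)' or 'int unsigned' to a BigQuery type."""
    words = mysql_type.lower().split("(")[0].split()  # drop length/precision and modifiers
    # Unknown types fall back to STRING.
    return MYSQL_TO_BIGQUERY.get(words[0] if words else "", "STRING")


def load_data_sql(table: str, columns: list[tuple[str, str]], uri: str) -> str:
    """Build a LOAD DATA OVERWRITE statement from (column_name, mysql_type) pairs."""
    column_list = ", ".join(f"{name} {bigquery_type(mysql_type)}" for name, mysql_type in columns)
    return (
        f"LOAD DATA OVERWRITE {table}({column_list})\n"
        "FROM FILES (\n"
        "  format = 'CSV',\n"
        f"  uris = ['{uri}']);"
    )


if __name__ == "__main__":
    columns = [("variable1", "int(11)"), ("variable2", "varchar(255)")]
    print(load_data_sql("MyDataset.MyTable", columns, "gs://bucket_name/path_to_file/file_name.csv"))
```

You can paste the printed statement into the BigQuery query editor.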

Using the above CSV Export/Import method, you can successfully migrate the data from GCP MySQL to BigQuery.

Limitations of GCP MySQL to BigQuery Migration Using CSV Export/Import Method

- Requires Tech Familiarity: The CSV Export/Import method requires familiarity with the cloud storage buckets, the gcloud CLI, and SQL commands for loading data into BigQuery. Inadequate familiarity with any of these tools can affect the migration process.

- Lack of Real-time Capabilities: Any changes to the data in MySQL databases hosted on GCP are not reflected in BigQuery until the next CSV export/import is performed. This delay can impact decision-making processes that depend on real-time insights.

- High Complexity: BigQuery expects CSV data to be UTF-8 encoded by default. If a CSV file uses another supported encoding, such as ISO-8859-1, you must specify that encoding explicitly during the import or convert the file to UTF-8 beforehand. This adds complexity to the migration process, as you need to verify each file's encoding to maintain compatibility with BigQuery.
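One way to handle the encoding issue is to re-encode the file to UTF-8 before loading it. The minimal Python sketch below operates on a local copy, so you would download the export, convert it, and upload the result back to your bucket. It assumes the source file is Latin-1 (ISO-8859-1); set `source_encoding` to whatever your file actually uses.

```python
import shutil
import tempfile
from pathlib import Path


def convert_to_utf8(src: Path, dst: Path, source_encoding: str = "latin-1") -> None:
    """Re-encode a text file (such as a CSV export) from source_encoding to UTF-8.

    newline="" on both sides leaves the line endings exactly as they are in the source.
    """
    with src.open("r", encoding=source_encoding, newline="") as fin, \
            dst.open("w", encoding="utf-8", newline="") as fout:
        # Streams in chunks, so large files are not read into memory at once.
        shutil.copyfileobj(fin, fout)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp, "export_latin1.csv")
        src.write_bytes("id,city\n1,Montréal\n".encode("latin-1"))
        dst = Path(tmp, "export_utf8.csv")
        convert_to_utf8(src, dst)
        print(dst.read_bytes())
```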

Use Cases of GCP MySQL to BigQuery Migration

- Enhanced Data Warehousing: BigQuery is a serverless, fully managed Software as a Service (SaaS) data warehouse. This allows you to concentrate on core business activities while Google Cloud handles infrastructure maintenance and platform development.

- Improve Business Performance: BigQuery lets you tackle complex analytical problems, apply machine learning to uncover emerging insights, and test new hypotheses. This gives you timely insights into your business performance, so you can refine processes for better outcomes.

Conclusion

Migrating from GCP MySQL to BigQuery offers organizations a strategic opportunity to enhance data management, gain valuable insights, and facilitate data-driven decision-making. In this article, you have learned how to transfer data from GCP MySQL to BigQuery using Hevo Data and the CSV Export/Import method.

Migrating with the CSV Export/Import method requires manual intervention and proficiency in the relevant GCP services. In contrast, Hevo Data’s pre-built connectors offer a streamlined approach that drastically reduces manual effort and requires minimal technical expertise.

With Hevo Data’s readily available connectors, you can empower your organization to make smarter decisions based on up-to-date data.

Want to take Hevo for a spin? Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our unbeatable pricing to choose the best plan for your organization.

Share your experience of GCP MySQL to BigQuery integration in the comments section below!

Frequently Asked Questions (FAQ)

Q. How can I schedule regular updates from Google Cloud MySQL to BigQuery, ensuring new data is added, recently edited data is updated, and deleted data is removed from BigQuery?

A. To keep data consistent without copying it, you can configure Cloud SQL for MySQL as an external data source in BigQuery, so that queries read the current data directly. This can lower costs because the data doesn't need to be duplicated in BigQuery. However, queries against external data sources are slower than queries against native BigQuery tables. To overcome this challenge, you can use Hevo Data’s pre-built connectors to automate your data pipeline based on your preferences.
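Any scheduled sync that handles inserts, updates, and deletes has to reconcile the source with the destination by primary key. The minimal Python sketch below is a generic illustration of that reconciliation, not Hevo Data's implementation. It compares two in-memory snapshots, which only suits small tables, and assumes a hypothetical `id` primary-key column. The resulting changes could then be applied in BigQuery, for example with a MERGE statement.

```python
from __future__ import annotations


def diff_by_key(source: list[dict], target: list[dict], key: str = "id") -> dict[str, list]:
    """Compare a fresh source snapshot with the rows currently in the destination.

    Returns rows to insert (new keys), rows to update (same key, changed values),
    and keys to delete (present in the destination but gone from the source).
    """
    src = {row[key]: row for row in source}
    dst = {row[key]: row for row in target}
    return {
        "insert": [row for k, row in src.items() if k not in dst],
        "update": [row for k, row in src.items() if k in dst and row != dst[k]],
        "delete": [k for k in dst if k not in src],
    }


if __name__ == "__main__":
    mysql_rows = [{"id": 1, "name": "alpha"}, {"id": 2, "name": "beta v2"}, {"id": 4, "name": "delta"}]
    bigquery_rows = [{"id": 1, "name": "alpha"}, {"id": 2, "name": "beta"}, {"id": 3, "name": "gamma"}]
    print(diff_by_key(mysql_rows, bigquery_rows))
```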

Sony holds a Master’s degree in Computer Science. With over two years of experience in content writing and three years of experience in teaching, she is passionate about creating informative and engaging technical content for a diverse audience. Sony has authored numerous articles covering a wide range of topics in the data science, machine learning, and AI domains. Her ultimate goal is to encourage data professionals with knowledge and insights through her technical content.