Dbt is a free and open-source tool that uses SQL to help you with data organization, transformation, and analysis. Meanwhile, BigQuery is a cloud-based data warehousing system that can handle large datasets and offers flexibility for data storage, analysis, and sharing.

When you connect dbt to BigQuery, you can benefit from the strengths of both tools to optimize your data modeling and analytics workflows. This guide will guide you through the process of connecting dbt to BigQuery with clear, step-by-step instructions, allowing you to create data models and perform SQL queries on your BigQuery data.

Whether you’re a data analyst, data engineer, or data scientist, this guide will provide the necessary information to connect dbt to BigQuery and help you enhance your data analysis capabilities.

What is dbt?

Data Build Tool (dbt) is a cutting-edge open-source data modeling and transformation tool. It enables engineers and data analysts to transform and structure data in a dependable and scalable manner. Dbt is used to build data pipelines for data warehousing and business intelligence applications.

Dbt aids in automating the process of creating data models. Users can create SQL queries and use code to automate repetitive activities. Additionally, it provides functions like testing and version control that guarantee the consistency and accuracy of the data. Snowflake, Redshift, BigQuery, and other databases are among the many that dbt can operate with.

What is Google BigQuery?

Google BigQuery is a Data Warehouse hosted on the Google Cloud Platform that helps enterprises with their analytics activities. This Software as a Service (SaaS) platform is serverless and has outstanding data management, access control, and Machine Learning features (Google BigQuery ML). Google BigQuery excels in analyzing enormous amounts of data and quickly meets your Big Data processing needs with capabilities like exabyte-scale storage and petabyte-scale SQL queries.

Google BigQuery’s columnar storage makes data searching more manageable and effective. On the other hand, the Colossus File System of BigQuery processes queries using the Dremel Query Engine via REST. The storage and processing engines rely on Google’s Jupiter Network to quickly transport data from one location to another.

How to Connect dbt to BigQuery?

This section will explain how to connect dbt to BigQuery. You will learn the following things:

- How to set up a new BigQuery instance.

- Access sample data from a public dataset.

- Connect dbt to BigQuery.

Prerequisites

Make sure you can access your Google account, new or existing, before starting this guide. To set up BigQuery with the Google Cloud Platform, you can use a personal or professional account (GCP).

Set-Up

Before starting the set-up, log in to your Google account.

- Step 1: Open the BigQuery Console.

- You will be prompted to create a Google Cloud Platform account if you don’t already have one.

- If you do have one (or more), it will probably log you into the one that is the oldest. Check that you are using the right email account by clicking your profile picture to the right.

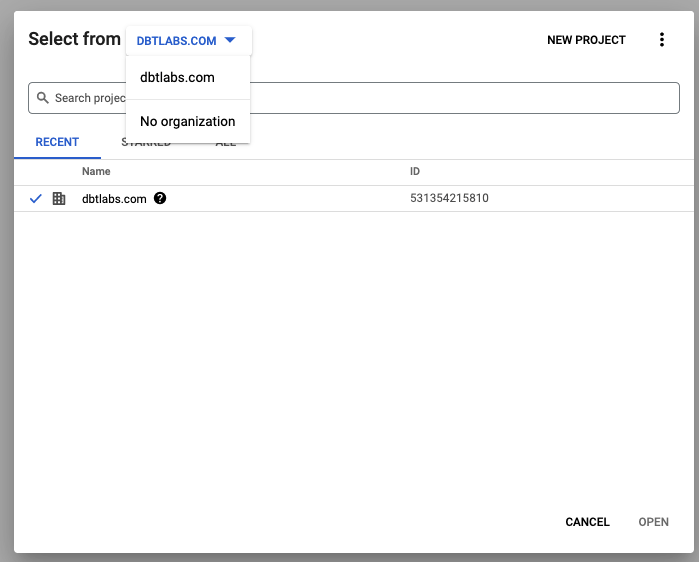

- Step 2: Create a new project:

- You will be immediately requested to establish a new project if you have just created a BigQuery account.

- You can choose the project drop-down in the header bar and start a new project from there if you already have an established organization.

Image Source

Image Source

- Step 3: Choose a NEW PROJECT. A project name should appear automatically. Change the name to “dbt Learn – Bigquery Setup” or something else that is more pertinent.

Image Source

Image Source

Load Data

We will demonstrate how to use select statements to gain access to public data sets that BigQuery supports and can be directly queried. We’ll also demonstrate how to use that data to populate your database objects.

- Step 1: Navigate to the BigQuery Console once more. Ensure that the header has your new project selected. If you cannot access your account or project, click on your profile image to the right and make sure you are using the right email address.

- Step 2: To ensure you can execute the below queries properly, copy and paste them into the Query Editor.

select * from `dbt-tutorial.jaffle_shop.customers`;

select * from `dbt-tutorial.jaffle_shop.orders`;

select * from `dbt-tutorial.stripe.payment`;

- Step 3: Verify that you have an output:

Image Source

Image Source

- Step 4: Set up Datasets. BigQuery datasets are comparable to standard database schemas.

- Locate your project. To view the options, click the three dots.

- Click Make a dataset.

- Input the Dataset ID as required. Choose a name that serves the intended purpose. In this example, we will generate one for the jaffle shop and one for the stripe later. This will be used like schema in fully qualified references to your database objects, i.e., database.schema.table.

- The remaining fields can be left with their default values:

- You can leave the data location field empty; if chosen, this will choose the GCP location where your data will be kept. The US multi-region is the current default location. This location will be used by all the tables in this dataset.

- Billing table expiration will be set automatically to 60 days, even though it is unchecked because billing has not been set up for this project. Hence GCP deprecates tables by default.

- Give Google control over encryption.

- Next, select CREATE DATASET.

- With the second dataset, stripe, repeat steps 1 to 4.

Connect to dbt Cloud

In this section, you will learn how to connect dbt to BigQuery:

Generate BigQuery Credentials

You must create a keyfile if you want dbt to connect to your warehouse. With most other data warehouses, this is comparable to utilizing a database username and password.

- Step 1: Go over to the BigQuery credential wizard. Ensure that the header has your new project chosen. Click on your profile image to the right and make sure you are using the right email address if you cannot access your account or project.

- Step 2: Choose Service account, then click + Generate Credentials.

- Step 3: In the Service account name area, enter dbt-user, then select Create and Proceed.

- Step 4: In the Role area, enter “BigQuery Admin” and click OK.

- Step 5: Then click Next.

- Step 6: Leave all fields in the “Give users access to this service account” section blank. Click Done.

- Step 7: Choose the service account.

- Step 8: Choose Keys.

- Step 9: Click Add Key and create a new key.

- Step 10: Click Create after selecting JSON as the key type.

- Step 11: The JSON file download should be required. Save it locally with a distinct filename and in an easy-to-remember location like dbt-user-creds.json.

Create dbt Cloud Account

Let’s begin this by setting up a dbt Cloud account:

- Step 1: Go to dbt Cloud.

- Step 2: Create a new dbt Cloud account and send yourself an email to confirm it if you don’t already have one.

- Step 3: You can start a new project from your existing dbt Cloud account if you already have one:

- Step 4: Projects can be accessed by clicking the gear symbol at the top right.

- Step 5: Press “+ New Project.”

- Step 6: The “Setup a New Project” page has been reached.

- Step 7: In the dbt Project Name column, enter “Analytics.” Now, you will have the option of renaming this project.

- Step 8: Then click Next.

Connect dbt to BigQuery

Let’s now configure the connection between BigQuery and dbt Cloud.

- Step 1: To set up your connection, click BigQuery.

- Step 2: Under the BigQuery settings, click Upload a Service Account JSON File.

- Step 3: The JSON file you downloaded from Create BigQuery Credentials should be selected. All required fields will be filled up by dbt Cloud.

- Step 4: From the top, select Test. This demonstrates that BigQuery can access your dbt Cloud account.

- Step 5: Click Continue if you see “Connection test Succeeded!” You might need to regenerate your BigQuery credentials if it fails.

Why Connect dbt to BigQuery?

There are many reasons why it is better to connect dbt to BigQuery:

- It is difficult to handle the dependencies between jobs when we construct multi-step data pipelines using BigQuery. Instead, dbt provides the ref() and source() macros to let data analysts refer to related tables. Automatic dependency analysis and job sequencing are features of DBT. We don’t have to manage dependencies manually. Data analysts can therefore create even complex, multi-step tasks using just BigQuery queries.

- We can view data lineage from the dependencies using the dbt web user interface. Data lineage metadata management is not necessary.

- Jinja, a contemporary and user-friendly Python templating language, is the base of dbt. We can create our own macros, then. Also, using dbt packages makes it simple to leverage outside libraries in our dbt projects.

Final Thoughts

In this article, you learned how to set up and connect dbt to BigQuery. In conclusion, connecting dbt to BigQuery can provide significant benefits for data analysts and data teams looking to improve their data modeling and analytics capabilities. With dbt, users can create repeatable and scalable data pipelines, easily collaborate on data models, and quickly iterate on analytics projects. Additionally, using BigQuery as the underlying data warehouse provides a highly scalable and cost-effective solution for storing and querying large volumes of data. Now you can go ahead and start transforming your data using dbt BigQuery.

For cases when you rarely need to replicate data, your engineering team can easily do it. Though, for frequent and massive volumes of data transfers from multiple sources, your engineering team would need to constantly monitor and fix any data leaks. Or you can simply hop onto a smooth ride with cloud-based ELT solutions like Hevo Data which automates the data integration process for you and runs your dbt projects to transform data present in your data warehouse. At this time, the dbt Core™ on Hevo is in BETA. Please reach out to Hevo Support or your account executive to enable it for your team.

Visit our Website to Explore Hevo

Offering 150+ plug-and-play integrations and saving countless hours of manual data cleaning & standardizing, Hevo Data also offers in-built pre-load data transformations that get it done in minutes via a simple drag-n-drop interface or your custom python scripts.

Want to take Hevo for a spin? Sign Up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Share your experience of learning about Data Build Tool(dbt)! Let us know in the comments section below!

Sharon is a data science enthusiast with a passion for data, software architecture, and writing technical content. She has experience writing articles on diverse topics related to data integration and infrastructure.