Most organizations today practice a data-driven culture, emphasizing the importance of evidence-based decisions. You can also utilize the data available about your organization to perform various analyses and make data-informed decisions, contributing towards sustainable business growth. However, to get the most out of your data, you should ensure your datasets are free of anomalies, missing values, duplicate values, inconsistencies, and inaccuracies. This helps you obtain results that are not skewed but are trustworthy and reliable.

Data quality monitoring (DQM) plays a critical role in safeguarding the integrity of these datasets. It is a set of processes designed to assess, measure, and manage the health of your business’s data. By continuously monitoring data quality, you can mitigate risks associated with poor-quality data, such as flawed analytics, erroneous choices, and reputational damage. This article will provide all the information you need on data quality monitoring, including key metrics, techniques, and challenges.

What Is Data Quality Monitoring?

Data quality monitoring is a systematic process of evaluating, maintaining, and enhancing the quality of data within your organization. It involves establishing data quality rules, implementing automated checks, and actively identifying and rectifying discrepancies, anomalies, or errors that may compromise its integrity.

Continuous data quality monitoring allows you to adhere to predefined standards for accuracy, completeness, consistency, timeliness, and validity. You can monitor quality metrics over time, analyze trends and patterns, and identify potential problems before they significantly impact your analysis. Read on to learn why data quality monitoring matters and which techniques it involves.

Why Does Data Quality Monitoring Matter?

Data quality monitoring is crucial because poor data can have significant negative consequences. Inaccurate or incomplete data leads to flawed analytics, misguided business decisions, declining customer confidence, and, ultimately, financial losses. According to Gartner’s research, organizations lose an average of $15 million per year due to poor data quality. This underscores the need for quality data if you want valuable data-driven insights.

By monitoring data quality, you can:

- Ensure Business Intelligence Credibility: Monitoring proactively helps you avoid the costly errors and wasted resources that come with inaccurate data while improving your operational efficiency. As a result, the business insights you produce are trustworthy and reliable.

- Foster Regulatory Compliance: Many industries have strict data governance regulations, and data quality monitoring can help you adhere to data privacy laws by maintaining data integrity and auditability.

- Improve Customer Experience: Clean and accurate data can help you develop personalized interactions, provide efficient service delivery, and reduce frustrations at customer touchpoints.

Tools and Techniques for a Robust DQM Framework

Building a robust Data Quality Monitoring (DQM) framework is crucial for ensuring your data is fit for purpose. This section will walk you through the four steps of crafting a strategic data quality monitoring plan.

1. Data Quality Dimensions

Building a solid foundation for data quality starts with identifying and defining the data quality dimensions relevant to your business goals. These quality dimensions are the attributes that will measure data quality and provide a benchmark for assessing how well the data meets your needs. You need to establish the criteria for your data quality analysis.

Data quality monitoring: Key metrics of the DQM strategy


These are the six key data quality dimensions you can consider:

- Accuracy: Reflects how closely the data matches the real-world values it represents.

- Completeness: Ensures all necessary data points are present and accessible.

- Consistency: Assesses whether the data is consistent across various sources.

- Validity: Confirms whether the data conforms to the predefined formats and ranges.

- Uniqueness: Ensures each data point represents a single, distinct entity.

- Timeliness: Addresses the relevance of data based on its update frequency.
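Several of these dimensions can be expressed as simple ratios. The following is a minimal sketch, assuming records are plain Python dictionaries; the field names (`id`, `email`, `updated_at`), the sample data, and the 30-day freshness window are all hypothetical choices for illustration, not a prescribed standard.

```python
from datetime import datetime, timedelta

# Hypothetical sample records; the field names are illustrative assumptions.
records = [
    {"id": 1, "email": "ana@example.com", "updated_at": datetime(2024, 5, 1)},
    {"id": 2, "email": None, "updated_at": datetime(2024, 5, 2)},
    {"id": 2, "email": "bo@example.com", "updated_at": datetime(2024, 1, 15)},
    {"id": 4, "email": "cy@example.com", "updated_at": datetime(2024, 5, 3)},
]


def completeness(rows, field):
    """Share of rows where the field is present (not None or empty)."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def uniqueness(rows, key):
    """Ratio of distinct key values to the total number of rows."""
    return len({r[key] for r in rows}) / len(rows)


def timeliness(rows, field, as_of, max_age):
    """Share of rows updated within max_age of the as_of date."""
    fresh = sum(1 for r in rows if as_of - r[field] <= max_age)
    return fresh / len(rows)


scores = {
    "completeness(email)": completeness(records, "email"),
    "uniqueness(id)": uniqueness(records, "id"),
    "timeliness(updated_at)": timeliness(
        records, "updated_at", datetime(2024, 5, 4), timedelta(days=30)
    ),
}
print(scores)
```

Each score falls between 0 and 1, so you can compare it against a target you set for that dimension. Accuracy and consistency usually need a trusted reference to compare against, which is why they are left out of this sketch.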

2. Data Quality Assessment

You must assess the current state of your data by employing various tools and techniques. You can identify the existing issues with your data and address them. Three key techniques that come into play are:

Data Profiling

This technique involves analyzing your data to understand its structure, content, and characteristics. Data profiling helps identify potential data quality issues like missing values, inconsistencies in formatting, and potential outliers.
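As a rough illustration, a column profile can be built with nothing more than counting. This is a minimal sketch under the assumption that rows are dictionaries; the `profile` helper and the `country` and `age` columns are hypothetical.

```python
from collections import Counter

# Hypothetical rows; the column names are illustrative assumptions.
rows = [
    {"country": "US", "age": 34},
    {"country": "us", "age": 29},
    {"country": None, "age": 41},
    {"country": "DE", "age": 410},
]


def profile(rows, column):
    """Summarize one column: null count, distinct values, value types, numeric range."""
    values = [r.get(column) for r in rows]
    present = [v for v in values if v is not None]
    summary = {
        "nulls": len(values) - len(present),
        "distinct": len(set(present)),
        "types": dict(Counter(type(v).__name__ for v in present)),
    }
    numeric = [v for v in present if isinstance(v, (int, float))]
    if numeric:
        summary["min"], summary["max"] = min(numeric), max(numeric)
    return summary


country_profile = profile(rows, "country")
age_profile = profile(rows, "age")
print(country_profile)
print(age_profile)
```

Here the profile surfaces one missing country, a casing inconsistency ("US" and "us" count as two distinct values), and a maximum age of 410 that looks like an outlier worth investigating.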

Data Validation

Validation goes a step further by checking your data against predefined rules and business logic. It ensures data adheres to specific formats, ranges, and relationships between different data points. For example, age cannot be negative, and email addresses must follow a standard pattern.
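The two example rules above could be sketched as follows. The rule names and the `validate` helper are hypothetical, and the email pattern is deliberately simplified for illustration; production-grade email validation is more involved.

```python
import re

# A deliberately simple pattern (assumption): something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical rule set: each rule name maps to a predicate over one record.
RULES = {
    "age_non_negative": lambda r: isinstance(r.get("age"), int) and r["age"] >= 0,
    "email_format": lambda r: isinstance(r.get("email"), str)
    and bool(EMAIL_PATTERN.match(r["email"])),
}


def validate(record):
    """Return the names of the rules that the record violates."""
    return [name for name, check in RULES.items() if not check(record)]


print(validate({"age": 30, "email": "ana@example.com"}))
print(validate({"age": -5, "email": "not-an-email"}))
```

Keeping rules in a dictionary makes it easy to add new checks without changing the validation loop, and the returned rule names tell you exactly which expectation a record failed.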

Data Cleansing

Once you identify the data quality issues, you can use cleansing techniques to rectify them. This might involve correcting errors, removing duplicates, imputing missing values, and standardizing inconsistent formatting. Data cleansing ensures your data is accurate, complete, and usable for analysis.
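A minimal cleansing sketch might look like the one below. It assumes duplicates are identified by a hypothetical `id` field, that missing ages can reasonably be imputed with the median, and that names should be trimmed and title-cased; each of these is an illustrative choice that depends on your data.

```python
from statistics import median

# Hypothetical raw rows containing a duplicate, a missing value, and messy formatting.
raw_rows = [
    {"id": 1, "name": "  Ana ", "age": 34},
    {"id": 2, "name": "bo", "age": None},
    {"id": 1, "name": "  Ana ", "age": 34},
    {"id": 3, "name": "CY", "age": 40},
]


def cleanse(rows):
    """Deduplicate by id, impute missing ages with the median, and normalize names."""
    # 1. Remove duplicates, keeping the first occurrence of each id.
    seen, unique = set(), []
    for r in rows:
        if r["id"] not in seen:
            seen.add(r["id"])
            unique.append(dict(r))  # copy so the input rows are not mutated

    # 2. Impute missing ages with the median of the known ages (if any exist).
    known = [r["age"] for r in unique if r["age"] is not None]
    fill = median(known) if known else None

    for r in unique:
        if r["age"] is None:
            r["age"] = fill
        # 3. Standardize name formatting.
        r["name"] = r["name"].strip().title()
    return unique


clean_rows = cleanse(raw_rows)
print(clean_rows)
```

Median imputation is only one option; depending on the field, dropping the row or flagging it for manual review may be the safer choice.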

3. Data Quality Monitoring

After the initial assessment, monitoring the data quality over time is crucial to maintaining its integrity. This involves tracking and reporting any deviations within the data quality dimensions and pinpointing areas where potential issues might exist. Analytical dashboards are the most common tools you can use to monitor data quality metrics. They provide a centralized visual representation of the key data quality metrics, such as:

- Error Ratio: This metric calculates the percentage of errors present in your data compared to the total number of data points. A higher error ratio indicates significant inconsistencies or inaccuracies within your datasets.

- Data Transformation Errors: This metric focuses on the issues arising during the transformation process. It tracks how often errors occur, along with latency and data volume, when converting data from one format to another. These errors can lead to the loss of valuable information.

- Data Time-to-Value: This metric measures the time taken to transform raw data into a format usable for analysis and decision-making. A longer time-to-value suggests inefficiencies in your data processing pipelines, which can delay insight generation and hinder your ability to react quickly to evolving business needs.

You can use the metrics mentioned above and many others to better understand your data and make appropriate interventions to maintain its quality.
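To make two of these metrics concrete, here is a minimal sketch that computes an error ratio and a time-to-value, then flags any metric that exceeds a threshold. The function names, the sample numbers, and the thresholds (1% errors, 120 minutes) are hypothetical values you would replace with your own targets.

```python
from datetime import datetime


def error_ratio(total_records, failed_records):
    """Percentage of records that failed at least one quality check."""
    if total_records == 0:
        return 0.0
    return 100.0 * failed_records / total_records


def time_to_value(ingested_at, ready_at):
    """Elapsed time between raw data arriving and it being ready for analysis."""
    return ready_at - ingested_at


def breaches(metrics, thresholds):
    """Names of the metrics whose value exceeds the configured threshold."""
    return [name for name, value in metrics.items() if value > thresholds[name]]


ttv = time_to_value(datetime(2024, 5, 1, 2, 0), datetime(2024, 5, 1, 3, 30))
metrics = {
    "error_ratio_pct": error_ratio(total_records=2000, failed_records=50),
    "time_to_value_min": ttv.total_seconds() / 60,
}
thresholds = {"error_ratio_pct": 1.0, "time_to_value_min": 120.0}  # assumed targets

alerts = breaches(metrics, thresholds)
print(metrics, alerts)
```

Recording these values on every pipeline run gives a dashboard a time series to plot, which is what lets you spot a gradual drift rather than only a single bad load.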

4. Data Quality Improvement

Data quality improvement focuses on actively addressing the root causes of data quality issues and implementing solutions to prevent them from recurring. To achieve this, you can leverage the data quality auditing technique. This technique allows you to regularly schedule audits and gain deeper insights into your data’s quality processes and controls. Audits enable you to evaluate cleansing routines, data validation rules, and overall data governance policies. They reveal areas where your DQM framework might be lacking, allowing for targeted improvement efforts.

Challenges in Data Quality Monitoring

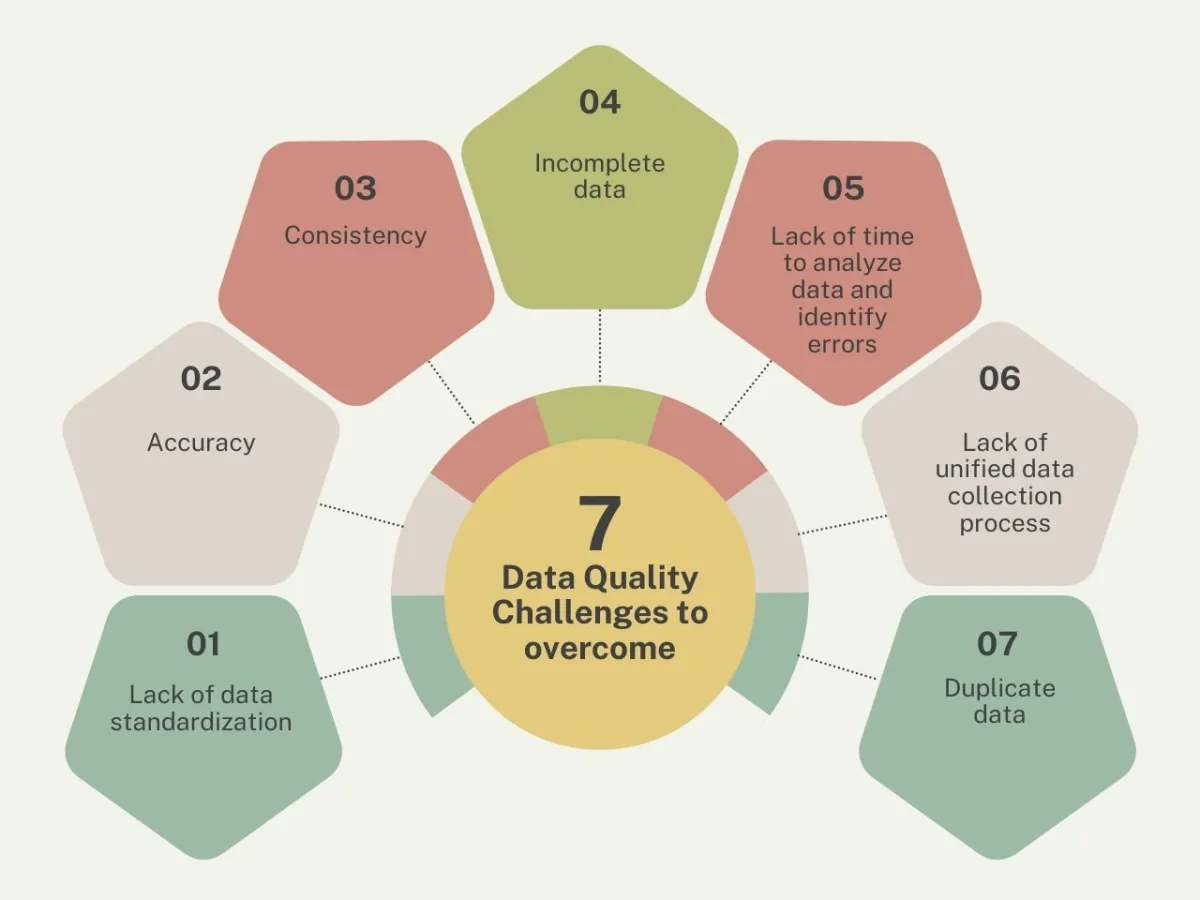

Ensuring data quality is crucial for your business to make informed decisions. However, maintaining consistent and accurate data comes with its own set of hurdles. Here are some of the challenges you might encounter with data quality monitoring:

Data quality monitoring: Data quality challenges to overcome


- Inconsistent Data Across Systems: Many organizations store the same data across various locations for different use cases. However, having inconsistent data at these locations due to errors or outdated information can lead to inaccurate analytics and business decisions. This emphasizes the need for meticulous examination and data reconciliation.

- Data Accuracy Issues: Data accuracy is a multifaceted challenge. Erroneous data input, integration and transformation complexities, and data governance and management gaps can all contribute to this issue. Furthermore, technological limitations, complex data architectures, and intricate data pipeline workflows can complicate your efforts to ensure data integrity and quality.

- Data Volume and Scale Issues: The sheer volume of data translates to a larger pool of data points to monitor, analyze, and troubleshoot for quality issues. It can also mean having high data cardinality, which isn’t necessarily wrong but can lead to structural inconsistencies, requiring adjustments to your monitoring techniques. Additionally, data quality can suffer without efficient storage and computing solutions for your high-volume, high-velocity data.
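The reconciliation mentioned under the first challenge can be sketched as a keyed comparison between two copies of the same data. This is a simplified illustration, assuming both systems share a common key; the `reconcile` helper, the `crm` and `billing` datasets, and their fields are hypothetical.

```python
def reconcile(source, target, key, fields):
    """Compare two copies of the same dataset by key and report the differences."""
    src = {r[key]: r for r in source}
    tgt = {r[key]: r for r in target}

    mismatched = {}
    for k in sorted(src.keys() & tgt.keys()):
        # List the fields whose values differ between the two systems.
        diffs = [f for f in fields if src[k].get(f) != tgt[k].get(f)]
        if diffs:
            mismatched[k] = diffs

    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "missing_in_source": sorted(tgt.keys() - src.keys()),
        "mismatched": mismatched,
    }


# Hypothetical copies of customer data held in two systems.
crm = [
    {"id": 1, "email": "ana@example.com", "plan": "pro"},
    {"id": 2, "email": "bo@example.com", "plan": "free"},
    {"id": 3, "email": "cy@example.com", "plan": "pro"},
]
billing = [
    {"id": 1, "email": "ana@example.com", "plan": "free"},
    {"id": 2, "email": "bo@example.com", "plan": "free"},
    {"id": 4, "email": "di@example.com", "plan": "pro"},
]

report = reconcile(crm, billing, key="id", fields=["email", "plan"])
print(report)
```

At high data volumes, holding both datasets in memory like this stops being practical, and you would more likely compare row counts or per-partition checksums first and drill into records only where those disagree.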

Build Clean Data Pipelines with Hevo

Even robust data pipelines are susceptible to quality issues from data sources or internal transformations, leading to a domino effect on downstream analytics and reporting. This is where you can leverage Hevo and simplify the quality monitoring process.

Hevo is a no-code, real-time, and cost-effective data pipeline platform. This ELT data integration platform automates data pipelines, ensuring they are flexible to your needs. It has over 150 pre-built connectors that eliminate the errors that come from manually coding connection setup. Three main features you can benefit from on this platform are:

- Automated Schema Mapping: You can ensure consistent data format and structure upon ingestion. Hevo also keeps your destination repository in sync with the latest information at the source using its Change Data Capture (CDC) feature, which helps minimize latency.

- Data Transformation: Hevo provides preload transformations and removes potential data quality issues like missing and duplicate data. It also provides user-friendly drag-and-drop and Python-based transformations before loading your datasets to the target destination.

- Incremental Data Load: Hevo allows you to transfer only the modified data in real time, optimizing bandwidth utilization at both the source and destination.


Using Hevo’s error-handling mechanisms, you can streamline the troubleshooting process, monitor your data pipelines, and identify problems before they impact your data flow. The platform notifies you with alerts, helping you respond quickly. You can do all this and more, even with limited technical experience, as Hevo provides an intuitive and user-friendly interface.

If you already have an AWS account for your data workflow and various data sources to manage, you can leverage Hevo to achieve seamless data integration. It is available on AWS Marketplace, with public and pay-as-you-go offers, and it streamlines your AWS data quality monitoring process.

Wrapping Up

This article provides an overview of data quality monitoring, why it matters, and what techniques you can implement in your framework. Additionally, it outlines the common challenges of monitoring data quality and introduces Hevo as a solution to aid you in the process. Data quality monitoring is an ongoing process that helps ensure the reliability and integrity of your data. By implementing a comprehensive data quality monitoring framework, you can protect yourself from the pitfalls of poor data and utilize your data assets to achieve sustainable business growth.

Want to take Hevo for a spin? Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our pricing to choose the best plan for your organization.

Share your experience with Data Quality Monitoring in the comments section below!

FAQs

Q. How does data quality impact your organization?

Here are some other key aspects that heavily depend on the quality of your data:

- Optimize Resource Allocation: Data quality is critical to understanding which resources drive the most value and to making informed decisions about them. Clean, reliable data allows you to identify areas of high demand, predict future needs, and allocate resources efficiently to maximize your ROI.

- Innovation and Growth: High-quality data helps you discover subtle patterns and trends that can lead to new product ideas. It also enables you to design and conduct controlled experiments to test and validate their effectiveness. Clean data sets the baseline metrics for comparison, ensuring accurate and actionable results.

- Supply Chain Management: Effective supply chain management relies heavily on data quality. Clean data allows you to forecast demand accurately, which is crucial for optimizing inventory levels and avoiding stockouts or overstocking. It also enables you to track shipments efficiently and optimize your delivery routes.

Riya is a technical content writer who enjoys writing informative blogs and insightful guides on data engineering.

An avid reader, she is committed to staying abreast of the latest developments in the data analytics domain.