Generating insights from in-house data has become a critical step for any business. Integrating data from a database into a data warehouse helps companies identify the factors that influence their operations and understand patterns that can boost business performance.

Azure PostgreSQL is one of the most widely used PostgreSQL databases in the Azure marketplace. Integrating this data into a data warehouse like Amazon Redshift allows companies to have a better analytical environment for understanding the data. This article will discuss two main methods of integrating data from Azure Postgres to Redshift.

Why Migrate Azure Postgres to Redshift?

Moving data from Azure Postgres to Redshift can be a beneficial step for businesses. It can enable you to query data in near real-time and build low-latency applications for fraud detection, live dashboard applications, and more.

Azure Postgres to Redshift integration allows you to run SQL queries in the AWS environment to build, train, and deploy machine learning models. This integration allows you to share your data securely across cloud platforms and third-party applications.

An Overview of Azure PostgreSQL

Azure PostgreSQL is a fully managed PostgreSQL database, enabling you to concentrate on building applications rather than managing the databases. It provides high availability with a service level agreement (SLA) of up to 99.99% uptime.

The platform provides AI-powered solutions that can optimize your performance. Azure PostgreSQL’s integration feature with Azure Kubernetes Service and Azure App Service can give you an edge in deploying your application. You can get a free trial, after which you can opt for pay-as-you-go services that will help you optimize costs according to your requirements.

An Overview of Amazon Redshift

Amazon Redshift is a cloud data warehousing service that enables companies to make cost-effective, data-driven decisions. Its massively parallel processing (MPP) architecture improves performance, scaling, and availability.

Redshift allows you to run SQL queries, build visualizations, and perform near real-time analytics on the data to generate valuable insights. For more information on the cost of using Redshift, refer to Redshift Pricing.

Methods to Load Azure Postgres to Redshift

This section covers two prominent methods for loading Azure Postgres data into Redshift.

Method 1: Load Data from Azure Postgres to Redshift Using Hevo

Hevo Data is a cost-effective, no-code, automated ELT data pipeline platform that enables you to create adaptable data pipelines for real-time data integration. It provides you with 150+ data source connectors from which you can obtain data. Hevo Data’s highly interactive user interface makes integrating data from various sources accessible without technical expertise.

Here are some of the critical features provided by Hevo:

- Data Transformation: Hevo provides data transformation features that enable you to clean and transform your data with simple drag-and-drop and Python-based methods.

- Incremental Data Load: It enables you to transfer modified data in real-time, ensuring efficient bandwidth utilization at the source and destination.

- Automated Schema Mappings: Hevo performs the schema management task by automatically detecting the incoming data format and replicating it to the destination schema. It lets you choose between Full & Incremental Mappings according to your data replication requirements.
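The idea behind incremental loading can be illustrated with a minimal Python sketch. This is not Hevo's implementation; the `updated_at` column, the row layout, and the `select_changed_rows` helper are all assumptions made for illustration. It keeps a watermark (the latest modification time already synced) and picks up only rows changed after it.

```python
from datetime import datetime


def select_changed_rows(rows, last_synced_at):
    """Return rows modified after the watermark, plus the new watermark.

    Hypothetical helper: assumes every row carries an `updated_at` timestamp.
    """
    changed = [row for row in rows if row["updated_at"] > last_synced_at]
    # If nothing changed, the watermark stays where it was.
    new_watermark = max(
        (row["updated_at"] for row in changed), default=last_synced_at
    )
    return changed, new_watermark


# Illustrative source rows (made-up data).
rows = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "updated_at": datetime(2024, 1, 9)},
]

# First sync after 3 January picks up rows 2 and 3.
changed, watermark = select_changed_rows(rows, datetime(2024, 1, 3))

# A second sync with the new watermark finds nothing left to transfer.
changed_again, watermark_again = select_changed_rows(rows, watermark)
```

Transferring only the changed rows is what keeps bandwidth use low compared with reloading the full table on every run.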

Step 1: Setting up Azure Postgres as Source

Following the steps in this section, you can ingest data from Azure Postgres using the Hevo pipeline. But before getting into the steps, you must ensure the prerequisites are satisfied.

Prerequisites

Configure Azure Postgres as the Source

After satisfying the prerequisites, you can follow the steps below:

- In the Navigation Bar, select PIPELINES and click + CREATE on the Pipelines List View.

- Select Azure PostgreSQL from the Select Source Type page.

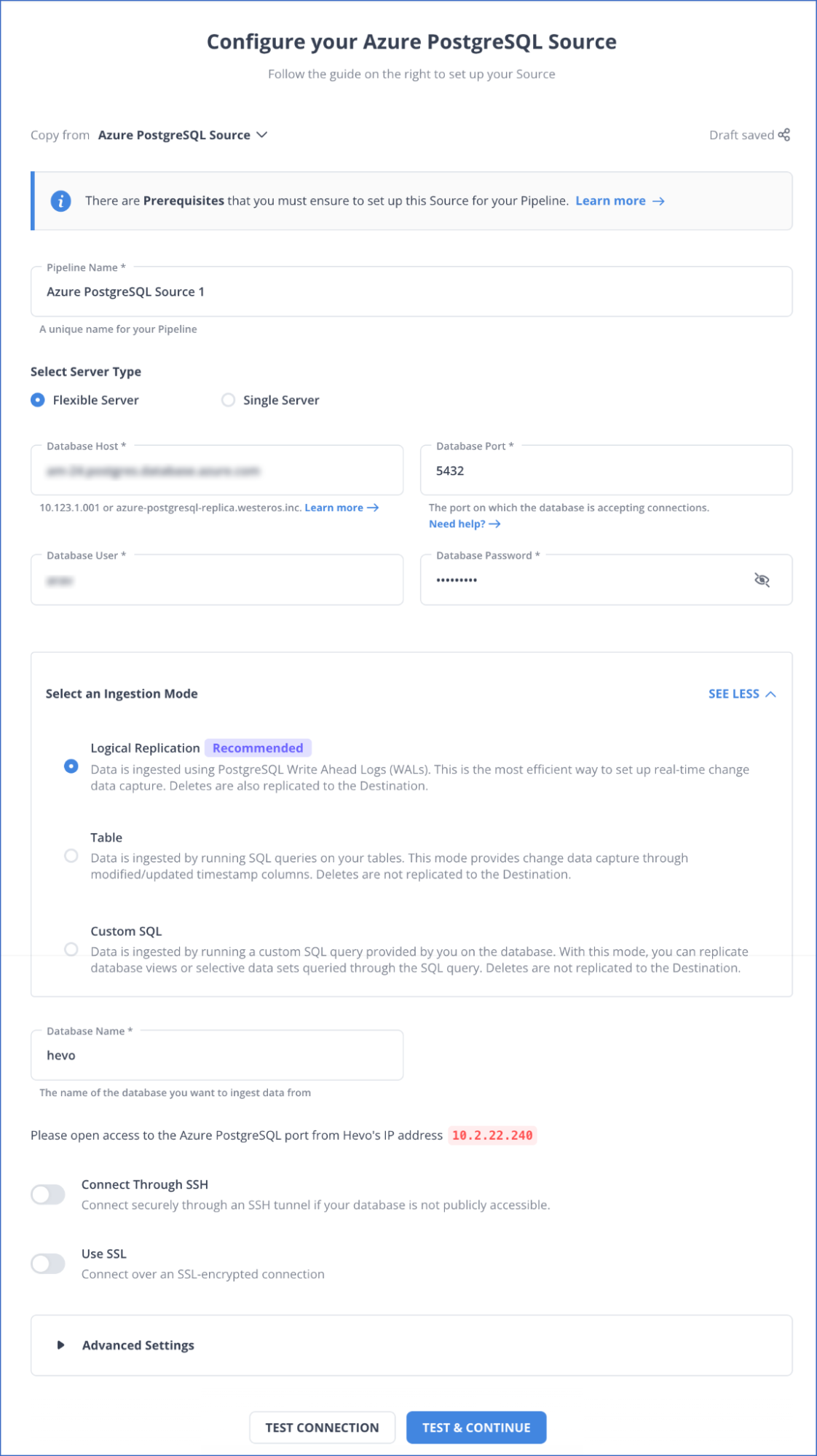

- You must specify all the necessary fields on the Configure your Azure PostgreSQL Source page.

Azure Postgres to Redshift: Configure Azure PostgreSQL Source page

- Select TEST CONNECTION and click on TEST & CONTINUE. After these steps, you must specify Object and Query Mode Settings for the chosen data ingestion mode.

For more information about the steps involved, refer to the Hevo Documentation on Azure PostgreSQL.

Step 2: Configuring Redshift as Destination

This section involves configuring Redshift as your destination. Before starting, ensure that all the prerequisites are satisfied.

Prerequisites

Set up Amazon Redshift as the Destination

After satisfying the prerequisite conditions, follow the steps given below:

- Select DESTINATIONS on the Navigation Bar and click + CREATE in the Destinations List View.

- Select Amazon Redshift on the Add Destination page.

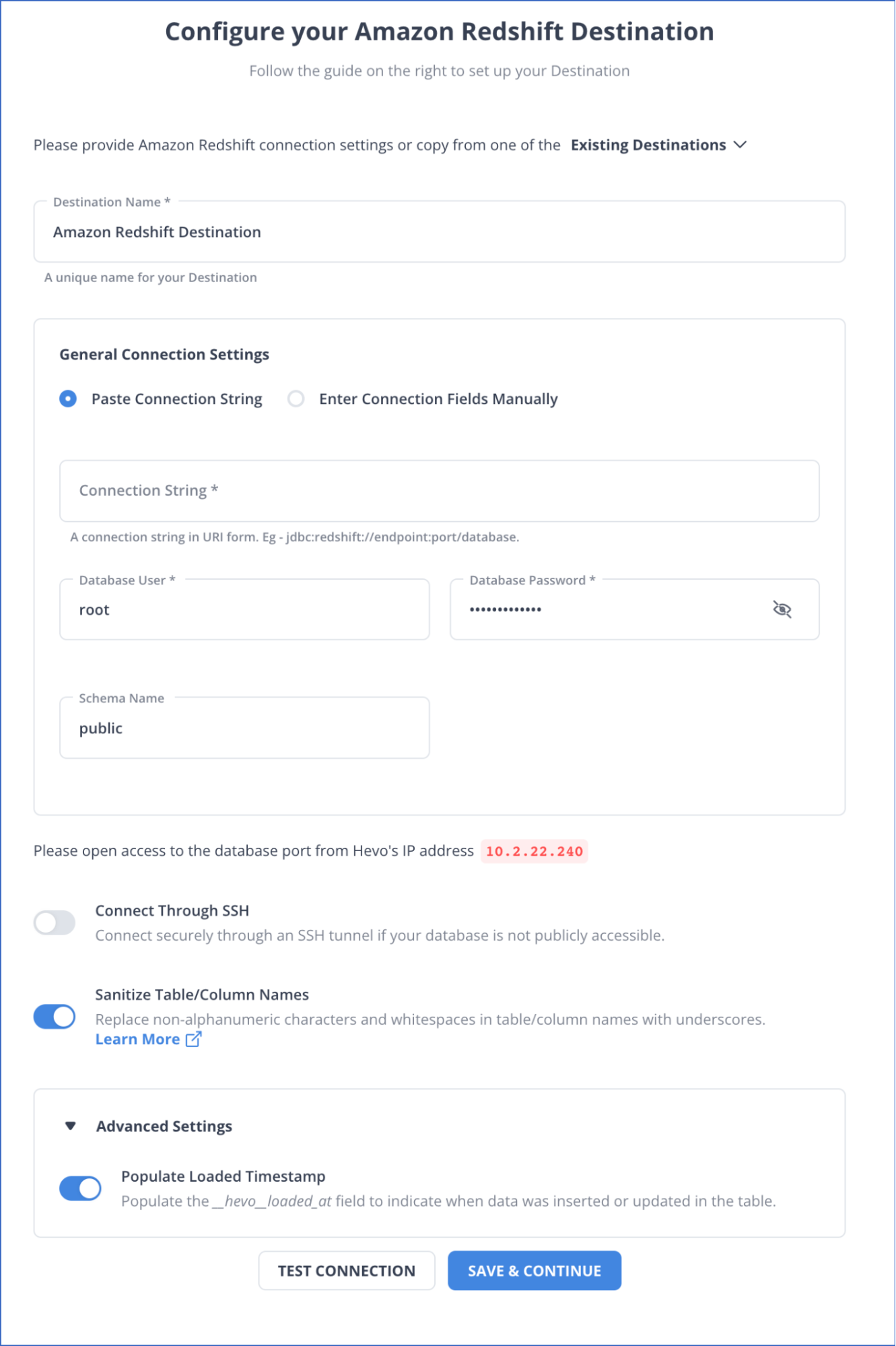

- Specify the mandatory fields on the Configure your Amazon Redshift Destination page.

Azure Postgres to Redshift: Configure Amazon Redshift Destination

- Select TEST CONNECTION and click on SAVE & CONTINUE.

To learn more about the steps involved, refer to the Hevo documentation on Amazon Redshift.

Method 2: Sync Azure Postgres to Redshift Using pg_dump and Query Editor v2

This method explains how to load Azure Postgres data into a Redshift table using pg_dump and Query Editor v2. Follow these steps:

Step 1: Export Data from Azure for PostgreSQL to Your Local Machine

In this step, you can use pg_dump to export data from Azure Postgres to your local system. Before getting started, you must satisfy the given prerequisites.

Prerequisites

Load Data from Azure PostgreSQL to Your Local Machine

After satisfying all the prerequisites, follow these steps:

After performing the steps, you can check the error.log file to confirm that the export completed without errors. You can optimize the data transfer process by following the guide on best practices for pg_dump and pg_restore.
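As a rough illustration of the export, the Python sketch below assembles a pg_dump command and sends its error output to error.log. The server name, user, database, table, and output file are placeholders, and the helper names `build_pg_dump_command` and `run_export` are hypothetical. It assumes pg_dump is installed locally and that the password is supplied through the `PGPASSWORD` environment variable or a `.pgpass` file.

```python
import os
import subprocess


def build_pg_dump_command(host, user, dbname, table, out_file):
    """Build a pg_dump argument list that exports one table's data as plain SQL."""
    return [
        "pg_dump",
        "--host", host,
        "--port", "5432",
        "--username", user,
        "--dbname", dbname,
        "--table", table,
        "--data-only",          # skip schema definitions, export rows only
        "--format", "plain",    # plain-text SQL script
        "--file", out_file,
    ]


def run_export(command, log_path="error.log"):
    """Run pg_dump, writing stderr to a log file. Returns the exit code."""
    # Assumption: the Azure server enforces SSL, so request it explicitly.
    env = dict(os.environ, PGSSLMODE="require")
    with open(log_path, "w") as log:
        return subprocess.run(command, env=env, stderr=log).returncode


# Placeholder values; replace them with your own server details.
cmd = build_pg_dump_command(
    host="<server-name>.postgres.database.azure.com",
    user="<admin-user>",
    dbname="<database-name>",
    table="orders",
    out_file="orders.sql",
)
# exit_code = run_export(cmd)  # uncomment once the placeholders are filled in
```

A non-zero exit code or a non-empty error.log indicates that the export needs attention before moving on to the load step.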

To learn more about the steps involved in this method, follow the instructions for migrating your PostgreSQL database.

Step 2: Load Data from Your Local Machine to Amazon Redshift

You can follow the steps in this section to load data from your local machine to Amazon Redshift. Before that, make sure your query editor v2 administrator has configured a common S3 bucket for the account.

You also need specific IAM permissions to perform load operations. A policy like the following grants them; replace the placeholders with your own values:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:GetBucketLocation"
                ],
                "Resource": [
                    "arn:aws:s3:::<staging-bucket-name>"
                ]
            },
            {
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObject"
                ],
                "Resource": [
                    "arn:aws:s3:::<staging-bucket-name>[/<optional-prefix>]/${aws:userid}/*"
                ]
            }
        ]
    }
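If you manage several staging buckets, filling in the placeholders by hand can be error-prone. The following Python sketch generates the same policy document from a bucket name and an optional prefix. The function name `build_user_policy` and the example values are hypothetical; the actions and resource patterns are copied from the policy above.

```python
import json


def build_user_policy(bucket, prefix=""):
    """Return the load-permissions policy as a dict for the given bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    # Append the optional prefix only when one is supplied.
    scoped_arn = f"{bucket_arn}/{prefix}" if prefix else bucket_arn
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": [bucket_arn],
            },
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
                # ${aws:userid} is an IAM policy variable, kept as literal text.
                "Resource": [scoped_arn + "/${aws:userid}/*"],
            },
        ],
    }


# Example values are placeholders, not real resources.
policy = build_user_policy("my-staging-bucket", prefix="qev2")
policy_json = json.dumps(policy, indent=4)
```

The resulting `policy_json` string can be pasted into the IAM console or passed to whatever tooling you use to attach policies.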

You can also enforce data separation, so that users cannot access other users' data, by applying an S3 bucket policy like the following and replacing the placeholders:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "userIdPolicy",
                "Effect": "Deny",
                "Principal": "*",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObject"
                ],
                "NotResource": [
                    "arn:aws:s3:::<staging-bucket-name>[/<optional-prefix>]/${aws:userid}/*"
                ]
            }
        ]
    }
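To see what this Deny statement achieves, the small Python sketch below mimics its effect: an object key is reachable only when it sits inside the requesting user's own folder. This is a simplification for illustration, not how IAM evaluates policies, and the function name and user IDs are made up.

```python
def is_key_in_user_folder(key, userid, prefix=""):
    """Return True when the object key lies under <prefix>/<userid>/.

    Mirrors the resource pattern <prefix>/${aws:userid}/* from the policy:
    anything outside that folder falls under NotResource and is denied.
    """
    folder = f"{prefix}/{userid}/" if prefix else f"{userid}/"
    return key.startswith(folder)


# Hypothetical user IDs and keys.
own_file = is_key_in_user_folder(
    "qev2/AIDAEXAMPLEUSERID/orders.csv", "AIDAEXAMPLEUSERID", prefix="qev2"
)  # True: the key is inside the user's own folder

other_file = is_key_in_user_folder(
    "qev2/AIDAOTHERUSERID/orders.csv", "AIDAEXAMPLEUSERID", prefix="qev2"
)  # False: the key belongs to another user's folder
```

Because the policy uses Deny with NotResource, every put, get, or delete outside the caller's own folder is rejected regardless of any Allow statements elsewhere.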

Once the permissions are in place, follow the steps below to move data from a local file to Amazon Redshift:

- Connect to the target database in the tree-view panel of query editor v2.

- Select Load data, and choose Load from local file for the Data source.

- Choose Browse and select the file from the local environment.

- Select the File format.

- Choose Next, and select Load new table.

- Select the Target table location, and choose Create table.

- Select Load data to start the data loading process.
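If the file already sits in an S3 bucket, a scripted alternative to the wizard is a Redshift COPY statement. The Python sketch below only assembles the SQL text; the table name, S3 URI, IAM role ARN, and the helper `build_copy_statement` are placeholders and assumptions, and the file is assumed to be a CSV with a header row. The helper does no escaping, so use it with trusted values only.

```python
def build_copy_statement(table, s3_uri, iam_role_arn, ignore_header=True):
    """Assemble a Redshift COPY statement for a CSV file stored in S3."""
    parts = [
        f"COPY {table}",
        f"FROM '{s3_uri}'",
        f"IAM_ROLE '{iam_role_arn}'",
        "FORMAT AS CSV",
    ]
    if ignore_header:
        # Skip the first line when the CSV carries column names.
        parts.append("IGNOREHEADER 1")
    return "\n".join(parts) + ";"


# Placeholder values; replace them with your own table, bucket, and role.
copy_sql = build_copy_statement(
    table="public.orders",
    s3_uri="s3://<staging-bucket-name>/orders.csv",
    iam_role_arn="arn:aws:iam::<account-id>:role/<redshift-role>",
)
```

You can paste the generated statement into a query editor v2 tab and run it against the target database.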

For detailed steps, refer to loading data into a database.

Limitations of Using pg_dump and Query Editor v2

This method has some limitations that you must consider before using it to integrate Azure Postgres with Redshift.

- Lack of Automation: This method lacks automation as it requires you to load data from source to destination manually. The destination will also not reflect any changes made to the dataset at the source. This method will consume valuable time as you might need to keep updating the data constantly.

- Prior Technical Knowledge: This method requires technical knowledge, as the steps involve writing code and manually transferring data from source to destination. You might also need to review the documentation to correct any mistakes while performing the steps.

Use Cases of Integrating Azure Postgres to Redshift

- Connecting Azure Postgres to Redshift enables you to optimize business intelligence through insights-driven solutions and reports. You can quickly integrate Redshift with BI tools like Amazon QuickSight and create captivating analysis dashboards.

- Azure Postgres to Redshift integration enables you to effectively ingest and query hundreds of megabytes of data per second.

- With data in your Redshift instance, you can efficiently train different machine learning models on it and deploy them according to your needs.

Conclusion

This article highlights the two most prominent methods for integrating data from Azure Postgres to Redshift. Although both methods are efficient and can effectively enable data movement, the second method has certain limitations.

Using Hevo to integrate data from Azure Postgres to Redshift can easily overcome these limitations. It also provides an easy-to-use user interface, so you do not need to worry about technical complexities.

Want to take Hevo for a spin? Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also check out our unbeatable pricing to choose the best plan for your organization.

Share your experience of Azure Postgres to Redshift integration in the comments section below!

Frequently Asked Questions (FAQs)

Q. What are the considerations before choosing between Amazon Redshift, Snowflake, or Azure SQL Database?

When choosing between Amazon Redshift, Snowflake, and Azure SQL Database, consider your specific use case:

- Amazon Redshift is part of the AWS environment, making integration with other AWS applications easier. However, you must account for how it performs under heavy query concurrency.

- Conversely, Snowflake is suitable for AWS users less dependent on other AWS services. It is ideal for scenarios with high-concurrency query patterns.

- You can opt for Azure SQL Database if your applications depend heavily on the Microsoft stack and Azure services.

Suraj is a technical content writer specializing in AI and ML technologies, who enjoys creating machine learning models and writing about them.