Data is a powerful tool for organizational success today. When used effectively, it provides valuable insights into everyday operations to maximize business value. However, businesses may face data storage and processing challenges in a data-rich world.

With Azure Postgres, you can store and process unstructured and structured data, but it lacks real-time analytics and data sharing. This is where a platform like Snowflake comes into play.

When migrating to Snowflake, your organization can extract insights from operational data in real-time for smart decision-making. Snowflake’s data-sharing feature allows smooth collaboration with your organization’s departments, teams, and clients.

Let’s look into the two efficient methods for migrating from Azure Postgres to Snowflake.

Why Integrate Azure Postgres to Snowflake?

Integrating Azure Postgres to Snowflake may include several reasons. Some of them are given below:

- Snowflake’s intelligent query optimizer allows your organization’s data analysts to extract and analyze data on demand with low latency.

- Snowflake’architecture works with ad-hoc queries to support your data analysis without affecting other database operations.

- Snowflake’s architecture offers high availability, ensuring the organizational data remains continuously available and safeguarded against outages and unexpected restarts.

- With Snowflake, your organization will have the flexibility to adjust computing power according to your needs. It enables you to scale resources efficiently without experiencing any downtime.

Azure Postgres: A Brief Overview

Azure Postgres is a fully managed relational database service compatible with a PostgreSQL database engine. It mainly focuses on application innovation, and its zone-redundant high availability feature automates other database management tasks.

With Azure Postgres’s intelligent performance optimization and query store, you can quickly create and scale your databases.

Azure Postgres is available in Single-Server or Flexible-Server deployment modes. With a Single Server, you can manage most database routine tasks with less user configuration and control. With a Flexible Server, you can manage database management tasks, server configurations, and cost optimization with more flexibility and granular control.

Snowflake: A Brief Overview

Snowflake is a self-managed service where all its hardware and software components execute entirely on a public cloud infrastructure.

Like shared-disk architecture, Snowflake has a central data repository that is accessible from all virtual compute instances on the platform. This allows the organization to scale the storage and compute resources independently based on its needs. In addition, Snowflake uses Massively Parallel Processing (MPP) compute clusters, similar to shared-nothing architectures, for query processing.

You can connect to Snowflake using several options, including a web-based user interface, SnowSQL, ODBC, and JDBC drivers, and native connectors like Python or Spark.

Methods for Data Migration from Azure Postgres to Snowflake

You can convert Azure Postgres to Snowflake using Hevo Data or manually via Azure Blob Storage.

Method 1: Transfer Azure Postgres Data to Snowflake Using Hevo Data

Hevo Data is a no-code, real-time ELT platform that cost-effectively automates data pipelines according to your preferences. With Hevo Data’s 150+ readily available connectors, you can easily export data from different sources, load it to destinations, and transform it for detailed analysis.

Some of the key features of Hevo Data are given below:

- Data Transformation: Analyst-friendly data transformation approaches allow you to analyze data efficiently. To clean, prepare, and transform data before importing it to the desired destination, you can write a Python-based transformation script or utilize Drag-and-Drop transformation blocks.

- Incremental Data Load: Hevo Data can transfer data in real-time, maximizing bandwidth use on both ends of the data pipeline.

- Auto Schema Mapping: Hevo’s Auto Mapping feature eliminates manual schema management. It recognizes and replicates the incoming data format to the desired destination schema. Based on your data replication needs, you can choose either full or incremental mappings.

Let’s see how to integrate Azure Postgres to Snowflake using Hevo Data.

Step 1: Configuring Azure Postgres as Your Source Connector

Before starting the configuration, make sure the following prerequisites are met:

Here are the steps to configure your Azure Postgres as your source in Hevo:

- Log in to your Hevo account.

- Select the PIPELINES option from the Navigation Bar.

- Click the + CREATE button on the Pipelines List View page.

- In the Select Source Type page, select Azure PostgreSQL as your source type.

- In the Configure your Amazon RDS SQL Server Source page, specify the mandatory fields.

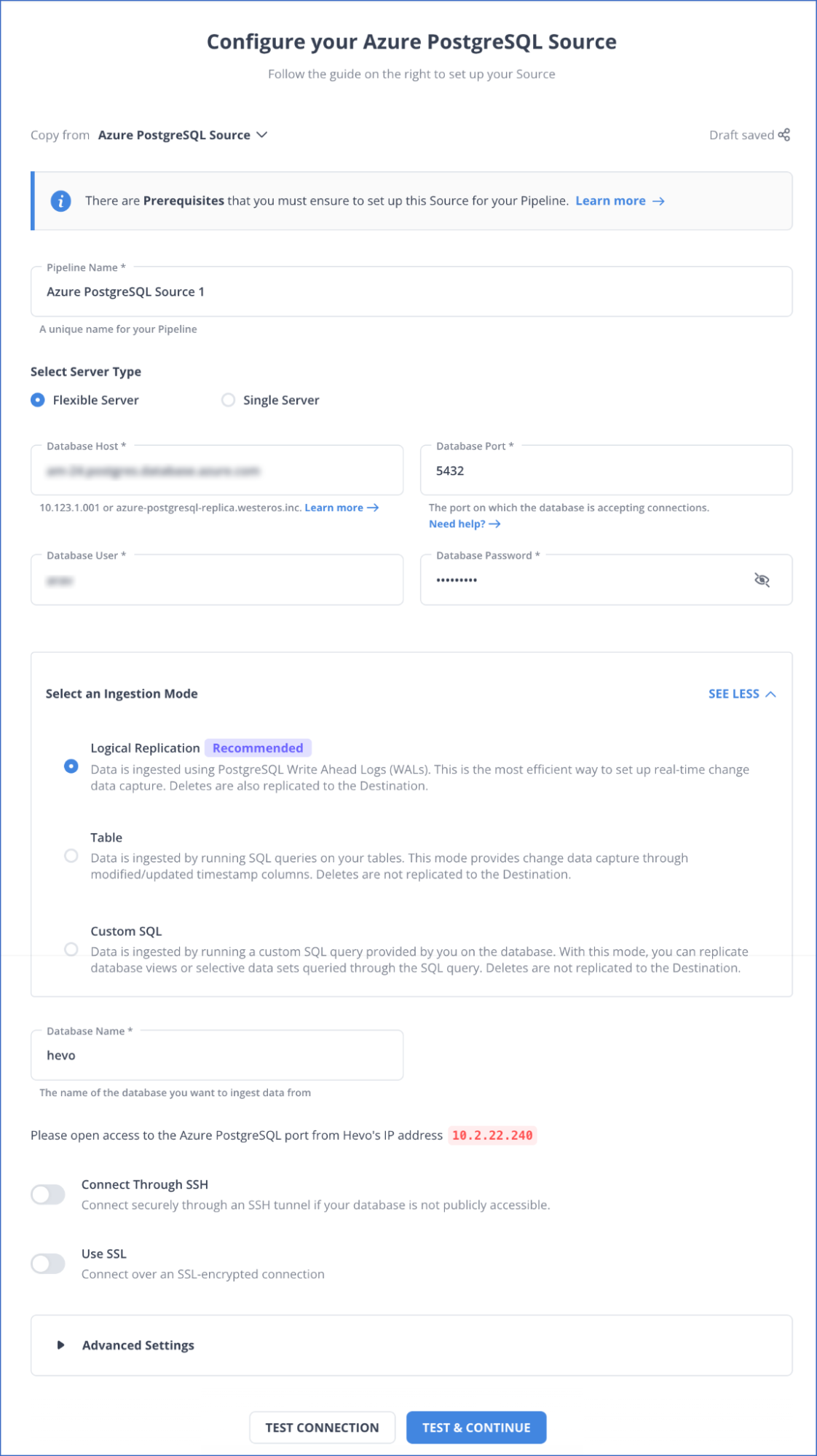

Azure Postgres to Snowflake: Configuring your Azure PostgreSQL Source Page

Azure Postgres to Snowflake: Configuring your Azure PostgreSQL Source Page

- To verify the connection settings, click on the TEST CONNECTION button.

- Click on the TEST & CONTINUE button to complete the source configuration.

To learn more about source configuration, read Azure PostgreSQL documentation in Hevo.

Step 2: Configuring Snowflake as Your Destination Connector

Before you begin, ensure the following prerequisites are in place:

Perform the following steps to configure the Snowflake as your destination in Hevo:

- From the Navigation Bar, choose the option DESTINATIONS.

- Navigate to the Destinations List View page and click the + CREATE button.

- Select Snowflake as your destination type on the Add Destination page.

- Specify the required fields on the Configure your Snowflake Destination page.

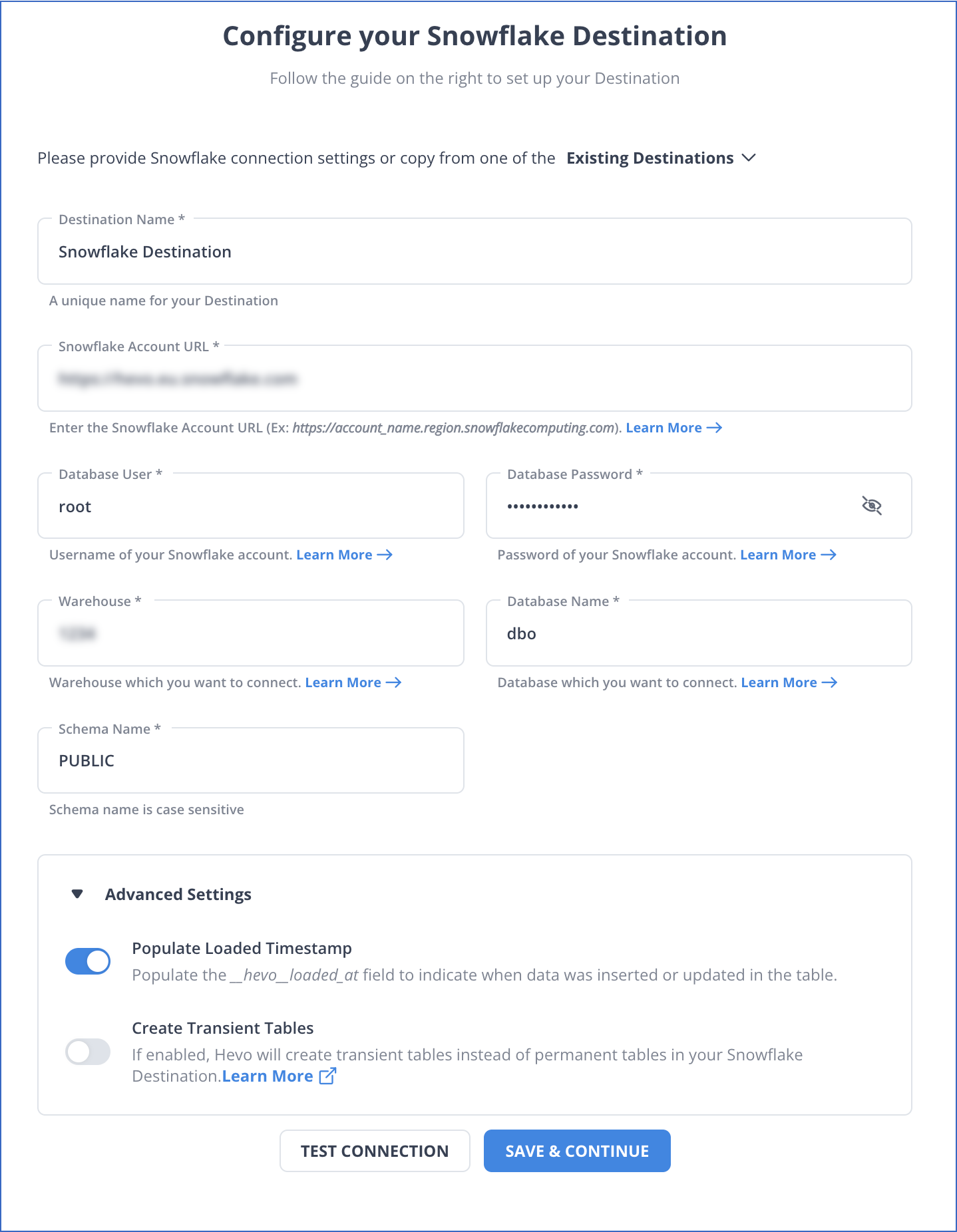

Azure Postgres to Snowflake: Configuring your Snowflake Destination Page

Azure Postgres to Snowflake: Configuring your Snowflake Destination Page

- Click the TEST CONNECTION > SAVE & CONTINUE to complete the destination configuration.

To learn more about destination configuration, read Snowflake documentation in Hevo.

Get Started with Hevo for Free

Method 2: Import Azure Postgres to Snowflake via Azure Blob Storage

With this method, you must initially export data from the Azure Postgres database to Azure Blob Storage using the azure_storage extension. Then, load the data from the Azure Blob Storage container to Snowflake using the COPY INTO command.

Step 1: Export Azure Postgres Data to Azure Blob Storage

Before you start, make sure that the following prerequisites are met:

Let’s go through the steps to export Azure Postgres to Azure Blob Storage:

- Install the azure_storage Extension in the Azure Postgres DB Instance

Open the psql utility and run the following command in the Postgres prompt:

CREATE EXTENSION azure_storage;

- Create a Storage Account

Open the Azure CLI and execute the following command:

az storage account create \

--name MyStorageAccount

--resource-group MyResourceGroup

After creating the storage account, Azure will create two 512-bit keys to access the data in the storage account.

For more information about storage accounts, read how to create a storage account.

- Map the Storage Account with the Access Key

- Open Azure CLI and list out the two access keys associated with your storage account using the following command:

az storage account keys list \

--resource-group MyResourceGroup

--account-name MyStorageAccount

Use the first key to access Azure storage and the second key to keep your account secure when rotating keys. To learn more, read about storage account keys.

- Map the storage account with the access key parameter using the following command:

SELECT azure_storage.account_add('MyStorageAccount', 'SECRET_ACCESS_KEY');

- Export Data from an Azure PostgreSQL Table to Azure Blob Storage

For exporting the data, utilize one of the following methods within the psql utility:

- Run the following

COPY command in the postgres prompt after replacing the sample_table with your desired Azure Postgres table name:

COPY sample_table

TO 'https://MyStorageAccount.blob.core.windows.net/targetblob/sample_table_copied.csv'

WITH (FORMAT 'csv');

- Using the blob_put function, execute the following command by replacing the sample_table with your preferred Azure Postgres table name:

SELECT azure_storage.blob_put('MyStorageAccount', 'targetblob', 'sample_table_copied.csv', subquery1) FROM (SELECT * FROM sample_table) subquery1;

The COPY command and blob_put function help you export the data from the sample_table to a new CSV file named sample_table_copied. This CSV file will be stored in the blob container “targetblob” in the same Azure Storage Account MyStorageAccount”.

Step 2: Import CSV File from Azure Blob Storage to Snowflake

Before you begin, ensure the following prerequisites are in place:

Perform the following steps to copy the data from Azure Blob Storage to Snowflake:

- Log in to your Snowflake account through Snowsight.

- Choose Projects > Worksheets to open an existing worksheet or create a new SQL worksheet.

- In your Snowflake warehouse, execute the following command in the SQL worksheet:

ALTER WAREHOUSE mywarehouse RESUME;

- Create a database and schema in the warehouse using the following command:

CREATE DATABASE sample_database;

CREATE SCHEMA sample_schema;

- You must create a table “my_snowflake_table” whose structure matches the source file.

- Load the data from the named staged files “MyAzureStage” in the Azure blob storage to the Snowflake using the COPY INTO command:

COPY INTO my_snowflake_table

FROM @MyAzureStage

PATTERN='.*sample_table_copied.*.csv';

Read this Snowflake documentation to learn more about loading the data from Azure Blob Storage.

Using the steps in the above method, you can export data from Azure Postgres to Snowflake file.

Limitations of Azure Postgres to Snowflake Migration via Azure Blob Storage

- Technical Expertise: If you are a beginner, you will need significant knowledge and skill to manage data effectively in Azure Postgres, Snowflake, and Azure Blob Storage. This includes optimizing the migration process, troubleshooting issues, and ensuring data integrity and security.

- Migration Costs: Data transfers in and out of Azure Blob Storage incur data egress charges based on the volume of data. Your organization must carefully assess the data transfer requirements and implement best practices to minimize unnecessary costs.

Use Cases of Azure Postgres to Snowflake Migration

- Cross-cloud capabilities: Employing a multi-cloud approach empowers organizations to avoid vendor lock-in. Additionally, it helps your organization utilize a wide range of AWS, Azure, or GCP services, including security, analytics, application migration, and development.

- Zero-copy cloning: Cloning data through copying presents multiple drawbacks, such as data duplication, additional storage needs, and time consumption if the data volume is high. With Snowflake’s Zero-copy cloning, your organization can clone large data volumes within seconds without storage or computation costs.

Conclusion

Migrating data from Azure Postgres to Snowflake offers significant advantages in real-time analytics, data sharing, and scalability.

In this article, you have learned how to connect Azure Postgres to Snowflake using Hevo Data or manually through Azure Blob Storage.

If you are unfamiliar with Azure Blob Storage, moving your data between Azure Postgres and Snowflake might be challenging. As an intermediary tool for data migration, Azure Blob Storage can also become expensive due to its in-and-out transfer costs.

To overcome these challenges, you can choose Hevo Data. Its pre-built connectors help you transfer terabytes of data in minutes and at a low cost.

If you want to learn more about strategies and best practices for data migration, read this Hevo article.

Want to take Hevo for a spin? Sign Up for a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also checkout our unbeatable pricing to choose the best plan for your organization.

Share your experience of Azure Postgres to Snowflake integration in the comments section below!

Frequently Asked Questions (FAQs)

Q. What drives the migration of data to the Snowflake warehouse for many organizations?

A. There are several reasons why organizations choose Snowflake as their destination platform. A few of them are given below:

- Snowflake can handle structured and semi-structured data formats, including Parquet, XML, CSV, ORC, JSON, AVRO, and more.

- Snowflake complies with the ANSI SQL standards, offering comprehensive support for analytical functions and automated query optimization.

Radhika is a Content Specialist at Hevo Data with over 5 years of experience. She excels at creating high-quality content related to data engineering, especially data integration, and data analytics. Her expertise lies in translating complex technical concepts into accessible and engaging content for diverse audiences.

Sony is a former Computer Science teacher turned technical content writer, specializing in crafting blogs and articles on topics such as ML, AI, Python Frameworks, and other emerging trends in Data Science and Analytics.