How Providence Health Built a Model marketplace using Databricks

Providence's MLOps Platform

Providence is a healthcare organization with 120,000 caregivers serving over 50 hospitals and 1,000 clinics across seven states. Providence is a pioneer in moving all electronic healthcare records (EHR) data to the cloud and is a healthcare leader in leveraging cloud technology to develop a large inventory of Artificial Intelligence (AI) and Machine Learning (ML) models.

The recent popularity of Large Language Models (LLMs) has created an unprecedented demand to deploy open source LLMs fine-tuned on Providence's rich EHR data set. Home-brewed AI/ML models and fine-tuned LLMs have created an even more extensive inventory of AI/ML models at Providence. The data science team at Providence embarked on an ambitious project to build an MLOps platform to develop, validate and deploy a large inventory of AI/ML models at scale.

Providence's MLOps platform has three pillars: model development, model risk management, and model deployment. The data science team has been building processes, best practices, and governance as part of the first two pillars of the MLOps platform. We partnered with Databricks to build the third pillar of the MLOps platform: model deployment.

There are over sixty-five Databricks workspaces at Providence. Each of these workspaces has an inventory of models, with some in high demand across the enterprise. The problem Providence encountered was how to deploy high-demand AI/ML models without searching all sixty-five workspaces for models. Once popular models are identified, how can the governance infrastructure provide access to these models with minimal effort?

Providence presented this problem to Databricks who devised a solution to create "Providence's Model Marketplace," a single and centralized Databricks workspace with a repository of popular AI/ML models. This solution solves two major problems: (1) caregivers across the enterprise just need access to the "Models Marketplace" to deploy any model from over sixty-five workspaces. (2) The "Providence's Model Marketplace" is one workspace where the enterprise searches when deploying models, therefore reducing model governance complexity.

Over several weeks, Providence's team of platform engineers, DevOps engineers, and Data Scientists worked closely with the Databricks Professional Services team to build "Providence's Model Marketplace." Providence and Databricks teams met multiple times a week to share updates, resolve blockers, and transfer knowledge. As a result, when the Databricks team completed the project, Providence seamlessly picked up the project and immediately began using and improving the platform.

MLOps Platform Architecture

Data Scientists often generate tens of hundreds of models over a short period of time. To better govern the existing models, having all production-grade ML models live in one centralized workspace is ideal so they can be easily looked up or shared across teams.

Databricks workspaces represent a natural division among business groups or teams. In order to have all production versions of ML models live in one curated workspace, Databricks proposed the above diagram for architecture — using external storage storage as an intermediate layer for exporting and importing models.

In this project, Providence was restricted to using Databricks Generally Available features, therefore Models in Unity Catalog functionality was not considered. In general, we proposed 2 high-level steps.

- Export: A daily scheduled job (run by the service principal) runs in every workspace to export the latest production versions of ML models into external storage.

- Import: There is a daily scheduled job (also run by the service principal) running in the centralized "Providence's Models Marketplace" workspace to import the latest production versions of ML models into this "curated" workspace from external storage storage.

Implementation

All code and jobs were run by service principals. The code was built on top of the MLflow export/import tool.

The logic of the implementation is straightforward. When data scientists are ready to push a version of a model into production, they will first transition the model stage into "Production" in the MLflow model registry in their dev Databricks workspace. After that, the export and import logic details are explained in the following sections.

Export:

The export code is run in all of the dev workspaces. The algorithm, as described below, grabs the latest production version of the model that has not been exported before. Then it exports the corresponding files into DBFS, and copies them into external storage. Those files include model files together with its MLflow experiments and other artifacts. After this latest production version of the model has been exported, we update the description as "Exported Already On …….".

Algorithm

- Get a qualified list of models in one dev workspace (has at least 1 production version)

- Grab the current export summary delta table from external storage. If it exists, overwrite to a Delta table

- For each model in the qualified list:

Check the latest production version of this model:- If the description contains key work "Exported Already On", do not proceed any further

- Else (the description does not contain keyword "Exported Already On"):

- Proceed to export model and files;

- Modify the original model's description to "Exported Already On …"

- Record the export information by inserting a new row into the internal delta table

- Overwrite content from the internal delta table to the "export summary" delta table from external storage

After the export, make the description of the original model's latest production version as "Exported Already On......"

client.update_model_version(

name=model_name,

version=latest_production_version,

description="Exported Already On " + todaysdate + ", old description: " + latest_description_production_version

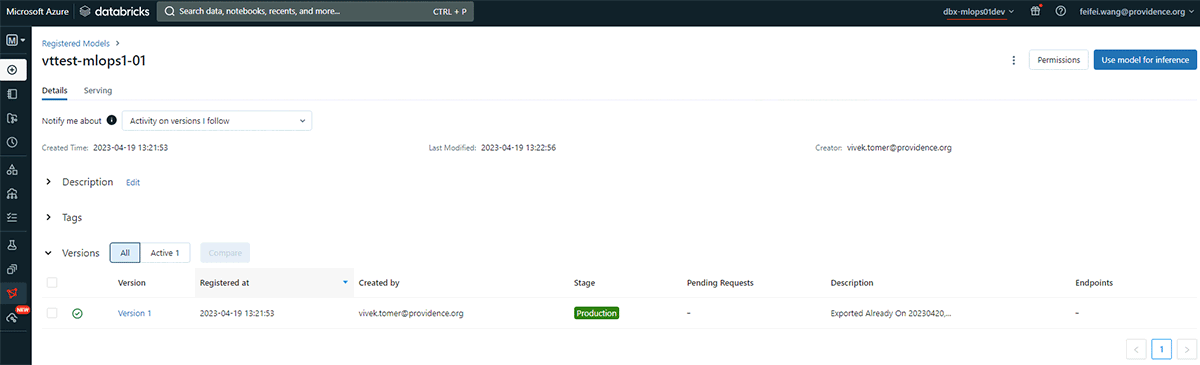

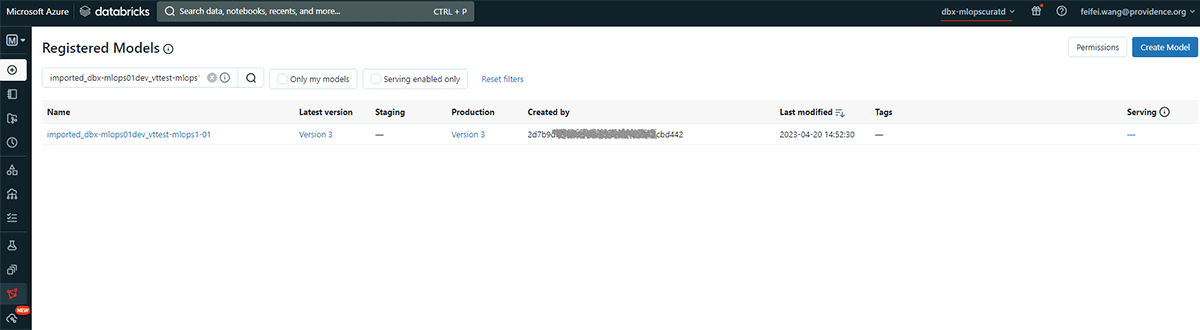

)The two screenshots below demonstrate first exporting the latest production Version 1 model created by Vivek in the "dev01" workspace, then importing it to the "Providence's Models Marketplace" workspace by a service principal.

The export screenshot:

The import screenshot:

Import:

Let's take a look at the import logic for production models from external storage into the "Providence Model Marketplace" workspace.

Algorithm

- Filter export table down to today's date, per workspace, per model, per latest exported version (or latest timestamp) only.

- Grab the current import summary delta table from the external storage location and overwrite to an internal delta table

- For each row in the filtered table from step 1:

- Grab information, model_name, original_workspace_id, exported version, etc.

- Import the model files and MLflow experiment

- Record this import information by inserting a new row into the same internal delta table

- Overwrite content from the internal delta table to the "import summary" delta table from external storage

Future Steps

The project took an evolutionary architecture approach to deal with Databricks features not yet in general availability (GA). For example, "Models in Unity Catalog" offers similar functionality, but (as of the time of this writing) it is in preview. When in GA, "Models in Unity Catalog" would be leveraged to make the curated models available at the Providence Model Marketplace workspace. A Databricks workflow triggered from CI/CD would still be used as the mechanism to apply the corresponding permissions to the approved models.

Providence continues to build upon the work done by Databricks. In recent months, requests to implement large language models (LLMs) in various applications and processes at Providence have significantly increased. As a result, we are fine-tuning open-source LLMs on Providence's EHR data and deploying it on the MLOps platform created in partnership with Databricks.

The DevOps engineering team at Providence is creating a DevOps pull request process to download, distribute and deploy open-source models securely across the enterprise. Providence's MLOps platform is secure, open, and fully automated. A Providence caregiver can easily access any home-brewed or open-source LLM by simply making a pull request.

Conclusion

At Providence, our strength lies in Our Promise of "Know me, care for me, ease my way." Working at our family of organizations means that regardless of your role, we'll walk alongside you in your career, supporting you so you can support others. We provide best-in-class benefits and we foster an inclusive workplace where diversity is valued, and everyone is essential, heard, and respected. Together, our 120,000 caregivers (all employees) serve in over 50 hospitals, over 1,000 clinics and a full range of health and social services across Alaska, California, Montana, New Mexico, Oregon, Texas and Washington. As a comprehensive health care organization, Providence serves more people, advancing best practices, and continue our more than 100-year tradition of serving the poor and vulnerable.

If you are interested in job searching, please feel free to apply to join Providence and the team. Here is Providence's careers website: https://www.providenceiscalling.jobs/

About the authors:

We would like to thank Young Ling, Patrick Leyshock, Robert Kramer and Ramon Porras from Providence for supporting the MLOps project. We would also like to thank Andre Mesarovic, Antonio Pinheirofilho, Tejas Pandit, and Greg Wood for developing the MLflow-export-import tool: https://github.com/mlflow/mlflow-export-import.

About the authors

- Feifei Wang is a Senior Data Scientist at Databricks, working with customers to build, optimize, and productionize their ML pipelines. Previously, Feifei spent 5 years at Disney as a Senior Decision Scientist. She holds a Ph.D co-major in Applied Mathematics and Computer Science from Iowa State University, where her research focus was Robotics.

- Luis Moros is a Staff Data Scientist consultant at the ML Practice of Databricks. He has been working in software engineering for more than 20 years, focusing in Data Science and Big Data in the last 8. Prior to Databricks, Luis has applied Machine Learning and Data Science in different industries including: Financial Services, BioTech, Entertainment, and Augmented Reality.

- Vivek Tomer is a Director of Data Science at Providence where he is responsible for creating and leading strategic enterprise AI/ML projects. Prior to Providence, Mr. Tomer was Vice President, Model Development at Umpqua Bank where he led the development of the bank's first loan-level credit risk and customer analytics models. Mr. Tomer has two master's degrees from the University of Illinois at Urbana-Champaign, one in Theoretical Statistics and the other in Quantitative Finance, and has over a decade of experience in solving complex business problems using AI/ML models.

- Lindsay Mico is the Head of Data Science for Providence, with a focus on enterprise scale AI solutions and cloud native architectures. Originally trained as a cognitive neuroscientist and statistician, he has worked across industries including natural resource management, telecom, and healthcare.