Cybersecurity Lakehouse Best Practices Part 1: Event Timestamp Extraction

In this four-part blog series "Lessons learned from building Cybersecurity Lakehouses," we will discuss a number of challenges organizations face with data engineering when building out a Lakehouse for cybersecurity data, and offer some solutions, tips, tricks, and best practices that we have used in the field to overcome them. If you want to build your own Cybersecurity Lakehouse, this series will educate you on the challenges and offer a way forward.

Databricks has built a practical low-code configuration solution for efficiently handling and standardizing cyber logs. Our Lakehouse platform simplifies data engineering, facilitating a faster shift to search, analytics, and streamed threat detection. It complements your existing SIEM and SOAR systems, enhancing your cybersecurity operations without unnecessary complexity.

In part one, we begin with the most fundamental element of any cyber analytics engine: uniform event timestamp extraction. Accurate timestamps are among the most important elements in security operations and incident response. Without accuracy, generating a sequence of events taken by system users or bad actors is impossible. In this blog, we will look at some of the strategies available to identify, extract, and transform event timestamp information into a Delta Lake, such that they are usable within a cyber context.

Why is event time so important?

Machine-generated log data is messy at best. There are well-defined structures for specific file types (JSON, YAML, CSV etc.), but the content and format of the data that makes up those files are largely left to the developers interpretation. While time formats exist (ISO 8601), adherence to them is limited and subjective - perhaps log formats predate those standards, or geographic bias for a specific format drives how those timestamps are written.

Despite the varied time formats reported in logs, we are responsible for normalizing them to ensure interoperability with all log data being received and analyzed in any cyber engine.

To emphasize the importance of interoperability between timestamps, consider some of the tasks a typical security operations center (SOC) needs to answer daily.

- Which computer did the attacker compromise first?

- In what order did the attacker move from system to system?

- What activities occurred, and in what order once the initial foothold had been established?

Without accurate and unified timestamps, it is impossible to generate a timeline of activities that occurred to answer these questions effectively. Below, we examine some of the challenges and offer advice on how to approach them.

Timestamp Issues

Multiple or single column: Before considering how to parse an event timestamp, we must first isolate it. This may already happen automatically in some log formats or spark read operations. However, in others, it is unlikely. For instance, comma-separated values (CSV) files will be extracted by Spark as individual columns. If the timestamp is isolated by one of those, then great! However, a machine generating syslog data likely lands as a single column, and the timestamp must be isolated using regular expressions.

Date and time formats: These cause a lot of confusion in log files. For instance, '12/06/12' vs. '06/12/12'. Both formats are valid, but identifying the day, month, and year is challenging without knowing the local system log format.

Timezone Identification: Similar to data and time formats, some systems either report the timezone of the timestamp, whereas others assume a local time and do not print the timezone at all. This may not be an issue if all data sources are reported and analyzed within the same time zone. However, organizations need to analyze tens or hundreds of log sources from multiple time zones in today's connected and global world.

Identifying, extracting, and parsing event timestamps require consistently and effectively representing time within our storage systems. Below is an example of how to extract and parse a timestamp from a syslog-style Apache web server.

Extracting Timestamps Scenario

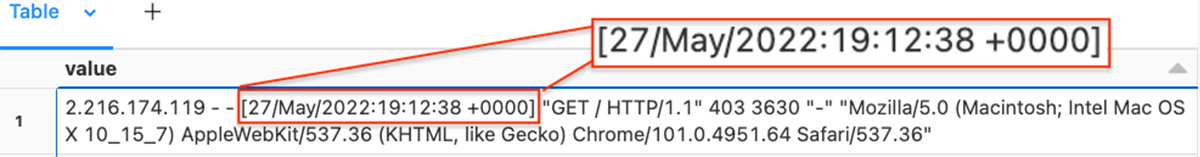

In the following example, we look at the standard Apache web server log format. The data is generated as a text record and is read as a single column (value) in Databricks. Therefore, we need to extract the event timestamp using a regular expression.

Example regex to extract the event timestamp from a single column of data:

from pyspark.sql.functions import regexp_extract

TIMESTAMP_REGEX = '^([^ ]*) [^ ]* ([^ ]*) \[([^\]]*)\]'

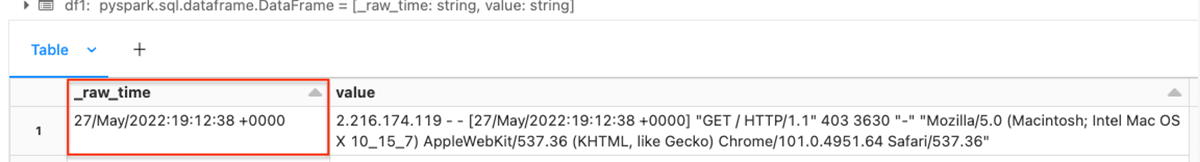

df1 = df.select(regexp_extract("value", TIMESTAMP_REGEX, 3).alias('_raw_time'), "*")

display(df1)We use the PySpark regexp_extract function to extract the part of the string that has the event timestamp, and create a column _raw_time with the matching characters.

Resulting dataframe:

Parsing Timestamps

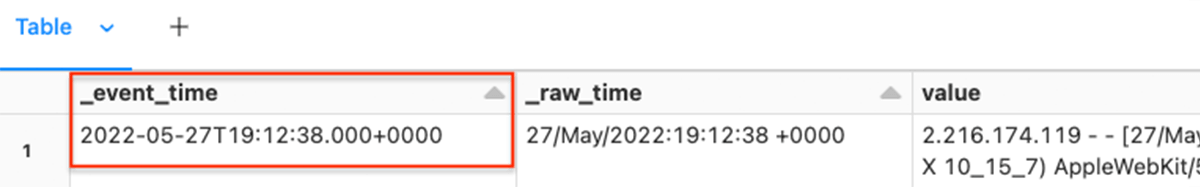

With the event timestamp extracted as a new column, we can now normalize it into an ISO 8601 standard timestamp.

To normalize the timestamp, we need to define the format using the date/time format modifiers and convert it to a unix-style timestamp before transforming it to the ISO formatted timestamp format.

TIMESTAMP_FORMAT = "dd/MMM/yyyy:HH:mm:ss Z"Example transformation to an ISO 8601 formatted event timestamp:

from pyspark.sql.functions import to_timestamp, unix_timestamp, col

TIMESTAMP_FORMAT="dd/MMM/yyyy:HH:mm:ss Z"

df2 = df1.select(

to_timestamp(unix_timestamp(col("_raw_time"), TIMESTAMP_FORMAT).cast("timestamp"), "dd-MM-yyyy HH:mm:ss.SSSZ").alias("_event_time")

)

display(df2)We use the PySpark functions unix_timestamp and to_timestamp to generate the new metadata column _event_time.

Resulting dataframe:

The resulting column is cast to Timestamp Type to ensure consistency and data integrity.

Tips and best practices

In our journey with helping many customers with cyber analytics, we have gathered some invaluable advice and best practices that can significantly enhance the ingest experience.

Explicit time format: When building parsers, explicitly setting the time format will significantly speed up the parse task when compared to passing a column to a generic library that needs to test many formats to find one that returns an accurate timestamp column.

Column Naming: Prefix metadata columns with an underscore. This allows easy distinction between machine-generated data and metadata, with the added bonus of appearing left-justified by default in data frames and tables.

Event Time vs. Ingest Time: Delays occur in data transmission. Add a new metadata column for ingest time and create operational rigor to identify data sources currently behind or missing.

Defaults: Strategize over missing or undetermined timestamps. Things can and do go wrong. Make a judgment call over how to process missing timestamps. Some of the tactics we have seen are:

- Set the date to zero (01/01/1970) and create operational rigor to identify and correct data.

- Set the date to the current ingest time and create operational rigor to identify and correct data

- Fail the pipeline entirely

Conclusion

Well-formed and accurate event timestamps are critical for enterprise security operations and incident response for generating event sequences and timelines to investigate cyber threats. Without interoperability across all data sources, it is impossible to maintain an effective security posture. Complexities such as regular expression extraction and parsing discrepancies in data sources underpin this. In helping many customers to build out Cybersecurity Lakehouses, we have created practical solutions to speed up this process.

Get in Touch

In this blog, we worked through a single example of the many possible timestamp extraction issues encountered with semi-structured log files. If you want to learn more about how Databricks cyber solutions can empower your organization to identify and mitigate cyber threats, contact [email protected] and check out our new Lakehouse for Cybersecurity Applications webpage.