Part 2 of the blog series on deploying Workflows through Terraform. Here we focus on how to convert inherited Workflows into Terraform IaC.

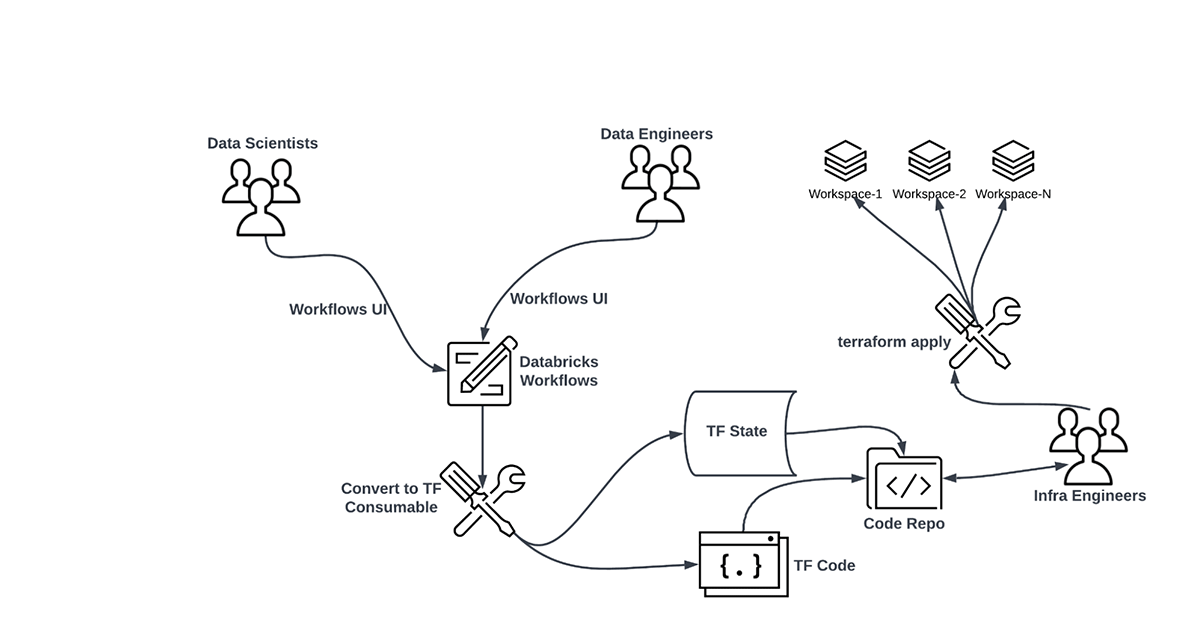

In the previous blog Databricks Workflows through Terraform we learnt how to use the Terraform Databricks Provider to create from scratch Infrastructure-as-Code (IaC) for Multi-Task Jobs / Workflows. Workflows UI is easy, modularized, and provides a visual representation of the dependencies. Hence it may be a preferred way to build workflows for most engineers and data scientists. However, certain organizations may prefer to manage their infrastructure using code and may even have a team of dedicated infra engineers. In this case, it is still possible to inherit a UI-created workflow and maintain it as code. Below is how it may look like in practice:

In this blog, we will discuss tooling which can convert an existing workflow into Terraform IaC. We will also take a look at various ways in which such IaC can be deployed.

Terraform Experimental Resource Exporter

As part of the Terraform Databricks Provider package, Databricks offers a tool known as the Experimental Resource Exporter. Work is still ongoing to turn it into an all-in-one IaC generator for Databricks resources on all supported clouds; however, even in its present shape, this tool can generate HashiCorp Configuration Language (HCL) code for Multi-task Jobs. Below are the steps to work with it:

- Set up the Terraform Databricks Provider

- Set up authentication to connect with the source Databricks Workspace

- Execute the Exporter utility and generate Terraform IaC

- Refactor and redeploy the Terraform IaC into other workspaces

Set up the Terraform Databricks provider

The Exporter utility is shipped with the binaries of the Terraform Databricks provider, and setting up the provider downloads those binaries to a local directory. Hence, begin by creating a folder and a file <my_provider>.tf inside it. Add the code below to the file (choose the latest provider version from its release history), then execute the command terraform init (Terraform needs to be installed for this).

terraform {

required_providers {

databricks = {

source = "databricks/databricks"

version = "1.6.1" # provider version

}

}

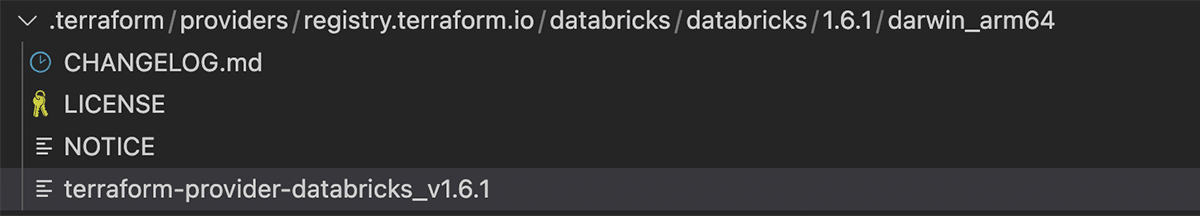

}Terraform will download the binaries and save them in a .terraform folder inside the working directory. On an Apple M1 Pro Mac (macOS Monterey V 12.5.1), the downloads look like this:

Our artifact of interest is the file terraform-provider-databricks_v1.6.1 pertaining to the provider version "1.6.1" specified in the <my_provider >.tf file.

Set up authentication

At the time of publishing this blog, the Exporter utility supports only two modes of authentication with Databricks:

- The DEFAULT profile stored in the Databricks CLI credentials file ~/.databrickscfg. It is created by the databricks configure --token command. Check this page for more details.

- The environment variables DATABRICKS_HOST and DATABRICKS_TOKEN, set through commands such as export DATABRICKS_HOST=https://my-databricks-workspace.cloud.databricks.com and export DATABRICKS_TOKEN=my_databricks_api_token. You can refer to this documentation to generate a Databricks API token.
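The credentials above are read by the Exporter itself. When the generated code is later deployed with terraform plan or terraform apply, the provider block needs credentials as well. A minimal sketch, assuming the same DEFAULT profile is reused for deployment:

```hcl
# Authenticate the Databricks provider with the DEFAULT profile
# from ~/.databrickscfg (created by: databricks configure --token).
provider "databricks" {
  profile = "DEFAULT"
}

# Alternative: an empty block, provider "databricks" {}, lets the provider
# pick up DATABRICKS_HOST and DATABRICKS_TOKEN from the environment instead.
```

Keeping credentials out of the .tf files this way means the same code can target a different workspace simply by switching the profile or the environment variables.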

Execute the Exporter

A command like this can be executed to fetch the Terraform HCL code for a job matching the name my-favorite-job, provided it has been active in the last 120 days:

```shell
.terraform/providers/registry.terraform.io/databricks/databricks/1.6.1/darwin_arm64/terraform-provider-databricks_v1.6.1 exporter -skip-interactive -match=my-favorite-job -listing=jobs -last-active-days=120 -debug
```

To fetch all jobs, the command below should work:

```shell
.terraform/providers/registry.terraform.io/databricks/databricks/1.6.1/darwin_arm64/terraform-provider-databricks_v1.6.1 exporter -skip-interactive -services=jobs -debug
```

There is also an interactive mode for running this utility. The directory where the Terraform files are generated can be specified as an argument. As of version 1.6.1, a job must have run at least once to be picked up by this utility. Detailed documentation for the Exporter can be found in the Terraform registry.

Refactor and redeploy the Terraform IaC

The IaC files generated by the Exporter utility look much like those created in the previous blog, Databricks Workflows through Terraform. These files can be version-controlled with a git provider. Infra engineers can use this code as a base and then modularize it. Hardcoded values may be converted into variables (declared in vars.tf and set through .tfvars files or the -var option of terraform apply). For example, suppose we want to deploy the same job in two workspaces but with different cluster configurations for the underlying tasks, and we want different people to be notified when the Job or its Tasks fail. To implement this scenario, we can keep multiple variable files with different resource configurations for each workspace, environment, or even business domain.
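A minimal sketch of the per-workspace cluster scenario, assuming the exported job runs its task on a shared job cluster. The variable names (node_type_id, num_workers), the resource name, the job cluster and task keys, the notebook path, and the node type are hypothetical placeholders, not actual Exporter output:

```hcl
# vars.tf: cluster settings that differ between workspaces (hypothetical names)
variable "node_type_id" {
  type        = string
  description = "Cloud instance type for the job cluster"
}

variable "num_workers" {
  type        = number
  description = "Number of workers for the job cluster"
  default     = 2
}

# Job resource refactored to read its cluster settings from variables
resource "databricks_job" "my_favorite_job" {
  name = "my-favorite-job"

  job_cluster {
    job_cluster_key = "shared_cluster"
    new_cluster {
      spark_version = "11.3.x-scala2.12"
      node_type_id  = var.node_type_id
      num_workers   = var.num_workers
    }
  }

  task {
    task_key        = "ingest"
    job_cluster_key = "shared_cluster"
    notebook_task {
      notebook_path = "/Shared/ingest" # hypothetical notebook
    }
  }
}

# workspace-a.tfvars (one such file per workspace), for example:
#   node_type_id = "i3.xlarge"
#   num_workers  = 4
```

Each workspace then gets its own variable file, applied with something like terraform apply -var-file="workspace-a.tfvars", while the job definition itself stays identical.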

Another point to note is that the IaC generated for a Workflow through the steps above cannot be executed in a vacuum. As described in the prior blog, every Workflow depends on many other resources such as identities, cluster policies, instance pools, and git credentials. IaC for these resources also needs to be generated in a similar way so that all the pieces of the jigsaw puzzle fit together coherently.
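One way to express such a dependency in the job code is to reference the other resource directly, so Terraform knows to create it first. A sketch, assuming a hypothetical cluster policy that pins the Databricks Runtime version; the resource names, policy name, policy definition, and node type are illustrative only:

```hcl
# Hypothetical cluster policy managed alongside the job
resource "databricks_cluster_policy" "jobs_policy" {
  name = "jobs-policy"
  definition = jsonencode({
    "spark_version" = { "type" = "fixed", "value" = "11.3.x-scala2.12" }
  })
}

resource "databricks_job" "policy_governed_job" {
  name = "policy-governed-job"

  job_cluster {
    job_cluster_key = "shared_cluster"
    new_cluster {
      # Referencing the policy's id creates an implicit dependency,
      # so Terraform creates the policy before the job.
      policy_id     = databricks_cluster_policy.jobs_policy.id
      spark_version = "11.3.x-scala2.12"
      node_type_id  = "i3.xlarge"
      num_workers   = 2
    }
  }

  # task blocks omitted for brevity
}
```

A hardcoded policy ID would only be valid in the source workspace; a resource reference like this resolves to whatever ID the policy receives in the target workspace.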

As an exercise, let's implement the variable email notifications scenario. Whenever a workflow starts, succeeds or fails, we want to send notifications to different email addresses decided by the environment type (dev, test or prod). We start by adding to the working IaC directory a Terraform file (my-variables.tf) which declares two variables – environment and email_notification_groups.

```hcl
variable "environment" {
  default = "dev"
}

variable "email_notification_groups" {
  default = {
    "dev" = {
      "on_start"   = ["[email protected]"]
      "on_success" = ["[email protected]"]
      "on_failure" = ["[email protected]"]
    }
    "test" = {
      "on_start"   = ["[email protected]"]
      "on_success" = ["[email protected]"]
      "on_failure" = ["[email protected]"]
    }
    "prod" = {
      "on_start"   = ["[email protected]"]
      "on_success" = ["[email protected]"]
      "on_failure" = ["[email protected]"]
    }
  }
  description = "Environment decides who gets notified"
}
```

Inside the Terraform IaC for the multi-task job, we remove any hardcoding from the Email Notifications block and make it dynamic (var.email_notification_groups and var.environment are used to access the values of the declared variables).

email_notifications {

on_failure = var.email_notification_groups[var.environment]["on_failure"]

on_start = var.email_notification_groups[var.environment]["on_start"]

on_success = var.email_notification_groups[var.environment]["on_success"]And finally, we inject the environment dynamically from the command line:

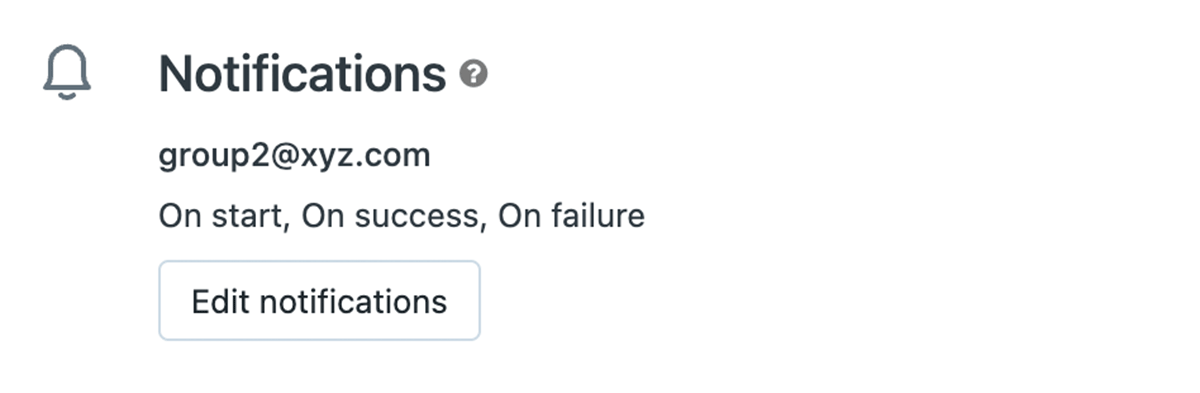

terraform apply -var="environment=test"It results in a Workflow with an Email Notifications section like the below:

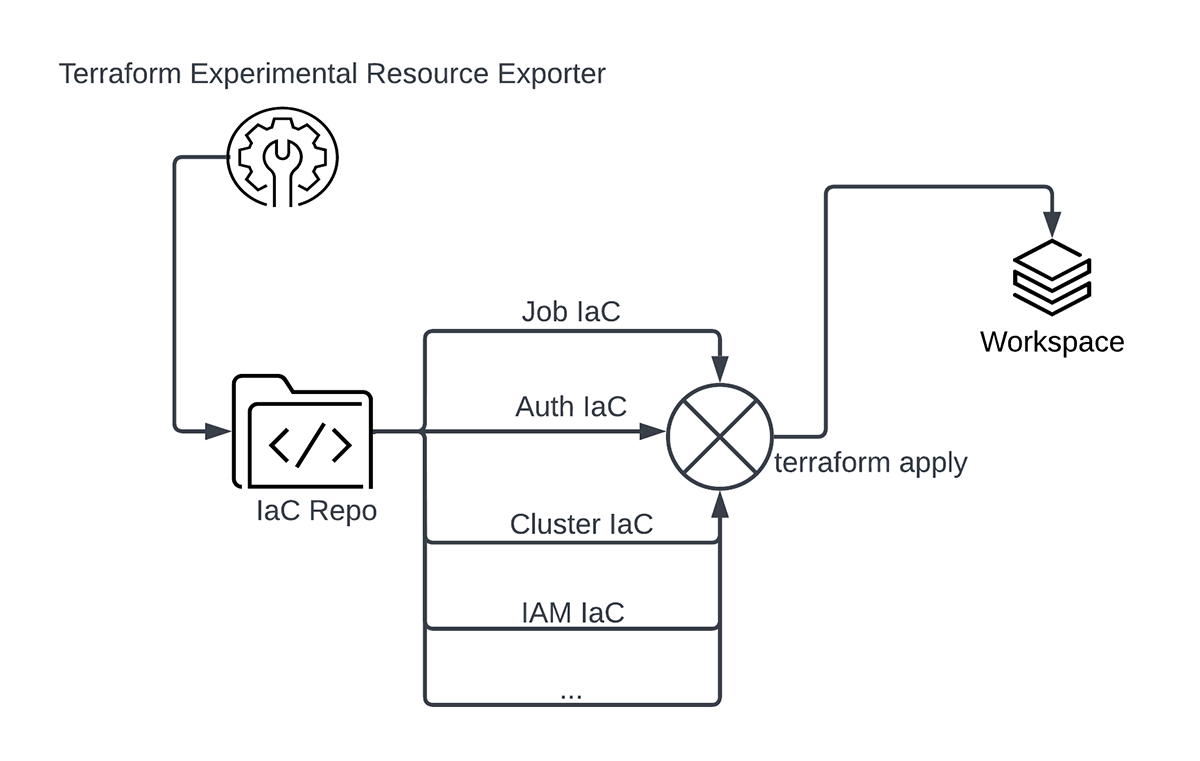

The diagram below summarizes how different configurations can come together at run time during deployment and generate many variations of a Workflow.
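Because environment is used as a map key, a typo such as -var="environment=qa" would only surface as an invalid-index error when the lookup is evaluated. A variant of the environment declaration above, using a Terraform validation block, rejects unknown values up front with a clearer message (a sketch; the description and error wording are our own):

```hcl
variable "environment" {
  type        = string
  default     = "dev"
  description = "Deployment environment; selects the notification recipients"

  # Fail fast if the value is not one of the keys in email_notification_groups
  validation {
    condition     = contains(["dev", "test", "prod"], var.environment)
    error_message = "The environment value must be one of: dev, test, prod."
  }
}
```

The list in the condition has to be kept in sync with the keys of email_notification_groups when a new environment is added.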

You can refer to the Illimity Bank Disaster Recovery case study to see how Illimity Bank used the Exporter utility to generate Terraform IaC for their Databricks resources and then redeployed them in their secondary environments.

Conclusion

With the collaboration of data engineers, data scientists, and infra engineers, organizations can build modular and scalable workflows that can be deployed into multiple workspaces at the same time through Terraform Infrastructure-as-Code. Writing IaC from scratch for each and every Databricks workflow can be a daunting task. The Exporter utility can come in handy to generate baseline Terraform code that can then be extended as required.

Get Started

Learn Terraform

Databricks Terraform Provider

Databricks Workflows through Terraform