Better Alerts: Announcing New Workflows Notifications

Databricks Workflows is a fully-managed orchestration service that is deeply integrated with the Databricks Lakehouse Platform. Workflows is a great alternative to Airflow and Azure Data Factory for building reliable data, analytics, and ML workflows on any cloud without needing to manage complex infrastructure. To help data teams sleep better at night, we are happy to announce that you can now receive job notifications via webhook and Slack, so you can easily integrate Workflows into your operations stack. We are also rolling out streamlined email notifications.

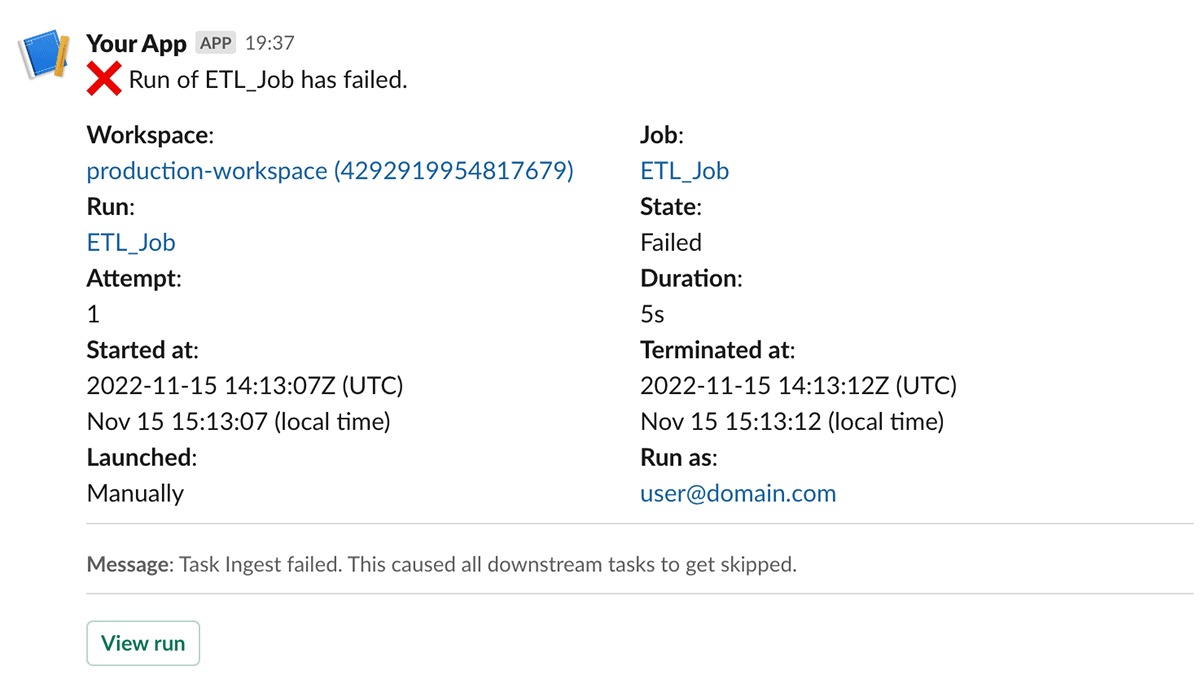

Workflow notifications for Slack and webhooks

You can now send Workflows notifications to operational monitoring systems through a new webhook notification destination. A direct Slack integration also makes it easy to keep your team informed about important events, such as a failed job run. These richer notifications let data teams collaborate directly in the tools they already use.

Future integrations will add notifications to Microsoft Teams and PagerDuty.

This feature is currently in Public Preview.
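A webhook destination delivers notifications as HTTP requests to an endpoint you control. The sketch below shows a minimal receiving side built only on the Python standard library. It assumes the notification arrives as a JSON body in a POST request; the field names `event_type`, `job_name`, and `run_id` are hypothetical placeholders, not the documented payload schema, so check what your workspace actually sends before relying on them.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def summarize_notification(payload: dict) -> str:
    """Turn a notification payload into a one-line alert message.

    The keys read here ("event_type", "job_name", "run_id") are hypothetical
    placeholders, not a documented schema.
    """
    event = payload.get("event_type", "unknown_event")
    job = payload.get("job_name", "unknown_job")
    run = payload.get("run_id", "?")
    return f"[{event}] job={job} run={run}"


class NotificationHandler(BaseHTTPRequestHandler):
    """Accepts JSON POST requests and prints a one-line summary of each."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            payload = json.loads(body)
        except json.JSONDecodeError:
            # Reject bodies that are not valid JSON.
            self.send_response(400)
            self.end_headers()
            return
        if not isinstance(payload, dict):
            # This sketch only handles JSON objects at the top level.
            self.send_response(400)
            self.end_headers()
            return
        # Replace this print with a call into your paging or ticketing system.
        print(summarize_notification(payload))
        self.send_response(200)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Run the receiver until interrupted; the default port is arbitrary."""
    HTTPServer(("0.0.0.0", port), NotificationHandler).serve_forever()
```

Calling `serve(8080)` starts the receiver locally. Keeping the parsing logic in `summarize_notification` separate from the HTTP handler makes it easy to adapt to the real payload shape, or to forward the message to another tool, without touching the server code.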

Streamlined email notifications

We've also streamlined our email notifications with more context and a modern design. Notification emails now include important details such as run duration and error messages.

The new email notifications are available to all customers immediately on an opt-in basis per workspace. Workspace admins can enable them in Workspace Settings.

Get started with Databricks Workflows

To experience the productivity boost that a fully-managed, integrated lakehouse orchestrator offers, we invite you to create your first Databricks Workflow today. Get started for free.