Modular Orchestration with Databricks Workflows

Thousands of Databricks customers use Databricks Workflows every day to orchestrate business-critical workloads on the Databricks Lakehouse Platform. Many of these use cases require non-trivial workflows: DAGs (Directed Acyclic Graphs) with a very large number of tasks and complex dependencies between them. Defining, testing, managing, and troubleshooting workflows like these is challenging and time-consuming.

Breaking down complex workflows

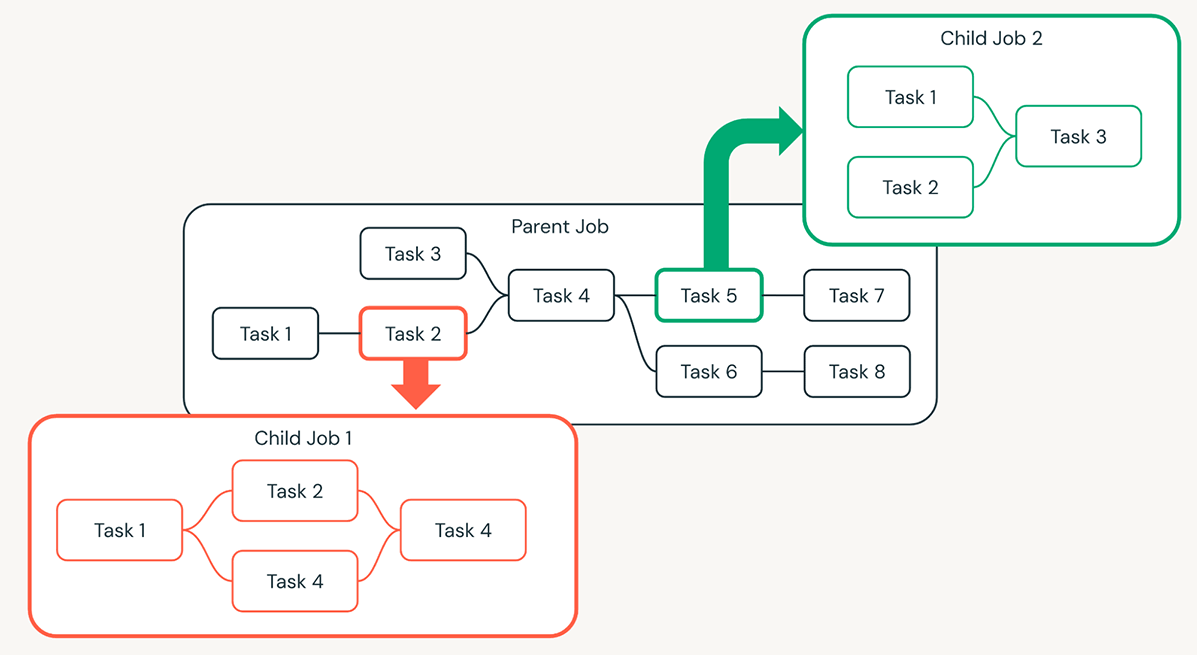

One way to simplify complex workflows is to take a modular approach. This means breaking a large DAG into logical chunks, or smaller "child" jobs, that are defined and managed separately. The "parent" job then calls these child jobs, which makes the overall workflow much easier to understand and maintain.
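To make the decomposition concrete, here is a minimal Python sketch that is not part of Databricks Workflows itself. Given a task-level DAG and a hypothetical assignment of each task to a child job, it derives the job-level dependencies the parent job would need and checks that they still form a DAG. The check matters because an arbitrary grouping can create a cycle between child jobs even when the task graph has none. All task and job names are made up for illustration.

```python
from collections import defaultdict, deque


def collapse_dag(edges, owner):
    """Map task-level edges onto child-job-level edges.

    edges: list of (upstream_task, downstream_task) pairs.
    owner: dict mapping each task to the (hypothetical) child job that contains it.
    Returns the set of (upstream_job, downstream_job) edges the parent job needs.
    """
    job_edges = set()
    for up, down in edges:
        a, b = owner[up], owner[down]
        if a != b:  # edges between tasks in the same child job stay inside that job
            job_edges.add((a, b))
    return job_edges


def is_acyclic(nodes, edges):
    """Kahn's algorithm: True if the graph admits a topological order."""
    indegree = {n: 0 for n in nodes}
    succ = defaultdict(list)
    for a, b in edges:
        succ[a].append(b)
        indegree[b] += 1
    queue = deque(n for n, d in indegree.items() if d == 0)
    visited = 0
    while queue:
        n = queue.popleft()
        visited += 1
        for m in succ[n]:
            indegree[m] -= 1
            if indegree[m] == 0:
                queue.append(m)
    return visited == len(nodes)


# Hypothetical task graph and the child job each task is assigned to.
edges = [
    ("ingest_orders", "clean_orders"),
    ("ingest_customers", "clean_customers"),
    ("clean_orders", "join_sales"),
    ("clean_customers", "join_sales"),
    ("join_sales", "train_model"),
    ("train_model", "publish_report"),
]
owner = {
    "ingest_orders": "ingestion_job",
    "ingest_customers": "ingestion_job",
    "clean_orders": "ingestion_job",
    "clean_customers": "ingestion_job",
    "join_sales": "transform_job",
    "train_model": "ml_job",
    "publish_report": "ml_job",
}

job_edges = collapse_dag(edges, owner)
print(sorted(job_edges))
print(is_acyclic(set(owner.values()), job_edges))
```

Here six task-level edges collapse into two dependencies between three child jobs, which is the much smaller DAG the parent job has to express.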

Why modularize your workflows?

There are a number of reasons to divide a parent job into smaller chunks. By far the most common one we hear from customers is the need to split a DAG along organizational boundaries, so that different teams in an organization can work on different parts of a workflow. This makes ownership of each part easier to manage, and different teams can even keep the jobs they own in different code repositories. Because each team owns the testing and updates of its child jobs, the parent workflows become more reliable.

Another reason to consider modularization is reusability. When several workflows share common steps, it makes sense to define those steps once in a job and reuse that job as a child job in different parent workflows. Parameters make a reused job flexible enough to fit the needs of each parent workflow. Reusing jobs reduces the maintenance burden, ensures that updates and bug fixes happen in one place, and keeps complex workflows simpler. As we add more control flow capabilities to Workflows in the near future, another scenario we expect to be useful is looping over a child job, passing it different parameters on each iteration. (Note that looping is an advanced control flow feature you will hear more about soon, so stay tuned!)
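As a rough illustration of reuse, the Python sketch below builds two task definitions that point at the same child job but pass it different parameters. The dictionary layout (`task_key`, `run_job_task`, `job_id`, `job_parameters`, `depends_on`) follows our reading of the Databricks Jobs API 2.1 JSON and should be verified against the current API reference. The child job ID, helper name and parameter names are hypothetical.

```python
import json

# Hypothetical ID of a shared child job, e.g. a data-quality check owned by one team.
QUALITY_CHECK_JOB_ID = 123456


def run_job_task(task_key, job_id, job_parameters, depends_on=()):
    """Build a Run Job task entry (hypothetical helper).

    Field names mirror our understanding of the Jobs API 2.1 task schema;
    job parameter values are passed as strings.
    """
    task = {
        "task_key": task_key,
        "run_job_task": {"job_id": job_id, "job_parameters": dict(job_parameters)},
    }
    if depends_on:
        task["depends_on"] = [{"task_key": key} for key in depends_on]
    return task


# Two different parent workflows reuse the same child job with different parameters.
sales_check = run_job_task(
    "check_sales", QUALITY_CHECK_JOB_ID, {"table": "sales.orders", "max_null_pct": "1"}
)
hr_check = run_job_task(
    "check_hr", QUALITY_CHECK_JOB_ID, {"table": "hr.employees", "max_null_pct": "0"}
)

print(json.dumps(sales_check, indent=2))
```

Because both parents reference the child job by ID, a fix to the quality check is made once and picked up by every parent that calls it.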

Implementing modular workflows

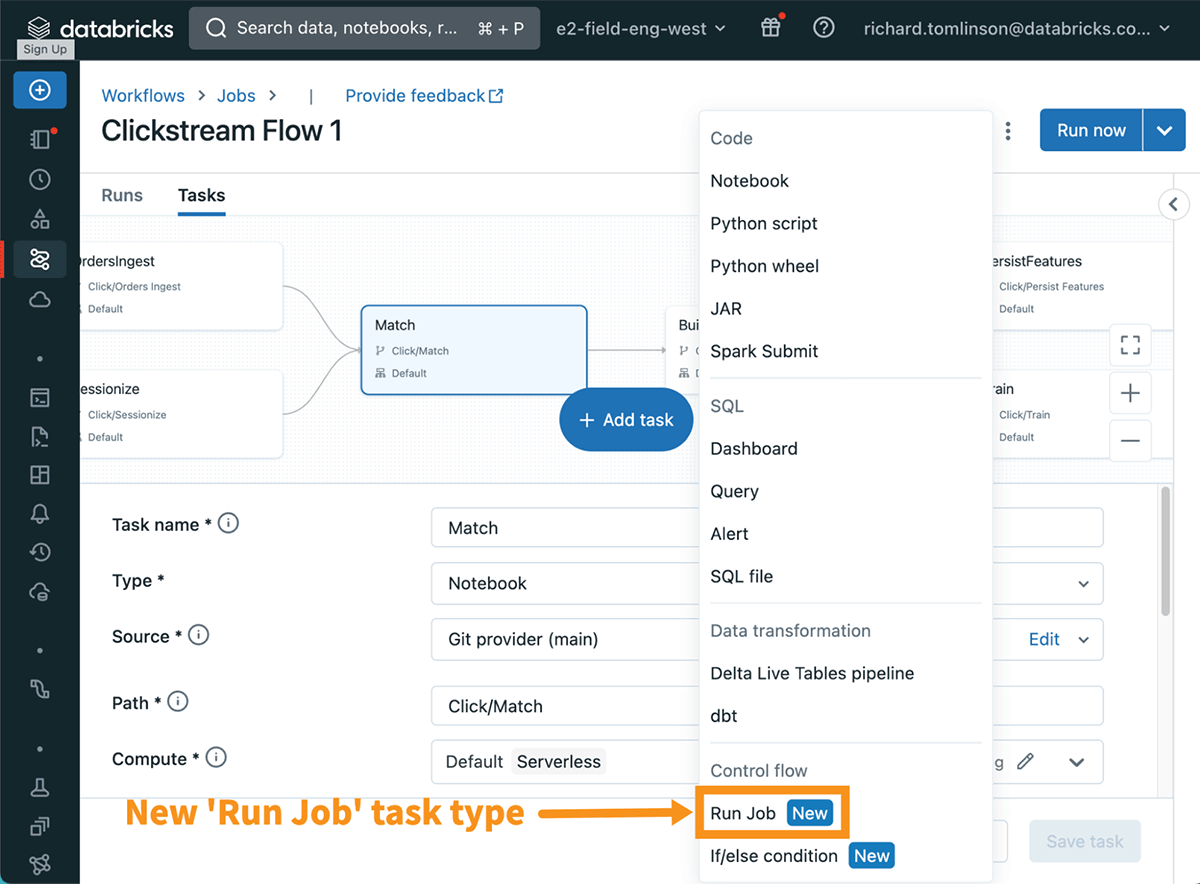

Among the new capabilities announced at the most recent Data + AI Summit is a new task type called "Run Job". It lets Workflows users call a previously defined job as a task, which enables teams to build modular workflows.
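For readers who manage jobs as code rather than in the UI, here is a hedged sketch of a parent job made of two Run Job tasks, where `transform` waits for `ingest`. It prepares, but does not send, a POST to the Jobs API 2.1 `jobs/create` endpoint using only the Python standard library. The `run_job_task`, `job_id`, `task_key` and `depends_on` field names reflect our understanding of the Jobs API 2.1; the workspace host, token, job name and child job IDs are placeholders.

```python
import json
import urllib.request


def build_create_job_request(host, token, settings):
    """Prepare (but do not send) a POST to the Jobs API 2.1 create endpoint."""
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.1/jobs/create",
        data=json.dumps(settings).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder IDs of child jobs previously created by the owning teams.
INGEST_JOB_ID = 111
TRANSFORM_JOB_ID = 222

parent_settings = {
    "name": "sales_pipeline_parent",  # hypothetical job name
    "tasks": [
        # Each Run Job task calls an existing child job by its ID.
        {"task_key": "ingest", "run_job_task": {"job_id": INGEST_JOB_ID}},
        {
            "task_key": "transform",
            "depends_on": [{"task_key": "ingest"}],  # runs after ingest succeeds
            "run_job_task": {"job_id": TRANSFORM_JOB_ID},
        },
    ],
}

req = build_create_job_request("https://<workspace-host>", "<token>", parent_settings)
# Sending it would be: urllib.request.urlopen(req)  (left out so nothing is called)
```

Keeping the parent definition this small is the point of modularization: each child job's internal tasks live in that child job's own definition.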

To learn more about the different task types and how to configure them in the Databricks Workflows UI, please refer to the product docs.

Getting started

The new task type "Run Job" is now generally available in Databricks Workflows. To get started with Workflows:

- Learn more about Databricks Workflows

- Take a tour of Databricks Workflows

- Define and run your first workflow with this quickstart guide