In 2010, a transformative concept took root in the realm of data storage and analytics: the data lake. The term was coined by James Dixon, a back-end Java, data, and business intelligence engineer, and it ushered in a new era in how organizations store, manage, and analyze their data. Data lakes emerged as expansive reservoirs where raw data in its most natural state could commingle freely, offering unprecedented flexibility and scalability.

This article explains what a data lake is, describes its architecture, and explores its diverse use cases. The goal is to provide a comprehensive guide that can serve as a navigational tool for specialists plotting their course in today's data-driven world.

What is a data lake?

A data lake is a centralized repository designed to hold vast volumes of data in its native, raw format — be it structured, semi-structured, or unstructured. A data lake stores data before a specific use case has been identified. This flexibility makes it easier to accommodate various data types and analytics needs as they evolve over time. The term data lake itself is metaphorical, evoking an image of a large body of water fed by multiple streams, each bringing new data to be stored and analyzed.

Data warehouses rely on hierarchical structures and predefined schemas. A data lake, by contrast, uses a flat architecture, typically built on object storage. In an object storage model, each piece of data is kept as an object with a unique identifier and metadata tags, which streamlines data retrieval and enhances performance.
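To make the flat-namespace idea concrete, here is a minimal in-memory sketch, assuming nothing about any particular cloud service. The class and method names (`FlatObjectStore`, `put`, `get`, `find`) are hypothetical. Each object receives a unique identifier and free-form metadata tags, and retrieval works by ID or by tag rather than by walking a folder hierarchy or consulting a fixed schema.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    object_id: str                                 # unique identifier assigned at write time
    data: bytes                                    # raw payload, stored unchanged
    metadata: dict = field(default_factory=dict)   # free-form descriptive tags


class FlatObjectStore:
    """Toy object store: one flat namespace, no folders, no schemas."""

    def __init__(self):
        self._objects = {}

    def put(self, data: bytes, **tags) -> str:
        object_id = str(uuid.uuid4())
        self._objects[object_id] = StoredObject(object_id, data, dict(tags))
        return object_id

    def get(self, object_id: str) -> bytes:
        return self._objects[object_id].data

    def find(self, **tags) -> list:
        """Return IDs of objects whose metadata matches all given tags."""
        return [
            obj.object_id
            for obj in self._objects.values()
            if all(obj.metadata.get(key) == value for key, value in tags.items())
        ]


store = FlatObjectStore()
log_id = store.put(b'{"user": 42, "event": "click"}', source="web", format="json")
img_id = store.put(b"\x89PNG...", source="mobile", format="png")
print(store.find(source="web") == [log_id])  # True
```

Note that the JSON log and the image sit side by side in the same namespace; only their metadata tells them apart.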

Watch our video explaining how data engineering works. This will simplify further reading.

https://www.youtube.com/watch?v=qWru-b6m030&t=1s&ab_channel=AltexSoft

Getting back to the topic, the key thing to understand about a data lake isn't its construction but rather its capabilities. It is a versatile platform for exploring, refining, and analyzing petabytes of information that continually flow in from various data sources.

Who needs a data lake?

If the intricacies of big data are becoming too much for your existing systems to handle, a data lake might be the solution you're seeking.

Organizations that commonly benefit from data lakes include:

- those that plan to build a strong analytics culture, where data is first stored and then made available for various teams to derive their own insights;

- businesses seeking advanced insights through analytics experiments or machine learning models; and

- organizations conducting extensive research with the need to consolidate data from multiple domains for complex analysis.

If your organization fits into one of these categories and you're considering implementing advanced data management and analytics solutions, keep reading to learn how data lakes work and how they can benefit your business.

Data lake vs. data warehouse

Before diving deeper into the intricacies of data lake architecture, it's essential to highlight the distinctions between a data warehouse and a data lake.

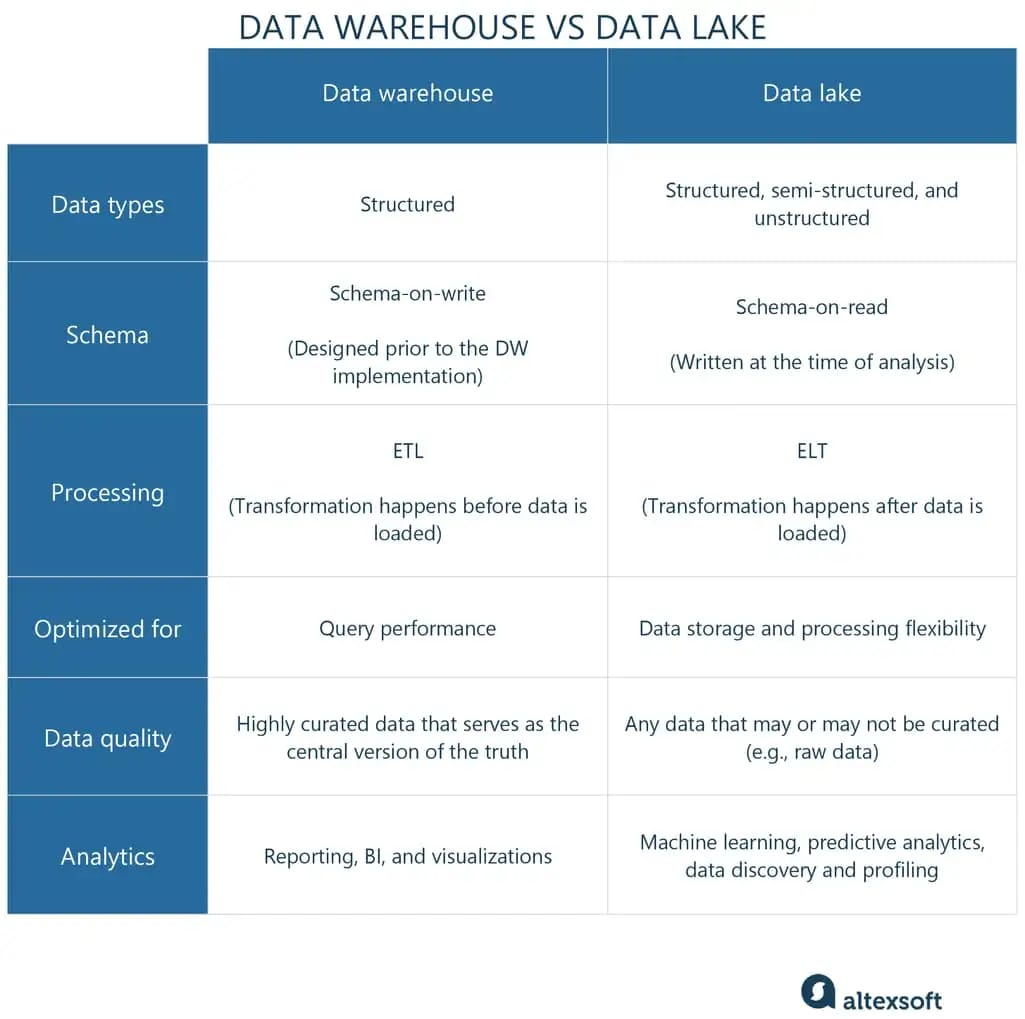

Data warehouse vs. data lake in a nutshell.

Traditional data warehouses have been around for decades. Initially designed to support analytics, these systems allow organizations to query their data for insights, trends, and decision-making.

Data warehouses require a schema, a formal structure for how the data is organized, to be imposed upfront. Because of this schema-on-write approach, data warehouses are less flexible. However, they can handle thousands of daily queries for tasks such as reporting and forecasting business conditions.
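Schema-on-write means every record is checked against the table definition before it is stored. The sketch below illustrates this with a hypothetical `ORDERS_SCHEMA` and a plain Python list standing in for a warehouse table; real warehouses enforce this through DDL and typed columns, not application code like this.

```python
# Hypothetical warehouse table schema: column name -> required Python type
ORDERS_SCHEMA = {"order_id": int, "customer": str, "amount": float}

orders_table = []  # stands in for a warehouse table


def insert_order(record: dict) -> None:
    """Schema-on-write: validate the record before it is stored."""
    if set(record) != set(ORDERS_SCHEMA):
        raise ValueError(f"columns {sorted(record)} do not match schema")
    for column, expected_type in ORDERS_SCHEMA.items():
        if not isinstance(record[column], expected_type):
            raise TypeError(f"{column} must be {expected_type.__name__}")
    orders_table.append(record)


insert_order({"order_id": 1, "customer": "acme", "amount": 99.5})
try:
    # Rejected at write time: the extra "coupon" column is not in the schema
    insert_order({"order_id": 2, "customer": "globex", "amount": 10.0, "coupon": "X1"})
except ValueError as err:
    print("rejected:", err)
```

The rigidity cuts both ways: bad data never reaches the table, but any new field requires a schema change first.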

Another distinction is the choice between ETL and ELT. In data warehouses, data is usually extracted and transformed before it is loaded into the warehouse (ETL). Many organizations also deploy data marts, which are dedicated storage repositories for specific business lines or workgroups.

Unlike traditional DWs, cloud data warehouses like Snowflake, BigQuery, and Redshift come equipped with advanced features; learn more about the differences in our dedicated article.

On the other hand, data lakes are a more recent innovation designed to handle modern data types like weblogs, clickstreams, and social media activity, often in semi-structured or unstructured formats.

Unlike data warehouses, data lakes allow a schema-on-read approach, enabling greater flexibility in data storage. This makes them well suited to more advanced analytics activities, including real-time analytics and machine learning. While flexible, they may require more extensive management to ensure data quality and security. Also, data lakes support ELT (Extract, Load, Transform) processes, in which transformation can happen after the data is loaded into a centralized store.
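With schema-on-read, raw records are stored as they arrive, and each consumer applies its own schema at query time. The minimal sketch below assumes JSON-lines events held in a Python list; the function name `read_with_schema` is hypothetical and stands in for what a query engine does when it projects raw files onto a table definition.

```python
import json

# Raw events land in the lake as-is; records need not share a structure.
raw_events = [
    '{"user": "u1", "action": "view", "page": "/home"}',
    '{"user": "u2", "action": "purchase", "amount": 25.0}',
    '{"user": "u1", "action": "purchase", "amount": 40.0, "coupon": "SPRING"}',
]


def read_with_schema(lines, schema):
    """Schema-on-read: project each raw record onto the schema at query time.

    Missing fields become None; fields outside the schema are ignored.
    """
    for line in lines:
        record = json.loads(line)
        yield {column: record.get(column) for column in schema}


# Two consumers apply two different schemas to the same raw data.
purchases = [
    r for r in read_with_schema(raw_events, ["user", "action", "amount"])
    if r["action"] == "purchase"
]
page_views = list(read_with_schema(raw_events, ["user", "page"]))
print(sum(r["amount"] for r in purchases))  # 65.0
```

Nothing was rejected on write: the `coupon` field is simply ignored by both readers until someone defines a schema that needs it.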

A data lakehouse may be an option if you want the best of both worlds. Make sure to check out our dedicated article.

Data lake architecture

This section will explore data architecture using a data lake as a central repository. While we focus on the core components, such as the ingestion, storage, processing, and consumption layers, it's important to note that modern data stacks can be designed with various architectural choices. Both storage and compute resources can reside on-premises, in the cloud, or in a hybrid configuration, offering many design possibilities. Understanding these key layers and how they interact will help you tailor an architecture that best suits your organization's needs.

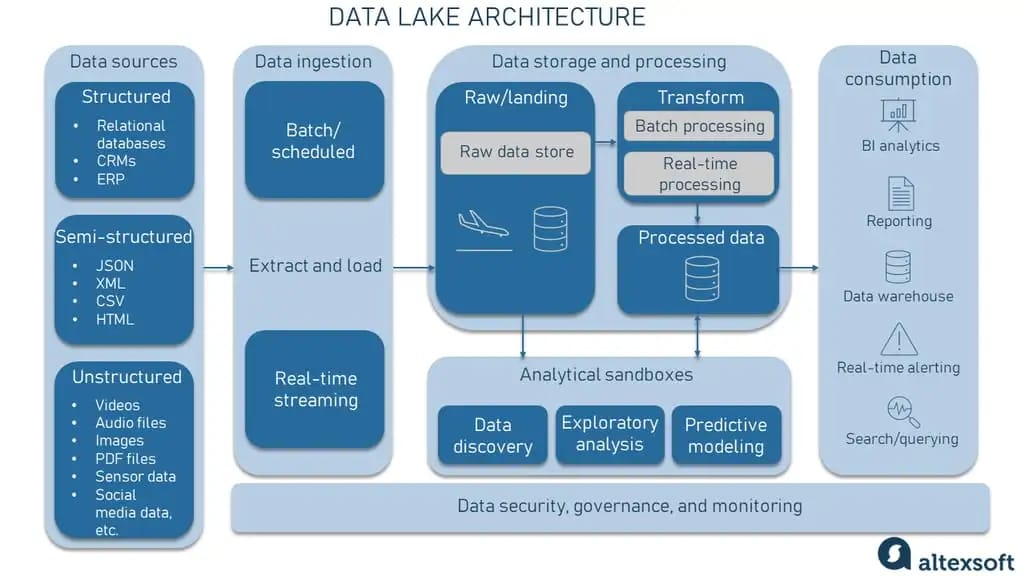

An example of a data lake architecture.

Data sources

In a data lake architecture, the data journey starts at the source. Data sources can be broadly classified into three categories.

Structured data sources. These are the most organized forms of data, often originating from relational databases and tables where the structure is clearly defined. Common structured data sources include SQL databases like MySQL, Oracle, and Microsoft SQL Server.

Semi-structured data sources. This type of data has some level of organization but doesn't fit neatly into tabular structures. Examples include HTML, XML, and JSON files. While these may have hierarchical or tagged structures, they require further processing to become fully structured.

Unstructured data sources. This category includes a diverse range of data types that do not have a predefined structure. Examples include sensor data from industrial Internet of Things (IoT) applications, video and audio streams, images, and social media content such as tweets or Facebook posts.

Understanding the data source type is crucial as it impacts subsequent steps in the data lake pipeline, including data ingestion methods and processing requirements.

Data ingestion

Data ingestion is the process of importing data into the data lake from various sources. It serves as the gateway through which data enters the lake, either in batch or real-time modes, before undergoing further processing.

Batch ingestion is a scheduled, interval-based method of data importation. For example, it might be set to run nightly or weekly, transferring large chunks of data at a time. Tools often used for batch ingestion include Apache NiFi, Flume, and traditional ETL tools like Talend and Microsoft SSIS.
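A batch job typically picks up whatever a source system has exported since the last run and lands it, unchanged, in the lake. The sketch below assumes a local directory as the source and another as the lake; the function name `run_batch_ingestion` and the `landing/<source>/ingest_date=YYYY-MM-DD/` layout are hypothetical conventions, and in practice a scheduler or a tool such as NiFi would trigger the job.

```python
import shutil
import tempfile
from datetime import date
from pathlib import Path


def run_batch_ingestion(source_dir: Path, lake_root: Path, run_date: date) -> list:
    """Copy every file from source_dir into a date-partitioned landing zone.

    Meant to be triggered on a schedule (for example, nightly).
    """
    target = lake_root / "landing" / source_dir.name / f"ingest_date={run_date.isoformat()}"
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(source_dir.iterdir()):
        if path.is_file():
            shutil.copy2(path, target / path.name)  # files are copied unchanged
            copied.append(target / path.name)
    return copied


# Usage with throwaway directories standing in for a source system and the lake
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "crm_export"
    source.mkdir()
    (source / "customers.csv").write_text("id,name\n1,Acme\n")
    (source / "orders.csv").write_text("id,amount\n1,99.5\n")
    files = run_batch_ingestion(source, Path(tmp) / "lake", date(2024, 1, 15))
    print([f.relative_to(Path(tmp) / "lake").as_posix() for f in files])
```

Writing each run into its own date folder keeps reruns and backfills easy to reason about.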

Real-time ingestion immediately brings data into the data lake as it is generated. This is crucial for time-sensitive applications like fraud detection or real-time analytics. Apache Kafka and Amazon Kinesis are popular tools for handling real-time data ingestion.
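In contrast to the batch case, a streaming pipeline handles each event the moment it arrives. The sketch below does not use a Kafka or Kinesis client; a Python generator stands in for the stream, a list stands in for the raw zone, and the large-transaction rule is a hypothetical placeholder for a real fraud check.

```python
import json
import time
from typing import Iterable, Iterator


def event_stream() -> Iterator[dict]:
    """Stand-in for a real source such as a Kafka topic or Kinesis stream."""
    for i, amount in enumerate([12.0, 980.0, 35.5]):
        yield {"txn_id": i, "amount": amount, "ts": time.time()}


def ingest_stream(events: Iterable[dict], sink: list, alert_threshold: float) -> list:
    """Append each event to the lake sink as soon as it arrives.

    Because every record is available immediately, a time-sensitive check
    (here, a naive large-transaction flag) can run on it right away.
    """
    alerts = []
    for event in events:
        sink.append(json.dumps(event))          # land the raw event immediately
        if event["amount"] > alert_threshold:   # hypothetical fraud rule
            alerts.append(event["txn_id"])
    return alerts


raw_zone = []
flagged = ingest_stream(event_stream(), raw_zone, alert_threshold=500.0)
print(len(raw_zone), flagged)  # 3 [1]
```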


To support both batch and streaming ingestion, the ingestion layer often uses multiple protocols, APIs, or connection methods to link with the various internal and external data sources discussed earlier. This variety of protocols ensures a smooth data flow, catering to the heterogeneous nature of the data sources.

As mentioned earlier, data lakes usually follow the ELT paradigm, in which transformation happens after ingestion. Data is first extracted from the source and loaded into the data lake's raw or landing zone. Here, lightweight transformations might be applied, but the data often remains in its original format. Subsequent transformation into a more analyzable form occurs within the data lake itself.
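The order of the three ELT steps can be sketched in a few lines. This is a minimal illustration under simple assumptions: an in-memory CSV string plays the source export, and a dictionary with hypothetical `raw` and `trusted` keys plays the lake's zones. The key point is that the raw copy is loaded untouched and stays available after the transformation runs.

```python
import csv
import io

# Extract: pull data from a source system (here, a CSV export held in memory)
source_export = "order_id,amount,currency\n1,100,usd\n2,250,EUR\n3,,usd\n"

lake = {"raw": {}, "trusted": {}}

# Load: store the extract unchanged in the raw/landing zone
lake["raw"]["orders/2024-01-15.csv"] = source_export


# Transform: later, inside the lake, derive a cleaner dataset from the raw copy
def transform_orders(raw_csv: str) -> list:
    rows = []
    for row in csv.DictReader(io.StringIO(raw_csv)):
        if not row["amount"]:  # drop records with a missing amount
            continue
        rows.append({
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "currency": row["currency"].upper(),  # normalize currency codes
        })
    return rows


lake["trusted"]["orders"] = transform_orders(lake["raw"]["orders/2024-01-15.csv"])
print(lake["trusted"]["orders"])
```

Because the raw file is preserved, the transformation can be rewritten and rerun later without going back to the source system.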

Data storage and processing

The data storage and processing layer is where the ingested data resides and undergoes transformations to make it more accessible and valuable for analysis. This layer is generally divided into different zones for ease of management and workflow efficiency.

Raw data store section. Initially, ingested data lands in what is commonly referred to as the raw or landing zone. At this stage, the data is in its native format—whether that be structured, semi-structured, or unstructured. The raw data store acts as a repository where data is staged before any form of cleansing or transformation. This zone utilizes storage solutions like Hadoop HDFS, Amazon S3, or Azure Blob Storage.

Transformation section. After residing in the raw zone, data undergoes various transformations. This section is highly versatile, supporting both batch and stream processing.

Here are a few transformation processes that happen at this layer.

- The data cleansing process involves removing or correcting inaccurate records, discrepancies, or inconsistencies in the data.

- Data enrichment adds value to the original data set by incorporating additional information or context.

- Normalization modifies the data into a common format, ensuring consistency.

- Structuring often involves breaking down unstructured or semi-structured data into a structured form suitable for analysis.
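The four transformations listed above can be chained into a small pipeline. The sketch below assumes pipe-delimited log lines as the raw input and a hypothetical country-to-region lookup as enrichment data; the function names mirror the list and are illustrative, not taken from any particular tool.

```python
from typing import Optional

# Hypothetical raw records: pipe-delimited log lines (semi-structured text)
raw_lines = [
    "2024-01-15T10:00:00Z|u1|de|19.99",
    "2024-01-15T10:05:00Z|u2|US|not-a-number",  # bad amount
    "2024-01-15T10:07:00Z|u3|us|5.00",
]

# Hypothetical reference data used for enrichment
COUNTRY_TO_REGION = {"DE": "EMEA", "US": "AMER"}


def structure(line: str) -> dict:
    """Structuring: split a raw line into named fields."""
    ts, user, country, amount = line.split("|")
    return {"ts": ts, "user": user, "country": country, "amount": amount}


def cleanse(record: dict) -> Optional[dict]:
    """Cleansing: drop records whose amount cannot be parsed."""
    try:
        record["amount"] = float(record["amount"])
    except ValueError:
        return None
    return record


def normalize(record: dict) -> dict:
    """Normalization: bring country codes into one common format."""
    record["country"] = record["country"].strip().upper()
    return record


def enrich(record: dict) -> dict:
    """Enrichment: add context from reference data."""
    record["region"] = COUNTRY_TO_REGION.get(record["country"], "UNKNOWN")
    return record


trusted = []
for line in raw_lines:
    record = cleanse(structure(line))
    if record is not None:
        trusted.append(enrich(normalize(record)))

print([(r["user"], r["country"], r["region"]) for r in trusted])
# [('u1', 'DE', 'EMEA'), ('u3', 'US', 'AMER')]
```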

After these transformations, the data becomes what's often called trusted data. It's more reliable, clean, and suitable for various analytics and machine learning models.

Processed data section. Post-transformation, the trusted data can be moved to another zone known as the refined or conformed data zone. Additional transformation and structuring may occur to prepare the data for specific business use cases. The refined data is what analysts and data scientists will typically interact with. It's more accessible and easier to work with, making it ideal for analytics, business intelligence, and machine learning tasks. Tools like Dremio or Presto may be used for querying this refined data.
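Refining usually means shaping trusted data into a table built for one business question. As a minimal sketch, assuming a handful of hypothetical trusted sales records, the function below produces revenue per region, roughly what an analyst might otherwise express in SQL as `SELECT region, SUM(amount) FROM sales GROUP BY region`.

```python
from collections import defaultdict

# Trusted (cleaned) records, e.g. the output of the transformation stage
trusted_sales = [
    {"region": "EMEA", "product": "A", "amount": 19.99},
    {"region": "AMER", "product": "A", "amount": 5.00},
    {"region": "EMEA", "product": "B", "amount": 30.01},
]


def build_revenue_by_region(records):
    """Refine trusted data into a small, use-case-specific table."""
    totals = defaultdict(float)
    for r in records:
        totals[r["region"]] += r["amount"]
    # Round to cents and sort so the table is ready for reporting
    return sorted(
        ({"region": region, "revenue": round(total, 2)} for region, total in totals.items()),
        key=lambda row: row["region"],
    )


refined = build_revenue_by_region(trusted_sales)
print(refined)
# [{'region': 'AMER', 'revenue': 5.0}, {'region': 'EMEA', 'revenue': 50.0}]
```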

In summary, this layer is where the data transitions from raw to trusted and eventually to refined or conformed, each with its own set of uses and tools. This streamlined data pipeline ensures organizations can derive actionable insights efficiently and effectively.

Analytical sandboxes

The analytical sandboxes serve as isolated environments for data exploration, facilitating activities like discovery, machine learning, predictive modeling, and exploratory data analysis. These sandboxes are deliberately separated from the main data storage and transformation layers to ensure that experimental activities do not compromise the integrity or quality of the data in other zones.

Both raw and processed data can be ingested into the sandboxes. Raw data can be useful for exploratory activities where original context might be critical, while processed data is typically used for more refined analytics and machine learning models.

Data discovery. This is the initial step where analysts and data scientists explore the data to understand its structure, quality, and potential value. This often involves descriptive statistics and data visualization.

Machine learning and predictive modeling. Once a solid understanding of the data is achieved, machine learning algorithms may be applied to create predictive or classification models. This phase might involve a range of ML libraries like TensorFlow, PyTorch, or scikit-learn.

Exploratory data analysis (EDA). During EDA, statistical graphics, plots, and information tables are employed to analyze the data and understand the variables' relationships, patterns, or anomalies without making any assumptions.
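A first pass at data discovery and EDA often starts with descriptive statistics and a quick anomaly check. The sketch below uses only Python's `statistics` module on a hypothetical sample of daily order counts; the median-absolute-deviation rule and its factor of 5 are illustrative choices, not a standard threshold.

```python
import statistics

# Hypothetical sample pulled into a sandbox: daily order counts
daily_orders = [120, 132, 128, 125, 410, 130, 127]

summary = {
    "count": len(daily_orders),
    "mean": statistics.mean(daily_orders),
    "median": statistics.median(daily_orders),
    "stdev": statistics.stdev(daily_orders),
}

# Flag values far from the median using the median absolute deviation (MAD),
# a rough check that, unlike the mean, is not dragged upward by the outlier
mad = statistics.median(abs(x - summary["median"]) for x in daily_orders)
anomalies = [x for x in daily_orders if abs(x - summary["median"]) > 5 * mad]

print(summary["median"], anomalies)  # 128 [410]
```

The gap between the mean (about 167) and the median (128) is itself a discovery-stage hint that something unusual is in the data.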

Tools like Jupyter Notebooks, RStudio, or specialized software like Dataiku or KNIME are often used within these sandboxes for creating workflows, scripting, and running analyses.

The sandbox environment offers the advantage of testing hypotheses and models without affecting the main data flow, thus encouraging a culture of experimentation and agile analytics within data-driven organizations.

Data consumption

Finally, we reach the data consumption layer. This is where the fruits of all the preceding efforts are realized. The polished, reliable data is now ready for end users and is exposed via Business Intelligence tools such as Tableau or Power BI. It's also where specialized roles like data analysts, business analysts, and decision-makers come into play, using the processed data to drive business decisions.

Crosscutting governance, security, and monitoring layer

An overarching layer of governance, security, monitoring, and stewardship is integral to the entire data flow within a data lake. This layer does not come out of the box; it is typically implemented through a combination of configurations, third-party tools, and specialized teams.

Governance establishes and enforces rules, policies, and procedures for data access, quality, and usability. This ensures information consistency and responsible use. Tools like Apache Atlas or Collibra can add this governance layer, enabling robust policy management and metadata tagging (contextual information about the stored data).
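Governance tools keep metadata about each dataset and check it against policies. The sketch below is not the API of Apache Atlas, Collibra, or any other product; `CatalogEntry`, the three policy functions, and the `restricted/` zone prefix are hypothetical, chosen only to show the pattern of catalog metadata plus automated policy checks.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Hypothetical catalog record: metadata about a dataset, not the data itself."""
    path: str
    owner: str = ""
    classification: str = ""  # e.g. "public", "internal", "pii"
    tags: set = field(default_factory=set)


# Each policy returns an error message, or None when the entry complies
def has_owner(entry):
    return None if entry.owner else "missing owner"


def known_classification(entry):
    if entry.classification in {"public", "internal", "pii"}:
        return None
    return "unknown classification"


def pii_is_restricted(entry):
    if entry.classification == "pii" and not entry.path.startswith("restricted/"):
        return "PII stored outside the restricted zone"
    return None


POLICIES = [has_owner, known_classification, pii_is_restricted]


def audit_catalog(entries):
    report = {}
    for entry in entries:
        messages = [policy(entry) for policy in POLICIES]
        report[entry.path] = [m for m in messages if m is not None]
    return report


catalog = [
    CatalogEntry("refined/sales_by_region", owner="bi-team",
                 classification="internal", tags={"finance"}),
    CatalogEntry("raw/crm/customers", classification="pii"),
]
print(audit_catalog(catalog))
```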

Security protocols safeguard against unauthorized data access and ensure compliance with data protection regulations. Solutions such as Varonis or McAfee Total Protection for Data Loss Prevention can be integrated to fortify this aspect of your data lake.

Monitoring and ELT (Extract, Load, Transform) processes handle the oversight and flow of data from its raw form into more usable formats. Tools like Talend or Apache NiFi specialize in streamlining these processes while maintaining performance standards.

Stewardship involves active data management and oversight, often performed by specialized teams or designated data owners. Platforms like Alation or Waterline Data assist in this role by tracking who adds, modifies, or deletes data and managing the metadata.
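Tracking who adds, modifies, or deletes data boils down to an append-only audit trail. The minimal sketch below wraps a dictionary of datasets in a hypothetical `AuditedLake` class that records every change; it illustrates the idea only and does not reflect how Alation or any other platform implements it.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    timestamp: datetime
    user: str
    action: str  # "add", "modify", or "delete"
    dataset: str


class AuditedLake:
    """Hypothetical wrapper that records every change to the datasets it holds."""

    def __init__(self):
        self.datasets = {}
        self.audit_log = []

    def _record(self, user, action, dataset):
        self.audit_log.append(AuditEvent(datetime.now(timezone.utc), user, action, dataset))

    def write(self, user, dataset, rows):
        action = "modify" if dataset in self.datasets else "add"
        self.datasets[dataset] = rows
        self._record(user, action, dataset)

    def delete(self, user, dataset):
        del self.datasets[dataset]
        self._record(user, "delete", dataset)

    def history(self, dataset):
        return [(e.user, e.action) for e in self.audit_log if e.dataset == dataset]


audited = AuditedLake()
audited.write("alice", "trusted/orders", [{"id": 1}])
audited.write("bob", "trusted/orders", [{"id": 1}, {"id": 2}])
audited.delete("carol", "trusted/orders")
print(audited.history("trusted/orders"))
# [('alice', 'add'), ('bob', 'modify'), ('carol', 'delete')]
```

The history survives even after the dataset itself is deleted, which is exactly what a data steward needs when investigating a change.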

Together, these components form a critical layer that not only supports but enhances the capabilities of a data lake, ensuring its effectiveness and security across the entire architecture.

You can find out more about these and other concepts in our data-related articles:

Data Governance: Concept, Models, Framework, Tools, and Implementation Best Practices

Implementing a Data Management Strategy: Key Processes, Main Platforms, and Best Practices

Metadata Management: Process, Tools, Use Cases, and Best Practices

Popular data lake platforms: Powering your architecture

In the context of data lake architecture, it's essential to consider the platforms on which these data lakes are built. Here are some of the major players in the field, each offering robust data lake services.

Amazon Web Services (AWS) for data lakes

Amazon Web Services (AWS) offers a robust data lake architecture anchored by its highly available and low-latency Amazon S3 storage service. S3 is particularly attractive for those looking to take advantage of AWS's expansive ecosystem, including complementary services like Amazon Aurora for relational databases.

AWS Lake Formation architecture. Source: AWS

Integrated ecosystem. One of S3's strong suits is its seamless integration with various AWS services. AWS Glue provides robust data cataloging, while Amazon Athena offers ad hoc querying capabilities. Amazon Redshift serves as the go-to data warehousing solution within the ecosystem. This well-integrated set of services streamlines data lake management but can be complex and may require specialized skills for effective navigation.

Advanced metadata management. Although S3 itself may lack some advanced metadata capabilities, AWS resolves this through AWS Glue or other metastore/catalog solutions. These services allow for more intricate data management tasks, making data easily searchable and usable.

Data lake on AWS. This feature automatically sets up core AWS services to aid in data tagging, searching, sharing, transformation, analysis, and governance. The platform includes a user-friendly console for dataset search and browsing, simplifying data lake management for business users.

AWS provides a comprehensive yet complex set of tools and services for building and managing data lakes, making it a versatile choice for organizations with varying needs and expertise levels.

Azure Data Lake Storage

Azure Data Lake Storage (ADLS) is a feature-rich data lake solution from Microsoft Azure, designed for enterprises already invested in, or interested in, Azure services. Rather than being a separate service, Data Lake Storage Gen2 is built on Azure Blob Storage, extending it with a suite of data management capabilities.

Enterprise-grade security. The platform provides built-in data encryption, enabling organizations to secure their data at rest. Furthermore, it offers granular access control policies and comprehensive auditing capabilities, which are essential for meeting stringent security and compliance standards.

Azure Private Link support. ADLS supports Azure Private Link, a feature that allows secure and private access to data lakes via a private network connection.

Integration and versatility. Azure Data Lake Storage integrates seamlessly with operational stores and data warehouses, allowing for a cohesive data management strategy.

High workload capability. The platform can handle high workloads, allowing users to run advanced analyses and store large volumes of data.

Snowflake for data lakes

Snowflake has redefined the data lake landscape with its cross-cloud platform, emerging as a top vendor in the field. Unlike traditional data lakes, Snowflake brands itself as a data cloud, breaking down data silos and enabling seamless integration of structured, semi-structured, and unstructured data. The platform is known for its speed and reliability, powered by an elastic processing engine that eliminates concurrency issues and resource contention.

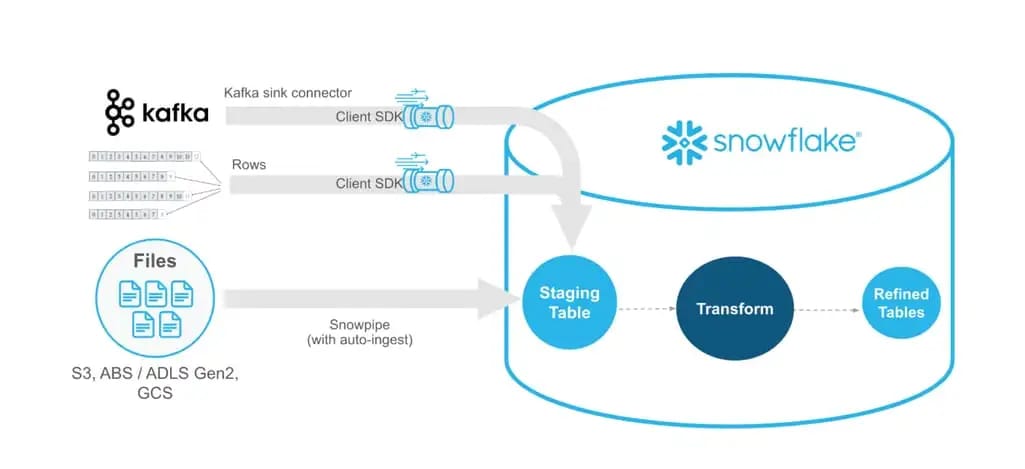

Data load with Snowpipe. Source: Snowflake

Key to Snowflake's success is its focus on flexibility and simplicity; data professionals often describe it as a platform that "just works." It offers advanced features like Snowpark and Snowpipe, which support multilanguage programming and continuous data loading, respectively. Its storage capabilities include automatic micro-partitioning, encryption at rest and in transit, and compatibility with existing cloud object storage, which avoids the need to move data.

Learn more about the pros and cons of Snowflake in our dedicated article.

Exploring data lake use cases and real-world examples

Data lakes are versatile solutions catering to diverse data storage and analytical needs. Below are some key use cases and real-world examples that underscore data lakes' growing importance and broad applicability.

Real-time analytics

Real-time analytics involves the analysis of data as soon as it becomes available. This is critical in finance, where stock prices fluctuate in seconds, and in eCommerce, where real-time recommender systems can boost sales.

Data lakes excel in real-time analytics because they can scale to accommodate high volumes of incoming data, support data diversity, offer low-latency retrieval, integrate well with stream processing frameworks like Apache Kafka, and provide flexibility with schema-on-read capabilities.

For example, Uber uses data lakes to enable real-time analytics that support route optimization, pricing strategies, and fraud detection. This real-time processing allows Uber to make immediate data-driven decisions.

Machine learning

AI and ML initiatives require extensive datasets to train models for prediction and automation. The ability to store raw data alongside processed data enables sophisticated analytics models. Data lakes provide the storage capacity for these workloads and integrate with the compute engines needed to process them.

Airbnb leverages its data lake to store and process the enormous amounts of data needed for its machine-learning models that predict optimal pricing and enhance user experiences.

By the way, if you want to know more about the story of Airbnb, watch our video explaining how this company creates the future of travel.

IoT analytics

The Internet of Things (IoT) produces vast amounts of data from devices like sensors, cameras, and machinery. Data lakes can handle this volume and variety, allowing industries to make more informed decisions.

General Electric uses its industrial data lake to handle real-time IoT device data, enabling optimized manufacturing processes and predictive maintenance in the aviation and healthcare sectors. Also, the GE subsidiary — GE Healthcare (GEHC) — adopted a new data lakehouse architecture using AWS services with the Amazon S3 data lake to store raw enterprise and event data.

Advanced search and personalization

Enhanced search capabilities and personalized recommendations require analyzing user behavior and preferences, which can be complex and varied. Data lakes support this by allowing companies to store diverse datasets that can be analyzed for these specific functions. For example, Netflix uses a data lake to store viewer data and employs advanced analytics to offer more personalized viewing recommendations.

Maximizing your data lake's potential: Key practices to follow

To ensure your data lake is an asset rather than a liability, adhere to the following best practices.

Strategic planning. Establish your business goals and pinpoint the data types you'll be storing. This paves the way for a well-organized data lake.

Quality assurance. Integrate validation checks and data scrubbing procedures to uphold the reliability and value of your stored data.

Performance enhancement. Harness strategies such as indexing, partitioning, and caching to boost query performance and overall data lake responsiveness.
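Partitioning pays off because a query can skip every partition that cannot contain matching rows, a technique known as partition pruning. The sketch below assumes events grouped in memory by a hypothetical `event_date` key, mirroring a path layout like `events/event_date=2024-01-15/`; it illustrates partitioning only, not indexing or caching.

```python
from collections import defaultdict

# Hypothetical events, to be partitioned by date
events = [
    {"event_date": "2024-01-14", "user": "u1"},
    {"event_date": "2024-01-15", "user": "u2"},
    {"event_date": "2024-01-15", "user": "u3"},
    {"event_date": "2024-01-16", "user": "u4"},
]

partitions = defaultdict(list)
for e in events:
    partitions[e["event_date"]].append(e)


def query_users(partitions, start, end):
    """Read only partitions whose key falls in [start, end] (partition pruning).

    ISO date strings sort in calendar order, so plain string comparison works.
    """
    scanned = [key for key in partitions if start <= key <= end]
    users = [e["user"] for key in scanned for e in partitions[key]]
    return users, scanned


users, scanned = query_users(partitions, "2024-01-15", "2024-01-15")
print(users, f"scanned {len(scanned)} of {len(partitions)} partitions")
# ['u2', 'u3'] scanned 1 of 3 partitions
```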

Robust security. Regularly perform audits and enforce role-based access controls. Also, utilize encryption-at-rest to safeguard sensitive data.
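Role-based access control maps users to roles and roles to permissions, so access is granted by job function rather than person by person. In the minimal sketch below, the role names, the user assignments, and the convention that a dataset's zone is the first segment of its path are all hypothetical.

```python
# Hypothetical role definitions: role -> zones it may read
ROLE_PERMISSIONS = {
    "data_engineer": {"raw", "trusted", "refined"},
    "data_scientist": {"trusted", "refined", "sandbox"},
    "business_analyst": {"refined"},
}

USER_ROLES = {"alice": "data_engineer", "dan": "business_analyst"}


def can_read(user: str, dataset_path: str) -> bool:
    """Grant access if the user's role covers the dataset's zone (first path segment)."""
    role = USER_ROLES.get(user)
    if role is None:
        return False  # unknown users get no access by default
    zone = dataset_path.split("/", 1)[0]
    return zone in ROLE_PERMISSIONS.get(role, set())


print(can_read("dan", "refined/sales_by_region"))  # True
print(can_read("dan", "raw/crm/customers"))        # False
```

Denying unknown users by default keeps a missing role assignment from silently exposing data.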

By following these practices, you position your data lake to provide meaningful insights and support informed decision-making across your organization.