To some, the word Apache may bring images of Native American tribes celebrated for their tenacity and adaptability. On the other hand, the term spark often brings to mind a tiny particle that, despite its size, can start an enormous fire. These seemingly unrelated terms unite within the sphere of big data, representing a processing engine that is both enduring and powerfully effective — Apache Spark.

This article will examine Apache Spark's architecture, assess its advantages and disadvantages, compare it with other big data technologies, and give you a path to familiarity with this impactful instrument. Whether you're a data scientist, software engineer, or big data enthusiast, get ready to explore the universe of Apache Spark and learn ways to use its strengths to the fullest.

What is Apache Spark?

Maintained by the Apache Software Foundation, Apache Spark is an open-source, unified engine designed for large-scale data analytics. Its flexibility allows it to operate on single-node machines and large clusters, serving as a multi-language platform for executing data engineering, data science, and machine learning tasks. With its native support for in-memory distributed processing and fault tolerance, Spark empowers users to build complex, multi-stage data pipelines with relative ease and efficiency.


The building blocks of Apache Spark

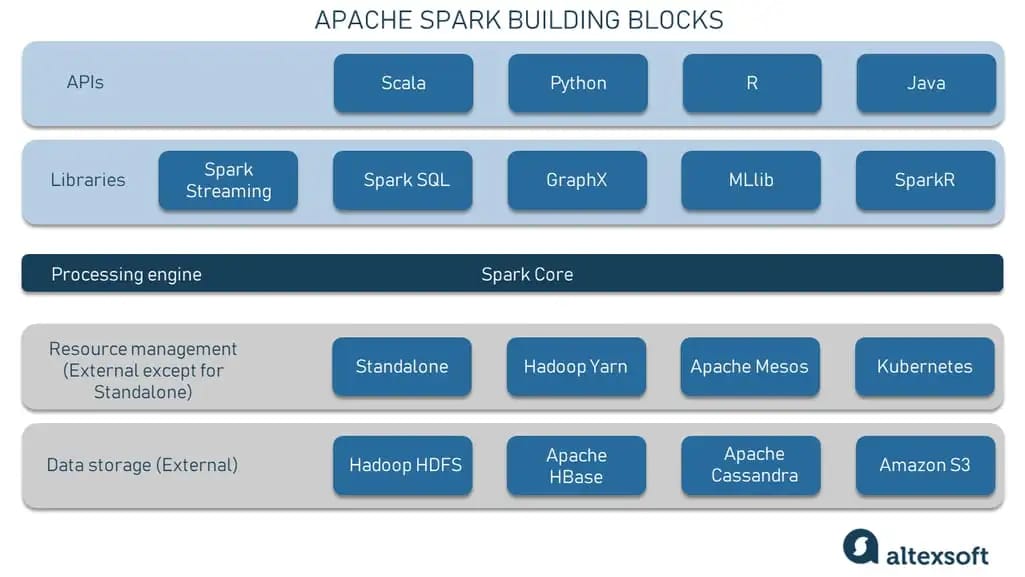

Apache Spark comprises a suite of libraries and tools designed for data analysis, machine learning, and graph processing on large-scale data sets. These components interact seamlessly with each other, making Spark a versatile and comprehensive platform for processing huge volumes of diverse information.

Apache Spark components.

Spark Core forms the foundation of the larger Spark ecosystem and provides the basic functionality of Apache Spark. It has in-memory computing capabilities to deliver speed, a generalized execution model to support various applications, and Java, Scala, Python, and R APIs.

Spark Streaming enhances the core engine of Apache Spark by providing near-real-time processing capabilities, which are essential for developing streaming analytics applications. This module can ingest live data streams from multiple sources, including Apache Kafka, Apache Flume, Amazon Kinesis, or Twitter, splitting them into discrete micro-batches.
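The idea of splitting a live stream into discrete micro-batches can be sketched in plain Python. This is a toy model rather than Spark's API: the function name `split_into_micro_batches`, the sample events, and the one-second interval are all assumptions made for illustration.

```python
from collections import defaultdict


def split_into_micro_batches(events, batch_interval):
    """Group (timestamp, payload) events into fixed-length time intervals.

    Toy model only: a real streaming engine receives data continuously and
    processes each interval's data as a distributed dataset.
    """
    batches = defaultdict(list)
    for timestamp, payload in events:
        # Every event falls into exactly one interval of length batch_interval.
        batch_index = int(timestamp // batch_interval)
        batches[batch_index].append(payload)
    # Return the non-empty batches in time order.
    return [batches[i] for i in sorted(batches)]


# Hypothetical events: (arrival time in seconds, payload).
events = [(0.2, "a"), (0.9, "b"), (1.1, "c"), (2.5, "d"), (2.7, "e")]
micro_batches = split_into_micro_batches(events, batch_interval=1.0)
for batch in micro_batches:
    print(len(batch), batch)
```

Each inner list stands for one micro-batch that the engine would then process as a small batch job.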

To give real-world context, companies across various industries leverage the power of Spark Streaming and Kafka to deliver data-driven solutions:

- Uber utilizes them for telematics analytics, interpreting data their vehicles and drivers generate to optimize routes, improve safety, and increase efficiency.

- Pinterest relies on this powerful combination to analyze global user behavior based on real-time data, provide better user experiences, optimize content delivery, and drive engagement on its platform.

- Netflix leverages Spark Streaming and Kafka for near real-time movie recommendations. By instantaneously analyzing millions of users' viewing habits and preferences, they can suggest movies or series that customers will likely enjoy, enhancing their personalization strategy.

Despite Spark’s extensive features, it’s worth mentioning that it doesn’t provide true real-time processing, which we will explore in more depth later.

Spark SQL brings native support for SQL to Spark and streamlines the process of querying semistructured and structured data. It works with various formats, including Avro, Parquet, ORC, and JSON. Besides SQL syntax, it supports Hive Query Language, which enables interaction with Hive tables.

GraphX is Spark's component for processing graph data. It extends the functionality of Spark to deal with graph-parallel computations, letting users model and transform their data as graphs. This feature significantly simplifies complex data relationship handling and analysis.

MLlib (Machine Learning Library) comprises common machine learning algorithms and utilities, including classification, regression, clustering, collaborative filtering, and dimensionality reduction.

SparkR is an R package that provides a lightweight frontend for Apache Spark from R. It allows data scientists to analyze large datasets and interactively run jobs on them from the R shell.

What Apache Spark is used for

With its diverse capabilities, Apache Spark is used in various domains. Here are some of the possible use cases.

Big data processing. In the realm of big data, Apache Spark's speed and resilience, primarily due to its in-memory computing capabilities and fault tolerance, allow for the fast processing of large data volumes, which can often range into petabytes. Many industries, from telecommunications to finance and healthcare, use Spark to run ELT and ETL (Extract, Transform, Load) operations, where vast amounts of data are prepared for further analysis.

Data analysis. Spark SQL, a component of Apache Spark, enables in-depth analysis across large datasets stored in distributed systems, thereby providing insights to drive business decisions. For example, an eCommerce company might use Spark SQL to analyze clickstream and other customer behavior data to understand shopping patterns and personalize their marketing strategies.

Machine learning. The MLlib library in Spark provides various machine learning algorithms, making Spark a powerful tool for predictive analytics. Companies employ Spark for customer churn prediction, fraud detection, and recommender systems.

Stream processing. With the rise of IoT and real-time data needs, Apache Spark's streaming component has gained prominence. It allows organizations to process data streams in near real-time and react to changes. For instance, financial institutions use Spark Streaming to track real-time trading and detect anomalies and fraudulent activities.

Graph processing. Spark's GraphX library is designed to manipulate graphs and perform computations over them. It's a powerful tool for social network analysis, PageRank algorithms, and building recommendation systems. For instance, social media platforms may use GraphX to analyze user connections and suggest potential friends.

Apache Spark architecture: RDD and DAG

At its core, Apache Spark's architecture is designed for in-memory distributed computing and can handle large amounts of data with remarkable speed.

Two key abstractions in Spark architecture are the Resilient Distributed Dataset (RDD) and the Directed Acyclic Graph (DAG).

Resilient Distributed Datasets (RDDs). The term Resilient Distributed Datasets has the following components:

- Resilient: Objects can recover from failures. If any partition of an RDD is lost, it can be rebuilt using lineage information, hence the name.

- Distributed: RDDs are distributed across the network, enabling them to be processed in parallel.

- Datasets: RDDs can contain any type of data and can be created from data stored in local filesystems, HDFS (Hadoop Distributed File System), databases, or data generated through transformations on existing RDDs.

To sum up, RDDs are immutable and distributed collections of objects that can be processed in parallel. They are partitioned across the nodes in the cluster, allowing tasks to be executed simultaneously on different machines.
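To make the "resilient" part more concrete, here is a minimal sketch in plain Python of how a lost partition could be rebuilt from lineage. `ToyRDD` and its methods are hypothetical names invented for this illustration. The sketch only tracks a single `map` step, whereas a real RDD keeps the full chain of transformations.

```python
class ToyRDD:
    """A partitioned collection that remembers how it was derived."""

    def __init__(self, partitions, parent=None, func=None):
        # Tuples are used so that partition contents cannot be modified.
        self._partitions = [tuple(p) for p in partitions]
        self._parent = parent  # lineage: the dataset this one came from
        self._func = func      # lineage: the function applied to each element

    def map(self, func):
        # A transformation returns a NEW dataset; the original is untouched.
        new_parts = [tuple(func(x) for x in p) for p in self._partitions]
        return ToyRDD(new_parts, parent=self, func=func)

    def lose_partition(self, index):
        # Simulate a node failure that wipes out one partition.
        self._partitions[index] = None

    def recover_partition(self, index):
        # Rebuild only the lost partition by replaying lineage on the parent.
        source = self._parent._partitions[index]
        self._partitions[index] = tuple(self._func(x) for x in source)

    def collect(self):
        return [x for p in self._partitions for x in p]


base = ToyRDD([[1, 2], [3, 4], [5, 6]])
squared = base.map(lambda x: x * x)

squared.lose_partition(1)     # pretend the node holding partition 1 died
squared.recover_partition(1)  # recompute it from the parent partition
result = squared.collect()
print(result)
```

Note that only the failed partition is recomputed. The other partitions, and the parent dataset, are left as they were.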

Directed Acyclic Graph (DAG). When transformations are applied to RDDs, Spark records the metadata to build up a DAG, which reflects the sequence of computations performed during the execution of the Spark job. When an action is called on the RDD, Spark submits the DAG to the DAG Scheduler, which splits the graph into multiple stages of tasks.
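The record-first, run-later behavior can be illustrated with a small plain-Python sketch. It is a simplification under stated assumptions: the plan is a linear chain rather than a general graph, `LazyDataset` and `split_into_stages` are hypothetical names, and a new stage is started at each operation that needs a shuffle (the set `WIDE_OPS` is chosen here for illustration).

```python
# Assumption for this sketch: these operation names are treated as "wide",
# meaning they need data to be shuffled between partitions.
WIDE_OPS = {"reduceByKey", "groupByKey", "join"}


class LazyDataset:
    """Records transformations instead of running them."""

    def __init__(self, recorded_ops=()):
        self.recorded_ops = tuple(recorded_ops)

    def transform(self, op_name):
        # Nothing is computed here; the operation is only added to the plan.
        return LazyDataset(self.recorded_ops + (op_name,))

    def action(self):
        # An action hands the recorded plan to the (toy) scheduler.
        return split_into_stages(self.recorded_ops)


def split_into_stages(ops):
    """Cut the chain of operations into stages at every wide operation."""
    stages, current = [], []
    for op in ops:
        if op in WIDE_OPS and current:
            stages.append(current)  # close the stage before the shuffle
            current = []
        current.append(op)
    if current:
        stages.append(current)
    return stages


plan = (
    LazyDataset()
    .transform("map")
    .transform("filter")
    .transform("reduceByKey")
    .transform("map")
)
stages = plan.action()
print(stages)
```

Operations inside one stage can run back to back on the same partition, while a stage boundary marks a point where data has to move between partitions.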

Master-slave architecture in Apache Spark

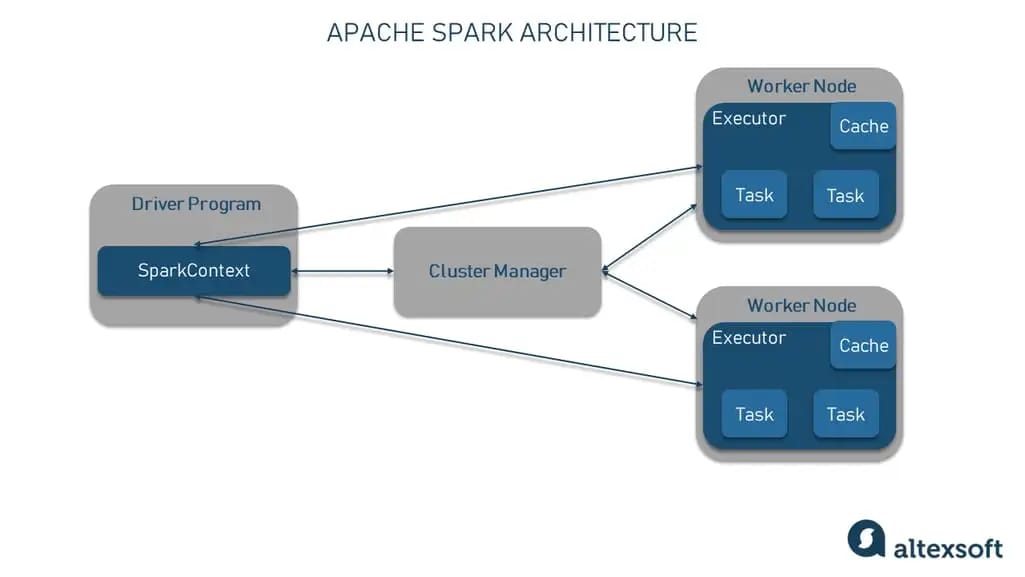

Apache Spark's architecture is based on a master-slave structure where a driver program (the master node) operates with multiple executors or worker nodes (the slave nodes). The cluster consists of a single master and multiple slaves, and Spark jobs are distributed across this cluster.

Apache Spark architecture in a nutshell.

The Driver Program is the "master" in the master-slave architecture that runs the main function and creates a SparkContext, acting as the entry point and gateway to all Spark functionalities. It communicates with the Cluster Manager to supervise jobs, partitions the job into tasks, and assigns these tasks to worker nodes.

The Cluster Manager is responsible for allocating resources in the cluster. Apache Spark is designed to be compatible with a range of options:

- Standalone Cluster Manager: A straightforward, pre-integrated option bundled with Spark, suitable for managing smaller workloads.

- Hadoop YARN: Often the preferred choice due to its scalability and seamless integration with Hadoop's data storage systems, ideal for larger, distributed workloads.

- Apache Mesos: A robust option that manages resources across entire data centers, making it suitable for large-scale, diverse workloads.

- Kubernetes: A modern container orchestration platform that gained popularity as a cluster manager for Spark applications owing to its robustness and compatibility with containerized environments.

Learn more about the good and the bad of Kubernetes in our dedicated article.

This flexible approach allows users to select the Cluster Manager that best fits their specific needs, whether those pertain to workload scale, hardware type, or application requirements.

The Executors or Worker Nodes are the “slaves” responsible for completing tasks. They process tasks on the partitioned RDDs and return the results to the SparkContext.
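The division of labor between the driver and the executors can be sketched with Python's standard thread pool. This is only an analogy running on one machine: `run_job`, the sample partitions, and the choice of two workers are assumptions for illustration, and no cluster manager is involved.

```python
from concurrent.futures import ThreadPoolExecutor


def run_job(partitions, task, num_executors=2):
    """Toy 'driver': create one task per partition and hand them to workers."""
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        # pool.map preserves input order, so each partial result lines up
        # with the partition it was computed from.
        partial_results = list(pool.map(task, partitions))
    return partial_results


# Hypothetical data, already split into three partitions.
partitions = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]

# Each worker sums its own partition; the driver combines the partial sums.
partial_sums = run_job(partitions, sum)
total = sum(partial_sums)
print(partial_sums, total)
```

The workers never see the whole dataset. Each one handles a partition, and only the small partial results travel back to the driver.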

Apache Spark advantages

Apache Spark brings several compelling benefits to the table, particularly when it comes to speed, ease of use, and support for sophisticated analytics. The following are the key advantages that make Apache Spark a powerful tool for processing big data.

Speed and performance

A key advantage of Apache Spark is its speed and performance capabilities, especially when compared to Hadoop MapReduce — the processing layer of the Hadoop big data framework. While Spark's speed is often cited as being "100 times faster than Hadoop," it's crucial to understand the specifics of this claim.

This impressive statistic comes from a 2014 benchmark test in which Spark significantly outperformed Hadoop MapReduce. The speed gains come primarily from Spark’s ability to hold data in memory (RAM) rather than repeatedly writing to and reading from disk. This is particularly useful for iterative algorithms, commonly used in machine learning and graph computation, which make multiple passes over the same data. In scenarios where these conditions are met, Spark can significantly outperform Hadoop MapReduce.

However, it's worth noting that this advantage is somewhat conditional. The performance difference may not be as pronounced in certain situations, such as simple operations that don't require multiple passes over the same data. Despite these nuances, Spark's high-speed processing capabilities make it an attractive choice for big data processing tasks.
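A rough way to see why the benefit depends on the workload is to count how often the data has to be loaded from storage. The sketch below is a toy model with hypothetical names (`CountingSource`, `iterate_without_cache`, `iterate_with_cache`). It counts loads rather than measuring time, so it says nothing about actual speedups.

```python
class CountingSource:
    """Stands in for slow storage and counts how often it is read."""

    def __init__(self, data):
        self._data = list(data)
        self.reads = 0

    def load(self):
        self.reads += 1
        return list(self._data)


def iterate_without_cache(source, passes):
    # Every pass goes back to storage, as a disk-based pipeline would.
    total = 0
    for _ in range(passes):
        total += sum(source.load())
    return total


def iterate_with_cache(source, passes):
    # Load once, keep the data in memory, and reuse it on every pass.
    cached = source.load()
    total = 0
    for _ in range(passes):
        total += sum(cached)
    return total


disk_based = CountingSource([1, 2, 3])
in_memory = CountingSource([1, 2, 3])
iterate_without_cache(disk_based, passes=10)
iterate_with_cache(in_memory, passes=10)
print(disk_based.reads, in_memory.reads)  # 10 loads versus 1 load

single_a = CountingSource([1, 2, 3])
single_b = CountingSource([1, 2, 3])
iterate_without_cache(single_a, passes=1)
iterate_with_cache(single_b, passes=1)
print(single_a.reads, single_b.reads)  # 1 load each: no advantage
```

With ten passes, caching saves nine loads. With a single pass, both approaches read the data exactly once, which mirrors the conditional nature of the advantage.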

Multi-language support with PySpark and other APIs

Despite being written in Scala, the framework extends its support to Java, Python, and R via intuitive APIs, including PySpark — the Python API for Apache Spark that enables large-scale data processing in a distributed environment using Python. All of this makes Spark more versatile and eases the learning curve for professionals experienced in any of the mentioned languages.

This advantage is significant for organizations that rely on cross-functional teams. Data scientists can use Python and R for data analysis, while data engineers can work in Java or Scala, the languages they more commonly use. Moreover, this accessibility opens up a broader talent pool for companies, providing a considerable edge over platforms primarily relying on a single language, such as the Java-centric Hadoop.

Spark UI: A convenient integrated web user interface

Apache Spark stands out from other open-source platforms due to its integrated web user interface, known as Spark UI. This tool allows experts to monitor their Spark clusters' status and resource consumption.

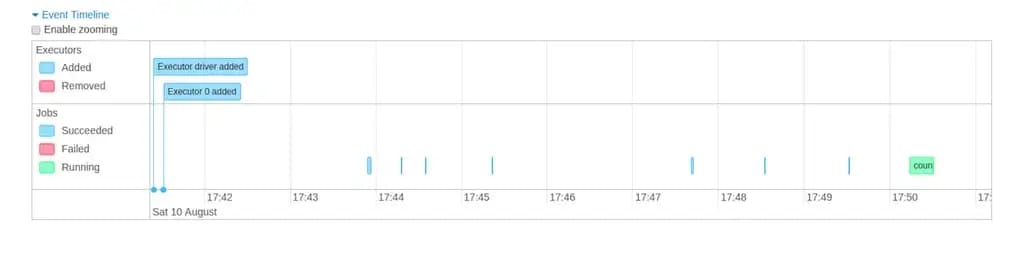

The details page shows the event timeline. Source: Apache Spark

Spark UI provides several tabs, each displaying different sets of information:

- The Jobs tab offers a comprehensive summary of all jobs within the Spark application, including details such as job status, duration, and progress. Clicking on a specific job opens a more detailed view with an event timeline, DAG visualization, and all job stages.

- The Stages tab presents a detailed breakdown of each step in the job, making it easy to track the progress and identify any bottlenecks in your data processing.

- The Executors tab gives an overview of the executors running the tasks, showing their status, how much storage and memory they are occupying, and other useful metrics.

- Additional tabs give information about storage, environment settings, and more, depending on Spark's components.

With such a range of readily available, detailed information, Spark UI becomes a powerful tool for monitoring, debugging and tuning Spark applications, offering a significant advantage of the Apache Spark platform.

Flexibility and compatibility

Apache Spark's flexibility makes it an excellent tool for many use cases. As mentioned, it's compatible with various cluster managers allowing users to run Spark on the platform that best meets their requirements, be it the simplicity of a standalone setup or the scalability of Kubernetes. Furthermore, Spark can process data from various sources, such as HDFS, Apache Cassandra, Apache HBase, and Amazon S3. This means Spark can be integrated into different modern data stacks.

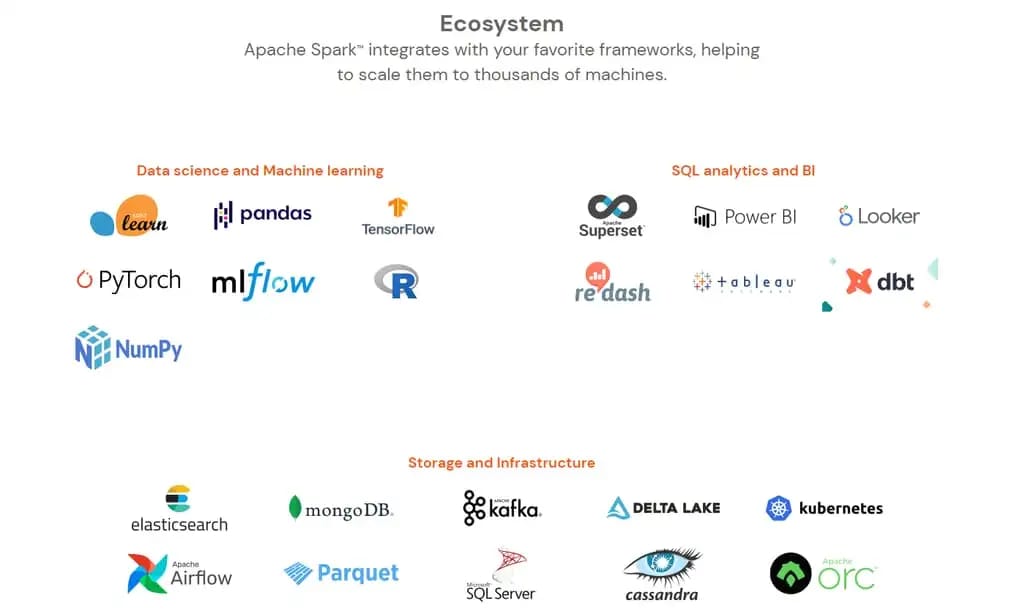

Apache Spark integrates with various frameworks. Source: Apache Spark.

In addition, Spark's ecosystem expands its adaptability to various domains. For data science and machine learning, Spark connects to popular libraries and frameworks such as pandas, TensorFlow, and PyTorch, thus enabling complex computations and predictive analytics. For SQL and BI tasks, Spark's ability to interface with tools like Tableau, dbt, and Looker supports sophisticated data analytics and visualization.

In terms of storage and infrastructure, Spark's versatility shines through its ability to work with various platforms, from Delta Lake to Elasticsearch to various popular database management systems.

Advanced analytics capabilities

Spark's advanced analytics capabilities are another of its significant advantages. It incorporates a comprehensive set of libraries, including Spark SQL for structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming. These built-in libraries allow users to carry out complex tasks, ranging from real-time analytics to sophisticated machine learning and graph computations. Data scientists and data engineers can achieve their goals by staying on the same platform without integrating multiple tools.

Detailed documentation

One of the significant advantages of Apache Spark is its detailed and comprehensive documentation. This extensive guide covers all aspects of Spark's architecture, APIs, and libraries. It proves invaluable for developers of varying experience levels.

The documentation provides detailed tutorials and examples that explain complex concepts clearly and concisely, which is particularly beneficial when developers try to deepen their understanding of Spark.

Experienced developers can use it to aid in troubleshooting and solving specific challenges. The resource covers a wide range of topics and goes deep into each of them, offering clarity on the functionalities and capabilities of Spark.

An active and growing community

As an open-source platform, Apache Spark enjoys the robust support of a thriving community. This active user base contributes significantly to Spark's ongoing development while providing invaluable shared knowledge and troubleshooting assistance.

There are many active participants within the official Apache Spark community. The Spark project on GitHub, where the bulk of development occurs, has garnered over 36.3k stars and counts more than 1.9k contributors. Moreover, nearly 80,000 questions related to Apache Spark on Stack Overflow form a rich reservoir of knowledge and user experiences. Various other Spark-focused online forums and groups further enhance the support network, making it convenient to find assistance or insights as required.

Apache Spark disadvantages

While Spark's capabilities are impressive, it's important to note that, like any technology, it has limitations. Understanding them can help you decide whether Spark is the right choice for your specific needs.

High memory consumption and increased hardware costs

By design, Apache Spark is a resource-intensive system, especially regarding memory. It relies heavily on RAM for in-memory computations, which allows it to offer high-speed data processing. However, this characteristic can also lead to high memory consumption, which results in higher operational costs, scalability limits, and challenges for data-intensive applications.

This heavy reliance on RAM also means that Spark may require more expensive hardware than disk-based solutions like Hadoop MapReduce. The necessity for larger volumes of RAM and faster CPUs can increase the upfront cost of setting up a Spark environment, particularly for large-scale data processing tasks.

Therefore, while Spark's speed and performance are notable benefits, it's crucial to consider the potential for increased spend and memory requirements.

Limited support for real-time processing

Although often touted for near-real-time processing capabilities, Apache Spark isn't capable of genuine real-time processing. This is due to Spark Streaming's reliance on micro-batches, which are small groups of events collected over a predefined interval. Because an event is not processed until its batch is complete, some delay is unavoidable. Tools like Apache Flink or Apache Storm might be better suited for applications requiring actual streaming analytics.
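The delay that micro-batching adds can be worked out with simple arithmetic. The function below is a hypothetical helper, not part of any Spark API. It assumes times are in whole milliseconds, that a batch is processed the instant its interval ends, and that processing time itself is ignored.

```python
def micro_batch_wait_ms(arrival_ms, batch_interval_ms):
    """Time an event waits until the micro-batch containing it closes."""
    # An event arriving exactly on a boundary belongs to the next batch,
    # so it waits for one full interval.
    return batch_interval_ms - (arrival_ms % batch_interval_ms)


# Hypothetical arrival times with a one-second batch interval.
for arrival in (250, 1500, 2000):
    wait = micro_batch_wait_ms(arrival, batch_interval_ms=1000)
    print(f"event at {arrival} ms waits {wait} ms")
```

Under these assumptions, the wait is anywhere between just above zero and one full batch interval. An engine that handles each event as it arrives does not have this batching delay.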

Steep learning curve

While Apache Spark has undeniably powerful features, one issue that users often raise is its steep learning curve. Many users on platforms like Reddit and Quora point out that while Spark's basics, such as DataFrames and Datasets, are relatively straightforward, truly understanding and leveraging Spark can be more difficult.

Key concepts such as distributed storage, in-memory processing, the cost of exchanging data between nodes, columnar formats, and the benefits of table stores, among others, form the bedrock of Spark's capabilities. These principles, while essential, may pose a significant challenge for beginners in the data processing field.

That said, it's not an insurmountable problem. Many users also argue that learning Apache Spark can be more manageable with the right guidance, a clear understanding of use cases, and hands-on experience. Moreover, if you already have a basic knowledge of Python, learning Spark through PySpark could be a more straightforward journey than grasping the intricacies of systems like Hadoop.

Small files problem

Apache Spark faces difficulties when dealing with large numbers of small files. More files within a workload mean more metadata to parse and more tasks to schedule, which can significantly slow down processing.
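A back-of-the-envelope estimate shows how the number of tasks grows with the number of files. The sketch below is deliberately simplified: `estimate_tasks` is a hypothetical helper, the 128 MB split size is an assumed value, and every file is assumed to produce at least one task. Depending on the data source and configuration, real readers may combine small files, so treat the numbers as an illustration only.

```python
import math


def estimate_tasks(file_sizes_mb, split_size_mb=128):
    """Simplified estimate: ceil(size / split) tasks per file, at least one."""
    return sum(
        max(1, math.ceil(size / split_size_mb)) for size in file_sizes_mb
    )


# Two hypothetical layouts holding the same 10,000 MB of data.
many_small = [1] * 10_000   # 10,000 files of 1 MB each
few_large = [1_000] * 10    # 10 files of 1,000 MB each

small_tasks = estimate_tasks(many_small)
large_tasks = estimate_tasks(few_large)
print(small_tasks, large_tasks)
```

In this model, the small-file layout needs 125 times as many tasks for the same amount of data, and each of those tasks carries its own scheduling and metadata overhead.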

Dependence on external storage systems: A weakness?

Apache Spark's relationship with external storage systems is a distinctive aspect that can be both advantageous and disadvantageous.

On the one hand, while Spark doesn't have a built-in storage system, its architecture is designed to interface with diverse data storage solutions such as HDFS, Amazon S3, Apache Cassandra, or Apache HBase. This allows users to select the system that best fits their specific needs and environment, adding to Spark's flexibility.

On the other hand, this dependence on external storage systems can add an extra layer of complexity when integrating Spark into a data pipeline. Working with third-party repositories potentially creates compatibility and data management issues, requiring additional time and effort to resolve.

So while dependence on external storage systems is not an inherent drawback, it is an aspect to be considered when evaluating the suitability of Apache Spark for a specific use case or data architecture. It's a characteristic that can make Spark a powerful data processing engine, but it requires careful planning to utilize it effectively.

Apache Spark alternatives

While Apache Spark is a versatile and powerful tool for data processing, other alternatives might better suit certain use cases or preferences. This section will discuss two popular alternatives to Spark: Hadoop and Flink.

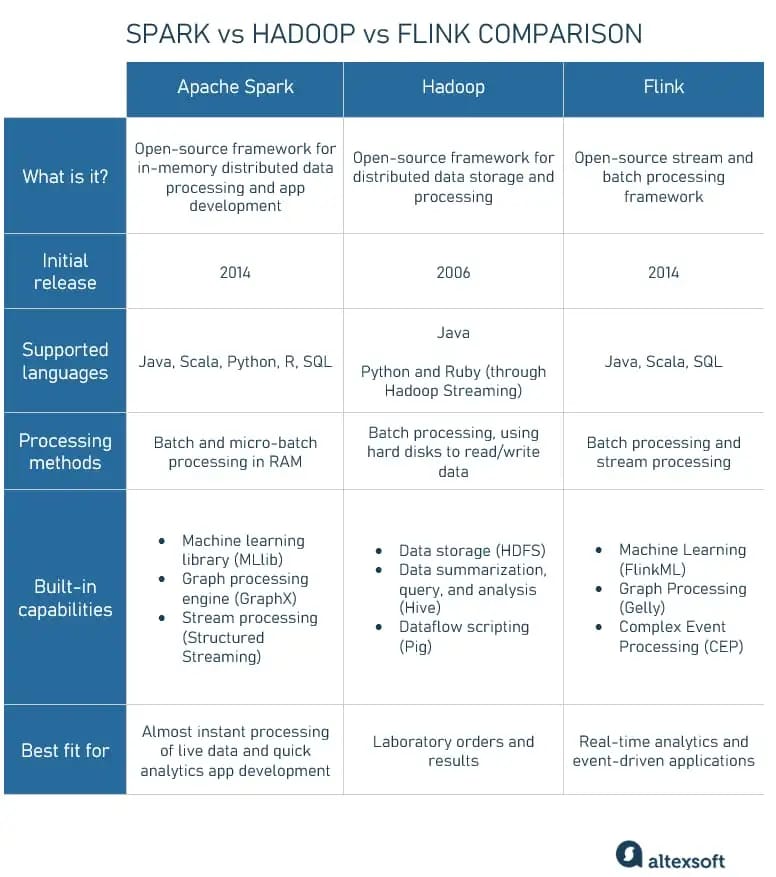

Spark vs. Hadoop vs. Flink comparison

Apache Hadoop

Apache Hadoop is a pioneer in the world of big data processing. Developed by the Apache Software Foundation and released in 2006, Hadoop provides a robust framework for distributed computing over large data sets using simple programming models.

Hadoop's core consists of the Hadoop Distributed File System (HDFS) for storage and the MapReduce programming model for processing. It was primarily designed to handle batch processing and does this efficiently even for enormous volumes of data.

While written in Java, Hadoop MapReduce supports Python and Ruby through Hadoop Streaming. Moreover, Hadoop's ecosystem includes other components like HBase for column-oriented databases, Pig for dataflow scripting, and Hive for data summarization, query, and analysis.

Hadoop shines when processing large data sets where time is not the primary factor. It is extensively used for web searching, log processing, marketing campaign analysis, and data warehousing.

Read our dedicated article for a more detailed comparison of Apache Hadoop vs. Apache Spark.

Apache Flink

Apache Flink, introduced in 2014, is an open-source stream and batch-processing framework. What sets Flink apart is its true streaming capabilities. Unlike Spark, which handles data in micro-batches, Flink can operate on an event-by-event basis. This feature makes Flink a powerful tool for real-time analytics and applications built on event-driven architecture.

Flink is compatible with several languages, including Java, Scala, and SQL, and handles batch and stream processing effectively. Furthermore, Flink features libraries for machine learning (Flink ML), graph processing (Gelly), and complex event processing (CEP).

So while Apache Spark provides a flexible and powerful solution for data processing, Hadoop and Flink also offer compelling features. Depending on the specific requirements of your project, such as data size, processing speed, and real-time capabilities, one may be a better fit than the others.

How to Get Started with Apache Spark

Apache Spark is invaluable for those interested in data science, big data analytics, or machine learning. Its rich and complex data-processing capabilities can significantly enhance your professional skillset, and mastering Spark could even provide a substantial career boost. According to the Stack Overflow Developer Survey from 2022, Apache Spark emerged as the highest-paying framework under the “Other Frameworks, Libraries, and Tools” category. This indicates that there is significant demand in the industry for professionals with Spark expertise, and the investment in learning this tool can yield substantial returns.

But how does one navigate this rich and complex data-processing engine? Here's a guide to help you get started.

Helpful Skills

Becoming proficient with Apache Spark requires certain foundational skills. These prerequisites can accelerate your learning and smooth your journey:

- Knowledge of Linux: Understanding the Linux operating system is beneficial because many Spark applications are deployed on Linux-based systems.

- Programming: Knowing a programming language makes learning Spark easier. While Spark is written in Scala, it also provides APIs for Java, Python, and R. Thus, having basic knowledge of these languages will be useful.

- Understanding of distributed systems: Familiarity with concepts of distributed computing will aid in grasping Spark's processing capabilities.

- Experience with SQL: Spark provides a SQL interface, Spark SQL, for working with structured and semi-structured data. So having hands-on experience with SQL commands is helpful.

Once you've solidified these foundational skills, the next step is to immerse yourself in Spark itself.

Training and Certification

There are numerous ways to dive into Apache Spark. You can study the official Apache Spark documentation, use self-learning resources, or enroll in an online training program. Platforms such as edX, Coursera, Udemy, and DataCamp offer comprehensive Spark tutorials.

Here are a few recommended courses.

- Big Data Analysis with Scala and Spark on Coursera provides hands-on experience using Spark and Scala for Big Data analysis.

- From 0 to 1: Spark for Data Science with Python on Udemy is designed for absolute beginners to Spark, requiring only basic Python knowledge.

- Introduction to Spark SQL in Python on DataCamp covers the process of analyzing big data using Spark SQL.

- Big Data Analytics Using Spark on edX, a course provided by The University of California, San Diego, covers the basics and beyond of using Spark to analyze data at scale.

Besides training, earning a certification in Apache Spark can validate your skills and enhance your credibility in the field. Some popular certifications include:

- Apache Spark Developer Certification (HDPCD): This Hortonworks certification examines your Spark Core and Spark SQL knowledge.

- Databricks Certified Associate Developer for Apache Spark 3.0: This certification from Databricks, the company founded by the creators of Apache Spark, demonstrates your proficiency in Spark and the Databricks lakehouse platform.

Remember, as with any tool, practice is the key to mastering Apache Spark. Start working on small projects, and gradually take on more complex tasks as your understanding deepens. With time and dedication, you'll become proficient in handling big data with Apache Spark.

In addition to formal training and certifications, the Apache Spark community provides a wealth of resources and forums where you can ask questions, learn from the experiences of others, and stay updated with the latest developments. These resources can be a great aid in your learning journey.

- Official Apache Spark Mailing Lists: These are great places to get help and follow along with the project's development. They have separate lists for user questions and development discussions.

- Stack Overflow: It is a well-known resource for programmers of all skill levels. You can use it to ask questions and get high-quality answers. Just make sure to tag your question with "apache-spark" to attract the right attention.

- Apache Spark Subreddit: On Reddit, you can find a lot of discussions, articles, and resources related to Apache Spark. It's a good place to ask questions and benefit from someone else's experience.

Remember, the key to learning is not only consuming knowledge but also taking part in conversations, asking questions, and sharing your insights with others. These platforms provide a way to do just that and accelerate your learning journey with Apache Spark.

This post is a part of our “The Good and the Bad” series. For more information about the pros and cons of the most popular technologies, see the other articles from the series:

The Good and the Bad of Kubernetes Container Orchestration

The Good and the Bad of Docker Containers

The Good and the Bad of Apache Airflow

The Good and the Bad of Apache Kafka Streaming Platform

The Good and the Bad of Hadoop Big Data Framework

The Good and the Bad of Snowflake

The Good and the Bad of C# Programming

The Good and the Bad of .Net Framework Programming

The Good and the Bad of Java Programming

The Good and the Bad of Swift Programming Language

The Good and the Bad of Angular Development

The Good and the Bad of TypeScript

The Good and the Bad of React Development

The Good and the Bad of React Native App Development

The Good and the Bad of Vue.js Framework Programming

The Good and the Bad of Node.js Web App Development

The Good and the Bad of Flutter App Development

The Good and the Bad of Xamarin Mobile Development

The Good and the Bad of Ionic Mobile Development

The Good and the Bad of Android App Development

The Good and the Bad of Katalon Studio Automation Testing Tool

The Good and the Bad of Selenium Test Automation Software

The Good and the Bad of Ranorex GUI Test Automation Tool

The Good and the Bad of the SAP Business Intelligence Platform

The Good and the Bad of Firebase Backend Services

The Good and the Bad of Serverless Architecture