Cloud Data Migration - Why Data Observability Plays a Critical Role

Enterprises in all industries are in the midst of a massive migration of data into the cloud. On-premises technology offerings like Cloudera, Hadoop, and others have long suffered from installation, uptime, performance, and scalability issues. Using these data stacks successfully requires a dedicated infrastructure team and specialized data expertise, which is hard to find and expensive.

The advent of cloud offerings like Snowflake, Dataproc, AWS EMR, and others has allowed users to reduce operational headaches and easily adopt innovative approaches like data meshes and data marketplaces that reduce costs and democratize how data is managed and used. We have now moved past the point where cloud transformation of the data stack is optional; it is clearly essential.

A successful data migration is more than moving data from an on-premises environment to the cloud. Mass migration of data and assets in a “dump and load” process is rarely successful and never optimal. Instead, first assess your inventory of jobs and processes: identify critical jobs and document their performance characteristics, and prune obsolete jobs and code from repositories so that only known, working assets are selected for migration.

Next, create an inventory of data assets: identify active data assets and their dependencies on other assets and jobs. Understanding the target cloud platform architecture, along with the platform and feature configuration best practices needed to support optimal performance, operations, and observability of the migrated data, takes significant effort. Migration often involves re-architecting and refactoring the data layout, transformation flows, and consumption workloads to best suit the target environment. Data teams will need to adapt to and take advantage of the unique features of the new environment. The cloud destination is not the end; it is the beginning of a new journey.
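To make the dependency inventory more concrete, here is a minimal sketch (Python 3.9+, standard library only) that groups assets into migration waves so that no asset moves before the assets it depends on. The asset names and the `migration_waves` helper are hypothetical; in practice the dependency map would come from lineage or usage metadata. This illustrates one way to use such an inventory, not how Acceldata plans migrations.

```python
from graphlib import TopologicalSorter

# Hypothetical inventory: each asset maps to the set of assets it depends on.
dependencies = {
    "raw_orders": set(),
    "raw_customers": set(),
    "orders_clean": {"raw_orders"},
    "customer_dim": {"raw_customers"},
    "daily_sales_job": {"orders_clean", "customer_dim"},
    "sales_dashboard": {"daily_sales_job"},
}


def migration_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group assets into waves; each asset lands in a later wave than all of its dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependency graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # assets whose dependencies are all migrated
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(migration_waves(dependencies), start=1):
    print(f"wave {number}: {wave}")
```

Assets in the same wave have no dependencies on each other, so they could be migrated and validated in parallel; a cycle in the inventory surfaces early as an error instead of as a broken pipeline after cutover.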

A data observability solution plays a critical role in your data migration because it provides a framework for the journey and allows you to migrate with confidence.

For this blog, let’s look at a specific case of how Acceldata helps migrate data from Hadoop technologies to Snowflake.

A successful cloud data migration goes through the phases of Proof of Concept, Preparation, Data Migration, Consumption, Monitoring, and Optimization. Each phase is further divided into sub-phases that help you focus on the different areas involved in making intelligent decisions. Let’s look at these in more detail:

Proof of Concept

- Snowflake Trusted Advisor: Acceldata evaluates your account against a set of checks and provides recommendations that help you follow Snowflake best practices.

- Implement PoC

- Champion Snowflake: Acceldata provides dashboards and hero reports that help you champion Snowflake within your organization.

- Snowflake Cost Assessment: Acceldata provides cost intelligence dashboards that help you make project budgeting and contract decisions.

Preparation

- Critical Data Element Identification: Acceldata provides data usage and profiling information that helps you decide what data to retire, retain, and/or prioritize in your data migration plan. It also helps you set a performance baseline for workloads to support realistic performance expectations in Snowflake.

- Data Migration Schedule/Strategy: Acceldata helps you create an inventory of assets to support migration candidate selection and establish baseline quality metrics.

- Snowflake Administration: Acceldata helps you configure your Snowflake account according to best-practice recommendations to make it robust and secure.

- Snowflake Data Layout: Acceldata helps you understand data layout complexities related to clustering keys, micro-partitioning, and other Snowflake features.
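As an illustration of how usage information can feed retire/retain/prioritize decisions, the sketch below classifies tables from a few usage metrics. The `TableUsage` fields, the `classify` helper, and the thresholds (180 idle days, 1,000 queries, 5 downstream jobs) are all hypothetical rules of thumb chosen for the example, not Acceldata's logic; real thresholds should come from your own usage profile and business input.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class TableUsage:
    name: str
    queries_last_90d: int  # hypothetical metric derived from query logs
    last_accessed: date
    downstream_jobs: int   # number of jobs that read this table


def classify(t: TableUsage, today: date, stale_days: int = 180,
             hot_queries: int = 1000, hot_consumers: int = 5) -> str:
    """Assumed rule: retire stale tables nobody depends on; prioritize heavily used ones."""
    idle_days = (today - t.last_accessed).days
    if idle_days > stale_days and t.downstream_jobs == 0:
        return "retire"
    if t.queries_last_90d >= hot_queries or t.downstream_jobs >= hot_consumers:
        return "prioritize"
    return "retain"


today = date(2024, 6, 1)
tables = [
    TableUsage("legacy_clickstream_2017", 0, date(2022, 1, 15), 0),
    TableUsage("orders", 25000, date(2024, 5, 31), 12),
    TableUsage("marketing_leads", 120, date(2024, 4, 20), 1),
]
for t in tables:
    print(t.name, classify(t, today))
```

The same usage data can double as the performance baseline mentioned above: the heavily queried tables flagged for priority are usually the workloads whose Snowflake performance matters most.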

Data Migration

- Data Ingestion Best Practices: Acceldata provides deeper insights into Snowpipe, COPY commands and other ingestion features.

- Data Transfer

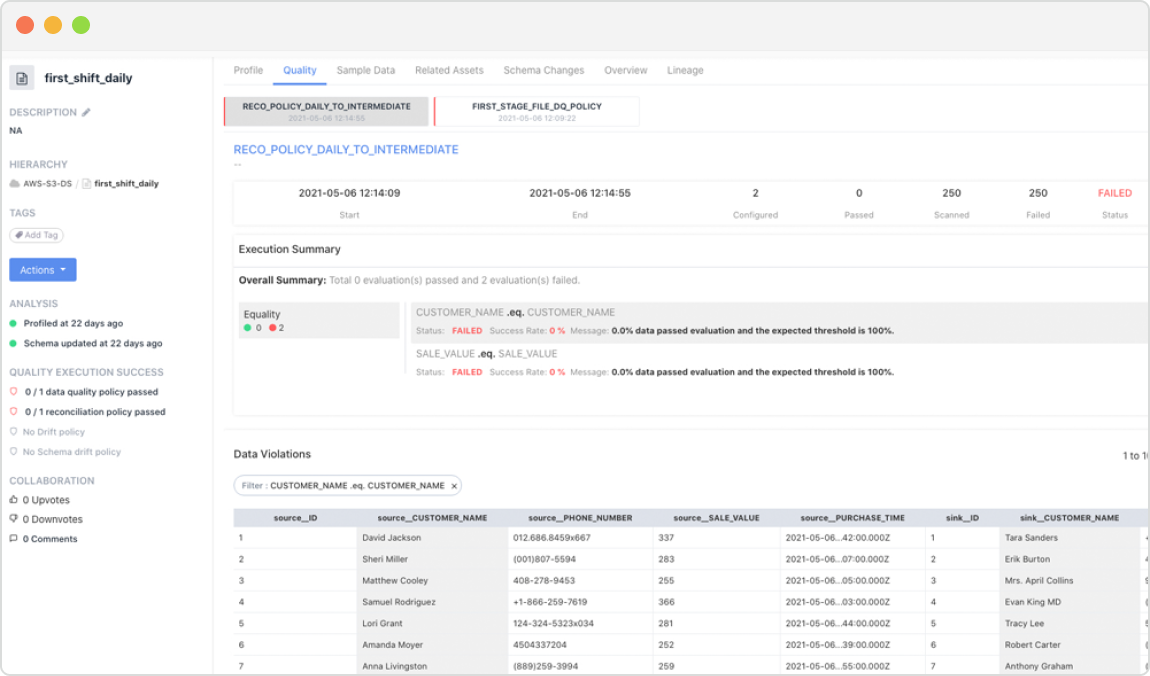

- Data Reconciliation: Acceldata checks the integrity of the migrated data by comparing the source and target datasets. It also helps you perform root-cause analysis (RCA) on migrated workloads that are not functioning as expected.
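A common way to compare source and target datasets is to compare cheap fingerprints first (row count plus an order-independent checksum) and only drill down to key-level differences when the fingerprints disagree. The sketch below shows that general technique on in-memory rows; the helper names are hypothetical and this is not Acceldata's reconciliation implementation. On real systems, the fingerprints would typically be computed inside each engine so that only the small aggregates travel between them.

```python
import hashlib
from collections.abc import Iterable, Sequence

Row = Sequence[object]


def row_hash(row: Row) -> int:
    # Render every value as text so both sides hash identically. Real migrations must also
    # normalize types, decimal precision, time zones, and NULL handling before hashing.
    canonical = "\x1f".join("" if v is None else str(v) for v in row)
    return int.from_bytes(hashlib.sha256(canonical.encode("utf-8")).digest()[:8], "big")


def fingerprint(rows: Iterable[Row]) -> tuple[int, int]:
    """Return (row_count, checksum); summing row hashes makes row order irrelevant."""
    count, checksum = 0, 0
    for row in rows:
        count += 1
        checksum = (checksum + row_hash(row)) % (1 << 64)
    return count, checksum


def diff_by_key(source: Iterable[Row], target: Iterable[Row], key_index: int = 0) -> dict:
    """Locate missing, extra, and changed rows by primary key (assumes unique keys)."""
    src = {r[key_index]: tuple(r) for r in source}
    tgt = {r[key_index]: tuple(r) for r in target}
    return {
        "missing": sorted(src.keys() - tgt.keys()),
        "extra": sorted(tgt.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


source = [(1, "alice", 10.0), (2, "bob", 20.0), (3, "carol", 30.0)]
target = [(3, "carol", 30.0), (1, "alice", 10.0), (2, "bob", 25.0)]

if fingerprint(source) != fingerprint(target):
    print(diff_by_key(source, target))
```

Here the row counts match but the checksums differ, and the key-level diff points at key 2 as the changed row, which is where root-cause analysis would start.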

Consumption

- Data Discovery/Profiling: Acceldata discovers data assets and profiles their structure, content, and relationships.

- Build Pipelines

- Data Transformation

Monitoring

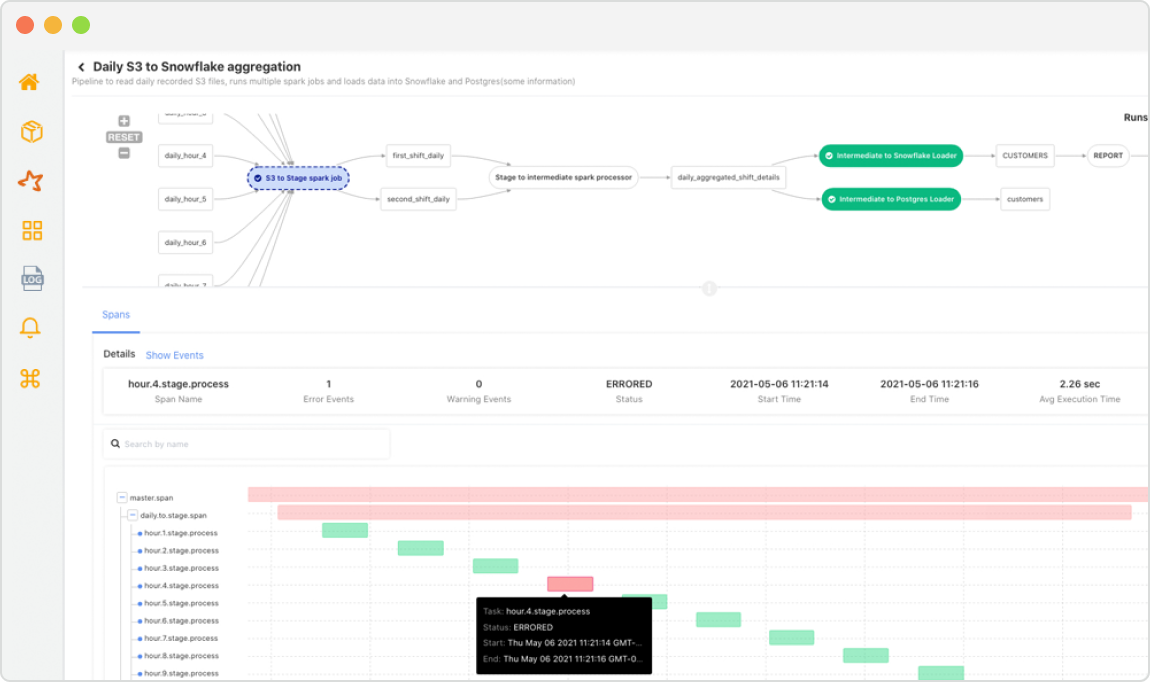

- Pipeline Monitoring: Acceldata monitors the data flows and the internal and external dependencies that link all pipelines together. It transforms the new pipelines into observable pipelines that tie all dimensions of observability together.

- Data Testing: Acceldata provides a way to make assertions about your data, and then test whether these assertions are valid.

- Data Quality: Acceldata measures data quality characteristics such as accuracy, completeness, consistency, validity, uniqueness, and timeliness, and also detects schema and model drift.

- Snowflake Platform Monitoring: Acceldata monitors the Snowflake platform for costs, administration, usage and performance.

- Incident and Alert Management: Acceldata provides a system for raising, responding to, and managing incidents and alerts.

- Reporting: Acceldata can automatically run reports (pre-built and custom) at a predefined frequency and deliver them to a list of recipients.
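To show what data testing and data quality measurement look like in practice, here is a minimal sketch that computes three of the characteristics named above (completeness, uniqueness, and validity) for one column and turns them into pass/fail assertions against thresholds. The function names, the simplified email pattern, and the threshold values are illustrative assumptions, not Acceldata's rule definitions or API.

```python
import re
from collections.abc import Callable


def completeness(values: list) -> float:
    """Share of values that are not None."""
    return sum(v is not None for v in values) / len(values) if values else 1.0


def uniqueness(values: list) -> float:
    """Distinct non-null values divided by non-null values."""
    present = [v for v in values if v is not None]
    return len(set(present)) / len(present) if present else 1.0


def validity(values: list, is_valid: Callable[[object], bool]) -> float:
    """Share of non-null values that pass a validation rule."""
    present = [v for v in values if v is not None]
    return sum(is_valid(v) for v in present) / len(present) if present else 1.0


# Deliberately simple email pattern, for illustration only.
EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def run_checks(column: list, min_complete: float = 0.95,
               min_unique: float = 1.0, min_valid: float = 0.99) -> dict[str, bool]:
    """Assert each quality metric against a threshold; True means the check passed."""
    return {
        "completeness": completeness(column) >= min_complete,
        "uniqueness": uniqueness(column) >= min_unique,
        "validity": validity(column, lambda v: EMAIL.fullmatch(str(v)) is not None) >= min_valid,
    }


emails = ["a@x.com", "b@x.com", None, "b@x.com", "not-an-email"]
print(run_checks(emails))
```

Running the same checks on every pipeline run, and alerting when a check flips from passing to failing, is what turns one-off profiling into ongoing monitoring.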

Optimization

- Cost Optimization: Acceldata lets you explore costs, detect and root-cause spikes, forecast costs and contract consumption, and get recommendations for reducing costs.

- Resource Rightsizing: Acceldata provides information on resizing cloud resources to better match the workload requirements.

- Performance Optimization: Acceldata highlights anomalous workloads and, based on workload statistics, suggests probable ways to optimize their performance.
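Spike detection, mentioned under cost optimization, is often done by comparing each day's spend with the statistics of a trailing window. The sketch below uses a simple trailing z-score; the daily credit figures, the 7-day window, and the threshold of 3 standard deviations are assumptions for illustration, and production systems typically also account for weekly seasonality and planned workload changes. It is not Acceldata's detection algorithm.

```python
from statistics import mean, stdev


def detect_spikes(daily_credits: list[float], window: int = 7,
                  threshold: float = 3.0) -> list[int]:
    """Return indexes of days whose spend exceeds the trailing-window mean
    by more than `threshold` standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    spikes = []
    for i in range(window, len(daily_credits)):
        history = daily_credits[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            is_spike = daily_credits[i] > mu  # flat history: any increase stands out
        else:
            is_spike = (daily_credits[i] - mu) / sigma > threshold
        if is_spike:
            spikes.append(i)
    return spikes


# Hypothetical daily credit consumption; day 8 contains a runaway workload.
credits = [100, 102, 98, 101, 99, 103, 100, 97, 250, 101, 99]
print(detect_spikes(credits))
```

A flagged day is only the starting point: root-causing it means breaking that day's spend down by warehouse, user, or query to find the workload responsible.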

Get a demo of the Acceldata Data Observability Platform and learn how you can optimize your data spend, improve operational intelligence, and ensure data reliability.
