How to Use ChatGPT ETL Prompts

At the heart of data engineering lies the ETL process—a necessary, if sometimes tedious, set of operations to move data across pipelines for production.

Now imagine having a co-pilot to streamline and supercharge the process. While data engineering will always need at least one human in the loop, ChatGPT ETL prompts are a great way to make that process move just a little bit faster — particularly if you’re still managing ETL on a budget.

ChatGPT can be a game-changing ally for small teams — but only if you know how to sweet talk it.

So let’s take a little ChatGPT speaking course and look at a few example prompts for each stage of the ETL process.

ChatGPT ETL Prompt Examples

From extraction to loadings and every micro-step in between—we’ve put together a list of ChatGPT ETL prompt examples to help you on your AI ETL journey.

While it won’t be as simple as a copy and paste, they should give you a directional view of the structure and level of detail required to get a usable script from your ChatGPT sidekick.

But remember, no matter how good the AI is, your scripts still need you in the loop. So, don’t forget to review your ChatGPT outputs before leverage scripts or pushing any SQL code to production.

Extraction

ChatGPT ETL prompts can be used to help write scripts to extract data from different sources, including:

Databases

I have a SQL database with a table named employees. The table has columns id, first_name, last_name, department, and hire_date. I'm trying to extract a list of employees who were hired in the last six months and belong to the “IT” department. Can you help me craft the SQL query for this extraction?APIs

I'm working on a project that requires fetching the latest tweets of a specific user using the Twitter API. Can you help me craft a Python script that uses the tweepy library to get the most recent 100 tweets from a given user handle?Flat files

I've got a large CSV file with product listings, and I need to extract rows that have a price above $100. Can you provide a Python snippet using the pandas library to help me filter this data?Web sources

I want to extract the titles of articles from Fox News’ homepage. Can you help me write a Python script using BeautifulSoup that can scrape and list down all article titles from a given URL?Transformation

ChatGPT ETL prompts can assist in writing scripts for data cleaning and transformation, such as:

Validation

I have a DataFrame in Python with a column named email. Some of the email entries are missing or have invalid formats. Can you help me write a Python script using pandas to fill missing emails with 'unknown@email.com' and validate the format of the existing ones?Normalization

I have a DataFrame in Python with a column salary that contains employee salaries. I'd like to normalize these salaries between 0 and 1 to perform some machine learning tasks. Can you help me write a Python script using pandas to achieve this normalization?Filtering

In my SQL database, I have a table named orders with columns order_id, customer_name, order_date, and total_amount. I need to extract orders placed in the last three days with a total_amount more than $100. Can you provide me with the SQL query for this filtering?Aggregation

I have a table named sales in my SQL database with columns product_id, sale_date, and units_sold. I want to find the total units_sold for each product_id in the last month. Can you assist with the SQL query for this aggregation?Enrichment

I have a table customers in my SQL database with columns customer_id, name, and country_code. Separately, I have another table countries that maps country_code to country_name. Can you help me write an SQL query to enrich the customers table with the country_name from the countries table?Type conversions

I have a DataFrame in Python with a column age that's currently stored as a string. I'd like to convert this column to integers to perform some arithmetic operations. Can you help me write a Python script using pandas to achieve this data type conversion?Date-time parsing

I'm working with a list of dates in Java stored as strings in the format 'dd-MM-yyyy'. I need to parse them into LocalDate objects to perform some date arithmetic. Can you assist me in writing a Java method to parse these date strings?String manipulation

In my SQL database, I have a table employees with a column full_name storing names in the format 'LastName, FirstName'. I'd like to split this into two separate columns, first_name and last_name. Can you provide me with the SQL query to achieve this string manipulation?Loading

ChatGPT ETL prompts can help write scripts to load data into different databases, data lakes, or data warehouses. Simply ask ChatGPT to leverage popular tools or libraries associated with each destination.

Databases

I have a CSV file named products.csv containing product details. I'd like to import this data into my MySQL database into a table called products_table. Can you guide me through writing a script to achieve this using Python's MySQLdb library?Data lakes

I'm processing various log files, and after processing, I'd like to store them in a structured manner in my Amazon S3 bucket. Can you help me craft a Python script using the boto3 library to upload files to an S3 bucket and organize them by date?Data warehouses

I've aggregated transaction data that I'd like to upload to our Amazon Redshift data warehouse for further analytics. The data is currently in a pandas DataFrame. Can you help me write a Python script using psycopg2 and pandas to load this DataFrame into a specific Redshift table?Suggest performance bottleneck fixes

You could also ask ChatGPT to help with performance tuning.

Tune the load process

I'm using PostgreSQL to store my company's transactional data. Every end of the month, I run a Python script to batch load millions of records from various CSV files into a table named monthly_transactions. However, the current loading process is taking too long, sometimes up to several hours. I've heard about bulk loading and other performance tuning measures. Can you guide me on how to optimize this load process to improve efficiency and speed?Implement Upserts

I'm frequently updating and inserting new records into my PostgreSQL users table. If a user already exists (based on a unique user_id), I want to update their details; if not, I want to insert them. I've heard about the UPSERT functionality. Can you help me craft a SQL command to achieve this?Data archiving

Over the years, I've accumulated a lot of log files on my server, making retrieval slow and consuming significant storage. I'd like to create an automated Python script that archives log files older than two years into compressed formats and organizes them by year. Can you assist in writing this script?Partitioning techniques

Our sales_data table in MySQL has grown tremendously, containing records spanning several years. To optimize query performance, I'm considering partitioning the table by year. Could you guide me through the process of setting up partitioning for this table based on the sale_date column?Provide guidance and best practices on specific ETL tools

Say you’re new to Apache Kafka. You could ask a ChatGPT ETL prompt like:

We're aiming to create a real-time analytics solution and are considering Apache Kafka for stream processing. I've heard about topics, producers, and consumers in Kafka. Can you provide a beginner's guide on setting up a basic Kafka pipeline and explain these concepts?The tools you harness greatly influence your success

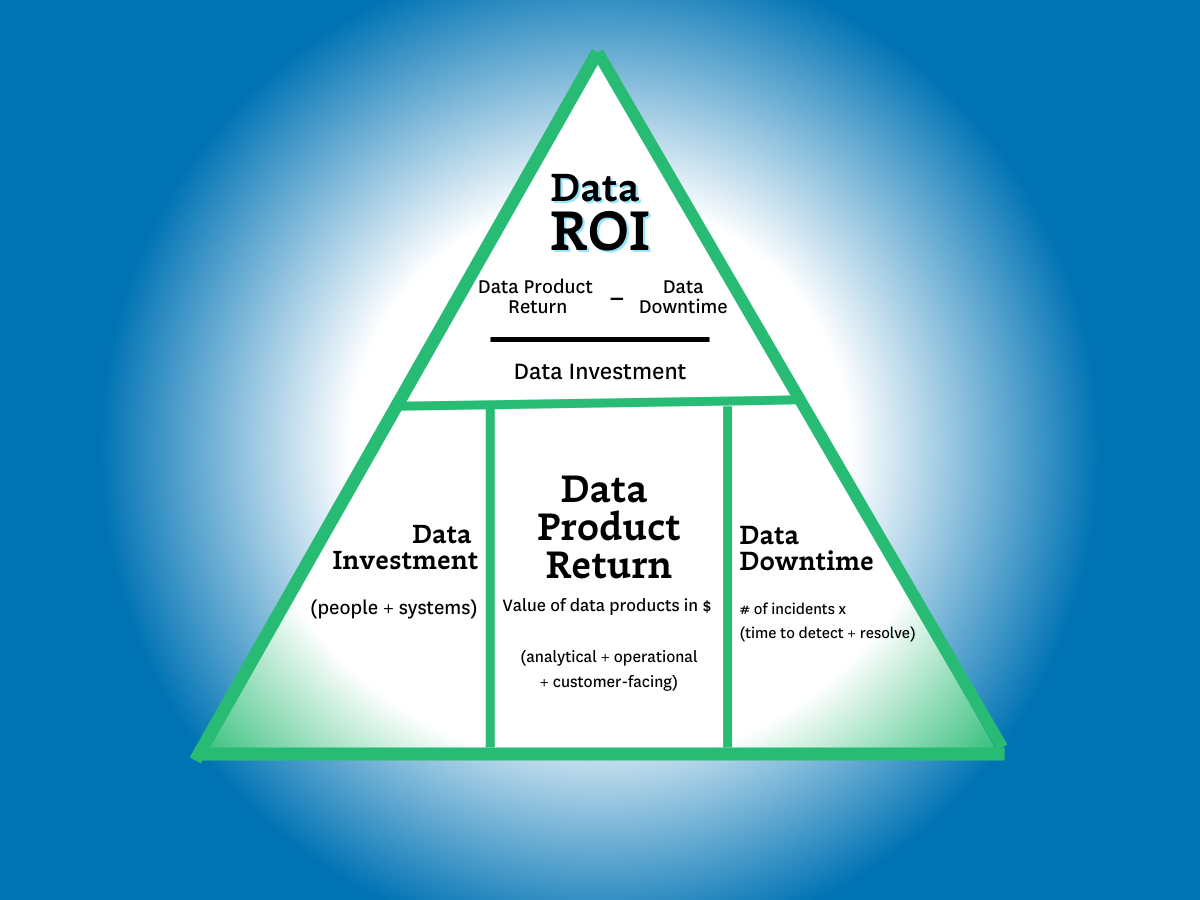

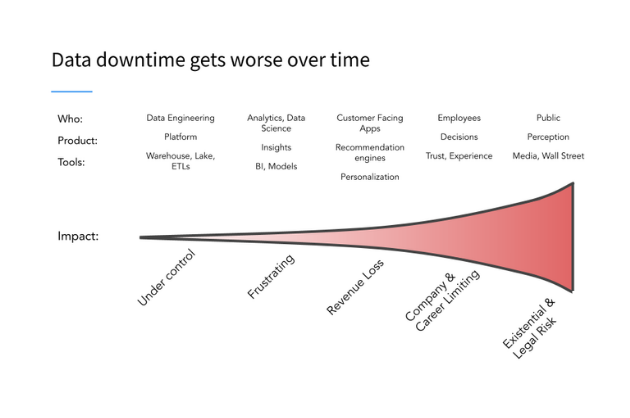

While ChatGPT ETL prompts may act as a handy sidekick for complex (or tedious) ETL tasks, there’s one pivotal element you can’t leave to AI robots: data quality.

Data quality issues aren’t limited to the ETL process—they can occur at any step of the data pipeline. Monte Carlo uses programmatic data observability to act as your data infrastructure’s guardian angel, ensuring that the information flowing through your pipeline is always clean, accurate, and reliable.

With Monte Carlo, you’ll gain insights into the health of your data from ETL and beyond to detect anomalies, resolve pipeline breaks, and prevent data quality incidents before they escalate.

Want to learn more? Contact our team to find out how data observability can support your ETL process—and beyond—to accelerate the adoption of data at your organization.

Our promise: we will show you the product.

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage