Computer Vision: Algorithms and Applications to Explore in 2024

Explore the commonly used computer vision algorithms and techniques for identifying and classifying images in real-world computer vision applications.

Computer vision is one of the fastest-growing and most compelling subfields of artificial intelligence. You have probably used computer vision applications without even knowing it. Whether it is quality control of crops through image classification or image processing for electronic deposits, computer vision techniques are transforming industries across the globe. According to Grand View Research, the computer vision market was estimated to be worth $12.2 billion by the end of 2021, growing at a CAGR of 7.3% to reach $20.05 billion by 2028.

Computer vision focuses on replicating the complex workings of the human visual system, enabling a machine to identify and process objects in videos and images much as a human being does. With advances in artificial intelligence and machine learning, and improvements in deep learning and neural networks, computer vision algorithms can process massive volumes of visual data. In specific tasks such as detecting and labeling objects, computer vision algorithms have surpassed humans in both speed and accuracy.

Computer vision algorithms find applications in various sectors such as healthcare, agriculture, automotive, and security, and ample research has gone into developing frameworks, toolkits, and software libraries in recent years. Without further ado, let's look at some of the most commonly used computer vision algorithms and applications.

Top Computer Vision Algorithms and Applications

1. SIFT Algorithm

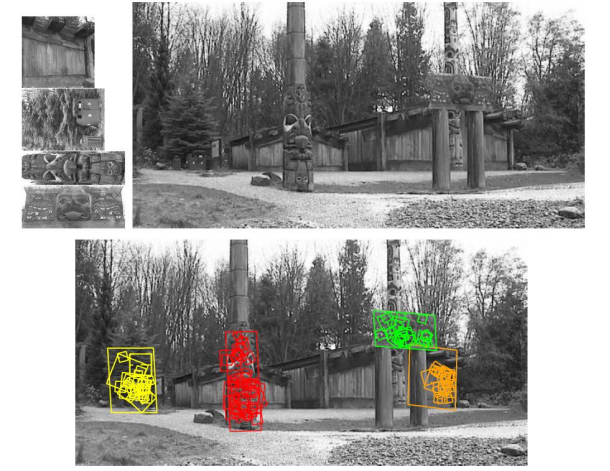

SIFT, the scale-invariant feature transform, was proposed by David Lowe of the University of British Columbia in a research paper published in 2004. The algorithm is used to detect and describe local features in a digital image. It locates key points and furnishes them with quantitative information, known as descriptors, that can be used for object detection and recognition. SIFT descriptors are invariant to image scale and rotation and are resilient to changes in illumination and viewpoint, so the same object can be matched across images that look quite different.

SIFT is a four-step computer vision algorithm:

- Scale-space Extrema Detection: In this step, the algorithm searches over all image locations and scales using a difference-of-Gaussian (DoG) function to identify potential interest points. These points are invariant to scale and orientation.

- Keypoint Localization: At each candidate location, a detailed model is fit to determine the location and scale of key points, which are selected based on measures of their stability.

- Orientation Assignment: Orientations are assigned to each keypoint based on local image gradient directions. All future operations are performed on image data that has been transformed relative to the assigned orientation, scale, and location of each feature, which makes them invariant to these transformations.

- Keypoint Descriptor: The local image gradients are measured at the selected scale around each keypoint. These gradients are transformed into a representation that allows for significant change in illumination and local shape distortion.
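As a rough illustration of the first step only, the sketch below blurs an image at two nearby scales with NumPy and subtracts the results to get a difference-of-Gaussian response. It is not a full SIFT implementation: the helper names, the scale ratio k = 1.6, and the synthetic test image are assumptions made for this example, and a real detector would search for extrema across many scales and octaves.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1-D Gaussian kernel truncated at about three standard deviations."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return kernel / kernel.sum()

def gaussian_blur(img, sigma):
    """Separable Gaussian blur: filter every row, then every column."""
    kernel = gaussian_kernel(sigma)
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="same"), 0, rows)

def difference_of_gaussians(img, sigma, k=1.6):
    """DoG response: the image blurred at scale k*sigma minus the image blurred at sigma."""
    return gaussian_blur(img, k * sigma) - gaussian_blur(img, sigma)

# Synthetic image (illustrative only): one bright 5x5 blob centered at (20, 20).
img = np.zeros((41, 41))
img[18:23, 18:23] = 1.0

dog = difference_of_gaussians(img, sigma=2.0)

# A bright blob produces a strong negative DoG response at its center,
# so the minimum of the response marks the candidate interest point.
peak = np.unravel_index(np.argmin(dog), dog.shape)
print("candidate interest point:", tuple(int(i) for i in peak))
```

In this toy case the extremum lands on the blob center. SIFT would additionally compare each sample with its neighbors in the adjacent scales before accepting it as a candidate.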

Image from Lowe’s paper: This is an example of location recognition using SIFT. The top left images are used for training. The top right image is the test image taken from a different viewpoint. The bottom image shows the detected locations of the objects in squares and parallelograms

Advantages of SIFT Algorithm

- SIFT descriptors are among the more accurate local descriptors. SIFT finds distinctive key points that are invariant to location, scale, and rotation, and robust to affine changes such as shear, which makes it well suited to object recognition.

- Locality: Features are local, so they are robust to occlusion and clutter and need no prior segmentation.

- Distinctiveness: Individual features can be matched against a large database of objects.

- Quantity: Many features can be generated even for small objects.

- Efficiency: Efficient implementations can reach close to real-time performance.

Disadvantages of SIFT Algorithm

SIFT is slower than later alternatives such as SURF, and its matching quality can drop under strong illumination changes. The main disadvantage is the high dimensionality of its descriptors (128 values per keypoint), which makes it computationally heavy.

Computer Vision Applications of SIFT

SIFT can also be used to recognize objects in 2D images, 3D reconstruction, motion tracking and segmentation of objects, image panorama stitching, and so on. Other applications include robotic mapping and navigation, video tracking, individual identification of wildlife, hand gesture recognition, etc.

Here is a link to a Python implementation of SIFT: Link. You can also use the OpenCV implementation of SIFT or the MATLAB implementation.

2. SURF Algorithm

Speeded Up Robust Features (SURF) is a patented feature detector and descriptor algorithm used in computer vision mainly for object recognition, classification, image registration, and reconstruction tasks. SURF approximates several steps of SIFT, which makes it several times faster, and its authors report no reduction in the quality of the detected points. It is based on the paper co-written by H. Bay, A. Ess, T. Tuytelaars, and L. Van Gool, who also report that SURF is more robust than SIFT against certain image transformations.

SURF is a two-step computer vision algorithm:

- Feature Extraction: Interest points in the image are selected using a Hessian matrix approximation.

- Feature Description: The SURF descriptor is created in two steps. First, we fix an orientation based on information from a circular region around the keypoint (interest point). Next, we construct a square region aligned with that orientation and extract the descriptor from it.

Where SIFT approximates the Laplacian of Gaussian with the DoG, SURF goes a step further and approximates it with box filters. Instead of Gaussian averaging the image, squares are used for the approximation. This is much faster because convolution with a box filter takes a constant number of operations once the integral image has been computed. For each selected keypoint, a neighborhood around it is selected and divided into subregions. For each subregion, the wavelet responses are computed and summarized, which gives the SURF feature descriptor. The sign of the Laplacian is already computed in the detection phase and is stored with each interest point. It distinguishes bright blobs on dark backgrounds from dark blobs on bright backgrounds. When images are compared for matching, features are only compared if they have the same type of contrast, based on the sign of the Laplacian. This allows for faster matching.
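The speed-up from the integral image can be seen in a few lines of NumPy. This is a minimal sketch of the box-sum trick only, not of SURF itself. The function names and the toy 6x6 image are assumptions made for the example.

```python
import numpy as np

def integral_image(img):
    """Summed-area table with an extra leading row and column of zeros."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.float64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def box_sum(ii, top, left, height, width):
    """Sum of img[top:top+height, left:left+width] using four lookups."""
    bottom, right = top + height, left + width
    return ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left]

# Toy image (illustrative only): the values 0..35 laid out in a 6x6 grid.
img = np.arange(36, dtype=np.float64).reshape(6, 6)
ii = integral_image(img)

fast = box_sum(ii, top=1, left=2, height=3, width=3)   # four lookups
slow = img[1:4, 2:5].sum()                             # nine additions
print(fast, slow)
```

The cost of `box_sum` does not depend on the size of the box, which is why large box filters are as cheap as small ones.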

Computer Vision Applications of SURF

You can use SURF descriptors to locate and recognize objects and people (object recognition), and for 3D reconstruction, image registration and classification, object tracking, etc.

Advantages of SURF Algorithm

SURF is faster than SIFT in real-time computer vision applications. Its descriptor also has lower dimensionality (64 values by default, against 128 for SIFT), which lowers the computation time.

Disadvantages of SURF Algorithm

SURF can be unstable under rotation and viewpoint changes. It also does not function as expected if there are illumination problems in the images.

Here is a link to the Python implementation of SURF: link, and here is a link to a simple MATLAB example to implement the SURF algorithm: link.

You can also use the OpenCV functions for SURF, which ship in the opencv-contrib modules and require a build with the non-free algorithms enabled:

surf = cv2.xfeatures2d.SURF_create()

keypoints, descriptors = surf.detectAndCompute(img, None)

3. Viola-Jones Algorithm

The Viola-Jones object detection algorithm was developed by two computer vision researchers Paul Viola and Michael Jones, in 2001 to solve the problem of face detection, but it can also be trained to detect various object classes in images in real-time. This algorithm is slow to train for a given dataset but can detect faces with impressive speed and accuracy in real-time. Viola-Jones algorithm uses Haar-like features to detect faces in images.

For a given image (the detector works on grayscale, so color images are converted first), the algorithm looks at many smaller subregions and tries to find a face by looking for specific features in each subregion. The algorithm needs to check many different scales and positions because an image can contain many faces of various sizes. The Viola-Jones algorithm has four main steps:

- Selecting Haar-like Features: The three types of Haar-like features that the Viola-Jones algorithm uses are edge features, line features, and four-rectangle features. Edge features and line features are used for detecting edges and lines, respectively. The four-rectangle features are used for finding diagonal features in the image.

- Creating an Integral Image: In an integral image, the value at each point is the sum of all pixels above and to the left of it, including the target pixel. This lets the sum over any rectangle, and therefore any Haar-like feature, be computed from a handful of lookups.

Image credits: https://github.com/sunsided/

- Running AdaBoost Training: AdaBoost learns from the face and non-face images in the training dataset which features separate the two classes best, and combines these weak classifiers into a strong one. Reducing the false positives and false negatives in this way makes the detector more precise and accurate. We get an accurate model once all candidate positions and combinations of features have been evaluated.

- Creating Classifier Cascades: Cascading is another “hack” to increase the speed and accuracy of the algorithm. All the features are grouped into several stages, and each stage consists of a strong classifier built from several features. The job of each stage is to determine whether a given sub-window in an image is definitely not a face or may be a face. A sub-window is immediately discarded as not a face as soon as it fails any stage.
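The steps above can be sketched with a toy example: an integral image, one two-rectangle (edge) feature, and a cascade that rejects a window as soon as one stage fails. This is a simplification under stated assumptions. In the real algorithm each stage is a boosted combination of many features learned by AdaBoost, whereas here each stage is a single hand-picked feature with a hypothetical threshold.

```python
import numpy as np

def integral_image(img):
    """Summed-area table with an extra leading row and column of zeros."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, top, left, h, w):
    """Sum of the pixels in a rectangle, from four integral-image lookups."""
    return ii[top + h, left + w] - ii[top, left + w] - ii[top + h, left] + ii[top, left]

def edge_feature(ii, top, left, h, w):
    """Two-rectangle Haar-like feature: lower half minus upper half."""
    half = h // 2
    upper = rect_sum(ii, top, left, half, w)
    lower = rect_sum(ii, top + half, left, half, w)
    return lower - upper

def cascade_accepts(ii, stages):
    """Reject at the first failing stage; accept only if every stage passes."""
    for top, left, h, w, threshold in stages:
        if edge_feature(ii, top, left, h, w) < threshold:
            return False
    return True

# Hypothetical stages: (top, left, height, width, threshold). Values are made up.
stages = [(0, 0, 4, 4, 10.0), (0, 0, 4, 2, 10.0)]

# Toy 4x4 windows: one with a dark upper half and bright lower half, one flat.
edge_window = np.array([[0, 0, 0, 0],
                        [0, 0, 0, 0],
                        [9, 9, 9, 9],
                        [9, 9, 9, 9]], dtype=float)
flat_window = np.full((4, 4), 5.0)

print(cascade_accepts(integral_image(edge_window), stages))  # passes both stages
print(cascade_accepts(integral_image(flat_window), stages))  # rejected at stage 1
```

Because most sub-windows in a real image look like the flat one, they are rejected after a single cheap test, which is where the cascade gets its speed.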

Computer Vision Applications of Viola-Jones Algorithm

You can build a real-time face detection system, an object tracking system, or a real-time attendance marking system from video streams using this algorithm. The Viola-Jones algorithm was among the first of its kind and laid the foundation for the field of face detection.

Advantages of Viola-Jones Algorithm

Despite being one of the first face detection frameworks, Viola-Jones is powerful. The applications built using this algorithm produce notable real-time face detection results.

Disadvantages of Viola-Jones Algorithm

The algorithm can be slow to train as the size of the training dataset increases, but the real-time face detection speed remains quite impressive.

Here’s a link to the implementation of the algorithm: link

4. Eigenfaces Approach using PCA Algorithm

Face recognition is one of the most successful and widely used applications of computer vision research. From using face recognition to unlock our phones and laptops to using face recognition as a tool to identify security threats in organizations and defense tasks, face recognition is everywhere. Let us discuss a popular face recognition technique used by computer vision researchers called ‘Eigenfaces’. Formally, we can say that face recognition is a classification task. The inputs given to the algorithm are images, and the output is a list of names of people identified by the algorithm.

Sirovich and Kirby first proposed the fundamentals of the Eigenfaces algorithm in 1987. It was later formalized by Turk and Pentland in 1991 [link]. This approach uses linear algebra concepts and dimensionality reduction to recognize faces in images. The method is easy to implement and computationally inexpensive. Hence, it is used in handwriting recognition, medical image analysis, face detection and recognition, etc. The Eigenfaces algorithm uses an unsupervised dimensionality reduction technique called principal component analysis (PCA).

The idea behind PCA is to select the hyperplane on which the data points are maximally spread out when projected onto it, that is, the axes of maximal variance. These axes are eigenvectors, a term borrowed from linear algebra, and this is where eigenfaces get their name. We compute the covariance matrix of our image data and take the eigenvectors of that matrix with the largest eigenvalues. Those are our principal axes, and we project the data onto them to reduce its dimensions.

This approach allows us to take high-dimensional data and reduce it to a lower dimension by selecting the eigenvectors of the covariance matrix with the largest eigenvalues and projecting the data onto them. Since these are the axes of maximum spread, the projection retains as much of the variance in the data as the chosen number of dimensions allows.
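A minimal NumPy sketch of this pipeline follows: compute the covariance matrix, keep the eigenvectors with the largest eigenvalues, project, and recognize by nearest neighbor in the reduced space. The function names, the nearest-neighbor matching rule, and the synthetic 64-pixel "faces" are assumptions made for the example. Real face images would be flattened grayscale photos.

```python
import numpy as np

def fit_eigenfaces(faces, n_components):
    """faces: (n_samples, n_pixels) array of flattened images."""
    mean_face = faces.mean(axis=0)
    covariance = np.cov(faces, rowvar=False)            # (n_pixels, n_pixels)
    eigvals, eigvecs = np.linalg.eigh(covariance)       # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]    # indices of the largest ones
    return mean_face, eigvecs[:, order].T               # one eigenface per row

def project(faces, mean_face, eigenfaces):
    """Coordinates of each face along the principal axes."""
    return (faces - mean_face) @ eigenfaces.T

def recognize(query, train_weights, labels, mean_face, eigenfaces):
    """Label of the training face closest to the query in eigenface space."""
    weights = project(query[None, :], mean_face, eigenfaces)
    distances = np.linalg.norm(train_weights - weights, axis=1)
    return labels[int(np.argmin(distances))]

# Synthetic stand-in data: two "people", each a base pattern plus small noise.
rng = np.random.default_rng(0)
base_a, base_b = rng.normal(size=64), rng.normal(size=64)
faces = np.stack([base_a + 0.05 * rng.normal(size=64) for _ in range(5)] +
                 [base_b + 0.05 * rng.normal(size=64) for _ in range(5)])
labels = ["a"] * 5 + ["b"] * 5

mean_face, eigenfaces = fit_eigenfaces(faces, n_components=3)
train_weights = project(faces, mean_face, eigenfaces)

query = base_b + 0.05 * rng.normal(size=64)
predicted = recognize(query, train_weights, labels, mean_face, eigenfaces)
print(predicted)
```

For real images the covariance matrix has one row per pixel and becomes very large, so practical implementations usually obtain the same axes from a singular value decomposition of the centered data instead.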

Computer Vision Applications of Eigenfaces Approach

Eigenfaces was one of the first face recognition algorithms to be used on Android. It can be used for facial emotion recognition. The eigenfaces technique is not limited to face recognition but can also be extended to handwriting recognition, lip-reading, medical image analysis in hospitals, voice recognition, sign language interpretation, hand gesture recognition, etc. Hence many prefer the term ‘eigenimage’ to ‘eigenface’.

Advantages of Eigenfaces Approach

It’s straightforward to implement this algorithm to recognize faces in videos and images.

Disadvantages of Eigenfaces Approach

For the algorithm to give accurate results, the training dataset images need properly centered faces. The algorithm is also sensitive to lighting and scaling.

5. Lucas-Kanade Algorithm

Understanding the motion of objects or object tracking in scenes is one of the key problems in computer vision research. One of the widely used techniques to solve this in computer vision is the Lucas-Kanade optical flow algorithm. This algorithm, proposed in 1981, is a simple technique used to estimate the movement of features of interest in successive images of a scene in a video. It associates a movement vector to every interesting pixel in a scene, obtained by comparing two consecutive images.

The Lucas-Kanade algorithm is based on the brightness constancy assumption: the brightness of a pixel does not change between two successive frames as it moves. It also assumes that the color of an object does not change significantly between two consecutive frames of a scene. A further assumption is that neighboring pixels belonging to the same object move in a similar way, so a single motion vector can be estimated for a small window around each pixel of interest.
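Under these assumptions, the motion (u, v) of a window satisfies Ix·u + Iy·v + It = 0 at every pixel, where Ix and Iy are the spatial gradients and It is the change between frames. Solving that system in the least-squares sense gives the flow. The sketch below does this for a single window with NumPy. The function name and the synthetic frames are assumptions made for the example, and the brightness pattern is deliberately chosen so that the linear approximation holds on the window. On real images the result is only approximate.

```python
import numpy as np

def lucas_kanade_window(frame1, frame2, window):
    """Estimate one (u, v) flow vector for the pixels selected by `window`."""
    iy, ix = np.gradient(frame1)          # gradients along rows (y) and columns (x)
    it = frame2 - frame1                  # temporal brightness change
    a = np.stack([ix[window].ravel(), iy[window].ravel()], axis=1)
    b = -it[window].ravel()
    # Least-squares solution of a @ [u, v] = b (the Lucas-Kanade normal equations).
    flow, *_ = np.linalg.lstsq(a, b, rcond=None)
    return flow

def brightness(x, y):
    """Smooth synthetic brightness pattern (illustrative only)."""
    return x ** 2 + y ** 2 + x * y

coords = np.arange(-7, 8, dtype=float)
x, y = np.meshgrid(coords, coords)        # x varies along columns, y along rows

true_u, true_v = 0.3, -0.2                # sub-pixel motion between the frames
frame1 = brightness(x, y)
frame2 = brightness(x - true_u, y - true_v)

window = (slice(2, 13), slice(2, 13))     # 11x11 neighborhood around the center
u, v = lucas_kanade_window(frame1, frame2, window)
print(round(float(u), 3), round(float(v), 3))
```

If the window contained only a straight edge, the two gradient columns would be nearly parallel and the system would be ill-conditioned, which is why trackers usually apply the method at corner-like points.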

Applications of Lucas-Kanade Algorithm

You can use this algorithm to track optical flow or layered motion in videos.

Advantages of Lucas-Kanade Algorithm

The Lucas-Kanade algorithm is easier to implement than many other object tracking algorithms. It can estimate and predict the motion of objects with good accuracy and speed. Using a fixed neighborhood size around the pixel of interest reduces the complexity of the algorithm.

Disadvantages of Lucas-Kanade Algorithm

The drawback of the Lucas-Kanade algorithm is that it doesn’t perform well with rapid motion. It works well for moderate object speeds. The algorithm also produces errors on the boundaries of moving objects in scenes.

OpenCV provides the cv.calcOpticalFlowPyrLK method, a pyramidal implementation of the LK method.

6. Kalman Filter

Obstacle detection is one of the most exciting areas of research in computer vision. It requires tracking and predicting the position of objects. The Kalman filter has long been regarded as an optimal solution in computer vision for tasks like object tracking, prediction, and correction. We see the Kalman filter in real-world applications like robotics, medical applications, defense images and videos, public and private security, and location and navigation systems.

The Kalman filter is an algorithm that can estimate and predict future positions based on past estimates of the object position. The filter is named after Rudolf Kalman, who published a paper in 1960 giving a recursive solution to the discrete-data linear filtering problem. The Kalman filter is unusual in that it is purely a time-domain filter, unlike others that are formulated in the frequency domain and then transformed into the time domain.
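The predict-and-correct cycle can be sketched in NumPy for the simplest tracking case: an object moving along one axis at roughly constant velocity, observed through noisy position measurements. The constant-velocity model, the noise settings, and the function name are assumptions made for this example rather than a prescription.

```python
import numpy as np

def kalman_track(measurements, dt=1.0, process_var=1e-4, meas_var=1.0):
    """Estimate 1-D position and velocity from noisy position measurements."""
    f = np.array([[1.0, dt], [0.0, 1.0]])    # state transition: constant velocity
    h = np.array([[1.0, 0.0]])               # only the position is observed
    q = process_var * np.eye(2)              # process noise covariance (assumed)
    r = np.array([[meas_var]])               # measurement noise covariance (assumed)
    x = np.zeros((2, 1))                     # initial state: position 0, velocity 0
    p = 1000.0 * np.eye(2)                   # large initial uncertainty
    estimates = []
    for z in measurements:
        # Predict: push the state and its uncertainty one step forward.
        x = f @ x
        p = f @ p @ f.T + q
        # Correct: blend the prediction with the new measurement.
        innovation = np.array([[z]]) - h @ x
        s = h @ p @ h.T + r
        gain = p @ h.T @ np.linalg.inv(s)
        x = x + gain @ innovation
        p = (np.eye(2) - gain @ h) @ p
        estimates.append(x.ravel().copy())
    return np.array(estimates)               # columns: position, velocity

# Simulated object moving 2 units per step, observed with Gaussian noise.
rng = np.random.default_rng(0)
true_positions = 2.0 * np.arange(1, 51)
noisy = true_positions + rng.normal(scale=0.5, size=50)

estimates = kalman_track(noisy)
print("final position and velocity estimate:", estimates[-1])
```

The filter never sees the velocity directly. It infers it from how the position measurements change, which is what makes the estimate useful for predicting where the object will be in the next frame.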

Computer Vision Applications of Kalman Filter

You can use the Kalman filter to build applications for object detection, classification of moving objects, and tracking of objects in videos. The very first application of the Kalman filter was in guided navigation for NASA’s Apollo space program. Since then, the Kalman filter has found applications in aerospace, land, and maritime navigation. It is also used in robotics for autonomous navigation of mobile robots. Road trajectory tracking and detection is another area of application for the Kalman filter.

Advantages of Kalman Filter

The Kalman filter has low computational requirements and is easy to implement. It also converges fast and gives reliable results.

Disadvantages of Kalman Filter

You can only use the standard Kalman filter for linear state transitions. It assumes that the equations of both the system model and the observation model are linear, which is not realistic in many real-life situations. It also assumes that the state is Gaussian distributed, which may not hold in real-world problems.

Here is a simple Python implementation of the Kalman filter using NumPy: Link

7. Mean Shift Algorithm

One of the most difficult yet most in-demand capabilities of computer vision is object tracking. Not only does the object need to be identified, but the identification also needs to be quick enough to render in real time while the object moves. There may also be a change in orientation or scale (due to a change in distance from the camera), which complicates the tracking task.

One of the algorithms commonly used to solve this problem is the mean shift algorithm. It works primarily on color images and can efficiently track objects in scenes. Mean shift is a statistical concept related to clustering. As a clustering algorithm, it looks for the centroids of the dense regions in the dataset. It works by repeatedly shifting each candidate centroid to the mean of the points around it until it settles on a local density peak, which is why it is also known as a mode-seeking algorithm. You need not specify the number of clusters in advance, unlike other clustering algorithms such as K-Means. The mean shift algorithm determines the number of clusters from the dataset. It is a simple algorithm to implement for object tracking but has a high computational cost.

In the mean shift method, every video frame is examined in terms of its pixel distribution. We define an initial window (a square or a circle) at a given position to locate the region of maximum pixel density. The algorithm then tracks the object by moving the window in the direction of maximum pixel density. The offset between the center of the tracking window and the centroid of the pixels inside the window gives the object’s direction of movement.
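The window update described above can be sketched with NumPy on a single frame. The sketch assumes the frame has already been turned into a 2-D map of weights that are high where pixels look like the tracked object. How that map is produced is outside the scope of the example, and the function name, window radius, and toy data are assumptions.

```python
import numpy as np

def mean_shift_window(weights, center, radius, max_iter=20, tol=1e-3):
    """Shift a square window toward the weighted centroid until it stops moving."""
    cy, cx = float(center[0]), float(center[1])
    height, width = weights.shape
    for _ in range(max_iter):
        top = max(int(round(cy)) - radius, 0)
        bottom = min(int(round(cy)) + radius + 1, height)
        left = max(int(round(cx)) - radius, 0)
        right = min(int(round(cx)) + radius + 1, width)
        patch = weights[top:bottom, left:right]
        total = patch.sum()
        if total == 0:
            break                                  # nothing to follow in this window
        ys, xs = np.mgrid[top:bottom, left:right]
        new_cy = (ys * patch).sum() / total        # centroid of the weights
        new_cx = (xs * patch).sum() / total
        moved = np.hypot(new_cy - cy, new_cx - cx)
        cy, cx = new_cy, new_cx                    # move the window to the centroid
        if moved < tol:
            break
    return cy, cx

# Toy weight map (illustrative only): the "object" is a 5x5 block centered at (26, 29).
weights = np.zeros((40, 40))
weights[24:29, 27:32] = 1.0

# Start from the object's previous position, a few pixels away.
cy, cx = mean_shift_window(weights, center=(20, 22), radius=8)
print(cy, cx)
```

The window only finds the object if the starting window overlaps it, which is one reason the result depends on the window size.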

Computer Vision Applications of Mean Shift Algorithm

The mean shift algorithm is helpful to track objects in videos with static backgrounds.

Advantages of Mean Shift Algorithm

The mean shift algorithm can find clusters of arbitrary shape, which need not be spherical as in the K-means algorithm. The mean shift method is also robust to outliers in the image data.

Disadvantages of Mean Shift Algorithm

The result of the mean shift algorithm depends on the input window size. The algorithm is also computationally expensive and does not scale well.

You can use sklearn.cluster.MeanShift from the Python scikit-learn library to implement the mean shift algorithm. Here’s a link to a simple implementation of the mean shift algorithm using Python: link

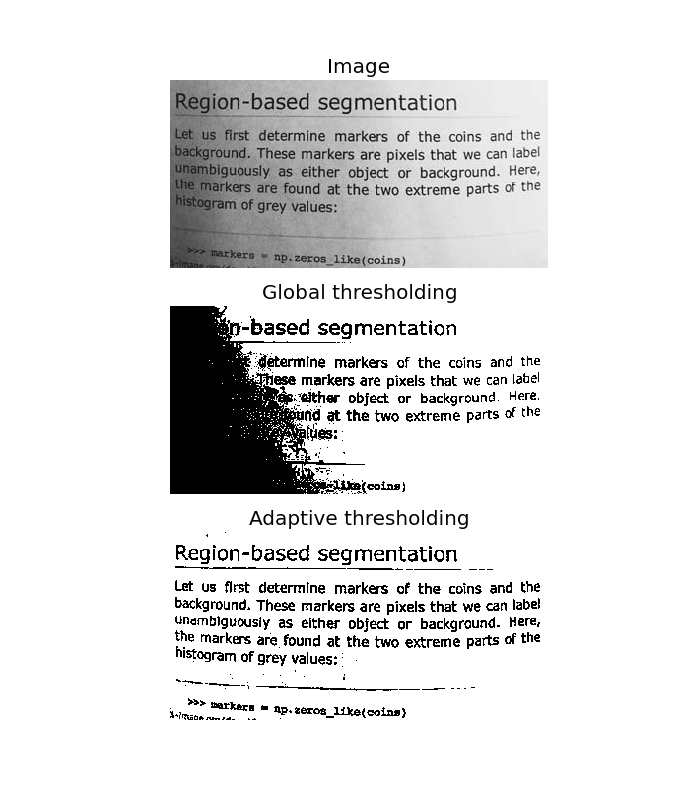

8. Adaptive Thresholding

Image thresholding, one of the key steps in image segmentation, is common in many computer vision and image processing techniques. The aim of thresholding an image is to classify its pixels as dark or light. When applying basic thresholding, we manually provide a threshold value (say T) to separate the foreground and background of the image. There is also Otsu’s thresholding, which automatically determines the optimal threshold value T for an input image. However, both techniques share a drawback: they are global thresholding methods that apply the same threshold value to all pixels in the input image. If the input image has varying lighting conditions or shadows, a single threshold value may not be optimal for segmentation.

Image source: link

Adaptive thresholding overcomes this drawback by calculating a threshold value for each pixel in the input image. For each pixel, a gray-level weighted average of the neighborhood pixels is evaluated. The average value is taken as the threshold. This highlights pixels that are different from their neighborhood instead of performing just a foreground and background segmentation.
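The difference from a global threshold shows up clearly on an image with uneven lighting. The NumPy sketch below uses a plain (unweighted) neighborhood mean as the per-pixel threshold. The function name, the block size, and the extra `margin` parameter that a pixel must exceed the local mean by are assumptions made for this example.

```python
import numpy as np

def adaptive_threshold(img, block_size=3, margin=5.0):
    """Mark pixels brighter than their local neighborhood mean by more than `margin`."""
    pad = block_size // 2
    padded = np.pad(img.astype(float), pad, mode="edge")   # repeat border pixels
    mask = np.zeros(img.shape, dtype=bool)
    for r in range(img.shape[0]):
        for c in range(img.shape[1]):
            local_mean = padded[r:r + block_size, c:c + block_size].mean()
            mask[r, c] = img[r, c] > local_mean + margin
    return mask

# Toy image (illustrative only): brightness ramps from 0 on the left to 90 on
# the right, with one small bright mark in the dark half and one in the bright half.
img = np.tile(10.0 * np.arange(10), (7, 1))
img[3, 2] += 30.0
img[3, 7] += 30.0

global_mask = img > 60.0                  # one threshold for the whole image
adaptive_mask = adaptive_threshold(img)   # one threshold per pixel

print("global threshold finds the dark-side mark:", bool(global_mask[3, 2]))
print("adaptive threshold finds both marks:",
      bool(adaptive_mask[3, 2]) and bool(adaptive_mask[3, 7]))
```

The global threshold flags the entire bright side of the ramp and misses the mark on the dark side, while the adaptive version flags only the two marks. The double loop is written for readability. Library implementations compute the local means with a filter instead.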

Computer Vision Applications of Adaptive Thresholding

Adaptive thresholding is one of the easiest and most widely used image preprocessing techniques for extracting regions of interest by segmenting images. It is used extensively in video processing, medical image analysis, geospatial image analysis, etc.

Advantages of Adaptive Thresholding

Adaptive thresholding segments regions of interest in a way that is resilient to illumination changes, which gives better segmentation results. It is also a quick and simple way to segment images.

Disadvantages of Adaptive Thresholding

Pixels included in a segmentation class may not be coherent in that region. Thresholding does not account for spatial locations of pixels; hence may group incoherent objects in the same areas, based only on the intensity of pixels.

You can use cv2.adaptiveThreshold from the OpenCV module. With scikit-image, you can use threshold_otsu and threshold_local from skimage.filters (threshold_local replaced the older threshold_adaptive function).

9. Graph Cut Algorithms

In computer vision, graph cut optimization algorithms are used to solve a variety of low-level problems such as image smoothing and image segmentation. Graph cuts serve as energy minimization tools for problems with binary and non-binary energies, and most of these are solved by solving the maximum flow problem in a graph.

Graph algorithms have been successfully applied to several computer vision and image processing problems. Here we look at how graph cut algorithms apply to the problem of image segmentation.

You can use graph cuts to divide an input image into background and foreground segments. This is done in two stages: first, we build a network flow graph based on the input image. Then a max-flow algorithm is run on the network flow graph to find the min-cut, which produces the optimal segmentation of the image.
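The two stages can be sketched in plain Python on a tiny one-dimensional "image" of four pixels. The graph construction below (source and sink link weights taken directly from the pixel intensity, a fixed smoothness weight between neighbors) and the choice of the Edmonds-Karp max-flow algorithm are assumptions made for this example. Practical systems use 2-D neighborhoods, learned or histogram-based link weights, and specialized max-flow solvers.

```python
from collections import deque

def max_flow_min_cut(capacity, source, sink):
    """Edmonds-Karp max-flow. Returns (flow value, nodes on the source side of the cut)."""
    residual = {u: dict(edges) for u, edges in capacity.items()}
    for u, edges in capacity.items():
        for v in edges:
            residual.setdefault(v, {}).setdefault(u, 0)   # reverse edges

    def reachable_from_source():
        parent = {source: None}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        return parent

    flow = 0
    while True:
        parent = reachable_from_source()
        if sink not in parent:
            return flow, set(parent)          # no augmenting path left: min cut found
        bottleneck, v = float("inf"), sink
        while parent[v] is not None:          # smallest capacity along the path
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:          # push flow along the path
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck

def segment(intensities, smoothness=2, max_value=10):
    """Stage 1: build the flow graph. Stage 2: run max-flow and read off the cut."""
    capacity = {"s": {}, "t": {}}
    for i, value in enumerate(intensities):
        capacity["s"][i] = value                              # cost of calling i background
        capacity.setdefault(i, {})["t"] = max_value - value   # cost of calling i foreground
    for i in range(len(intensities) - 1):
        capacity[i][i + 1] = smoothness                       # cost of separating neighbors
        capacity[i + 1][i] = smoothness
    flow, source_side = max_flow_min_cut(capacity, "s", "t")
    return flow, sorted(n for n in source_side if n != "s")

# Four pixels with intensities on a 0..10 scale: two bright, two dark.
cut_cost, foreground = segment([9, 8, 3, 1])
print(cut_cost, foreground)
```

Pixels that remain connected to the source after the cut are labeled foreground. The value of the max flow equals the total cost of the edges that were cut, which is the energy of the segmentation.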

Computer Vision Applications of Graph Cut Algorithms

Graph cut algorithms are extensively used in image segmentation, network flow analysis, image smoothing, social network analysis, etc. Watershed algorithms, which are also used for image segmentation and clustering, are closely related to graph cut algorithms.

Advantages of Graph Cut Algorithms

Graph cut algorithms have a low error rate and very fast inference. They work well on images with large unknown regions and can give an accurate segmentation from only two input strokes, one for the foreground and one for the background, since the basic graph cut is a binary segmentation algorithm.

Disadvantages of Graph Cut Algorithms

Graph cut algorithms cannot handle transparent or semi-transparent boundaries or sophisticated shapes, and they work on the assumption that the object’s shape in the image is smooth.

Here is a link to an image segmentation using graph cut algorithm: Link

10. YOLO Algorithm

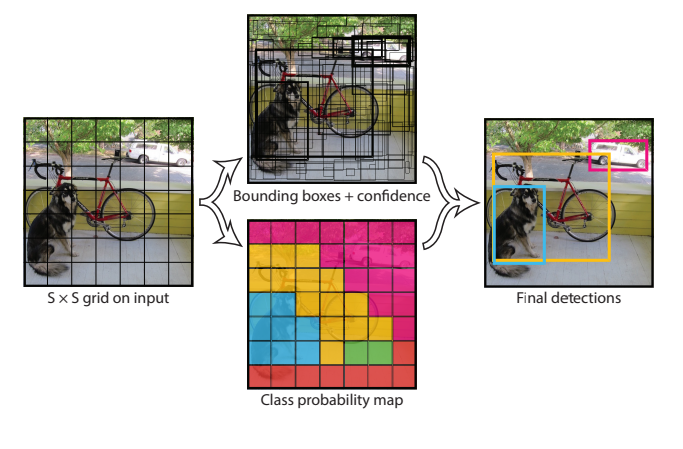

Object detection in computer vision and graphics involves detecting various objects in digital images and videos. YOLO or ‘You Only Look Once’ is an algorithm that provides real-time object detection using neural networks. This algorithm is known for its speed and accuracy. The algorithm can be used to detect people, animals, traffic signals, etc.

YOLO uses convolutional neural networks (CNNs) to perform real-time object detection. The CNN model predicts bounding boxes for the objects in an input image together with their class probabilities. As the name suggests, the algorithm requires only a single forward pass through the model to detect and classify the objects in an input image.

Image source: link

The architecture of a YOLO model is like an FCNN (fully convolutional neural network). The model passes an n×n image through an FCNN and gives an m×m grid of predictions, each consisting of bounding boxes and the class probabilities for each bounding box. To use the YOLO algorithm in your applications, you can use a YOLOv3 implementation from the available Python libraries.
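To make the m×m prediction more concrete, the sketch below decodes a small grid of predictions into boxes in image coordinates with NumPy. The tensor layout follows the original YOLO paper (each cell holds B boxes as x, y, w, h, confidence, followed by one set of class probabilities), which is an assumption here since later versions use a different layout. The function name, grid size, and numbers are made up for the example, and a real pipeline would also apply non-maximum suppression afterwards.

```python
import numpy as np

def decode_predictions(pred, num_boxes, image_size, score_threshold=0.5):
    """pred: (S, S, num_boxes * 5 + num_classes) array in the assumed layout."""
    grid = pred.shape[0]
    cell = image_size / grid
    detections = []
    for row in range(grid):
        for col in range(grid):
            class_probs = pred[row, col, num_boxes * 5:]
            for b in range(num_boxes):
                x, y, w, h, conf = pred[row, col, b * 5:(b + 1) * 5]
                scores = conf * class_probs            # class-specific confidence
                cls = int(np.argmax(scores))
                if scores[cls] < score_threshold:
                    continue
                # x, y are offsets inside the cell; w, h are fractions of the image.
                cx, cy = (col + x) * cell, (row + y) * cell
                bw, bh = w * image_size, h * image_size
                detections.append((cls, float(scores[cls]),
                                   cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2))
    return detections

# Hypothetical network output: 3x3 grid, 2 boxes per cell, 2 classes.
pred = np.zeros((3, 3, 2 * 5 + 2))
pred[1, 2, 0:5] = [0.5, 0.5, 0.2, 0.4, 0.9]   # first box of the cell in row 1, column 2
pred[1, 2, 10:] = [0.1, 0.8]                  # class probabilities for that cell

detections = decode_predictions(pred, num_boxes=2, image_size=300)
for cls, score, x1, y1, x2, y2 in detections:
    print(cls, round(score, 2), round(x1), round(y1), round(x2), round(y2))
```

Everything in this decoding step comes from a single forward pass, which is what gives the algorithm its speed.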

Computer Vision Applications of YOLO

YOLO is extensively used in autonomous vehicles to detect objects in their path efficiently.

Advantages of YOLO

YOLO is an open-source algorithm. It can process the images in videos at rates of 45 fps to 150 fps, which is faster than real time. The YOLO network can also generalize better than other CNNs. The training time for CNNs like RetinaNet is greater than for YOLO. However, the accuracy of YOLO is equal to that of RetinaNet when the training dataset is sufficiently large.

Disadvantages of YOLO

YOLO has lower recall and higher localization error than algorithms like Faster R-CNN. YOLO also does not accurately detect objects that are close to each other, since each grid cell in the image can have only two bounding boxes. YOLO also struggles to detect small objects in an image, and it may not perform well when the training dataset isn’t large enough.

Here is a link to a simple implementation of the YOLO algorithm: Link

Many computer vision problems are moving closer to being solved, owing to the exponential growth in technology and the abundant availability of data. With the progress in neural networks and deep learning, computer vision applications are already in use in medical institutes and industries and play a big part in our daily lives. We hope that this overview of some of the most used computer vision algorithms makes your journey to understand the massive world of computer vision a little easier.

About the Author

ProjectPro
