Most Essential 2023 Interview Questions on Data Engineering

Analytics Vidhya

FEBRUARY 7, 2023

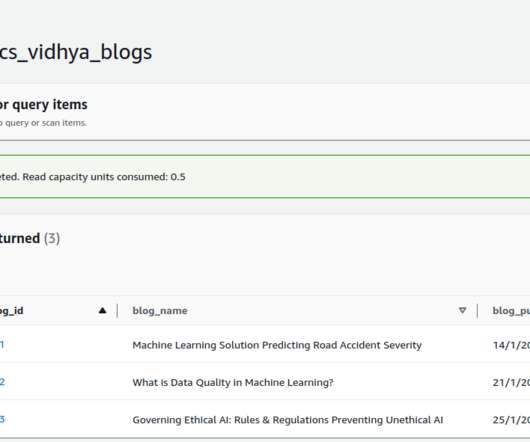

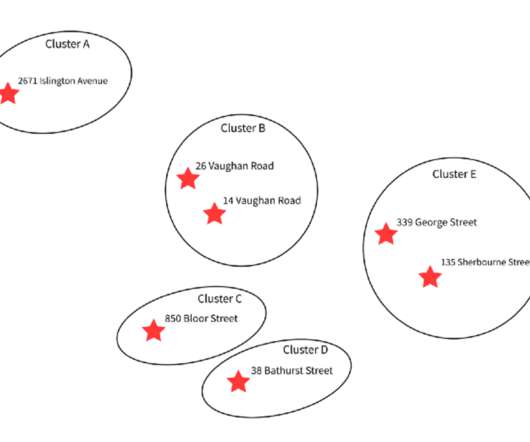

Introduction

Data engineering is the field of study that deals with the design, construction, deployment, and maintenance of data processing systems. The goal of this domain is to collect, store, and process data efficiently so that it can be used to support business decisions and power data-driven applications. This includes designing and implementing […] The post Most Essential 2023 Interview Questions on Data Engineering appeared first on Analytics Vidhya.
