The Workflow Engine For Data Engineers And Data Scientists

Data Engineering Podcast

JUNE 24, 2019

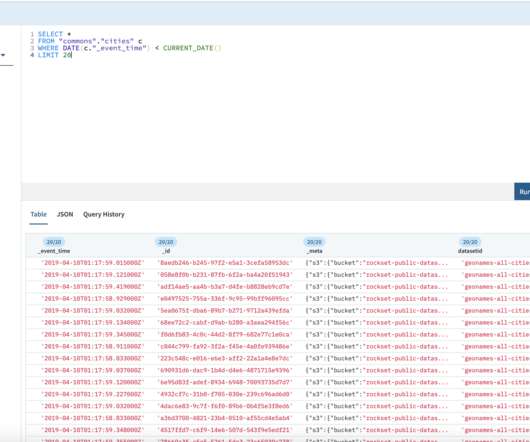

Summary: Building a data platform that works equally well for data engineering and data science is a task that requires familiarity with the needs of both roles. Data engineering platforms have a strong focus on stateful execution and tasks that are strictly ordered based on dependency graphs. Data science platforms provide an environment that is conducive to rapid experimentation and iteration, with data flowing directly between stages.
