Cloudera has been providing enterprise support for Apache NiFi since 2015, helping hundreds of organizations take control of their data movement pipelines on premises and in the public cloud. Working with these organizations has taught us a lot about the needs of developers and administrators when it comes to developing new dataflows and supporting them in mission-critical production environments.

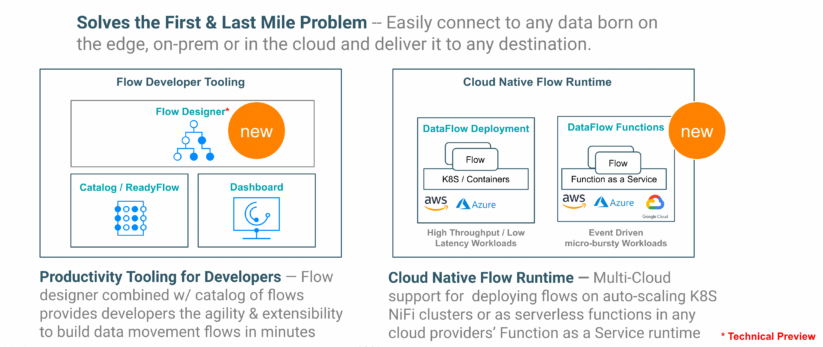

In 2021 we launched Cloudera DataFlow for the Public Cloud (CDF-PC), addressing operational challenges that administrators face when running NiFi flows in production environments. Existing NiFi users can now bring their NiFi flows and run them in our cloud service by creating DataFlow Deployments that benefit from auto-scaling, one-button NiFi version upgrades, centralized monitoring through KPIs, multi-cloud support, and automation through a powerful command-line interface (CLI). Recently, we announced the general availability of DataFlow Functions, allowing NiFi flows to be executed in serverless compute environments, such as AWS Lambda, Azure Functions, or Google Cloud Functions.

With both DataFlow Deployments and DataFlow Functions available, flow administrators can now pick the best option for running their dataflows in production in the public cloud. Now we are shifting our focus to the needs of developers and the challenges they face when building dataflows in the cloud.

Enabling self-service for developers

Developers need to onboard new data sources, chain multiple data transformation steps together, and explore data as it travels through the flow. They value NiFi’s visual, no-code, drag-and-drop UI, the 450+ out-of-the-box processors and connectors, as well as the ability to interactively explore data by starting individual processors in the flow and immediately seeing the impact as data streams through the flow.

We’ve observed organizations using more and more data sources and destinations, and expecting a more diverse range of developers to build data movement flows. This further emphasizes the need for universal developer accessibility: developer tooling that is easy for newcomers to use while still giving power users the advanced options they need. A critical aspect of universal developer accessibility is offering dataflow development as a self-service capability. This is challenging today because either developers must manage their own local Apache NiFi installation, or a platform team must manage a centralized development environment that all developers share.

What if developers did not have to manage their own Apache NiFi installation, and platform administrators did not have to take on that burden instead? What if we could provide an easy-to-manage, self-service development environment that any developer can start using immediately?

These are the questions we asked ourselves, and I am excited to announce the technical preview of DataFlow Designer, making self-service dataflow development a reality for Cloudera customers.

A reimagined visual editor to boost developer productivity and enable self service

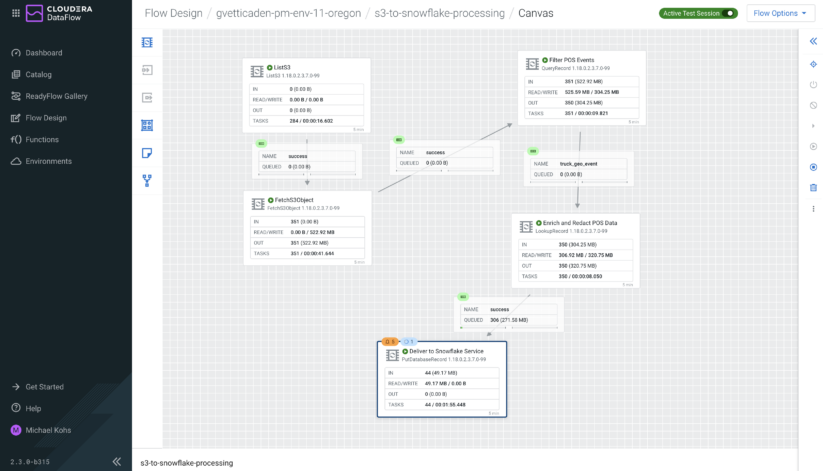

At the core of our new self-service developer experience is the new DataFlow Designer, which reinforces NiFi’s most popular features while making key improvements to the user experience—all presented in a fresh look and feel.

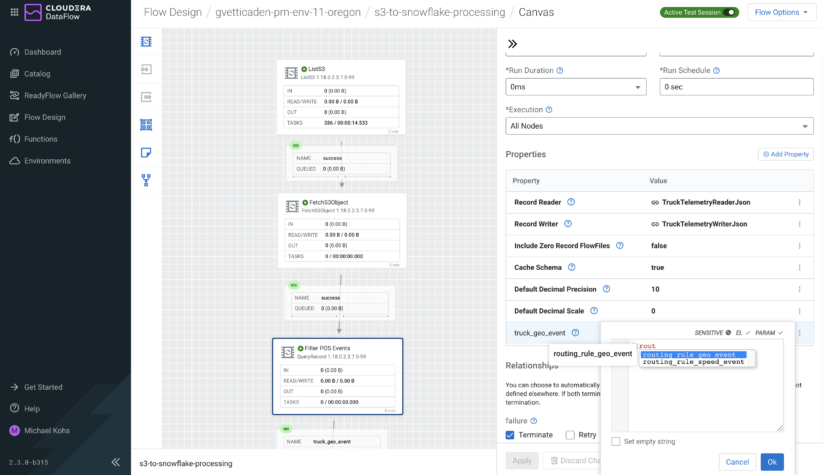

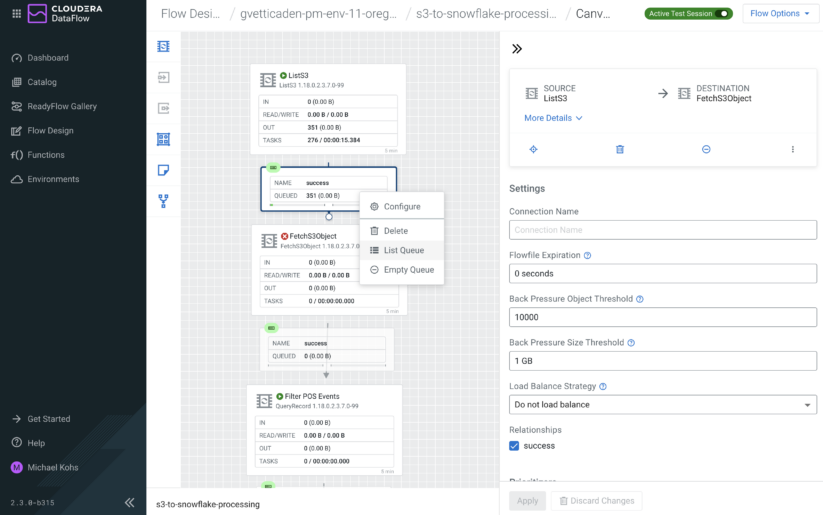

Figure 1: The Designer canvas with a brand new look and feel

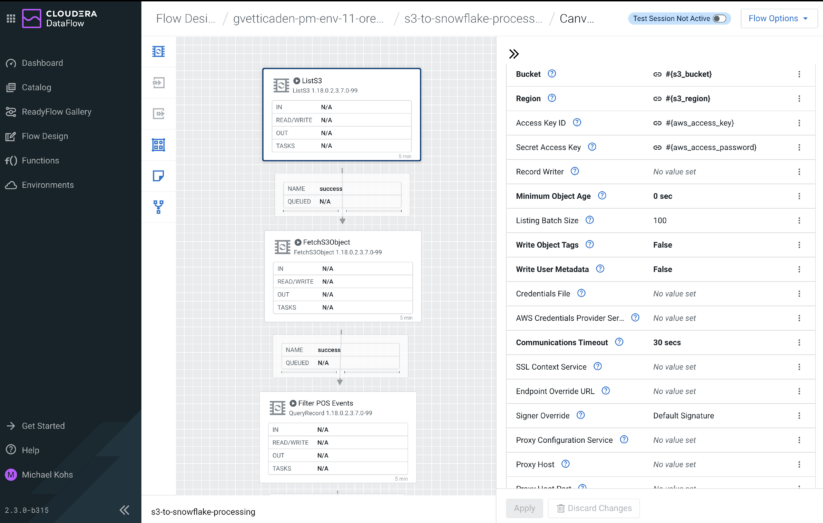

A key improvement over the traditional Apache NiFi canvas is the new expandable configuration side panel, which lets developers quickly edit processor configurations without losing sight of what’s happening on the canvas. The side panel is context-sensitive and instantly displays the relevant configuration as you navigate through your flow components.

Figure 2: Don’t lose sight of the canvas while applying configuration changes in the side panel

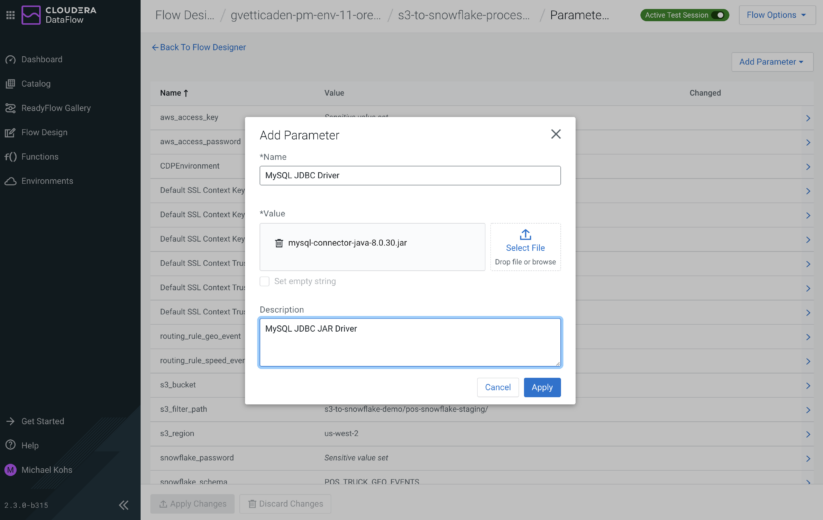

Another example of how the new Designer makes a developer’s life easier is the ability to upload files directly through the Designer UI. In traditional NiFi development environments, developers either needed SSH access to the NiFi instances to upload files or had to ask their administrators to do it for them. Being able to upload files such as JDBC drivers and Python scripts directly in the Designer makes building new flows much more self-service.

Figure 3: Easily upload files directly through the designer without requiring SSH access to servers

Parameters are an important concept for making your dataflows portable. You are most likely developing your flow against test systems, but in production it needs to run against production systems, which means your source and destination connection configuration has to change. The best way to handle this is to parameterize these connection configuration values, which lets you plug in different values when creating a flow deployment in production. You can set default values for parameters and mark them as sensitive, which ensures that no one can see the value that was set.

Figure 4: Central management of flow parameters

The Designer supports on-the-fly parameter creation when configuring components as well as auto-complete by pressing CTRL+SPACE when providing a configuration value. As a result, parameter management is always at your fingertips right where you need it without requiring you to switch between views to look them up.

Figure 5: Parameter references in the configuration panel and auto-complete
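To make the portability argument concrete, here is a minimal Python sketch of how parameter substitution behaves conceptually. It is not NiFi’s implementation or API: the `Parameter` class, the `resolve` and `display` helpers, and the hostnames are hypothetical. The one detail borrowed from NiFi is the `#{name}` syntax for referencing a parameter in a property value, and the sketch ignores NiFi’s escaping rules.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Parameter:
    # Hypothetical model of a parameter; not a NiFi class.
    value: str
    sensitive: bool = False


# NiFi-style parameter reference: #{name} (escaping is not handled here).
PARAM_REF = re.compile(r"#\{([^}]+)\}")


def resolve(property_value: str, context: dict[str, Parameter]) -> str:
    """Replace every #{name} reference with the value from the context."""
    def lookup(match: re.Match) -> str:
        name = match.group(1)
        if name not in context:
            raise KeyError(f"undefined parameter: {name}")
        return context[name].value

    return PARAM_REF.sub(lookup, property_value)


def display(context: dict[str, Parameter]) -> dict[str, str]:
    """Show parameter values as a UI might, masking sensitive ones."""
    return {n: "********" if p.sensitive else p.value for n, p in context.items()}


# The same property value is used in both environments; only the context differs.
jdbc_url_property = "jdbc:postgresql://#{db_host}:5432/#{db_name}"

test_ctx = {
    "db_host": Parameter("test-db.internal"),
    "db_name": Parameter("orders"),
    "db_password": Parameter("test-secret", sensitive=True),
}
prod_ctx = {
    "db_host": Parameter("prod-db.internal"),
    "db_name": Parameter("orders"),
    "db_password": Parameter("prod-secret", sensitive=True),
}

print(resolve(jdbc_url_property, test_ctx))  # jdbc:postgresql://test-db.internal:5432/orders
print(resolve(jdbc_url_property, prod_ctx))  # jdbc:postgresql://prod-db.internal:5432/orders
print(display(prod_ctx))
```

The flow definition never changes between environments; only the set of values supplied at deployment time does, and sensitive values are never echoed back.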

Interactivity when needed while saving costs

One of NiFi’s unique features is the ability to interact with each component in a dataflow individually without having to stop the entire flow. This allows developers to change their processing logic on the fly while running test data through the flow and validating that their changes work as intended. For example, if your dataflow reads events from a Kafka topic that you want to filter and process, but you’re not sure of the exact schema of those events, you might want to peek at the events before writing your filter condition. With NiFi, you can configure your source processor and run it independently of any other processors to retrieve data. NiFi holds the retrieved data in a queue, where you can explore the content and metadata attributes of the events. Once you know what your events look like, you can move on to the next step in your flow and define the filter condition and further processing logic. This makes it easy for developers to iterate on and validate each processing step, and to onboard new data sources they’re not familiar with.
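The step-by-step workflow described above can be modeled in a few lines of Python. This is an illustrative sketch only: `consume_sample_events` is a hypothetical stand-in for a Kafka consumer processor, the sample events and the topic attribute name are made up for illustration, and a plain `deque` plays the role of the connection queue between processors.

```python
import json
from collections import deque
from dataclasses import dataclass, field


@dataclass
class FlowFile:
    # Loosely modeled on a NiFi FlowFile: content plus key/value attributes.
    content: bytes
    attributes: dict = field(default_factory=dict)


def consume_sample_events() -> list[FlowFile]:
    # Hypothetical stand-in for a Kafka consumer processor.
    events = [
        {"type": "order", "amount": 120},
        {"type": "refund", "amount": 30},
        {"type": "order", "amount": 15},
    ]
    return [
        FlowFile(json.dumps(e).encode(), {"kafka.topic": "events"}) for e in events
    ]


# Step 1: run only the source processor; its output waits in the queue.
queue = deque(consume_sample_events())

# Step 2: peek at content and attributes without draining the queue.
for flowfile in queue:
    print(flowfile.attributes, flowfile.content.decode())


# Step 3: now that the schema is known, write the filter for the next step.
def is_large_order(flowfile: FlowFile) -> bool:
    event = json.loads(flowfile.content)
    return event["type"] == "order" and event["amount"] >= 100


large_orders = [ff for ff in queue if is_large_order(ff)]
```

The filter in step 3 is written only after step 2 has revealed the `type` and `amount` fields, which is the point of running the source processor on its own first.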

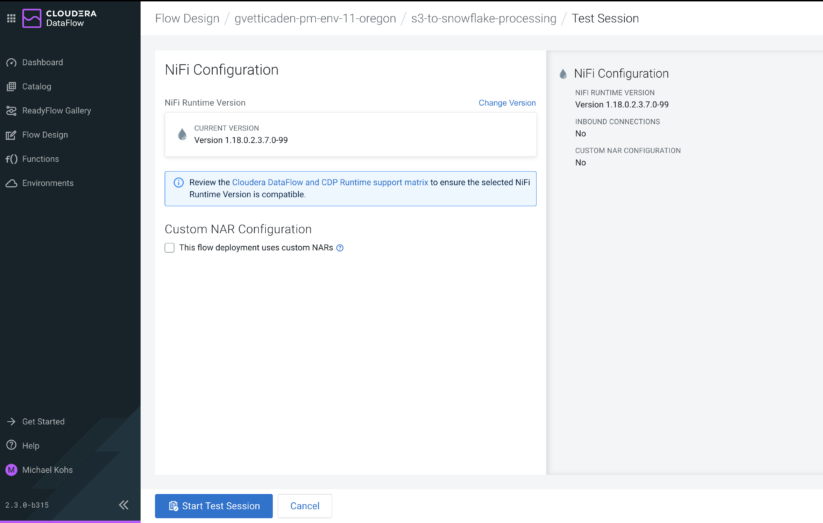

We wanted to preserve the rapid, interactive development process while keeping the cost for required infrastructure low—especially during times when developers are not working on their flows. To meet this need we’ve introduced a new concept called test sessions with the DataFlow Designer.

When a developer creates a new dataflow, they are immediately directed to the Designer and can start building their flow without having to wait for any resources to be created. They can drag and drop processors to the canvas immediately, create parameters and services, and apply configuration changes.

Figure 6: Developers can start building dataflows immediately without requiring any NiFi resources to be allocated—note the grayed out processors indicating that no test session is active

As soon as they want to run a processor and test their flow logic, they can initiate a test session, which provisions NiFi resources on the fly within minutes.

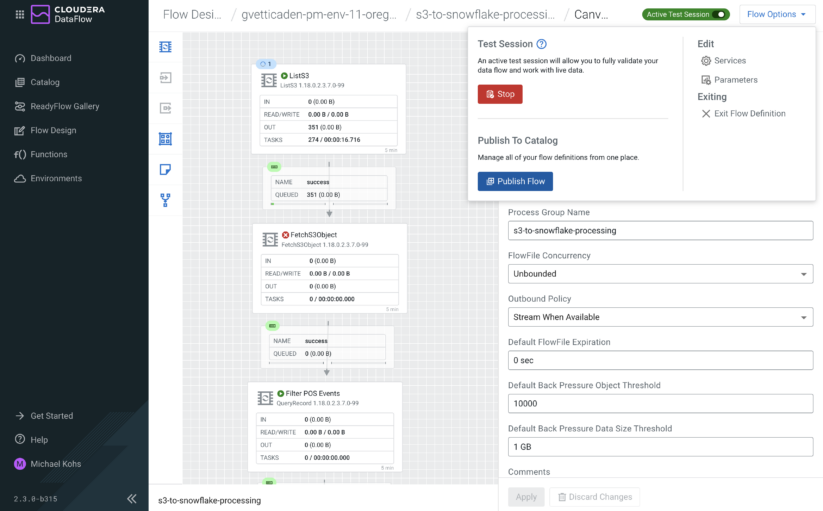

Figure 7: Test sessions provide an interactive experience that NiFi developers love

Once a test session is active, developers can start or stop individual processors and services and explore data in the flow to validate their flow design. When the test session is no longer needed, developers can terminate it, freeing up the resources and saving costs. Test sessions act like on-demand NiFi sandboxes for developers.

Figure 8: Once a test session has been started, developers can interact with processors and monitor data as it is processed by their dataflow
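The rule that a draft flow can be edited at any time, but its components can only run while a test session is active, can be sketched as a tiny state model. The `DraftFlow` class and its methods are hypothetical and do not correspond to any CDF-PC API; provisioning, which takes minutes in the real service, is reduced here to flipping a flag.

```python
class DraftFlow:
    """Hypothetical model of a draft flow and its optional test session."""

    def __init__(self) -> None:
        self.processors: dict[str, bool] = {}  # processor name -> running?
        self.session_active = False

    def add_processor(self, name: str) -> None:
        # Editing the canvas works with or without a test session.
        self.processors[name] = False

    def start_test_session(self) -> None:
        # Stand-in for provisioning NiFi resources on demand.
        self.session_active = True

    def start_processor(self, name: str) -> None:
        # Running a component requires a live test session.
        if not self.session_active:
            raise RuntimeError("start a test session before running processors")
        self.processors[name] = True

    def terminate_test_session(self) -> None:
        # Stand-in for freeing resources: everything stops, but in this
        # model the draft flow definition (its processors) is kept.
        self.processors = dict.fromkeys(self.processors, False)
        self.session_active = False


flow = DraftFlow()
flow.add_processor("ConsumeKafka")  # allowed without a session

try:
    flow.start_processor("ConsumeKafka")
    blocked = False
except RuntimeError:
    blocked = True  # refused: no session yet

flow.start_test_session()
flow.start_processor("ConsumeKafka")  # now the processor can run
flow.terminate_test_session()  # resources freed, flow definition kept
```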

A streamlined deployment process from development to production

Developing and testing dataflows is the first step in the dataflow life cycle, and it needs to integrate well with deploying and monitoring those dataflows in production environments. With the Designer now available in CDF-PC, we can support flow developers and flow administrators alike through a streamlined process.

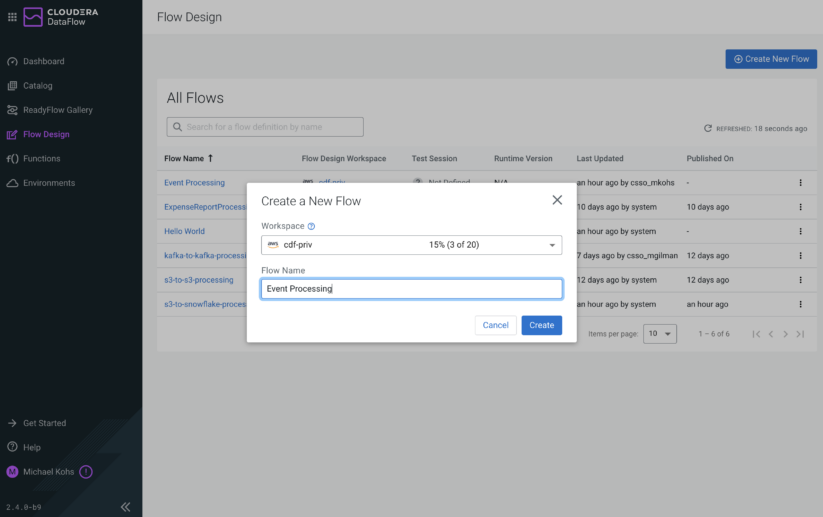

Figure 9: Developers can create new draft flows as needed

Developers create draft flows, build them out, and test them with the Designer before publishing them to the central DataFlow catalog. Once a flow is in the catalog, flow administrators can deploy it in their cloud provider of choice (AWS or Azure) and benefit from the features mentioned earlier: auto-scaling, one-button NiFi version upgrades, centralized monitoring through KPIs, and automation through a powerful CLI.

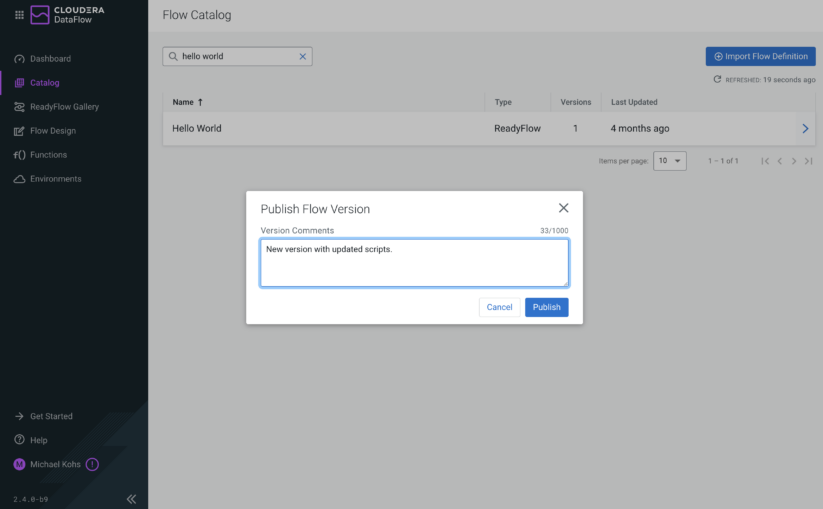

Figure 10a: Once a draft flow has been validated using a test session, developers can publish it to the DataFlow catalog for production deployments

Figure 10b: As part of the publication step, developers can leave comments and are redirected to the catalog from where they can initiate a deployment

Looking ahead and next steps

The DataFlow Designer technical preview represents an important step toward our vision of a cloud-native service that organizations can use for all their data distribution needs and that is accessible to any developer, regardless of their technical background. Cloudera DataFlow for the Public Cloud (CDF-PC) now covers the entire dataflow lifecycle, from developing new flows with the Designer, through testing them, to running them in production using DataFlow Deployments or DataFlow Functions, depending on the use case.

Figure 11: Cloudera DataFlow for the Public Cloud (CDF-PC) enables Universal Data Distribution

The DataFlow Designer is now available to CDP Public Cloud customers as a technical preview. Please reach out to your Cloudera account team or to Cloudera Support to request access.

Stay tuned for more information as we work toward making the DataFlow Designer generally available to CDP Public Cloud customers. In the meantime, sign up for our upcoming DataFlow webinar or check out the DataFlow Designer technical preview documentation.