Your 101 Guide to Data Augmentation Techniques

Explore various data augmentation techniques for all kinds of data: images, texts, audio, and other data formats.| ProjectPro

Data scientists and machine learning engineers often face a scenario where the data available for their project is not sufficient to train a machine learning model, which often results in poor performance. This is particularly true for complex deep learning models, which require large amounts of data to perform well. However, collecting and annotating large amounts of data is not always possible, and it is expensive and time-consuming. Bid goodbye to such worries with this blog, which covers an effective solution to the problem of limited training data for machine learning and deep learning models. Let's dive into the world of data augmentation, which addresses this challenge by artificially increasing the size of the training data through transformations applied to the existing data.


With a humongous amount of data generated every second, collecting high-quality, relevant data to train machines has become tedious. That is why data scarcity has become a significant problem, particularly in research domains like healthcare and finance, where data is confidential or not easily accessible to the machine learning professionals who want to leverage it. Given enough training data, machine learning models can solve challenging problems well. However, access to quality data remains a barrier for many real-world machine learning projects.

When an ML model is trained on a limited number of examples, it tends to overfit. Overfitting occurs when a model yields accurate results on the training examples but not on unseen data. It can be mitigated in many ways, for instance, by choosing another algorithm, tuning the hyperparameters, or changing the model architecture. Ultimately, though, the most important countermeasure against overfitting is adding more, better-quality data to the training dataset.

One solution to such problems is data augmentation, a technique for creating new training samples from existing ones. Data augmentation can be applied to all types of input data, like image datasets, text data, audio data, and even multi-modal data. Data augmentation effectively enhances the accuracy and performance of machine learning and deep learning models in scenarios where the dataset size is not large enough.

The above image depicts how modifying various aspects of the same image can generate new augmented images, on which we can train our model. Various elements like color, size, orientation, and background of all the generated images appear different and are new data to the input dataset.

What is Data Augmentation in Deep Learning?

Data collection and labeling (annotation) can be time-consuming and expensive for deep learning models. That is where data augmentation comes into play. When image manipulations such as random cropping, flipping, rotation, and scaling are applied to the original images to produce new training samples, we say that the new data was generated by data augmentation.

Data augmentation is the process of artificially increasing the amount of data by generating new data points from existing data to improve model performance. It involves augmenting the given datasets by making small changes to them or using machine learning models to create new data points in the latent space of the original data.

When we incorporate Generative Adversarial Networks (GANs) to create data augmentations, the deep learning model is said to be trained on synthetic data as the training dataset was created by a GAN model, not by manipulating original images.

In deep learning, data augmentation techniques are used to improve the performance of computer vision applications such as image classification, image recognition, and object detection. The models behind such applications are expected to remain accurate regardless of transformations of the input, such as rotation, magnification, or blur. Data augmentation methods are also used to improve the performance of natural language processing (NLP) applications.

Besides the meaning of data augmentation in deep learning, it is crucial to understand the significance of data augmentation methods. Let us explore that!

Using object detection and image classification, computer vision algorithms can identify and classify different objects in images. This evolving field uses data augmentation to build better-performing models. The models must identify objects under all conditions, whether the objects are rotated, magnified, or hazy, so researchers needed a way to artificially add training data with realistic alterations. Natural language processing also leverages this technique to produce augmented data.


Why is Data Augmentation Important in Deep Learning?

Deep learning models are trained on large amounts of data, which can come in any form. When such models are trained on too little data, overfitting is a common problem, and the model then performs poorly on new inputs at prediction time. It therefore becomes necessary to apply effective augmentation techniques to the training set so that the model performs well on test data. Data augmentation is an apt method for training deep neural networks to perform computer vision tasks like object detection and image recognition.

In summary, using data augmentation has many advantages, as follows:

1. Model predictions are more accurate because data augmentation helps the model generalize to previously unseen samples.

2. The model has more data from which to learn its parameters. This is crucial in applications where data collection is difficult.

3. Data augmentation increases data diversity, which helps prevent model overfitting.

4. It saves time when collecting more data would be slow. Additionally, it reduces cost in cases where collecting large amounts of data is expensive.

These advantages will be easier to understand once you explore the data augmentation techniques in the next section.


Data Augmentation Techniques

Several data augmentation techniques are used for different data formats, such as text, images, audio, and video. Let us explore those techniques in detail.

Data Augmentation Techniques for Audio Data

Audio data consists of waves and signals that vary in frequency, pitch, speed, tune, beat, and wavelength. Time shifting, pitch shifting, and speed changes can be applied to generate synthetic audio data. NumPy provides an easy way to implement noise injection and time shifting in Python. Another package, Librosa (a Python library for music and audio analysis), helps manipulate pitch and speed.
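The following minimal NumPy sketch shows two of these operations, time shifting and a naive speed change, on a 1-D waveform. The function names and parameters are illustrative and do not come from any library. The speed change resamples by linear interpolation, so it also shifts pitch, much like playing a tape faster. If you need to change speed and pitch independently, Librosa provides `librosa.effects.time_stretch` and `librosa.effects.pitch_shift`.

```python
import numpy as np


def time_shift(signal: np.ndarray, shift: int) -> np.ndarray:
    """Shift a 1-D waveform by `shift` samples, filling the gap with silence.

    A positive shift delays the audio; a negative shift advances it.
    """
    shifted = np.zeros_like(signal)
    if shift > 0:
        shifted[shift:] = signal[:-shift]
    elif shift < 0:
        shifted[:shift] = signal[-shift:]
    else:
        shifted[:] = signal
    return shifted


def change_speed(signal: np.ndarray, rate: float) -> np.ndarray:
    """Play the waveform `rate` times faster (rate > 1) or slower (rate < 1).

    Naive resampling by linear interpolation: duration and pitch change together.
    """
    n_out = int(round(len(signal) / rate))
    old_positions = np.arange(len(signal))
    new_positions = np.linspace(0, len(signal) - 1, n_out)
    return np.interp(new_positions, old_positions, signal)


# Usage: a 1-second, 440 Hz test tone sampled at 16 kHz (stand-in for real audio).
sr = 16_000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440 * t)

rng = np.random.default_rng(0)
shifted = time_shift(tone, int(rng.integers(-sr // 10, sr // 10)))  # up to +/-0.1 s
faster = change_speed(tone, 1.25)  # 0.8 s long
slower = change_speed(tone, 0.8)   # 1.25 s long
```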

Noise can also be added to the training set as an augmentation technique to increase the amount of data. Injecting noise into existing data is therefore another way to produce synthetic data for training models, which helps reduce overfitting.
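Here is a minimal NumPy sketch of noise injection. It assumes the waveform is a float array. The `add_noise` helper and its `snr_db` parameter are illustrative names. Scaling the noise to a target signal-to-noise ratio (SNR) is one common choice, but not the only one.

```python
import numpy as np


def add_noise(signal: np.ndarray, snr_db: float, rng: np.random.Generator) -> np.ndarray:
    """Inject white Gaussian noise so the result has roughly the requested SNR (dB)."""
    signal_power = np.mean(signal ** 2)
    # SNR_dB = 10 * log10(P_signal / P_noise)  =>  P_noise = P_signal / 10**(SNR_dB / 10)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise


sr = 16_000
tone = np.sin(2 * np.pi * 440 * np.arange(sr) / sr)  # stand-in for a real recording
rng = np.random.default_rng(42)

# Several noisy copies of one clip, each usable as a new training example.
noisy_copies = [add_noise(tone, snr_db, rng) for snr_db in (30, 20, 10)]
```

Lower SNR values give noisier copies. A range of SNRs, as above, exposes the model to several noise levels.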

Image by Edward Ma


Data Augmentation Techniques for Image Data

Various image processing techniques are used to create augmented image datasets in the computer vision domain. Image augmentation can be applied to input images using image manipulation methods, such as those in OpenCV. Examples include random cropping, horizontal and vertical flipping (fixed or random), other geometric transformations, color space transformations, and similar common image transformations that create new synthetic images artificially.

For example, by copying an image and flipping the copy horizontally, we can double the number of training examples derived from that image. Other transformations, such as rotation, cropping, zooming, and panning, can also be applied to the original data. Each one changes the pixel values of the original image to produce a new example. We can also combine transformations to expand our collection of training examples further.
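Here is a minimal NumPy sketch of flipping and combining transformations. It assumes images are stored as arrays of shape (height, width, channels). Flipping every image doubles a batch, and combining a flip with 90-degree rotations yields eight variants of each image. The helper names are illustrative.

```python
import numpy as np


def double_with_flips(images: np.ndarray) -> np.ndarray:
    """Return the original batch plus a horizontally flipped copy of each image.

    `images` has shape (N, H, W, C); flipping reverses the width axis.
    """
    flipped = images[:, :, ::-1, :]
    return np.concatenate([images, flipped], axis=0)


def flip_rotate_variants(image: np.ndarray) -> list:
    """Combine a horizontal flip with 0/90/180/270-degree rotations: 8 variants."""
    variants = []
    for base in (image, image[:, ::-1, :]):
        for k in range(4):
            variants.append(np.rot90(base, k=k, axes=(0, 1)))
    return variants


rng = np.random.default_rng(0)
batch = rng.random((5, 32, 32, 3))          # five stand-in RGB images in [0, 1]
augmented = double_with_flips(batch)        # shape (10, 32, 32, 3)
variants = flip_rotate_variants(batch[0])   # 8 distinct views of one image
```

For square images all eight variants keep the same shape. For non-square images, the 90- and 270-degree rotations swap height and width.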

Data augmentation need not be limited to geometric operations on image dimensions. Noise addition, changes in color settings, and other effects such as blurring and sharpening filters also help transform existing training samples into new data. Data augmentation can be used in Convolutional Neural Networks (CNNs) and other types of neural networks.
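The NumPy sketch below shows three non-geometric transformations of this kind: a brightness shift, a simple box blur, and sharpening by unsharp masking. It assumes float images in [0, 1] with shape (height, width, channels). These are illustrative implementations; image libraries such as OpenCV provide optimized equivalents.

```python
import numpy as np


def adjust_brightness(image: np.ndarray, delta: float) -> np.ndarray:
    """Shift all pixel values by `delta`, keeping them in [0, 1]."""
    return np.clip(image + delta, 0.0, 1.0)


def box_blur(image: np.ndarray, k: int = 3) -> np.ndarray:
    """Average each pixel over its k x k neighbourhood (k odd; edges replicated)."""
    pad = k // 2
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = image.shape[:2]
    out = np.zeros(image.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def sharpen(image: np.ndarray, amount: float = 1.0) -> np.ndarray:
    """Unsharp masking: add back the detail that blurring removes."""
    detail = image - box_blur(image)
    return np.clip(image + amount * detail, 0.0, 1.0)


rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))  # stand-in RGB image in [0, 1]
new_samples = [
    adjust_brightness(image, 0.2),   # lighter
    adjust_brightness(image, -0.2),  # darker
    box_blur(image),
    sharpen(image),
]
```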

A few more examples of data augmentation techniques for images are:

Center crop: Crops the center of the given image. The output size is a user-specified parameter.

Random crop: Crops the given image at a random position.

Random vertical flip: Randomly flips the image vertically (upside down) with the given probability.

Random horizontal flip: Randomly flips the given image horizontally with the given probability.

Random rotation: Rotates the image by a random angle drawn from a specified range.

Resize: Resizes the input image to a specific size.

Random affine: Applies a random affine transformation to the image while keeping its center fixed.

Brightness: One way to augment an image is by changing its brightness. The resulting image is darker or lighter than the original.

Contrast: Contrast is the degree of separation between an image's darkest and brightest areas. Changing the contrast produces a new variant of the image.

Saturation: Saturation is the intensity of the colors in an image. Increasing it makes colors more vivid, while decreasing it moves the image toward grayscale.
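Several of these operations can be sketched in a few lines of NumPy. The sketch assumes float RGB images in [0, 1] with shape (height, width, 3). The names mirror the list above, but they are illustrative helpers, not a specific library's API. The saturation helper uses the common 0.299/0.587/0.114 luma weights to compute grayscale.

```python
import numpy as np


def center_crop(image, size):
    """Crop a (th, tw) window from the middle of the image."""
    h, w = image.shape[:2]
    th, tw = size
    top, left = (h - th) // 2, (w - tw) // 2
    return image[top:top + th, left:left + tw]


def random_crop(image, size, rng):
    """Crop a (th, tw) window at a random position."""
    h, w = image.shape[:2]
    th, tw = size
    top = rng.integers(0, h - th + 1)
    left = rng.integers(0, w - tw + 1)
    return image[top:top + th, left:left + tw]


def random_vertical_flip(image, p, rng):
    """Flip the image upside down with probability p."""
    return image[::-1] if rng.random() < p else image


def adjust_contrast(image, factor):
    """Scale pixel distances from the mean intensity (factor > 1 adds contrast)."""
    mean = image.mean()
    return np.clip(mean + factor * (image - mean), 0.0, 1.0)


def adjust_saturation(image, factor):
    """Blend with the grayscale version (factor 0 = grayscale, > 1 = more vivid)."""
    gray = (image @ np.array([0.299, 0.587, 0.114]))[..., np.newaxis]
    return np.clip(gray + factor * (image - gray), 0.0, 1.0)


rng = np.random.default_rng(0)
image = rng.random((64, 48, 3))  # stand-in RGB image
samples = [
    center_crop(image, (32, 32)),
    random_crop(image, (32, 32), rng),
    random_vertical_flip(image, 0.5, rng),
    adjust_contrast(image, 1.5),
    adjust_saturation(image, 0.0),  # grayscale version
]
```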

Data Augmentation Techniques for Textual Data and Other forms of Data

In the natural language processing (NLP) domain, we deal with text data that usually contains redundant information, such as stop words or special characters, which is removed during preprocessing. The data that remains is sometimes too small or too low in quality to train a model appropriately. To solve this problem, one can use data augmentation techniques for text data.

Below are different techniques for augmenting text data, which are as follows:

1. Replacing some words with their synonyms.

2. Replacing some words with words having similar (cosine similarity-based) word embeddings (such as word2vec or GloVe) to these words.

3. Replacing words with context-appropriate alternatives predicted by Transformer models such as BERT.

4. Using back-translation: a sentence is translated into another language and then translated back into the original language. This often changes some of its words while keeping the meaning.

All these text data augmentation techniques aim to generate new text from the same source that has the same meaning and conveys the same information, while being treated as different training examples by the language model.
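Here is a minimal sketch of techniques 1 and 2 that assumes whitespace-tokenized text. The synonym table and the 3-dimensional embeddings are hypothetical toy data made up for illustration. In practice they would come from a thesaurus and from pretrained word2vec or GloVe vectors. Techniques 3 and 4 need a pretrained Transformer or translation model, so they are not shown.

```python
import random

import numpy as np

# Hypothetical toy synonym table; a real project might use a thesaurus such as WordNet.
SYNONYMS = {
    "quick": ["fast", "speedy"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}

# Hypothetical 3-d vectors; real embeddings (word2vec, GloVe) have hundreds of dimensions.
EMBEDDINGS = {
    "king": np.array([0.90, 0.80, 0.10]),
    "queen": np.array([0.85, 0.82, 0.15]),
    "apple": np.array([0.10, 0.20, 0.90]),
    "banana": np.array([0.12, 0.18, 0.88]),
}


def synonym_replacement(sentence: str, n: int, rng: random.Random) -> str:
    """Technique 1: replace up to n words that have an entry in SYNONYMS."""
    words = sentence.split()
    candidates = [i for i, w in enumerate(words) if w.lower() in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        words[i] = rng.choice(SYNONYMS[words[i].lower()])
    return " ".join(words)


def cosine_similarity(u: np.ndarray, v: np.ndarray) -> float:
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def nearest_neighbour(word: str) -> str:
    """Technique 2: the vocabulary word whose embedding is most similar to `word`'s."""
    vec = EMBEDDINGS[word]
    others = [w for w in EMBEDDINGS if w != word]
    return max(others, key=lambda w: cosine_similarity(vec, EMBEDDINGS[w]))


def embedding_replacement(sentence: str) -> str:
    """Replace every word that has an embedding with its nearest neighbour."""
    return " ".join(nearest_neighbour(w) if w in EMBEDDINGS else w for w in sentence.split())


rng = random.Random(0)
print(synonym_replacement("the quick dog is happy", 2, rng))
print(embedding_replacement("the king ate the apple"))  # the queen ate the banana
```

Embedding neighbours are not always true synonyms. For example, "king" and "queen" are close but not interchangeable, so embedding-based replacements can change the meaning and may need filtering.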

Advanced Data Augmentation Techniques

One can leverage advanced machine learning/deep learning models for creating augmented datasets. A few examples are:

Adversarial Training/Adversarial Machine Learning

Adversarial attacks are imperceptible (pixel-level) changes to an image that can completely change a model's predictions. Adversarial training counters this problem by perturbing training images until the deep learning model is tricked into misclassifying them. These perturbed (augmented) images are then added to the training examples, making the model more robust against adversarial attacks.
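The perturbation step can be illustrated with the Fast Gradient Sign Method (FGSM). FGSM is one common way to craft adversarial examples, and it is used here as a concrete example, not as the specific method described in this article. To keep the sketch self-contained, it attacks a hypothetical logistic-regression classifier with random weights. For this model, the gradient of the loss with respect to the input is (p - y) * w. With a deep network you would obtain that gradient through automatic differentiation instead, and for images you would also clip the result to the valid pixel range.

```python
import numpy as np


def sigmoid(z):
    return 0.5 * (1.0 + np.tanh(0.5 * z))  # numerically stable logistic function


def logistic_loss(x, y, w, b):
    """Per-sample binary cross-entropy of a logistic-regression model."""
    p = sigmoid(x @ w + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))


def fgsm(x, y, w, b, epsilon):
    """Move each input by epsilon in the sign of the loss gradient w.r.t. the input."""
    p = sigmoid(x @ w + b)
    grad_x = (p - y)[:, np.newaxis] * w[np.newaxis, :]  # d loss / d x
    return x + epsilon * np.sign(grad_x)


rng = np.random.default_rng(0)
w, b = rng.normal(size=8), 0.1          # hypothetical "trained" weights
x = rng.normal(size=(100, 8))           # 100 samples, 8 features
y = (x @ w + b > 0).astype(float)       # labels the model currently gets right

x_adv = fgsm(x, y, w, b, epsilon=0.1)

# Adversarial training: add the perturbed copies (with their true labels) to the data.
x_train = np.concatenate([x, x_adv])
y_train = np.concatenate([y, y])
```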

Below is an example of images from the Brain Tumour MRI dataset after image augmentation techniques were applied to the input image. Notice that each augmented image varies in its appearance.

Generative adversarial networks (GANs)

Images synthesized by a GAN are used as augmented images for input to the model. This approach requires training a generator and a discriminator, plus a classifier depending on your use case. The generator's task is to generate fake data, that is, new or augmented data, by incorporating feedback from a discriminator that learns to tell actual data from generated data. The drawback of GANs is that they are computationally expensive and require considerable effort to train. In the image below, you can see a CT scan image produced by CycleGAN, a variant of the GAN. GAN-generated CT scan images like this are used to augment datasets in the medical field. Once the dataset is created, it can be used for classification and other tasks.
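The deliberately tiny NumPy GAN below makes the generator/discriminator feedback loop concrete. It is a sketch, not a practical augmentation pipeline. The "real data" are hypothetical 1-D samples from a normal distribution. The generator is a linear map a * z + b of random noise z, and the discriminator is a logistic model on the features x and x**2. Gradients are derived by hand. All names and hyperparameters are illustrative, and as with real GANs, training is not guaranteed to converge.

```python
import numpy as np


def sigmoid(s):
    return 0.5 * (1.0 + np.tanh(0.5 * s))  # numerically stable logistic function


def train_toy_gan(real_mean=3.0, real_std=0.5, steps=2000, batch=128, lr=0.01, seed=0):
    rng = np.random.default_rng(seed)
    a, b = 1.0, 0.0            # generator: G(z) = a * z + b
    w1, w2, c = 0.0, 0.0, 0.0  # discriminator logit: s(x) = w1 * x + w2 * x**2 + c

    def disc(x):
        """Discriminator's probability that x is real (reads current w1, w2, c)."""
        return sigmoid(w1 * x + w2 * x ** 2 + c)

    for _ in range(steps):
        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        x_real = rng.normal(real_mean, real_std, batch)
        x_fake = a * rng.normal(size=batch) + b
        g_real = disc(x_real) - 1.0  # d loss / d s on real samples
        g_fake = disc(x_fake)        # d loss / d s on fake samples
        w1 -= lr * (np.mean(g_real * x_real) + np.mean(g_fake * x_fake))
        w2 -= lr * (np.mean(g_real * x_real ** 2) + np.mean(g_fake * x_fake ** 2))
        c -= lr * (np.mean(g_real) + np.mean(g_fake))

        # Generator step: minimise -log D(G(z)), i.e. use the discriminator's
        # feedback to make fake samples look more "real".
        z = rng.normal(size=batch)
        x_fake = a * z + b
        g_x = (disc(x_fake) - 1.0) * (w1 + 2.0 * w2 * x_fake)  # d loss / d x
        a -= lr * np.mean(g_x * z)
        b -= lr * np.mean(g_x)
    return a, b


a, b = train_toy_gan()
synthetic = a * np.random.default_rng(1).normal(size=1000) + b  # new 1-D "samples"
```

Image GANs follow the same two-step loop. The difference is that both players are deep convolutional networks trained by automatic differentiation, which is where the high resource cost mentioned above comes from.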

Neural Style Transfer-based augmentation

Neural Style Transfer is a deep learning technique that applies the style of one image to the content of another image, producing a new, artistic image. It is closely tied to transfer learning, since it typically reuses networks pretrained on other tasks as feature extractors. It can be implemented with Generative Adversarial Networks (GANs), Visual Geometry Group (VGG) networks, and other Convolutional Neural Networks (CNNs) such as Inception-v3, ResNet, and their variants.

Here, a series of already-trained convolutional layers is used to decompose the image, allowing us to separate content and style. After separation, the content of one image is combined with the style of another image to create a stylized image. The content remains the same while the style changes. This makes a model trained on such images more robust, because it learns to work regardless of image style.
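The classic formulation of neural style transfer makes the content/style split concrete in two ways. Content is compared through raw feature maps, and style through Gram matrices, which hold the channel-to-channel correlations of those feature maps. The NumPy sketch below assumes feature maps of shape (height, width, channels), such as a pretrained VGG layer would produce. Random arrays stand in for them here, and the loss weights are illustrative.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """(H, W, C) feature map -> (C, C) channel correlations, averaged over positions.

    Summing over all spatial positions discards *where* things are and keeps
    texture-like statistics, which is what represents "style".
    """
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    return flat.T @ flat / (h * w)


def content_loss(generated: np.ndarray, content: np.ndarray) -> float:
    """Compares feature maps position by position, so spatial layout matters."""
    return float(np.mean((generated - content) ** 2))


def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    return float(np.mean((gram_matrix(generated) - gram_matrix(style)) ** 2))


def total_loss(generated, content, style, alpha=1.0, beta=100.0):
    """Objective minimised with respect to the generated image in style transfer."""
    return alpha * content_loss(generated, content) + beta * style_loss(generated, style)


rng = np.random.default_rng(0)
feats = rng.random((16, 16, 8))  # stand-in for CNN activations of one image

# Shuffling spatial positions keeps the style statistics but destroys the content.
shuffled = rng.permutation(feats.reshape(-1, 8)).reshape(16, 16, 8)
print(style_loss(shuffled, feats))    # ~0: same style
print(content_loss(shuffled, feats))  # clearly > 0: different content
```

In full style transfer, the generated image's pixels are optimized to minimize `total_loss`. Here, `alpha` and `beta` trade off how much content versus style survives.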

Neural Style Transfer is an excellent example of image data augmentation with state-of-the-art model architectures. Changing an image's style while keeping its content creates new images with varying appearances that can be used as training data.

After learning about the various techniques used for creating additional data points using data augmentation techniques, it is time for you to explore how such methods can be applied in real-world scenarios.

How to do Data Augmentation in Keras?

Keras provides an ImageDataGenerator class that defines the image data preparation and augmentation configuration.

Rather than transforming the entire set of images in memory, the generator is iterated over by the deep learning model's fitting process, creating augmented images just in time. This reduces memory overhead but adds some time to model training.

After creating and configuring the ImageDataGenerator, you may need to fit it to the data. This computes the dataset statistics that some transformations require, such as feature-wise centering and normalization or ZCA whitening. To do this, call the fit() function on the data generator and pass it the training data.

The data generator is an iterator that returns batches of augmented images on demand. You can configure the batch size and fetch batches of images by calling the flow() function.

Finally, you can use the data generator to train your model. In older versions of Keras, instead of calling fit() on the model, you call fit_generator(), passing in the data generator, the desired epoch length (steps per epoch), and the total number of epochs to train. In TensorFlow 2.1 and later, fit_generator() is deprecated and model.fit() accepts the generator directly.

Here, we use the Keras preprocessing layers, such as tf.keras.layers.Resizing, tf.keras.layers.Rescaling, tf.keras.layers.RandomFlip, and tf.keras.layers.RandomRotation.

Below is a code sample depicting how to augment images using Keras.
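Here is a minimal sketch using the preprocessing layers named above. It assumes a recent TensorFlow 2.x release, where these layers live directly under `tf.keras.layers`. Random stand-in images replace a real dataset, and the target size and rotation factor are illustrative choices.

```python
import numpy as np
import tensorflow as tf

IMG_SIZE = 180  # illustrative target size

# Resizing/Rescaling always run; RandomFlip/RandomRotation only act when training=True.
data_augmentation = tf.keras.Sequential([
    tf.keras.layers.Resizing(IMG_SIZE, IMG_SIZE),
    tf.keras.layers.Rescaling(1.0 / 255),   # [0, 255] -> [0, 1]
    tf.keras.layers.RandomFlip("horizontal"),
    tf.keras.layers.RandomRotation(0.1),    # up to +/-10% of a full turn (36 degrees)
])

# Stand-in batch of four 224x224 RGB images with pixel values in [0, 255].
images = np.random.default_rng(0).integers(0, 256, size=(4, 224, 224, 3)).astype("float32")

augmented = data_augmentation(images, training=True)  # new random variant on every call
print(augmented.shape)  # (4, 180, 180, 3)
```

The same `data_augmentation` block can also be placed at the start of a model. Augmentation then happens on the fly during `model.fit()`, and the random layers act as no-ops at inference time.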

Below is the resulting output (augmented data) we get after applying geometric transformations as part of image augmentation. After rotations, horizontal flips, and width and height shifts, the images differ in aspects such as pixel values, orientation, and size.

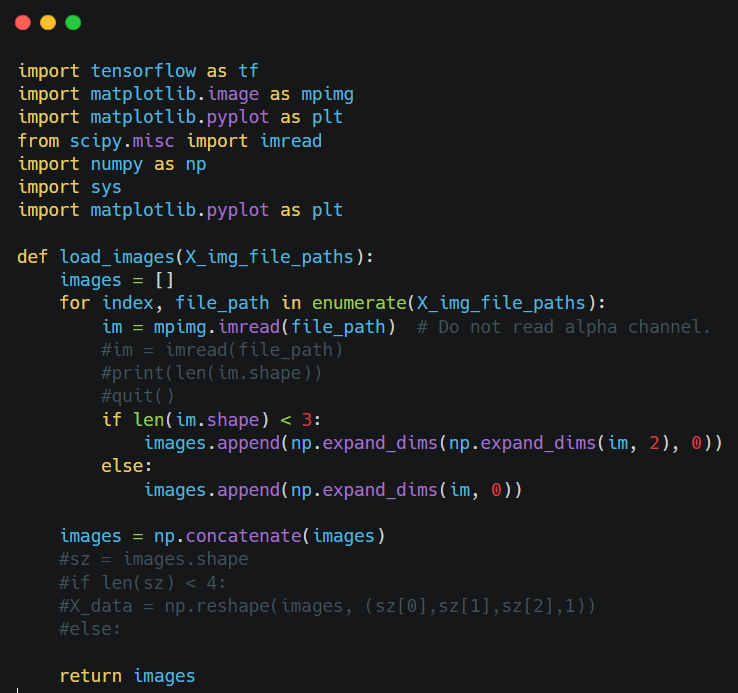

How to do Data Augmentation in Tensorflow?

The tf.image module provides built-in functions for augmenting input images in TensorFlow, such as tf.image.flip_left_right, tf.image.rgb_to_grayscale, tf.image.adjust_brightness, tf.image.central_crop, and the tf.image.stateless_random_* family.
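Here is a short sketch of these built-ins applied to a single image. It assumes TensorFlow 2.x and a float image with values in [0, 1]. The random stand-in image and the parameter values are illustrative.

```python
import tensorflow as tf

image = tf.random.uniform((64, 64, 3), seed=0)  # stand-in RGB image, floats in [0, 1)

flipped = tf.image.flip_left_right(image)                     # mirror horizontally
gray = tf.image.rgb_to_grayscale(image)                       # shape (64, 64, 1)
brighter = tf.image.adjust_brightness(image, delta=0.2)       # adds 0.2 to every value
cropped = tf.image.central_crop(image, central_fraction=0.5)  # keep the central 50%

# Stateless random ops: the same (image, seed) pair always gives the same result,
# while different seeds give different augmentations.
seed = (1, 2)
maybe_flipped = tf.image.stateless_random_flip_left_right(image, seed=seed)
random_bright = tf.image.stateless_random_brightness(image, max_delta=0.3, seed=seed)
```

For float inputs, `adjust_brightness` does not clip the result. If your pipeline expects values in [0, 1], follow it with `tf.clip_by_value`.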

Noise injection can be applied not only to audio datasets but also to images, as shown below, using TensorFlow’s built-in functions.

Gaussian noise adds a normally distributed random value to each pixel, whereas salt and pepper noise replaces random pixels with extremely dark or light values.
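Here is a minimal sketch of both noise types. It assumes TensorFlow 2.x and float images in [0, 1], and the helper names and default amounts are illustrative. The salt-and-pepper version draws one random number per pixel position, so every channel of a corrupted pixel becomes fully black or fully white.

```python
import tensorflow as tf


def add_gaussian_noise(image, stddev=0.05):
    """Add a normally distributed random value to every pixel, then clip to [0, 1]."""
    noise = tf.random.normal(tf.shape(image), mean=0.0, stddev=stddev)
    return tf.clip_by_value(image + noise, 0.0, 1.0)


def add_salt_and_pepper(image, amount=0.05):
    """Set a fraction `amount` of pixel positions to 0 (pepper) or 1 (salt)."""
    probs = tf.random.uniform(tf.shape(image)[:-1])[..., tf.newaxis]  # (H, W, 1)
    image = tf.where(probs < amount / 2, 0.0, image)       # pepper
    return tf.where(probs > 1.0 - amount / 2, 1.0, image)  # salt


image = tf.fill((100, 100, 3), 0.5)  # stand-in mid-gray image
gaussian = add_gaussian_noise(image)
salt_pepper = add_salt_and_pepper(image, amount=0.1)
```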


How to do Data Augmentation in Caffe?

In Caffe, real-time data augmentation is implemented within the ImageData layer.

DeepDetect supports a wide range of data augmentation operations for image training with its Caffe backend. These include cropping, rotation, Gaussian blur, posterization, erosion, salt-and-pepper noise, contrast limited adaptive histogram equalization, conversion to the HSV and LAB color spaces, and brightness distortion. Further options are decolorization, histogram equalization, image inversion, contrast distortion, saturation and hue distortion, random permutations, perspective transforms (horizontal, vertical), and zoom.

The API makes it easy to train robust image models. Augmentation is controlled through the noise and distort objects inside the API's mllib object, and noise and distort can be used alone or together. For performing data augmentation from Python, the Caffe package is imported into the Python environment.

This blog discussed the various data augmentation techniques for handling different data types. To learn how such techniques are utilized in practical projects in Data Science and Big Data, check out ProjectPro’s repository of end-to-end solved projects in Data Science and Big Data.

FAQ's

1) What is Mirror Reflection in Data Augmentation?

Mirror reflection results from horizontally or vertically flipping an image during data augmentation. A horizontal flip reflects the image across the vertical line that passes through its center. The original and flipped images are therefore mirror images (symmetric reflections) of each other about that central line.

After a horizontal flip, each corner's new position is the mirror image of its original position across the vertical line through the center of the image. That central line is the perpendicular bisector of the segment connecting each original corner to its transformed position.
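The mirror-reflection mapping can be written out explicitly. As a worked example, assume zero-based pixel coordinates, with column x running from 0 to W - 1 and row y from 0 to H - 1 for an image W pixels wide and H pixels tall. This convention is chosen here for illustration.

```latex
\begin{aligned}
\text{Horizontal flip:}\quad & (x,\, y) \;\mapsto\; (x',\, y') = (W - 1 - x,\; y) \\
\text{Vertical flip:}\quad & (x,\, y) \;\mapsto\; (x',\, y') = (x,\; H - 1 - y) \\
\text{Midpoint under a horizontal flip:}\quad & \frac{x + x'}{2} = \frac{W - 1}{2}
\end{aligned}
```

For instance, in an image 4 pixels wide, the left corner column x = 0 maps to x' = 3, and the right corner column x = 3 maps to x' = 0. The midpoint of each pair is 1.5 = (4 - 1)/2, which lies exactly on the central vertical line.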

2) Why use a generator for Data Augmentation?

In the context of data augmentation with Keras, a data generator does not simply read data from a file, database, or other data source. Instead, it generates data according to the transformations you define. The Keras ImageDataGenerator takes original data as input, applies random transformations to it, and returns an output containing only the newly transformed data. No data is added.

The Keras ImageDataGenerator class is used to perform data augmentation aimed at improving overall model generalization. The image data generator applies operations such as rotation, translation, shearing, scaling, and horizontal flipping at random.
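The plain NumPy sketch below makes the "no data is added" point concrete; it is not the Keras class itself. It is a generator that loops over the dataset forever and yields batches of randomly flipped copies. The function name and the single flip transformation are illustrative assumptions.

```python
import numpy as np


def augmenting_batches(images, labels, batch_size, rng):
    """Yield (images, labels) batches forever, randomly flipping each image.

    Each pass over the data yields exactly len(images) samples: originals are
    replaced by (possibly) transformed versions on the fly, not added to.
    """
    n = len(images)
    while True:
        order = rng.permutation(n)
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            batch = images[idx].copy()
            flip = rng.random(len(idx)) < 0.5          # flip about half of the batch
            batch[flip] = batch[flip][:, :, ::-1, :]   # horizontal flip (width axis)
            yield batch, labels[idx]


rng = np.random.default_rng(0)
images = rng.random((10, 8, 8, 3))  # ten stand-in images, shape (N, H, W, C)
labels = np.arange(10)
gen = augmenting_batches(images, labels, batch_size=4, rng=rng)
first_epoch = [next(gen) for _ in range(3)]  # batches of 4, 4 and 2 samples
```

In TensorFlow 2.x, a Python generator like this can typically be passed to `model.fit()` together with `steps_per_epoch`, which here would be 3, so that Keras knows where each epoch ends.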

A generator is also used when GANs are applied to data augmentation. The generator part of the GAN learns to produce plausible fake data by incorporating feedback from the discriminator, with the goal of having the discriminator classify its output as real. It takes a fixed-length random vector as input and learns to generate samples that mimic the distribution of the original dataset. The generated samples serve as negative (fake) examples for the discriminator.

About the Author

Simran

Simran Anand is a Software Engineer graduated from Vellore Institute of Technology. Her expertise includes technical paradigms like Machine Learning, Deep Learning, Data Analytics and Competitive Programming. She has worked on various hands-on projects in the domains of Data Science, Artificial