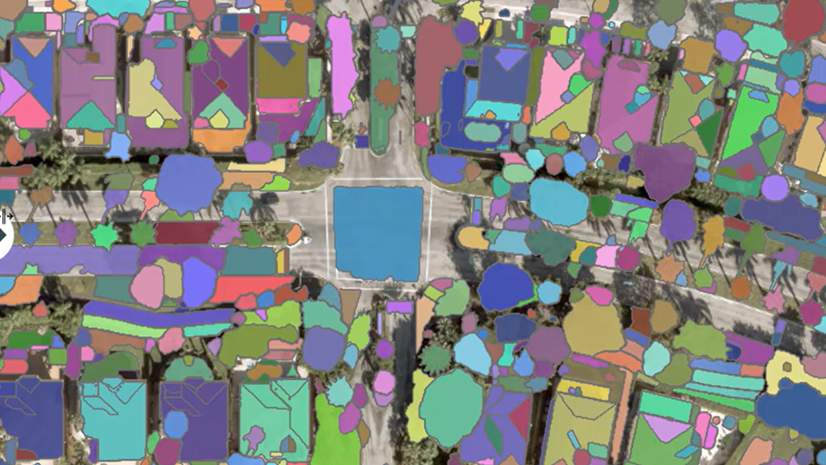

Meta’s Segment Anything Model (SAM) is making waves in the realm of image segmentation. It can precisely segment objects in images and can be used to extract GIS features, such as center-pivot farms, ships, or yachts, that stand out distinctly from their background. However, while SAM excels at segmenting objects, it does not classify them by type, and this poses a challenge.

In a complex scene with buildings, roads, and trees, SAM segments each object and generates a distinct mask for each instance, but it provides no information about what type of object each mask represents. Consequently, extracting GIS features that represent only trees or only buildings becomes impractical, because every feature belongs to a single, unknown ‘object’ class. Without class labels, SAM on its own does not fit into GIS feature-extraction workflows.

Enter Grounding DINO, an open-source vision-language model that excels at detecting objects in images from a textual description. Integrating SAM with Grounding DINO makes it possible not only to detect features of a specific kind in imagery but also to segment them precisely: users provide a free-form text prompt describing the object of interest, Grounding DINO detects the objects that match it, and SAM segments each detected object. This approach lets SAM extract features based on textual descriptions, vastly expanding its utility in GIS applications.
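To make the division of work concrete, here is a minimal Python sketch of the detect-then-segment flow. It is an illustration under stated assumptions, not Text SAM's source code: `detect` and `segment` are hypothetical callables standing in for Grounding DINO and SAM, and the `fake_detect` and `fake_segment` stubs merely imitate their outputs on a toy 4×4 image. The point it shows is that each mask inherits its class label from the box that prompted it, which is exactly the information SAM alone does not provide.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


@dataclass
class Detection:
    box: Box
    label: str    # phrase from the text prompt that this box matched
    score: float  # detector confidence


@dataclass
class LabeledMask:
    label: str
    score: float
    mask: List[List[bool]]  # binary mask, one list per image row


def text_prompted_segmentation(
    image,
    prompt: str,
    detect: Callable[[object, str], List[Detection]],
    segment: Callable[[object, Box], List[List[bool]]],
) -> List[LabeledMask]:
    """Hypothetical sketch: detect boxes from text, then segment inside each box.

    `detect` stands in for a grounded detector such as Grounding DINO and
    `segment` for a box-prompted segmenter such as SAM.
    """
    results = []
    for det in detect(image, prompt):
        # The segmenter only sees the box; the class label comes from the detector.
        mask = segment(image, det.box)
        results.append(LabeledMask(det.label, det.score, mask))
    return results


# Toy stubs that only imitate the shape of real model outputs.
def fake_detect(image, prompt: str) -> List[Detection]:
    return [Detection((1, 1, 3, 3), prompt, 0.8)]


def fake_segment(image, box: Box) -> List[List[bool]]:
    # Marks every pixel inside the box as foreground.
    xmin, ymin, xmax, ymax = map(int, box)
    return [[xmin <= c < xmax and ymin <= r < ymax for c in range(len(image[0]))]
            for r in range(len(image))]


if __name__ == "__main__":
    image = [[0] * 4 for _ in range(4)]
    out = text_prompted_segmentation(image, "building", fake_detect, fake_segment)
    print(out[0].label, sum(map(sum, out[0].mask)))  # building 4
```

Passing the two models in as callables keeps the sketch independent of any particular library; a real pipeline would wrap the actual Grounding DINO and SAM inference calls behind these two signatures.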

We have released Text SAM as an open-source sample model that can be prompted using free-form text prompts to extract features of various kinds. The source code of this model is available here for those who want to learn how they can integrate such models with ArcGIS.

Let’s look at some of the results that we obtained using text prompts such as words or phrases.

Note: Avoid acronyms and short forms when describing your object to get accurate detections.

While Text SAM excels at segmenting diverse objects, the best results can be obtained by keeping the following in mind:

- “Things”, not “stuff”: Text SAM is suited to extracting objects with clear boundaries and distinct shapes, such as cars, trees, and buildings. It is not suited to extracting amorphous or indistinct elements such as grass, water, or forests, which can cover large areas and may lack well-defined boundaries or shapes. Pixel classification models, such as High Resolution Land Cover Classification, are better suited for those.

- Pick the right cell size: The cell size is often the most important model argument for any deep-learning-based inferencing task, because it directly determines how much of the object and its background the model “sees” during prediction. Tuning other model arguments, such as the box threshold, text threshold, and batch size, can further improve results. Here’s a quick rundown of these parameters:

    - box_threshold: The minimum confidence score a detection must reach to be included in the results (range: 0 to 1.0).

    - text_threshold: The minimum confidence score for associating a detected object with the provided text prompt (range: 0 to 1.0).

    - batch_size: The number of image tiles processed in each step of model inference. The largest value you can use depends on the memory of your graphics card.

- Post-processing: Using geoprocessing tools in ArcGIS Pro to refine Text SAM’s predictions can reduce noise and improve accuracy. For instance, you can filter out small detections by setting a definition expression on the shape’s area.
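The thresholds and the area filter from the tips above can be illustrated with a short Python sketch. The threshold logic mirrors, in simplified form, how the open-source Grounding DINO reference code filters predictions: a box is kept only if its highest token score exceeds box_threshold, and the prompt tokens scoring above text_threshold form that box’s label. Whether Text SAM applies the thresholds in exactly this way is an assumption. The area filter is a plain-Python stand-in for a definition expression on the shape’s area in ArcGIS Pro. `apply_thresholds`, `polygon_area`, and `drop_small` are hypothetical helpers, and the scores and footprints are made-up values.

```python
from typing import List, Sequence, Tuple

# Hypothetical helpers for illustration; not part of Text SAM or ArcGIS.
Ring = List[Tuple[float, float]]  # polygon vertices in projected map units (e.g. metres)


def apply_thresholds(
    token_scores: Sequence[Sequence[float]],
    tokens: Sequence[str],
    box_threshold: float,
    text_threshold: float,
) -> List[Tuple[int, str, float]]:
    """Keep boxes whose best token score exceeds box_threshold.

    token_scores[i][j] is the score (0 to 1) linking box i to prompt token j.
    Returns (box_index, phrase, confidence) for each kept box.
    """
    kept = []
    for i, scores in enumerate(token_scores):
        confidence = max(scores)
        if confidence <= box_threshold:
            continue  # not confident enough to count as a detection
        # Only tokens scoring above text_threshold form the box's label.
        phrase = " ".join(t for t, s in zip(tokens, scores) if s > text_threshold)
        kept.append((i, phrase, confidence))
    return kept


def polygon_area(ring: Ring) -> float:
    """Planar area of a simple polygon via the shoelace formula."""
    n = len(ring)
    twice_area = 0.0
    for k in range(n):
        x1, y1 = ring[k]
        x2, y2 = ring[(k + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def drop_small(polygons: List[Ring], min_area: float) -> List[Ring]:
    """Post-processing: discard polygons smaller than min_area (map units squared)."""
    return [p for p in polygons if polygon_area(p) >= min_area]


if __name__ == "__main__":
    tokens = ["white", "building"]
    scores = [
        [0.20, 0.55],  # box 0: confident match on "building"
        [0.10, 0.28],  # box 1: best score below box_threshold, dropped
        [0.40, 0.45],  # box 2: both tokens pass text_threshold
    ]
    print(apply_thresholds(scores, tokens, box_threshold=0.35, text_threshold=0.25))
    # [(0, 'building', 0.55), (2, 'white building', 0.45)]

    footprints = [
        [(0, 0), (20, 0), (20, 10), (0, 10)],  # 200 m^2
        [(0, 0), (2, 0), (2, 2), (0, 2)],      # 4 m^2, likely noise
    ]
    print(len(drop_small(footprints, min_area=25)))  # 1
```

The shoelace formula gives planar area, so the polygons should be in a projected coordinate system (for example, metres) rather than latitude and longitude before filtering by area.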

Now it’s your turn to unleash the potential of Text SAM with relevant text prompts and extract objects of interest. Stay tuned for more blogs on pretrained models tailored for specific GIS tasks, such as land-cover classification. Explore our blogs to delve deeper into the world of pretrained models.
