Your data security is our priority

At Databricks, we know that data is one of your most valuable assets and must always be protected. That's why security is built into every layer of the Databricks Lakehouse Platform. Like most software-as-a-service (SaaS) platforms, Databricks operates under a Shared Responsibility Model, which means that the customer must evaluate the security features available and correctly configure those needed to meet their risk profile, protect sensitive data, and adhere to their internal policies or regulatory requirements. In short: Databricks is responsible for the security of the platform, and the customer is responsible for security in the platform.

Security Best Practices inspired by our most security-conscious customers

Our security team has helped thousands of customers deploy the Databricks Lakehouse Platform with these features configured correctly. Drawing on that experience, we have identified a threat model and created a best practice checklist for what "good" looks like on all three major clouds.

Some customers have found it useful to hear how we came up with this list of best practices. The story started at a lunch with the Databricks Financial Services account teams in New York City in 2021. One of our Solution Architects made a passionate case for helping customers validate their configurations, so that they would not inadvertently miss anything critical to meeting their compliance or regulatory requirements. We realized that financial services companies processing sensitive data apply almost the same security controls to their Databricks deployments. They may run their businesses and design their architectures differently, but almost all will use customer-managed keys (CMK) and Private Link, and will store query results within their own account.

To cater to as many customers as possible, we split the list into recommendations for "most" deployments and for "high security" deployments. We also mapped out a threat model based on customers' primary considerations about Databricks and the main areas we wanted our customers to understand.

The result was a set of secure configuration guides that provide evidence-based recommendations for deploying Databricks securely, all driven by the controls relied upon by our most security-conscious customers. You can download these security best practice recommendations from our Security and Trust Center or check them out directly via the links below:

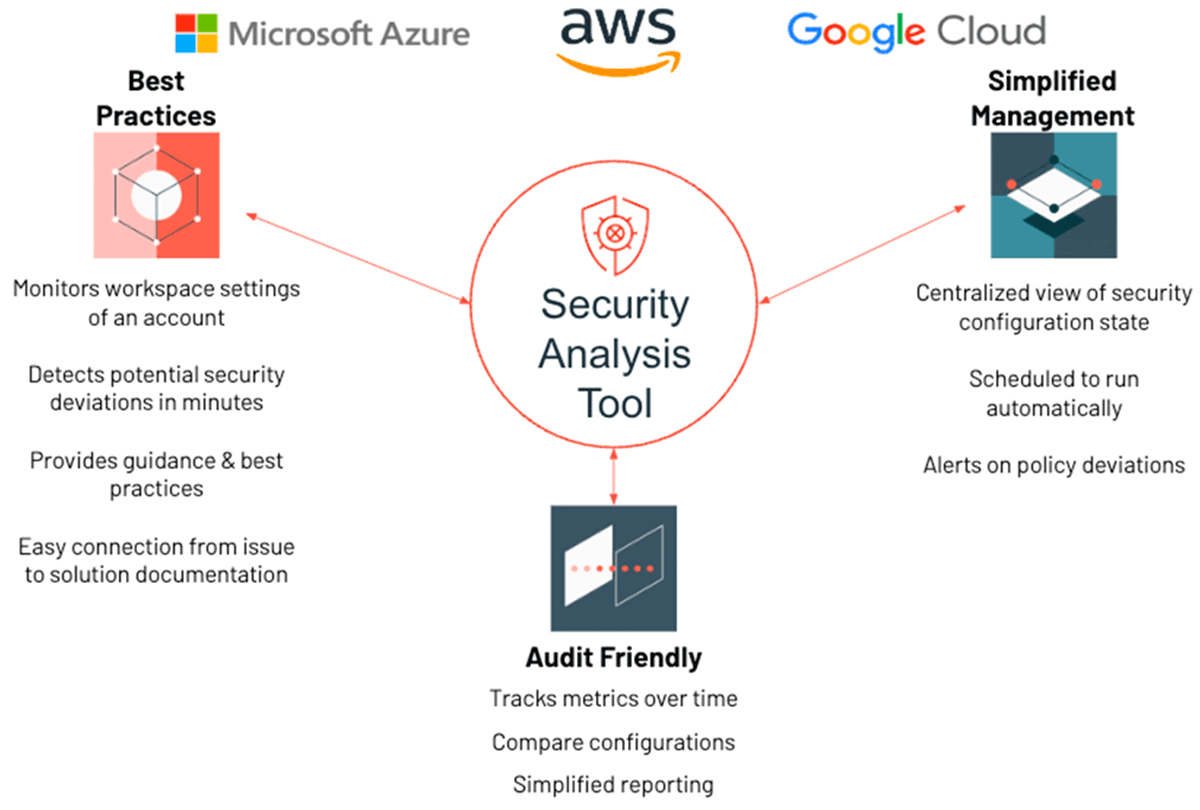

Security Analysis Tool (SAT)

Of course, even better than best practice recommendations is an automated security health check that does the analysis for you. In November, we launched the Security Analysis Tool (SAT). SAT measures your workspace configuration against our security best practices, verifying each one programmatically using standard API calls and reporting deviations by severity. Each finding includes links that explain how to extend your security setup to meet any more stringent requirements derived from your internal policies. As of February, SAT is multi-cloud, so customers can measure their security health against the best practices for each of the three major clouds.
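To make the idea of "checking a configuration against best practices and reporting deviations by severity" more concrete, here is a minimal Python sketch. It is an illustration only, not SAT's actual implementation: the check names, the configuration keys (`cmk_enabled`, `private_link_enabled`, `store_results_in_customer_account`), and the severity levels are all hypothetical, and the workspace settings are assumed to have already been fetched into a plain dictionary rather than retrieved through real API calls.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Any, Callable, Dict, List


class Severity(IntEnum):
    """Hypothetical severity levels; a higher value means more urgent."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Check:
    """One best-practice check: a name, a severity, and a pass/fail predicate."""
    name: str
    severity: Severity
    passes: Callable[[Dict[str, Any]], bool]


# Hypothetical checks, loosely modeled on the controls mentioned in this post.
# The configuration keys are assumptions made for this sketch.
CHECKS: List[Check] = [
    Check("customer_managed_keys_enabled", Severity.HIGH,
          lambda cfg: cfg.get("cmk_enabled") is True),
    Check("private_link_enabled", Severity.HIGH,
          lambda cfg: cfg.get("private_link_enabled") is True),
    Check("query_results_in_customer_account", Severity.MEDIUM,
          lambda cfg: cfg.get("store_results_in_customer_account") is True),
]


def find_deviations(config: Dict[str, Any],
                    checks: List[Check] = CHECKS) -> List[Check]:
    """Return the checks that the configuration fails, most severe first."""
    failed = [check for check in checks if not check.passes(config)]
    return sorted(failed, key=lambda check: check.severity, reverse=True)


if __name__ == "__main__":
    # A made-up workspace configuration; a missing key counts as a deviation.
    workspace_config = {"cmk_enabled": True, "private_link_enabled": False}
    for deviation in find_deviations(workspace_config):
        print(f"[{deviation.severity.name}] {deviation.name}")
```

A real health check would populate the configuration from the platform's APIs and cover far more controls, but the overall shape (a list of checks, each with a severity, evaluated against the observed settings) is the part this sketch is meant to convey.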

Conclusion

We know that security is top of mind for all our customers, and that's why we have made our security best practice guides readily available on our Security and Trust Center. Today, you can download the recommendations for your chosen cloud(s) and get started with the Security Analysis Tool (SAT). We also recommend that you bookmark the page and return to it regularly to check the latest recommendations for securing your data. The bad guys aren't standing still, and neither should you!