Snowflake Architecture and Its Fundamental Concepts

This article will give you a detailed overview of the Snowflake Data Warehouse and its three-layered architecture.

As the demand for big data grows, an increasing number of businesses are turning to cloud data warehouses. The cloud's flexibility and scalability make it the platform of choice for handling today's colossal data volumes. Launched in 2014, Snowflake is one of the most popular cloud data solutions on the market. With around 5,774 companies using it, Snowflake has recently been added to the top 20 most valuable unicorns worldwide and the top 10 most valuable US unicorns.

This blog walks you through what Snowflake does, the various features it offers, the Snowflake architecture, and much more.

Table of Contents

- Snowflake Overview and Architecture

- What is Snowflake Data Warehouse?

- What Does Snowflake Do?

- Snowflake Features that Make Data Science Easier

- Building Data Applications with Snowflake Data Warehouse

- Snowflake Data Warehouse Architecture

- How Does Snowflake Store Data Internally?

- The AWS-Snowflake Partnership

- Snowflake Competitors

- Snowflake vs. RedShift

- Snowflake vs. BigQuery

- Snowflake vs. Databricks

- Snowflake Projects for Practice in 2022

- Dive Deeper Into The Snowflake Architecture

- FAQs on Snowflake Architecture

Snowflake Overview and Architecture

With the explosion of data, acquiring, processing, and storing large or complicated datasets has become more challenging. As a result, enterprises need a central repository where they can safely store all their data and examine it to make informed decisions. This is why we need data warehouses.

What is Snowflake Data Warehouse?

A data warehouse is a central information repository that enables Data Analytics and Business Intelligence (BI) activities. It can store vast amounts of data from numerous sources in a single location, and it lets businesses run queries and perform analyses to optimize their operations. Its analytical capabilities enable companies to gain significant insights from their data and make better decisions.

Snowflake is a Data Warehouse solution that supports ANSI SQL and is available as a SaaS (Software-as-a-Service). It also offers a unique architecture that allows users to quickly build tables and begin querying data without administrative or DBA involvement.

What Does Snowflake Do?

Snowflake is a cloud-based data platform that makes data warehousing, data lakes, data analytics, and similar workloads easy to manage. A standout feature is that it can handle a near-unlimited number of concurrent workloads, so your users can always do what they need, when they need to.

Snowflake provides data warehousing, processing, and analytical solutions that are significantly quicker, simpler to use, and more adaptable than traditional systems.

Snowflake is not built on an existing database system or on big data software platforms like Hadoop. Instead, it combines an entirely new SQL query engine with a unique cloud-native architecture. Snowflake offers customers all the capabilities of an enterprise analytic database, along with several unique features and functions.

Let us take a look at the unique features of Snowflake that make it better than other data warehousing platforms.

Snowflake Features that Make Data Science Easier

Here are three Snowflake attributes that make running successful data science projects easier for businesses-

1. Centralized Source of Data

When training machine learning models, data scientists must consider a wide range of data. However, data can be stored in a variety of locations and formats. Data scientists often spend up to 80% of their time finding, extracting, merging, filtering, and preparing data. They frequently need more data as the project progresses, and gathering it can take several weeks, further delaying the data science workflow.

Snowflake puts all data on a single high-performance platform by bringing data in from many locations, reducing the complexity and delay imposed by standard ETL processes. Snowflake allows data to be examined and cleaned immediately, assuring data integrity. Snowflake also has data discovery features, allowing users to find and retrieve data more efficiently and rapidly. Snowflake Data Marketplace gives users rapid access to various third-party data sources. Moreover, numerous sources offer unique third-party data that is instantly accessible when needed.

2. Provides Powerful Computing Resources for Data Processing

Before feeding data into advanced machine learning models and deep learning tools, data scientists need sufficient computing resources to analyze and prepare it. Feature engineering is the process of transforming raw data into more relevant features that produce more accurate predictive models. Developing new predictive features can be-

-

difficult and time-consuming,

-

requiring domain knowledge,

-

demanding familiarity with each model's specific requirements, etc.

Traditional data preparation platforms, including Apache Spark, can be unnecessarily complex and inefficient, resulting in fragile and costly data pipelines.

Snowflake's design provides an independent compute cluster for each workload and team, so data engineering, BI, and data science workloads do not compete for resources. Snowflake's machine learning partners push most of their automated feature engineering down into Snowflake's cloud data platform. You can perform manual feature engineering in various languages using Snowflake's Python, Apache Spark, and ODBC/JDBC interfaces. Processing data with SQL makes feature engineering accessible to a broader audience of data professionals and can make it up to ten times faster and more efficient.

3. Extensive Network of Partners

Data scientists leverage various tools, and the machine learning (ML) sector is continually expanding, with the release of new data science tools every year. On the other hand, traditional data infrastructure can't always handle the needs of numerous toolkits, and new technologies like AutoML require a modern infrastructure to work correctly.

Snowflake's extensive network of partners allows customers to take advantage of direct connections to all existing and emerging data science-

-

languages including Python, R, Java, and Scala;

-

open-source libraries like PyTorch, XGBoost, TensorFlow, and scikit-learn;

-

notebooks like Jupyter and Zeppelin; and

-

platforms like DataRobot, Dataiku, H2O.ai, Zepl, Amazon SageMaker, etc.

The Snowflake cloud data warehouse also integrates with the most up-to-date machine learning tools and libraries, such as Dask and Saturn Cloud. Snowflake eliminates the need to reconfigure the underlying data every time tools, languages, or libraries change by providing a single consistent source for data. You can smoothly integrate the output from these tools back into Snowflake.

Here are some more exceptional features offered by the Snowflake data warehouse solution-

-

Minimize the Need for Administration- Snowflake eliminates the management issues that come with traditional data solutions. As a cloud-based data warehousing platform, its design delivers high performance without administrative overhead. The database is fully managed and scales automatically in response to workload demands. Performance tuning, infrastructure management, and optimization capabilities are all built in, which gives businesses peace of mind: they just need to load their data and let Snowflake manage it.

-

Highly Available- Snowflake Architecture is designed to be fully distributed, covering multiple zones and regions, and it is highly fault-tolerant in the event of hardware failure. Snowflake users are rarely affected by an underlying hardware malfunction.

-

Highly Secure- Security is a key characteristic of the Snowflake architecture. It supports two-factor authentication and federated authentication with SSO. Access control is role-based, and access can be limited based on pre-defined conditions. Snowflake also holds several certifications and compliance attestations, such as SOC 2 Type II and HIPAA.

-

Easy Data Sharing among Partners- Snowflake has a unique feature that lets data owners share their data with partners or other customers without duplicating it. Because no data is copied or moved, the data consumer uses no extra storage and pays only for the compute used to process the data. Snowflake's native sharing tools, accessible via SQL, let you avoid the constraints of FTP or email.

-

Multi-Cloud Support- Snowflake is a fully managed data warehouse that can be deployed on several clouds while keeping the same intuitive user interface. By meeting users on the cloud they already use, Snowflake reduces the need to transfer data over the internet from their cloud environment to Snowflake. Snowflake runs on Amazon Web Services, Google Cloud Platform, and Microsoft Azure.

-

Excellent Performance- Snowflake is well-known for its high performance and allows separate scaling of computing and storage while keeping all of the benefits of standard RDBMS technologies. By doing so, Snowflake has overcome one of the most significant constraints associated with traditional database technology. Snowflake lets users define a cluster size for initial deployment and then scale up or down as needed once the system is active. Snowflake handles scaling activities transparently for its customers.

-

Pay-per-use Pricing- Snowflake offers a simplified purchasing experience. Its pay-per-use approach bills compute per second. Users pay only for the storage they consume and the compute used to execute their requests. No up-front expenses or extensive planning are needed to start a data warehousing project. Clusters automatically scale up to handle heavy workloads and back down to their pre-defined size, and users are charged for the extra capacity only while it is in use.
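To make per-second billing concrete, here is a minimal Python sketch of how warehouse compute charges add up. It assumes the commonly published rates of 1 credit per hour for an X-Small warehouse, doubling with each size up to X-Large, and a 60-second minimum charge each time a warehouse starts or resumes. The price per credit is a hypothetical input, because it depends on your edition and region.

```python
# Assumed credits per hour by warehouse size: 1 for X-Small, doubling per size.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}

# Assumed minimum charge, in seconds, each time a warehouse starts or resumes.
MIN_BILLED_SECONDS = 60


def credits_for_run(size: str, seconds: int) -> float:
    """Credits for one continuous run of a warehouse, billed per second."""
    billed_seconds = max(seconds, MIN_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


def compute_cost(runs: list[tuple[str, int]], price_per_credit: float) -> float:
    """Dollar cost of several (size, seconds) runs at a given credit price."""
    credits = sum(credits_for_run(size, seconds) for size, seconds in runs)
    return credits * price_per_credit


if __name__ == "__main__":
    # Hypothetical usage: a Medium warehouse runs 30 minutes, an X-Small runs 20 seconds.
    day = [("M", 1800), ("XS", 20)]
    print(f"credits: {sum(credits_for_run(s, t) for s, t in day):.4f}")
    print(f"cost at a hypothetical $3/credit: ${compute_cost(day, 3.0):.2f}")
```

Because billing is per second, running a warehouse one size larger for half as long consumes the same number of credits; the minimum charge mainly matters for very short, frequent resumes.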


Building Data Applications with Snowflake Data Warehouse

Businesses collect, store, and process big data. Their major challenge is figuring out how to commercialize that data in a practical and scalable way. By combining Snowflake with a well-designed end-user application, businesses can significantly increase the profits from selling or leasing access to their databases. Companies in industries ranging from technology to real estate benefit from the Snowflake data warehouse.

Source: HG Insights

Snowflake's pay-per-use pricing model lets companies prioritize cost management, from budgeting to tracking and optimization. The key objectives of any data warehouse architecture are concurrency, scalability, and overall efficiency, and Snowflake's strength in these areas is one of the many reasons it is in high demand in the industry.

Several organizations can quickly transform, integrate, and analyze their data with Snowflake's Data Cloud. They can also design and run data apps and securely share, gather, and commercialize real-time data.

Snowflake in Action at Cisco

Snowflake improves data governance, provides granular data protection, and enables Cisco to use data for significant business impact. Cisco has reaped numerous benefits from the Snowflake data warehouse, such as -

-

Minimizing storage costs (disabling time travel when dropping a table, using temporary tables instead of permanent ones, using Snowflake zero-copy cloning, etc.);

-

Optimizing compute costs (by evaluating warehouse sizes and employing effective query strategies, for example);

-

Designing custom monitoring dashboards, and so on.

Snowflake in Action at Western Union

Snowflake's multi-cluster shared data architecture scaled instantly to serve Western Union's data, users, and workloads without resource contention. Consolidating more than 30 data stores into Snowflake gives Western Union deeper insights at a fraction of the cost of traditional data engineering. Snowflake's fully managed architecture and easy-to-navigate API have resolved Western Union's data operations challenges.

Snowflake Data Warehouse Architecture

Before we dive into the Snowflake architecture, let us first understand shared-disk and shared-nothing architectures.

Shared-disk Architecture

In this architecture, all compute nodes share the same disk or storage device. Although each processing node (processor) has its own memory, every processor can access every disk. Since all nodes can access the same data, cluster control software is needed to monitor and coordinate data processing: when data is updated or deleted, all nodes must see a consistent version of it, and two or more nodes must never be allowed to modify the same data at the same time.

A shared-disk architecture is typically well suited to large-scale computing that requires ACID compliance. It usually suits applications and services that need only limited shared access to data, as well as workloads that are hard to split. Oracle Real Application Clusters (RAC) is one example of a shared-disk architecture.
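The coordination problem described above can be illustrated with a minimal Python sketch: several nodes (threads here) update the same value on a shared disk (a dictionary here), and a lock manager standing in for the cluster control software ensures that only one node modifies an item at a time. All class and function names are hypothetical, and real clusters coordinate across machines with distributed locking rather than in-process locks.

```python
import threading


class SharedDisk:
    """A single storage device that every node can read and write."""

    def __init__(self) -> None:
        self.blocks: dict[str, int] = {}


class LockManager:
    """Plays the role of cluster control software: one lock per data item."""

    def __init__(self) -> None:
        self._guard = threading.Lock()
        self._locks: dict[str, threading.Lock] = {}

    def lock_for(self, key: str) -> threading.Lock:
        with self._guard:
            return self._locks.setdefault(key, threading.Lock())


class Node:
    """A processing node: private memory, shared access to the disk."""

    def __init__(self, disk: SharedDisk, locks: LockManager) -> None:
        self.disk = disk
        self.locks = locks

    def increment(self, key: str, times: int) -> None:
        for _ in range(times):
            # Hold the item's lock so no other node edits it at the same moment.
            with self.locks.lock_for(key):
                current = self.disk.blocks.get(key, 0)  # read from the shared disk
                self.disk.blocks[key] = current + 1     # write the new value back


def run_demo(num_nodes: int = 4, updates_per_node: int = 1000) -> int:
    """Let several nodes update one shared value concurrently; return the result."""
    disk, locks = SharedDisk(), LockManager()
    nodes = [Node(disk, locks) for _ in range(num_nodes)]
    threads = [
        threading.Thread(target=node.increment, args=("balance", updates_per_node))
        for node in nodes
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return disk.blocks["balance"]


if __name__ == "__main__":
    print(run_demo())
```

Without the per-item lock, two nodes could read the same old value and overwrite each other's update, which is exactly the simultaneous-edit situation the cluster control software must prevent.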

Shared-nothing Architecture

In a shared-nothing architecture, each compute node has its own private memory and storage. The nodes communicate with each other over an interconnect network. When a processing request comes in, a router sends it to the appropriate compute node. Specific business rules are often applied at this routing layer to distribute traffic efficiently across the cluster nodes. When one node in a shared-nothing cluster fails, its processing responsibilities are transferred to another node in the cluster.

Because of this change of ownership, user requests continue to be processed without interruption. A shared-nothing architecture gives applications high availability and scalability. Google, one of the first web-scale technology organizations to deploy shared-nothing architectures, runs geographically distributed shared-nothing clusters with thousands of compute units. This is why a shared-nothing architecture is a strong fit for complex analytical data processing systems such as data warehouses.
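The routing and failover behavior described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the "business rule" used by the router is a simple hash of the request key, and each key is also written to the next node in order (a replica) so that a failed owner's work can move to another node without losing data. The class names and the replication factor are hypothetical.

```python
import zlib
from typing import Optional


class Node:
    """A shared-nothing node with its own private storage."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.storage: dict[str, str] = {}
        self.alive = True


class Router:
    """Routes each request to the node that owns the key (hash partitioning)."""

    def __init__(self, nodes: list[Node], replicas: int = 2) -> None:
        self.nodes = nodes
        self.replicas = replicas  # assumed: each key is also copied to the next node

    def _ring(self, key: str) -> list[Node]:
        """Nodes in preference order for this key, starting at its hashed owner."""
        start = zlib.crc32(key.encode()) % len(self.nodes)
        return [self.nodes[(start + i) % len(self.nodes)] for i in range(len(self.nodes))]

    def put(self, key: str, value: str) -> list[str]:
        targets = [n for n in self._ring(key) if n.alive][: self.replicas]
        if not targets:
            raise RuntimeError("no live nodes")
        for node in targets:
            node.storage[key] = value
        return [node.name for node in targets]

    def get(self, key: str) -> Optional[str]:
        # The first live node in preference order serves the request,
        # so a failed owner's work moves to its replica without interruption.
        for node in self._ring(key):
            if node.alive:
                return node.storage.get(key)
        raise RuntimeError("no live nodes")


if __name__ == "__main__":
    nodes = [Node(f"node-{i}") for i in range(3)]
    router = Router(nodes)
    print("written to:", router.put("order-42", "shipped"))
    router._ring("order-42")[0].alive = False  # simulate the owner failing
    print("read after failover:", router.get("order-42"))
```

Note that no node holds all the data: each key lives only on its owner and one replica, which is what lets a shared-nothing cluster scale out by adding nodes.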

Now, let us discuss the Snowflake Database Architecture in detail.

Snowflake Architecture

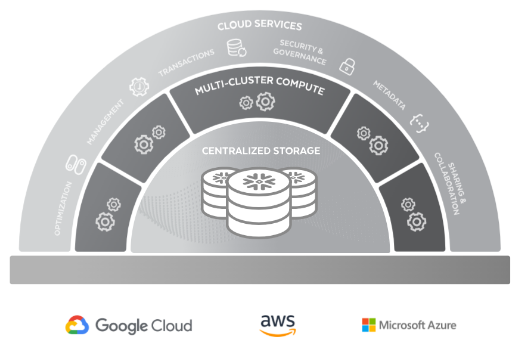

The Snowflake architecture combines shared-nothing and shared-disk architecture. Snowflake uses a central data repository accessible from all compute nodes in the platform, like shared-disk systems. Snowflake, however, utilizes MPP (massively parallel processing) compute clusters to perform queries. Each node in the cluster keeps a piece of the entire data set locally, like shared-nothing systems. This method combines a shared-disk design's ease of data management with a shared-nothing architecture's efficiency and scale-out advantages.

Source: Snowflake.com
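To illustrate how the hybrid design described above behaves, the Python sketch below keeps every partition in one central store (the shared-disk side), while MPP compute nodes each cache the partitions they scan locally (the shared-nothing side). The class names and the hash-based assignment of partitions to nodes are assumptions for illustration only; Snowflake does not expose its internal scheduling this way.

```python
import zlib


class CentralStorage:
    """Shared storage layer: every compute node can read every partition."""

    def __init__(self, partitions: dict[str, list[int]]) -> None:
        self.partitions = partitions
        self.remote_reads = 0  # how many times data was fetched from central storage

    def fetch(self, partition_id: str) -> list[int]:
        self.remote_reads += 1
        return self.partitions[partition_id]


class ComputeNode:
    """An MPP node that keeps the partitions it has scanned in a local cache."""

    def __init__(self, storage: CentralStorage) -> None:
        self.storage = storage
        self.local_cache: dict[str, list[int]] = {}

    def scan(self, partition_id: str) -> list[int]:
        if partition_id not in self.local_cache:
            self.local_cache[partition_id] = self.storage.fetch(partition_id)
        return self.local_cache[partition_id]


def run_query(nodes: list[ComputeNode], partition_ids: list[str]) -> int:
    """SUM over all partitions; each partition always goes to the same node."""
    total = 0
    for pid in partition_ids:
        node = nodes[zlib.crc32(pid.encode()) % len(nodes)]
        total += sum(node.scan(pid))
    return total


if __name__ == "__main__":
    storage = CentralStorage({"p1": [1, 2], "p2": [3], "p3": [4, 5]})
    warehouse = [ComputeNode(storage), ComputeNode(storage)]
    print(run_query(warehouse, ["p1", "p2", "p3"]), storage.remote_reads)
    print(run_query(warehouse, ["p1", "p2", "p3"]), storage.remote_reads)
```

The second run is served entirely from the nodes' local caches, so the remote read count does not grow, while the data itself still lives in one place that any node can reach.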

The Snowflake data warehouse architecture has three layers -

-

Database Storage Layer

-

Query Processing Layer

-

Cloud Services Layer

Database Storage Layer

The database storage layer of the Snowflake architecture divides data into numerous small micro-partitions that are internally optimized and compressed. Snowflake uses scalable cloud blob storage to hold structured and semi-structured data (including JSON, Avro, and Parquet). Snowflake stores and manages data in the cloud using a shared-disk approach, which keeps data management simple: users do not need to worry about distributing data across multiple cluster nodes. Snowflake hides the stored data objects from users and makes them accessible only through SQL queries run by the compute layer.

Compute nodes connect to the storage layer to retrieve data for query processing. Because the storage layer is independent, you pay only for your average monthly storage. Snowflake's storage is elastic because it is provisioned by the cloud provider, and it is billed monthly based on consumption per TB.
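Billing on average monthly storage, as described above, can be sketched as a simple calculation. This is a minimal illustration: the per-TB monthly price is a hypothetical parameter, since actual storage rates vary by region and contract, and daily snapshots of stored TB are an assumed input format.

```python
def monthly_storage_charge(daily_tb: list[float], price_per_tb_month: float) -> float:
    """Charge for one month, based on the average TB stored per day."""
    if not daily_tb:
        return 0.0
    average_tb = sum(daily_tb) / len(daily_tb)
    return average_tb * price_per_tb_month


if __name__ == "__main__":
    # Hypothetical 30-day month: 2 TB for 15 days, then 4 TB for 15 days.
    usage = [2.0] * 15 + [4.0] * 15
    # The average is 3 TB, so the charge is 3 * 23.0.
    print(monthly_storage_charge(usage, price_per_tb_month=23.0))
```

Billing on the average rather than the peak means a temporary spike in stored data costs only in proportion to how long it lasts.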

Query Processing Layer/Compute Layer

Snowflake executes queries using virtual warehouses. In the Snowflake architecture, the query processing layer is separate from the disk storage layer, and queries in this layer run against data from the central storage layer.

Virtual warehouses are MPP (massively parallel processing) compute clusters made up of many nodes with CPU and memory, hosted in the cloud by Snowflake. You can create multiple virtual warehouses for different workload requirements, and all of them use the same single storage layer. In most cases, each virtual warehouse has its own independent compute cluster and does not interact with other virtual warehouses. Virtual warehouses can auto-resume and auto-suspend, are easy to resize, and can scale automatically (when auto-scaling is configured).
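The auto-suspend, auto-resume, and auto-scaling behavior described above can be sketched as a small state machine. This is a simplified, hypothetical model: the per-cluster concurrency figure, the one-minute time step, and the "scale to current demand" rule are assumptions, and Snowflake's real scaling policies are more nuanced.

```python
from dataclasses import dataclass


@dataclass
class Warehouse:
    """Simplified model of a multi-cluster virtual warehouse."""

    min_clusters: int = 1
    max_clusters: int = 3
    queries_per_cluster: int = 8     # hypothetical concurrency per cluster
    auto_suspend_seconds: int = 300  # suspend after this much idle time
    clusters: int = 0                # 0 means the warehouse is suspended
    idle_seconds: int = 0

    def tick(self, pending_queries: int, seconds: int = 60) -> int:
        """Advance time with `pending_queries` waiting; return active clusters."""
        if pending_queries == 0:
            self.idle_seconds += seconds
            if self.clusters and self.idle_seconds >= self.auto_suspend_seconds:
                self.clusters = 0  # auto-suspend: stop consuming credits
            return self.clusters

        self.idle_seconds = 0
        if self.clusters == 0:
            self.clusters = self.min_clusters  # auto-resume when work arrives
        # Scale out or in to the number of clusters the load needs, within bounds.
        needed = -(-pending_queries // self.queries_per_cluster)  # ceiling division
        self.clusters = max(self.min_clusters, min(self.max_clusters, needed))
        return self.clusters


if __name__ == "__main__":
    wh = Warehouse()
    for load in [5, 20, 40, 9, 0, 0, 0, 0, 0, 3]:
        print(load, "queued ->", wh.tick(load), "cluster(s)")
```

In this model the warehouse never exceeds its configured maximum, shrinks when demand falls, and stops consuming compute entirely after five idle minutes.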

Cloud Services Layer

The cloud services layer contains all the services that coordinate activities across Snowflake, such as authentication, security, metadata management, and query optimization. These services run on stateless compute resources spread across multiple availability zones and rely on highly available metadata. The cloud services layer also provides the SQL client interface for data operations such as DDL and DML.

Services handled by the cloud services layer include:

-

Login requests made by users must pass through this layer.

-

Query submissions to Snowflake must pass through this layer's optimizer before being routed to the Compute Layer for processing.

-

This layer stores the metadata needed to optimize a query or filter data.
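Because this layer keeps statistics such as row counts and minimum/maximum values for each micro-partition, some queries can be answered from metadata alone, without reading stored data. The Python sketch below is a simplified, hypothetical stand-in for that metadata service; the class and method names are illustrative, not Snowflake APIs.

```python
from dataclasses import dataclass


@dataclass
class PartitionStats:
    """Statistics recorded for one micro-partition when it is written."""
    row_count: int
    min_value: int
    max_value: int


class MetadataService:
    """Simplified stand-in for the metadata kept by the cloud services layer."""

    def __init__(self) -> None:
        self.tables: dict[str, list[PartitionStats]] = {}

    def record_partition(self, table: str, values: list[int]) -> None:
        stats = PartitionStats(len(values), min(values), max(values))
        self.tables.setdefault(table, []).append(stats)

    # The three queries below read only metadata, never the stored data.
    def count_rows(self, table: str) -> int:
        return sum(p.row_count for p in self.tables.get(table, []))

    def column_min(self, table: str) -> int:
        return min(p.min_value for p in self.tables[table])

    def column_max(self, table: str) -> int:
        return max(p.max_value for p in self.tables[table])


if __name__ == "__main__":
    meta = MetadataService()
    meta.record_partition("orders", [5, 1, 9])  # first load
    meta.record_partition("orders", [12, 3])    # second load
    print(meta.count_rows("orders"), meta.column_min("orders"), meta.column_max("orders"))
```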

The Snowflake architecture has the advantage of scaling any layer independently of the others. You can, for example, elastically scale the storage layer and be charged separately for storage. Several virtual warehouses can be deployed and expanded when there is a need for more resources for faster query processing and better performance.

How Does Snowflake Store Data Internally?

Let us now understand the Snowflake data storage layer in detail.

As soon as data enters the Snowflake storage layer, it is reorganized into multiple micro-partitions that are internally optimized, compressed, and stored in columnar format. Storing data in the cloud simplifies data administration, since the storage works as a shared-disk architecture (the data is accessible by all clusters). The data objects Snowflake keeps in its storage layer are neither directly visible nor directly accessible; users can reach them only by running SQL queries.

Micro-partitions, data clustering, and columnar format are key storage-layer concepts that shape Snowflake's table structure and enable faster data retrieval.

-

Micro Partition- Unlike traditional static partitioning, which requires you to manually specify a column to partition on, Snowflake automatically divides all table data into micro-partitions, which are contiguous units of storage. Each micro-partition contains between 50 MB and 500 MB of uncompressed data. The storage layer automatically selects the most effective compression algorithm for each column within each micro-partition. Groups of rows are mapped into individual micro-partitions and organized in columnar format. Tables are partitioned dynamically, following the order in which data is inserted or loaded. All DML operations (such as DELETE and UPDATE) use the underlying micro-partition metadata, which simplifies table maintenance; some operations, such as deleting all records from a table, are metadata-only.

-

Data Clustering- Data clustering matters because unordered or only partially sorted table data can hurt query performance, especially on large tables. Each time data is loaded or inserted into a table, Snowflake collects and records clustering metadata for every micro-partition created in the process. Snowflake then uses this metadata to skip micro-partitions that cannot contain matching rows, which speeds up queries that filter on those columns.

-

Columnar Format- Columnar data storage offers several benefits over row-based formats.

-

Data security, as the data is not stored in a human-readable form.

-

Low storage consumption: values within a column compress well, and reading only the columns a query needs reduces latency and speeds up data reads.

-

Supports complex nested data structures.

-

Designed to work best with queries that process massive amounts of data.
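The three ideas above (micro-partitions, clustering metadata, and columnar storage) fit together in query pruning, which the Python sketch below illustrates. It is a scaled-down, hypothetical model: partitions hold 10 rows instead of 50 to 500 MB, each partition stores its data column by column with a (min, max) pair per column, and the query skips any partition whose range cannot match. Comparing well-clustered data with scattered data shows why clustering matters.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class MicroPartition:
    """A columnar chunk of a table plus min/max metadata for each column."""
    columns: dict[str, list[int]]
    stats: dict[str, tuple[int, int]]  # column name -> (min, max)


def build_partitions(rows: list[dict[str, int]], rows_per_partition: int) -> list[MicroPartition]:
    """Split rows in arrival order, then store each chunk column by column."""
    partitions = []
    for start in range(0, len(rows), rows_per_partition):
        chunk = rows[start:start + rows_per_partition]
        columns = {name: [row[name] for row in chunk] for name in chunk[0]}
        stats = {name: (min(vals), max(vals)) for name, vals in columns.items()}
        partitions.append(MicroPartition(columns, stats))
    return partitions


def sum_where_between(partitions: list[MicroPartition], filter_col: str,
                      low: int, high: int, value_col: str) -> tuple[int, int]:
    """SUM(value_col) WHERE filter_col BETWEEN low AND high; returns (total, scanned)."""
    total, scanned = 0, 0
    for part in partitions:
        col_min, col_max = part.stats[filter_col]
        if col_max < low or col_min > high:
            continue  # pruned: the metadata proves no row can match
        scanned += 1
        # Columnar layout: read only the two columns the query references.
        for key, value in zip(part.columns[filter_col], part.columns[value_col]):
            if low <= key <= high:
                total += value
    return total, scanned


def make_rows(days: Iterable[int]) -> list[dict[str, int]]:
    return [{"day": d, "amount": d} for d in days]


if __name__ == "__main__":
    clustered = build_partitions(make_rows(range(100)), 10)  # loaded in day order
    scattered = build_partitions(make_rows([(i * 37) % 100 for i in range(100)]), 10)
    print(sum_where_between(clustered, "day", 20, 29, "amount"))
    print(sum_where_between(scattered, "day", 20, 29, "amount"))
```

Both queries return the same total, but the clustered table scans a single partition while the scattered table must scan all ten, because every scattered partition's min/max range overlaps the filter.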

The AWS-Snowflake Partnership

Snowflake is a cloud-native data warehousing platform for importing, analyzing, and reporting on vast amounts of data, and it was first made available on Amazon Web Services (AWS). Traditional on-premises systems like Oracle and Teradata are costly for many small and mid-sized organizations, largely because of the cost of purchasing, installing, and maintaining their hardware and software. Snowflake, on the other hand, is deployed in the cloud and is immediately available to users. Its pricing approach gives businesses of all sizes the freedom to use a data warehouse as unified storage for business data analytics and reporting. AWS users can deploy Snowflake environments directly from the AWS cloud.

As an Amazon Web Services partner, Snowflake provides a complete set of services for data warehousing on AWS, including-

-

AWS PrivateLink lets Snowflake clients connect to their Snowflake instance quickly and securely without using the public internet.

-

Snowflake Snowpipe is an automated, cost-effective service for loading data from AWS into Snowflake. It asynchronously detects new files in Amazon Simple Storage Service (Amazon S3) and loads them into Snowflake, using Amazon SQS notifications and other AWS technologies.

-

AWS Glue integration allows you to handle data transformation and ingestion pipelines more easily.

-

Snowflake data access for Amazon SageMaker reduces data preparation time and provides a single source of truth for Amazon's machine learning modeling solutions.
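The event-driven loading pattern behind Snowpipe can be sketched in Python. This is a simplified, hypothetical model rather than Snowpipe's implementation: a standard-library queue stands in for the SQS notification queue, a set records which files have already been loaded so duplicate notifications do not load a file twice, and the bucket paths are made up.

```python
import queue
from typing import Callable


class AutoIngestPipe:
    """Simplified, hypothetical model of event-driven loading in the style of Snowpipe."""

    def __init__(self, read_file: Callable[[str], list[dict]]) -> None:
        self.read_file = read_file           # turns a file path into rows
        self.notifications = queue.Queue()   # stands in for the SQS queue
        self.load_history: set[str] = set()  # files already loaded
        self.table: list[dict] = []

    def on_object_created(self, path: str) -> None:
        """Called when the storage service reports a new file."""
        self.notifications.put(path)

    def process_notifications(self) -> int:
        """Load each newly announced file once; return how many files were loaded."""
        loaded = 0
        while not self.notifications.empty():
            path = self.notifications.get()
            if path in self.load_history:
                continue  # duplicate event: the file was already loaded
            self.table.extend(self.read_file(path))
            self.load_history.add(path)
            loaded += 1
        return loaded


if __name__ == "__main__":
    # Hypothetical files in a hypothetical bucket.
    files = {
        "s3://example-bucket/orders/a.csv": [{"id": 1}],
        "s3://example-bucket/orders/b.csv": [{"id": 2}, {"id": 3}],
    }
    pipe = AutoIngestPipe(files.__getitem__)
    for path in ["s3://example-bucket/orders/a.csv",
                 "s3://example-bucket/orders/b.csv",
                 "s3://example-bucket/orders/a.csv"]:
        pipe.on_object_created(path)
    print(pipe.process_notifications(), len(pipe.table))
```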

Snowflake Competitors

Let us look at key differences between Snowflake and some of its competing data platforms, such as Redshift, BigQuery, and Databricks.

Snowflake vs. RedShift

| Features | Snowflake | Redshift |
|---|---|---|
| Performance | Snowflake often outperforms Redshift in public TPC-based benchmarks, although only by a small margin. Its micro-partition storage strategy scans less data than larger partitions do. Unlike multi-tenant shared-resource solutions, its decoupled storage and compute architecture can isolate workloads, which minimizes resource contention. Increasing warehouse size can often improve performance (at a higher cost), though not always linearly. | Redshift provides more customization options than others and includes a result cache for accelerating repetitive query workloads. However, it does not provide significantly faster compute performance than competing data warehouses. Sort keys can improve performance, but only by a small margin. Indexes are not supported, which makes low-latency analytics on huge data volumes difficult. |
| Scalability | Both data volumes and concurrent queries scale well in Snowflake. The decoupled storage/compute design allows clusters to be resized without downtime and also supports horizontal auto-scaling for higher query concurrency during peak usage. | Even with RA3 nodes, Redshift cannot split different workloads across clusters, which limits its scalability. Although it can scale out to up to 10 clusters to handle query concurrency, by default it can manage a maximum of 50 queued requests across all clusters. |
| Architecture | Snowflake was one of the earliest decoupled storage and compute systems and the first to offer nearly unlimited compute scaling, workload isolation, and horizontal user scalability. It runs on AWS, Azure, and GCP. Because it is multi-tenant over shared resources, you have to transfer data from your VPC to the Snowflake cloud. Its most expensive tier, Virtual Private Snowflake (VPS), runs a dedicated, isolated version of Snowflake. Virtual warehouses are sized along an XS/S/M/.../4XL "T-shirt" axis, and each size bundles preset hardware properties that are hidden from users. | As the first cloud data warehouse of the group, Redshift has the oldest architecture, which was not designed to keep storage and compute separate. Although RA3 nodes now let you scale compute and cache only the data you need locally, all compute still functions as a single unit. It lags behind decoupled storage/compute systems because you cannot isolate different workloads over the same data. Unlike other cloud data warehouses, Redshift is deployed in your VPC and runs as an isolated tenant per customer. |
| Security | In Snowflake, the range of security and compliance options depends on the edition you choose, so check carefully that the edition you are considering includes all the features you need. Snowflake also offers always-on encryption and VPC/VPN network isolation options. | Redshift's end-to-end encryption can be customized to meet your security requirements. A virtual private cloud (VPC) and a VPN are options for isolating your network and connecting it to your existing IT infrastructure. Integration with AWS CloudTrail auditing helps you meet compliance requirements. |


Snowflake vs. BigQuery

| Features | Snowflake | BigQuery |
|---|---|---|
| Architecture | The Snowflake architecture separates compute, storage, and cloud services so each can perform at its best independently. Snowflake uses a time-based pricing approach for compute resources: customers are charged per second of execution time, not for the volume of data scanned during processing. It also offers options for reserved or on-demand storage at different prices. You can choose the features that best suit your business from Snowflake's five editions, with each higher price tier adding functionality. | BigQuery is a serverless data warehouse that removes infrastructure design decisions by managing all resources and automating scalability and availability. As a result, administrators do not need to decide on CPU or storage levels up front. BigQuery offers two pricing options; its on-demand option for compute resources uses a query-based pricing model. |
| Scalability | Snowflake's auto-scaling and auto-suspend features let clusters start or stop during busy or slow periods. Users can easily resize clusters, but not individual nodes. Snowflake can also automatically scale a warehouse out to 10 clusters, with a limit of 20 queued DML statements per table. | Similarly, BigQuery handles all the behind-the-scenes work and automatically provisions additional compute resources as needed. However, BigQuery has a default limit of 100 concurrent users. |
| Security | Snowflake automatically encrypts data at rest. It offers granular permissions for schemas, tables, views, procedures, and other objects, but not for individual columns. Snowflake offers no built-in virtual private networking; however, AWS PrivateLink can fill this gap when Snowflake is hosted on AWS. | BigQuery offers permissions on datasets, individual tables, and views, along with table access controls and column-level security. Because BigQuery is a native Google service, it can use the security and authentication features built into other Google Cloud services, which makes integration much simpler. |
| Pricing | Since Snowflake prices each warehouse separately, your consumption significantly affects the price. Snowflake offers several warehouse sizes (such as X-Small, Small, Medium, Large, and X-Large) with widely varying prices and server/cluster counts. An X-Small warehouse consumes one credit per hour, or roughly 0.0003 credits per second, and the dollar price of each credit varies greatly by tier, starting with Standard Edition. For several Snowflake plans, you can pre-purchase credits to cover consumption. | BigQuery charges users based on the number of bytes read or scanned, and it offers both on-demand and flat-rate pricing. With on-demand pricing, you pay $5 for each TB processed by your queries (the first TB processed each month is free). Under flat-rate pricing, you purchase slots (virtual CPUs), or dedicated resources, to run your queries: 100 slots cost about $2,000 per month, or $1,700 per month with an annual commitment. |
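The two pricing models in the table respond to different workload traits: BigQuery on-demand pricing tracks bytes scanned, while Snowflake compute pricing tracks run time. The Python sketch below models both. It uses the $5/TB rate and 1 TB monthly free tier quoted above (current rates may differ), assumes the Snowflake warehouse runs only while the queries execute back to back, and takes the credit price as a hypothetical input.

```python
def bigquery_on_demand_cost(tb_scanned_per_query: list[float],
                            price_per_tb: float = 5.0,
                            free_tb_per_month: float = 1.0) -> float:
    """Monthly on-demand charge: pay for bytes scanned beyond the free tier."""
    total_tb = sum(tb_scanned_per_query)
    billable_tb = max(0.0, total_tb - free_tb_per_month)
    return billable_tb * price_per_tb


def snowflake_compute_cost(seconds_per_query: list[float],
                           credits_per_hour: float,
                           price_per_credit: float) -> float:
    """Compute charge driven by run time, not bytes scanned.

    Assumes the warehouse runs only while these queries execute back to back."""
    credits = sum(seconds_per_query) * credits_per_hour / 3600
    return credits * price_per_credit


if __name__ == "__main__":
    # Hypothetical month: 10 queries, each scanning 0.5 TB and running 6 minutes.
    print(bigquery_on_demand_cost([0.5] * 10))
    print(snowflake_compute_cost([360] * 10, credits_per_hour=1, price_per_credit=3.0))
```

These numbers are illustrative, not a benchmark: a workload that scans little data but runs long favors scan-based pricing, while one that scans a lot of data quickly favors time-based pricing.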

Snowflake vs. Databricks

| Features | Snowflake | Databricks |
|---|---|---|
| Ease of use | The Snowflake data warehouse has an easy-to-use SQL interface and many automation options that make it even easier to use. For instance, auto-scaling and auto-suspend start and stop clusters during busy or slow periods, and clusters can be resized easily. | Databricks offers cluster auto-scaling, but it is less user-friendly. Since Databricks targets a technical audience, its UI is more complex, and tasks like resizing clusters, upgrading configurations, or changing options require more manual input. The learning curve is steeper. |
| Use cases | Snowflake is the best fit for SQL-based business intelligence use cases. For machine learning and data science use cases on Snowflake data, you will probably need to rely on its partner ecosystem. Like Databricks, Snowflake offers JDBC and ODBC drivers for third-party integration. These partners would typically process Snowflake data with a different engine, such as Apache Spark, before delivering the outputs back to Snowflake. | Databricks can run high-performance SQL queries for business intelligence use cases. To improve reliability over first-generation data lakes ("Data Lake 1.0"), Databricks created the open-source Delta Lake. Because the Databricks Delta Engine runs on top of Delta Lake, SQL queries that were once limited to enterprise data warehouses (EDWs) can now run on Databricks with high performance. |
| Performance | Because Snowflake already holds structured data suited to the business use case, it is the best choice when you need high-performance queries. However, Snowflake can be slower on semi-structured data, since it might have to load the entire dataset into RAM and run a full scan. Snowflake is also batch-based and needs the complete dataset to compute results. | Databricks uses vectorization and cost-based optimization. Hash connectors are another feature Databricks offers to speed up query aggregation. By optimizing data processing tasks in Databricks, you can run high-performance queries. Databricks supports both batch processing and continuous (streaming) data processing. |

Snowflake Projects for Practice in 2022

1. How to deal with slowly changing dimensions using Snowflake?

This project uses the Snowflake Data Warehouse to implement several slowly changing dimensions (SCDs). Snowflake provides various services that help you create an effective data warehouse with ETL capabilities and support for various external data sources. In this project, you will use Snowflake's Streams and Tasks functionality to create Type 1 and Type 2 SCDs.

Source Code- How to deal with slowly changing dimensions using Snowflake?
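For readers new to SCDs, the core difference between Type 1 and Type 2 can be sketched in a few lines of Python. This in-memory sketch shows only the dimension-update logic, not the project's Snowflake Streams and Tasks implementation; the column names `valid_from`, `valid_to`, and `is_current` are common conventions but are assumptions here, and the customer data is hypothetical.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class CustomerVersion:
    """One row of a hypothetical customer dimension."""
    customer_id: int
    city: str
    valid_from: date
    valid_to: Optional[date] = None  # None means "still valid"
    is_current: bool = True


def apply_type1(dim: dict[int, CustomerVersion], customer_id: int, city: str, today: date) -> None:
    """SCD Type 1: overwrite the attribute in place; no history is kept."""
    if customer_id in dim:
        dim[customer_id] = replace(dim[customer_id], city=city)
    else:
        dim[customer_id] = CustomerVersion(customer_id, city, valid_from=today)


def apply_type2(dim: list[CustomerVersion], customer_id: int, city: str, today: date) -> None:
    """SCD Type 2: expire the current version and append a new one, keeping history."""
    for i, row in enumerate(dim):
        if row.customer_id == customer_id and row.is_current:
            if row.city == city:
                return  # nothing changed, so no new version is needed
            dim[i] = replace(row, valid_to=today, is_current=False)
            break
    dim.append(CustomerVersion(customer_id, city, valid_from=today))


if __name__ == "__main__":
    type1: dict[int, CustomerVersion] = {}
    type2: list[CustomerVersion] = []
    for day, city in [(date(2022, 1, 1), "Austin"), (date(2022, 6, 1), "Denver")]:
        apply_type1(type1, 1, city, day)
        apply_type2(type2, 1, city, day)
    print(len(type1), type1[1].city)  # one row, latest value only
    print([(r.city, r.valid_to, r.is_current) for r in type2])  # full history
```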

2. Snowflake Real-Time Data Warehouse Project for Beginners

You will learn how to deploy the Snowflake architecture and build a data warehouse in the cloud to generate business value in this Snowflake Data Warehousing Project. You will gain a better grasp of Snowflake components such as Snowpipe, Time Travel, and so on by working on this project.

Source Code- Snowflake Real-Time Data Warehouse Project for Beginners-1

3. AWS Snowflake Data Pipeline Example using Kinesis and Airflow

This project shows you how to build a Snowflake data pipeline that moves EC2 logs into Snowflake storage and S3 after transformation and processing through Airflow DAGs. You will feed two data streams, customer data and order data, into Snowflake and the processed S3 stages. You will also learn how to create a Snowflake database and set up Snowflake stages.

Source Code- AWS Snowflake Data Pipeline Example using Kinesis and Airflow

Dive Deeper Into The Snowflake Architecture

The Snowflake Data Warehouse architecture empowers businesses to harness the power of data and stay ahead of the competition. Thanks to the Snowflake database architecture, businesses are gaining rich and fast insights, accelerating decision-making, personalizing customer experiences, reducing customer churn, and discovering new revenue streams. Demand for data warehousing solutions like Snowflake will likely keep rising in the coming years, driven largely by Snowflake's pricing model, efficient performance, and architecture. All of these factors make it worthwhile to begin your Snowflake learning journey.

FAQs on Snowflake Architecture

-

Why is the snowflake schema preferred in MicroStrategy (MSTR) architecture?

To handle parent-child attribute relations, MicroStrategy uses a de-normalized snowflake schema.

-

What are the layers of snowflake architecture?

The Snowflake architecture comprises three layers- Database Storage Layer, Query Processing Layer, and Cloud Services Layer.

-

What is special about Snowflake?

Snowflake is special because it can serve as both a data warehouse and a data lake, offering a single platform for building your data warehouse architecture.

-

What does Snowflake do differently?

Snowflake is a data warehouse that combines high performance, high concurrency, flexibility, and scalability to levels not possible with traditional data warehouses. It was developed using a new unique architecture to manage all aspects of data and analytics.

About the Author

Daivi

Daivi is a highly skilled Technical Content Analyst with over a year of experience at ProjectPro. She is passionate about exploring various technology domains and enjoys staying up-to-date with industry trends and developments. Daivi is known for her excellent research skills and ability to distill