In a recent development, Snowflake has announced the general availability of a new feature i.e. “Accessibility to data in Amazon S3-compatible storage”. This exciting addition enables you to create external stages for various cloud storage services and devices that are highly and seamlessly compliant with the Amazon S3 REST API standards. With this feature, you can efficiently manage, govern, and analyze your data irrespective of its storage location, ensuring optimal data management.

The external stage stores an S3-compliant API endpoint, bucket name and path, and credentials. This feature significantly contributes to enhancing your data management capabilities within the Snowflake ecosystem.

One challenge when it comes to loading data into Snowflake database tables and querying external data lakes pertains to the Cost of data transfer. Primarily the egress fees, which are levied for data movement out of a cloud provider’s network. This particular aspect stands out as a substantial cost factor. When your data comes from another cloud environment or even a separate region within the same cloud, it often results in additional expenses for every byte being transferred into the Snowflake platform.

Cloudflare has announced their partnership with Snowflake which empowers customers to employ Cloudflare R2 as an external storage option for their tables. Notably, this entails cheap storage and eliminates egress fees entirely.

As per the Cloudflare “R2 is the ideal object storage platform to build data lakes. It’s infinitely scalable, highly durable (eleven 9’s of annual durability), and has no egress fees. Zero egress fees mean zero vendor lock-in.”

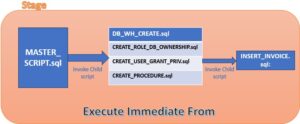

Technical Steps:

Steps to configure Snowflake integration with Cloudflare:

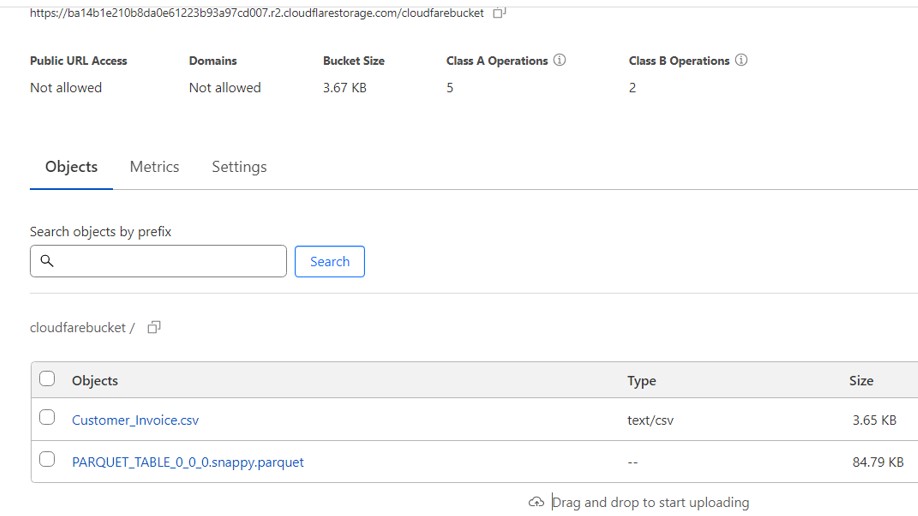

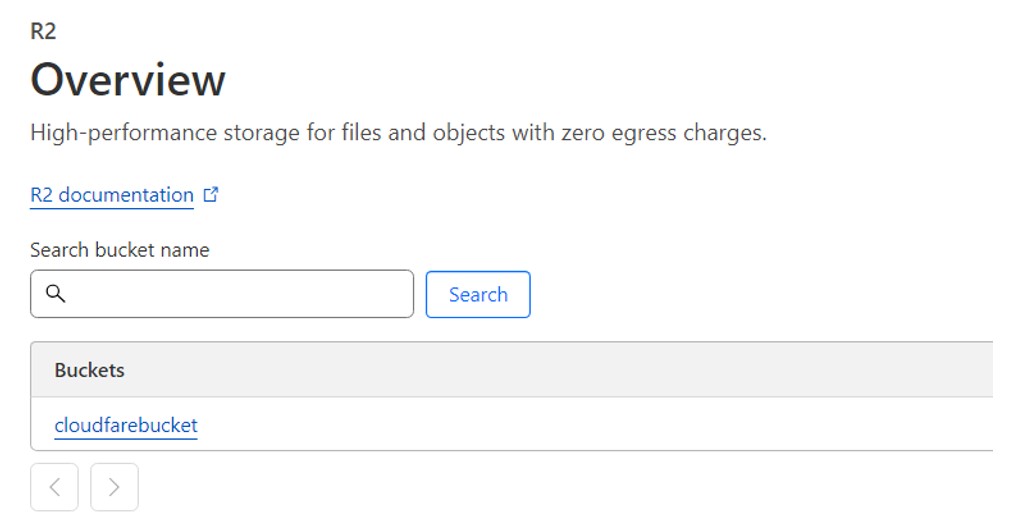

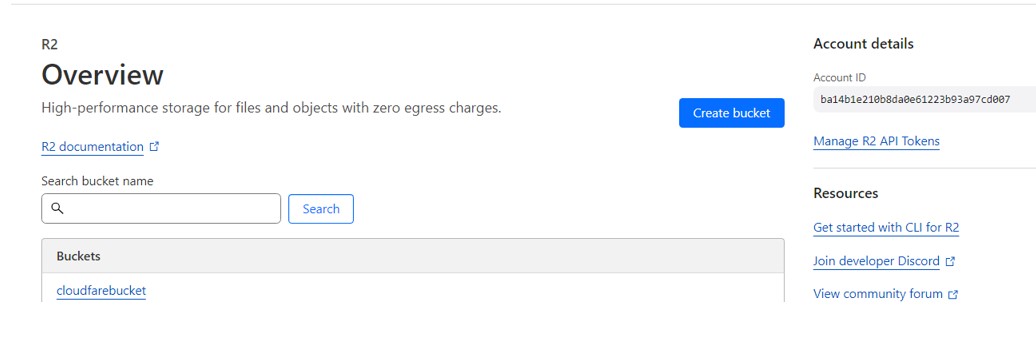

You’ll need to sign up for R2 and create a bucket.

- Use https://dash.cloudflare.com/sign-up URL to get your own account, and verify your email address.

- Enter credit card or PayPal to start using R2.

- Create the Bucket.

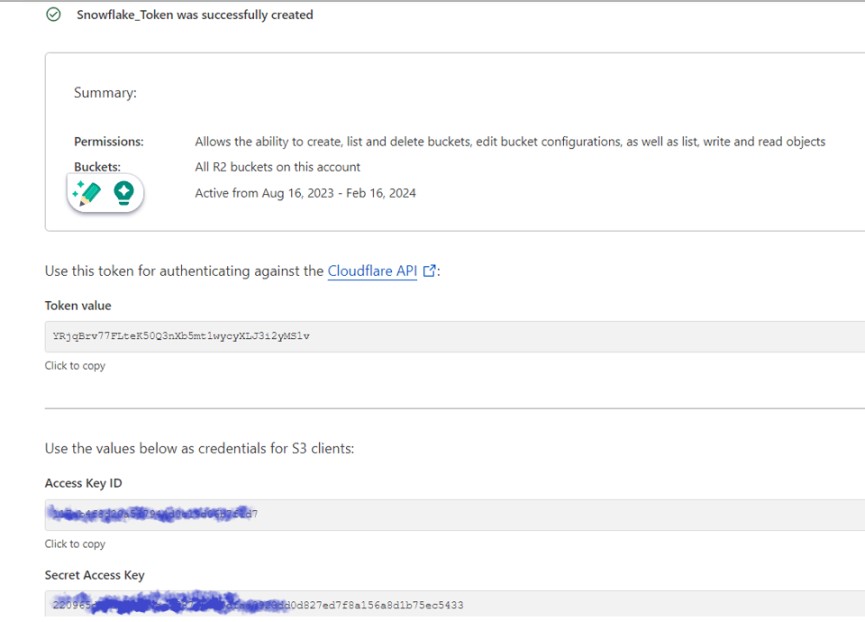

- Create an API token by clicking on Manage R2 API tokens and note ACCOUNT ID as well.

- Note SecretAccess Key and Access Key ID to create an external stage in Snowflake.

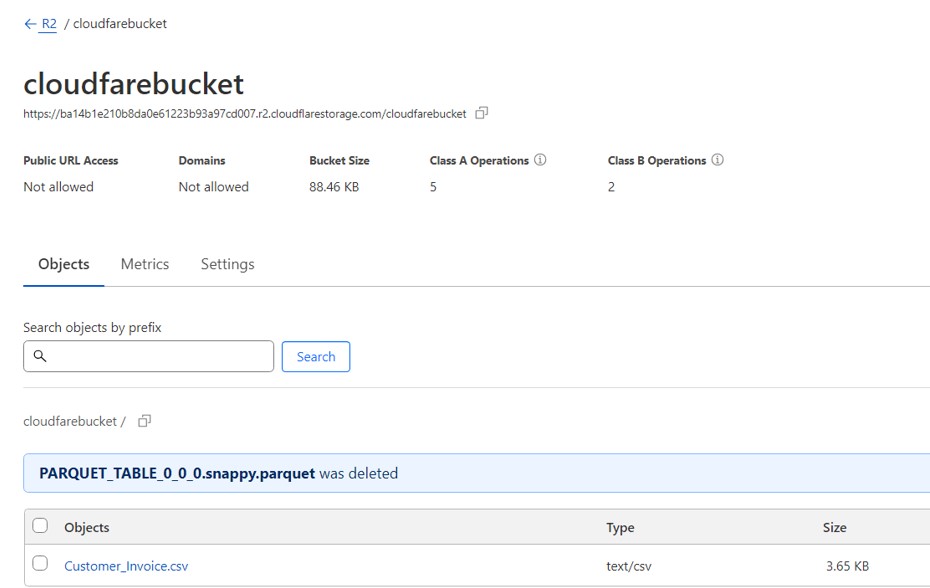

- Upload a file to cloudflare R2 bucket which we will consume in Snowflake.

Creating external stages in Snowflake:

CREATE STAGE cloudfare_stage

URL = 's3compat://cloudfarebucket/'

ENDPOINT = 'ba14b1e210b8da0e61223b93a97cd007.r2.cloudflarestorage.com'

CREDENTIALS = (AWS_KEY_ID = '117ab4f8d20a587944d0c19' AWS_SECRET_KEY = '220965df058a0af4ae278769519dfaa0923');

- Green highlighted : We noted during creation of API token.

- Brown : Standard value.

Note: You may need to contact your Snowflake account team to enable S3-compatible endpoints in Snowflake.

I raised a ticket with support web along with following details and they have whitelisted the required endpoint in a daytime.

Details:

Current Account : AK88284

Region: << Region where Snowflake is hosted>>

Snowflake Account URL : <<Your Snowflake Account URL >>

ENDPOINT : << Account_id.r2.cloudflarestorage.com>>

Vendor : R2 Cloudflare

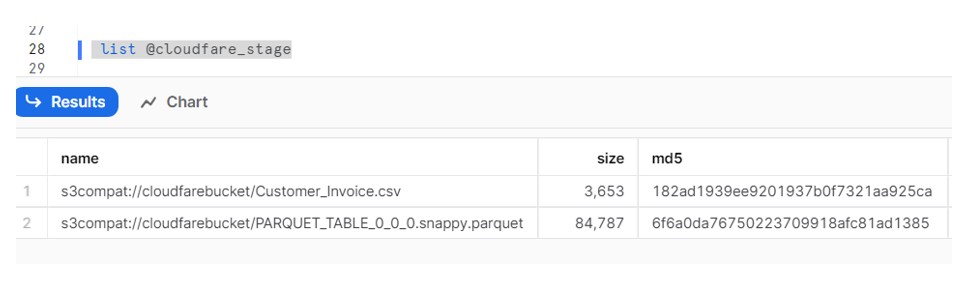

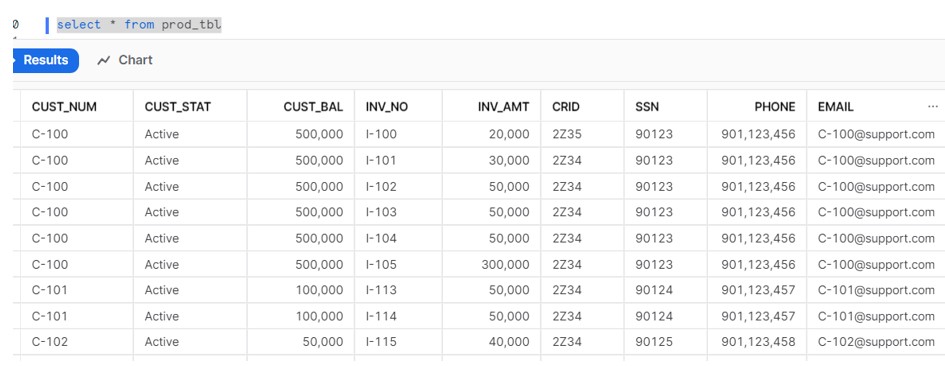

Once I was able to create the Stage (defined above) pointing to s3 compatible R2 storage provider , I created table in snowflake :

create or replace table prod_tbl (

CUST_NUM varchar(8) null,

CUST_STAT varchar(255) null,

CUST_BAL number(20) null,

INV_NO varchar(10) null,

INV_AMT number(10) null,

CRID VARCHAR(4),

SSN VARCHAR(10),

phone number(10),

Email VARCHAR(50)

);

Load the data into Snowflake:

copy into prod_tbl

from @demo_db.public.cloudfare_stage/Customer_Invoice.csv

on_error = CONTINUE;

Also I tried to upload the data from snowflake to Cloudflare Bucket:

copy into @cloudfare_stage/PARQUET_TABLE

from PARQUET_TABLE

file_format = (type=parquet);