The emergence of cloud data warehouses, offering scalable and cost-effective data storage and processing capabilities, initiated a pivotal shift in data management methodologies. This development led to a transition from traditional on-premises servers to cloud-based data architectures, fundamentally altering the data processing paradigm from ETL (Extract, Transform, Load) to ELT (Extract, Load, Transform).

Yet in today’s complex, data-driven world, it is clear that ELT, while transformative at its inception, is now just one part of an ever-evolving data landscape. This article revisits the foundations of ELT: what it is, how it works, and how it reshaped data strategies. More importantly, we will place ELT in the current context, where data is perpetually in motion and the boundaries of innovation are constantly being redrawn.

What Is ELT?

ELT (Extract, Load, Transform) is a data integration technique that collects raw data from multiple sources and loads it directly into the target system, typically a cloud data warehouse, where it is then transformed.

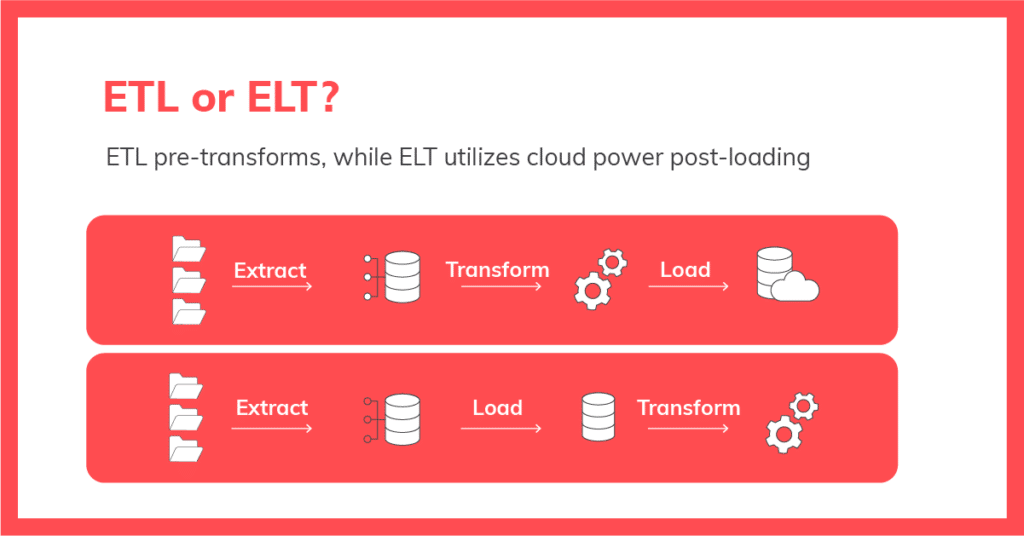

Unlike ETL, where data is transformed before being loaded into the warehouse, ELT adopts a different sequence by loading the data first and then performing the transformation processes within the target system itself.

The approach is not just a change in the order of operations. It represents a more fundamental rethinking of how data is prepared and processed for analytical use, one that takes advantage of the computational power of cloud data warehouses and suits the needs of modern, data-intensive business environments.

How ELT Works

The process of ELT can be broken down into the following three stages:

1. Extract

The initial stage of the ELT process is the extraction of data from various source systems. This phase involves collecting raw data from sources that range from structured data in SQL or NoSQL databases and CRM and ERP systems to unstructured data in text files, emails, and web pages.
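As a minimal sketch of the extract stage, the Python snippet below pulls records from two hypothetical sources, a CSV export and a JSON payload, and leaves the values exactly as they arrive. The source names, field names, and in-memory strings are assumptions made for illustration; a real pipeline would read from databases, APIs, or files.

```python
import csv
import io
import json

# Hypothetical source payloads standing in for a CRM export and an orders API.
CRM_CSV = "id,name,signup_date\n1, Ada Lovelace ,2024-01-05\n2,grace hopper,2024-02-11\n"
ORDERS_JSON = (
    '[{"order_id": 10, "customer_id": 1, "amount": "19.99"},'
    ' {"order_id": 11, "customer_id": 2, "amount": "5.00"}]'
)


def extract_csv(text):
    """Return each CSV row as a dict, with values left untouched."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json(text):
    """Return the decoded JSON records without reshaping them."""
    return json.loads(text)


def extract_all():
    """Collect raw records from every source, keyed by source name."""
    return {
        "crm_customers": extract_csv(CRM_CSV),
        "orders": extract_json(ORDERS_JSON),
    }


raw = extract_all()
```

Note that nothing is cleaned here: stray whitespace, inconsistent casing, and amounts stored as strings are all preserved, because in ELT that work is deferred to the transform stage.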

2. Load

Unlike ETL, where data is often staged and pre-processed, in ELT the data is loaded directly from the source systems into the cloud data warehouse, bypassing the need for a separate staging area. Loading is typically automated for efficiency and reliability, and runs either continuously or in batches, depending on how fresh the data needs to be.
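The load stage can be sketched as follows. This is an illustration under stated assumptions, not a reference implementation: an in-memory SQLite database stands in for a cloud data warehouse, and the `raw_events` table and `load_raw` function are hypothetical names. Each record is stored unchanged as a JSON payload alongside its source and load time.

```python
import json
import sqlite3
from datetime import datetime, timezone


def load_raw(conn, source, records):
    """Append records to a raw table unchanged, one JSON payload per row."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_events ("
        "source TEXT, loaded_at TEXT, payload TEXT)"
    )
    loaded_at = datetime.now(timezone.utc).isoformat()
    rows = [(source, loaded_at, json.dumps(record)) for record in records]
    with conn:  # commit the whole batch, or roll back if an insert fails
        conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", rows)
    return len(rows)


conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse
loaded = load_raw(conn, "orders", [
    {"order_id": 10, "amount": "19.99"},
    {"order_id": 11, "amount": "5.00"},
])
```

A batch-driven pipeline would call a function like this on a schedule, while a continuous one would call it as records arrive.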

3. Transform

The final and critical phase in the ELT process is the transformation of data. This stage involves converting the data from its original format into a format that is suitable for analysis. The transformation is governed by predefined rules that dictate how the data should be altered to fit the requirements of the target data store.

This process can encompass a wide range of activities, each aiming to enhance the data’s usability and relevance. For example:

Aggregating Data: This includes summing up numerical values and applying mathematical functions to create summarized insights from the raw data.

Data Type Conversion: Adjusting data types for consistency across the dataset, which can involve altering date formats, numeric values, or other types.

Text String Modifications: Editing and refining text strings for clarity and uniformity, essential for consistent data interpretation.

Merging Data from Multiple Sources: Combining data from different tables and databases to form a comprehensive and cohesive dataset.
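The four activities above can be sketched in a single SQL statement that runs inside the target system. In this hedged example, an in-memory SQLite database again stands in for the warehouse, and the table and column names (`raw_customers`, `raw_orders`, `customer_revenue`) are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
conn.executescript("""
    -- Raw tables as they might look right after loading (everything is text).
    CREATE TABLE raw_customers (id TEXT, name TEXT);
    CREATE TABLE raw_orders (customer_id TEXT, amount TEXT);
    INSERT INTO raw_customers VALUES ('1', '  ada LOVELACE '), ('2', 'Grace Hopper');
    INSERT INTO raw_orders VALUES ('1', '19.99'), ('1', '5.01'), ('2', '7.50');

    -- Transform inside the warehouse; the raw tables stay untouched.
    CREATE TABLE customer_revenue AS
    SELECT
        CAST(c.id AS INTEGER)                 AS customer_id,    -- data type conversion
        LOWER(TRIM(c.name))                   AS customer_name,  -- text string modification
        ROUND(SUM(CAST(o.amount AS REAL)), 2) AS total_amount    -- aggregation
    FROM raw_customers AS c
    JOIN raw_orders AS o ON o.customer_id = c.id                 -- merging sources
    GROUP BY c.id, c.name;
""")

result = conn.execute(
    "SELECT customer_id, customer_name, total_amount "
    "FROM customer_revenue ORDER BY customer_id"
).fetchall()
```

Because the raw tables are left in place, the transformation rules can be changed and rerun later without extracting the data again.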

Understanding the Key Differences: ETL vs. ELT

Grasping ELT’s role in data management requires understanding how it differs from its predecessor, ETL (Extract, Transform, Load), a topic of ongoing industry discussion.

The primary distinction lies in the sequence and location of the data transformation process. In the ETL process, data extraction is followed by a transformation phase that occurs externally on a separate processing server. Only after this transformation is the data loaded into the target system.

This approach ensures that only processed and refined data is housed in the data warehouse, leaving the raw data outside of it. ETL is traditionally suited for environments where ensuring data integrity before it enters the warehouse is paramount.

Conversely, ELT begins by extracting data from its sources and loading it directly into the target system in its unprocessed form. The transformation of data occurs within the data warehouse itself, after the loading phase. This means that both raw and transformed data coexist within the data warehouse, offering greater flexibility and providing a comprehensive historical context for data analysis.

ELT is particularly beneficial for modern, cloud-based data environments where the data warehouse’s processing capabilities can handle large volumes of data efficiently.
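To make the contrast concrete, here is a small sketch that runs the same hypothetical records through both orderings. It assumes an in-memory SQLite database as a stand-in for the warehouse; the function and table names are illustrative only.

```python
import sqlite3

SOURCE_ROWS = [("1", " 19.99 "), ("2", "5.00")]  # hypothetical raw records


def run_etl(rows):
    """ETL: transform outside the warehouse, then load only the result."""
    cleaned = [(int(i), float(a.strip())) for i, a in rows]  # external step
    wh = sqlite3.connect(":memory:")
    wh.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    wh.executemany("INSERT INTO orders VALUES (?, ?)", cleaned)
    return wh


def run_elt(rows):
    """ELT: load raw rows first, then transform with the warehouse's SQL."""
    wh = sqlite3.connect(":memory:")
    wh.execute("CREATE TABLE raw_orders (id TEXT, amount TEXT)")
    wh.executemany("INSERT INTO raw_orders VALUES (?, ?)", rows)
    wh.execute(
        "CREATE TABLE orders AS "
        "SELECT CAST(id AS INTEGER) AS id, CAST(TRIM(amount) AS REAL) AS amount "
        "FROM raw_orders"
    )
    return wh


def table_names(wh):
    """List the tables present in the warehouse, sorted by name."""
    query = "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    return [name for (name,) in wh.execute(query)]


etl_wh = run_etl(SOURCE_ROWS)
elt_wh = run_elt(SOURCE_ROWS)
etl_tables = table_names(etl_wh)
elt_tables = table_names(elt_wh)
```

Both warehouses end up with the same cleaned `orders` table, but only the ELT warehouse also contains `raw_orders`, which is what allows transformations to be revised and rerun later.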

These differences between ETL and ELT give rise to various implications in terms of data processing speed, scalability, and the flexibility of handling data. For a more in-depth comparison and understanding of these two pivotal data integration methods, you can explore our detailed article: ETL vs. ELT: An In-Depth Comparison.

Benefits of ELT

Compared to ETL, the adoption of ELT in data management strategies offers a host of advantages:

Increased Efficiency and Speed: By loading data directly into the warehouse before transforming it, ELT minimizes the time lag between data collection and availability for analysis. This leads to faster insights and decision-making.

Scalability: Cloud data warehouses have significant processing power, allowing ELT processes to handle large volumes of data more effectively than traditional methods.

Flexibility: With ELT, data is stored in its raw form and transformed as needed. This flexibility allows businesses to adapt quickly to changing data requirements and analytical needs.

Reduced Complexity: ELT simplifies the data pipeline by eliminating the need for separate ETL servers and staging areas, thus reducing the complexity and maintenance overhead.

Improved Data Integrity: Because the raw data remains in the warehouse alongside the transformed data, results can be re-validated against the original records, and the cloud environment offers advanced tools for data cleansing and de-duplication.

What You Should Look for in an ELT Tool

Choosing the right ELT tool extends beyond the basic functionality of extracting, loading, and transforming data. It involves aligning the tool with specific business needs and automating processes so that teams can focus on business value. Here are key considerations:

Automated Data Integration: Modern ELT tools should automate the entire data integration process, eliminating outdated practices like hand-coding. This automation is crucial for reducing labor-intensive tasks and focusing on strategic business outcomes.

Understanding Your Data Landscape:

Know Your Data Source: Identify the sources of your data, understanding their structure, format, and update frequency.

Audit Your Data Sources: Evaluate the quality and consistency of your data sources to preemptively address potential issues.

Optimal Data Extraction Approach: Select a tool that aligns with your data extraction strategy, whether it’s real-time, batch processing, or a combination of both.

Transformation and Loading Capabilities:

Tailored Transformations: The tool should offer flexible transformation features, allowing data manipulation according to your specific business logic.

End Destination Compatibility: Ensure the tool supports your target destination, be it a data warehouse, data lake, or another system.

Efficient Data Loading: Look for features that facilitate seamless data loading, including load scheduling, error handling, and performance optimization.

Maintenance and Compatibility:

Ease of Maintenance: Opt for a tool that simplifies ongoing maintenance and reduces operational complexity.

Future-Proof Compatibility: The tool should integrate seamlessly with your current tech stack and be adaptable to future data solutions.

Cost-Effectiveness: Consider the total cost of ownership. Pre-built ELT solutions are often more cost-effective, faster, and more versatile than custom-built ones, and they suit a variety of data management strategies.

Developer Resources: While custom-built ETL processes are an option, they can be resource-intensive and costly. An efficient ELT tool should provide comprehensive data processing capabilities that are both economical and versatile, aligning with diverse data management needs.

The Alphabet Soup of Data Integration

The terms ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform), along with variations such as EtLT (Extract, transform, Load, Transform) and Zero ETL, represent the evolving strategies for data integration. The key isn’t to dwell on which acronym is superior but to focus on the primary objective: efficiently transporting data to the desired system for transformation and making it accessible for insightful analytics.

From a practical standpoint, the choice between ETL, ELT, EtLT, or any other variation largely depends on the specific needs of the business and its data infrastructure. Factors such as the volume of data, the complexity of transformations required, the existing IT environment, and the strategic goals of the organization play crucial roles in this decision.

For instance, a company heavily invested in cloud infrastructure might find ELT more advantageous due to its compatibility with cloud environments and the ability to handle large-scale data processing efficiently. Conversely, organizations with legacy systems or specific regulatory requirements might still opt for the traditional ETL approach.

Ultimately, the focus should be on the end goal: efficiently bringing data into the system where it can be transformed according to business needs and making it available for analytics teams to extract meaningful insights. The continuous evolution in data management strategies underscores the need for data engineers to remain adaptable, choosing the right tool for the right job. As technology advances, so will the methods of data integration, but the core objective remains constant — to harness the full potential of data for informed decision-making and strategic business growth.