How to Set Data Quality Standards for Your Company the Right Way

It’s a big moment for big data. Advancements in AI are generating countless headlines and breaking adoption records, and talented data specialists may be the hottest hires on the market. But without quality data, even the best technologies and teams won’t be able to drive meaningful results.

And even though accurate and reliable data is the foundation of decision-making and strategy, many companies struggle to achieve high data quality. Technology alone falls short in delivering consistently reliable data, and without organizational support, even the most talented data teams will face an uphill climb when it comes to improving data quality.

This is because data ecosystems are inherently complex, with diverse sources, formats, use cases, and end consumers. Culture, process, technology, and team organization all play a role in data quality. So, in order for your company to uncover the true value of its data, you must take a structured approach to data quality.

That’s where data quality standards come into play. Having a clear and robust set of data quality standards is like having a GPS for the journey of data management — it keeps everything (and everyone) on track, and helps your organization reach the final destination of data-driven decision-making.

Table of Contents

Defining data quality standards and their importance

First things first: let’s define exactly what data quality standards are, and precisely why they matter so much.

What are data quality standards?

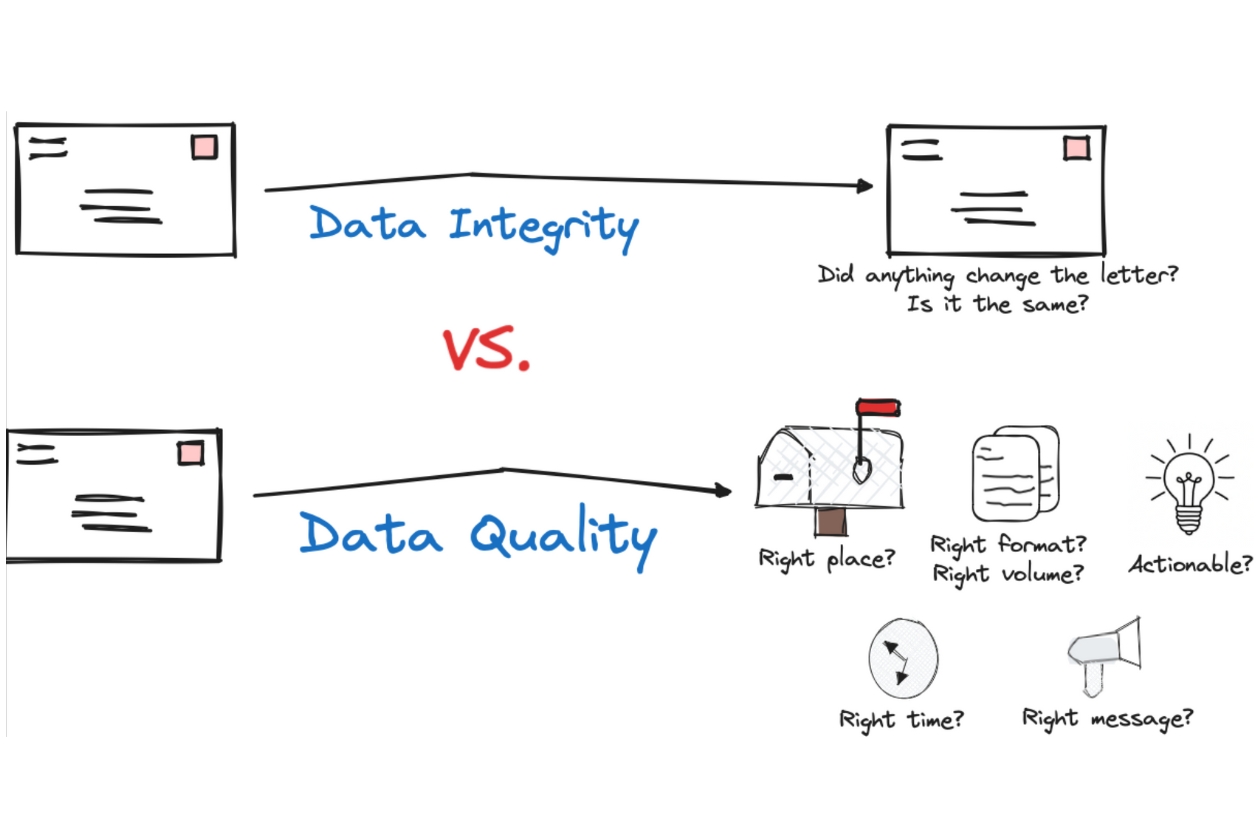

Data quality standards are a set of defined criteria and guidelines that data must meet in order to be considered accurate, reliable, and valuable. And given today’s complex data ecosystems, quality standards are crucial throughout its entire lifecycle — not just final dashboards and reports. Data quality standards need to be applied from the moment of ingestion all the way to final business use.

These standards cover elements of data quality like accuracy, completeness, consistency, timeliness, and relevance. They serve as the guiding principles for every step of data handling, and help ensure all teams strive to meet consistent quality benchmarks.

Why your company needs data quality standards

Data quality standards lay a critical foundation for effective decision-making, operational efficiency, and regulatory compliance. When teams are able to set and stick to data quality standards, stakeholders build confidence in their data’s reliability, and the entire organization fosters a culture of data-driven decision-making.

Without data quality standards, data consumers and stakeholders don’t have clear insight into whether their data is reliable and trustworthy. If organizations make decisions based on inaccurate or incomplete data, it can lead to poor business outcomes and missed opportunities. And when data isn’t trustworthy, decision-makers may revert to relying on their gut instincts rather than data.

Examples of common data quality standards

Every organization will approach data quality standards differently, depending on their data use cases. But most standards will center around the six dimensions of data quality:

- Data accuracy means your data should reflect reality without significant errors or inconsistencies.

Example: All financial transactions must match bank statements to the last cent. - Data completeness means your data sets should contain all relevant information without gaps.

Example: Customer records must include first name, last name, email, and phone number. - Data consistency means your data should not contradict itself or other sources within the organization.

Example: Product IDs should always be alphanumeric and maintain the same number of characters across all systems. - Data freshness (aka data timeliness) means your data should be up-to-date and relevant to the timeframe of analysis.

Example: Social media metrics (e.g., likes, shares) should be refreshed at least once every 12 hours. - Data validity means your data conforms to the required format, type, or range of values.

Example: Email addresses in the customer database should match a valid format (e.g., name@domain.com). - Data uniqueness means your data does not contain unwanted duplicates.

Example: Product SKUs should be unique to avoid confusion in inventory management.

Setting and measuring data quality standards

So how does a data team go about setting and measuring data quality standards? Spoiler alert: with a lot of help and collaboration from across the organization.

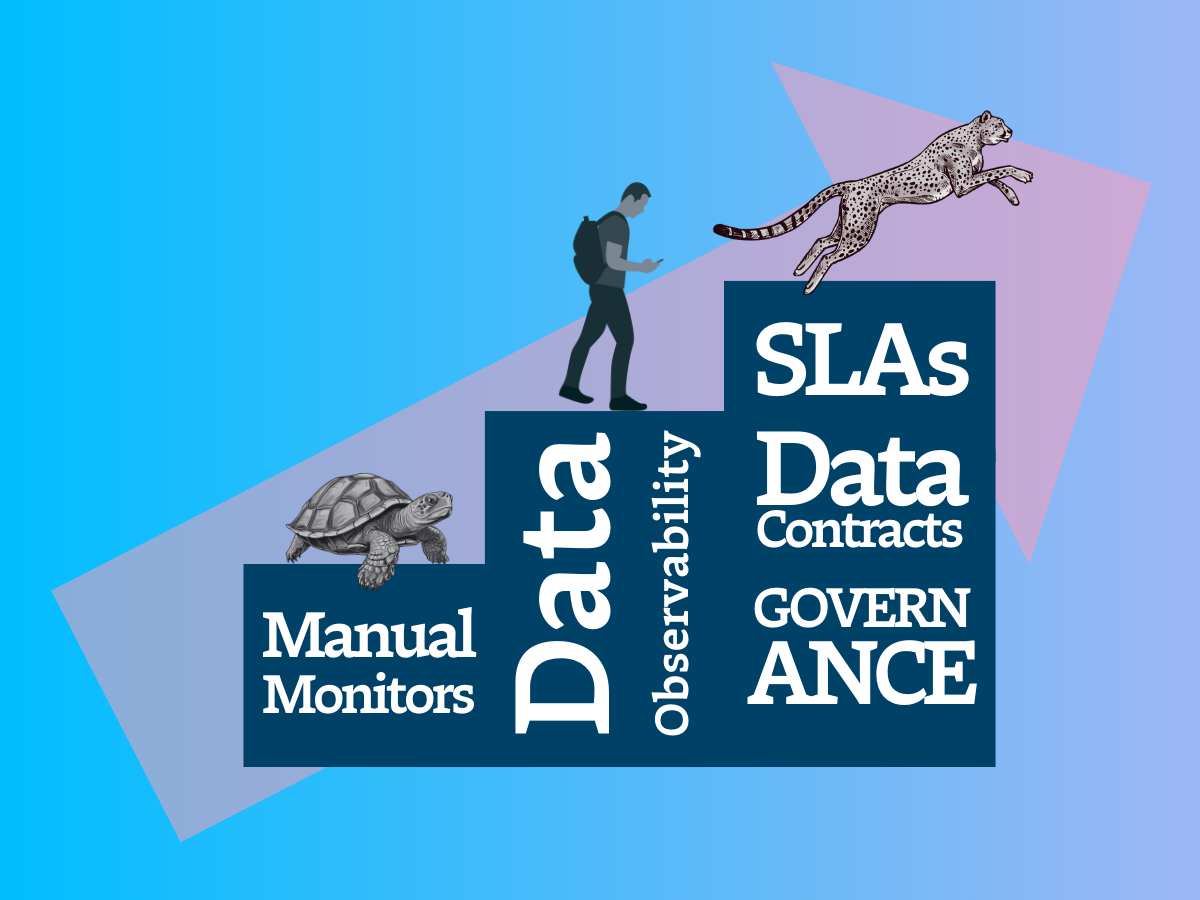

Aligning with stakeholders: SLAs, SLIs, and SLOs

Many organizations adopt an approach to setting data quality standards that will be familiar to stakeholders: SLAs (service-level agreements), SLIs (service-level indicators), and SLOs (service-level objectives). This is a method used in the business world to formalize commitments between a service provider and a customer. For example, a cloud storage company may guarantee specific data retrieval speeds in its SLA, or a SaaS product might promise 99.99% uptime to its users.

SLAs work — and have been so widely adopted — because they set crystal-clear terms and expectations among everyone involved. For a concept as abstract and complex as “data quality”, that clarity is especially crucial.

In the context of data quality standards, here’s what these terms mean:

- Service Level Agreements (SLAs) describe the formal commitment between data providers and data consumers regarding the quality, availability, timeliness, and reliability of the data being exchanged or accessed.

Example: The sales dashboard will reflect data that is no more than 2 hours old from the time of transaction capture. - Service Level Indicators (SLIs) describe the quantitative measures of data quality.

Example: The time difference between the timestamp of the latest data entry in the sales dashboard and the current time. - Service Level Objectives (SLOs) describe target values or ranges of values that each indicator should meet.

Example: 95% of all data updates to the sales dashboard should occur within 1.5 hours of the transaction capture, and no update should ever exceed 2 hours.

We can’t be too clear on this point: setting data SLAs is a team sport. It’s important for data professionals and their stakeholders to come together and determine exactly what ‘quality data’ means for key assets and products. Everyone contributes to the discussion, and everyone’s in agreement with the final outcome.

This process provides clear expectations and builds trust between data teams and business users, and helps everyone stay on the same page. And since the process of defining SLAs helps your data team gain a clearer picture of the business priorities, they’ll be able to quickly prioritize and address the most crucial issues when incidents do occur.

Common measurements for data quality

There are many different measurements you can use to quantify data quality, depending on your use cases and your goals, but here are some common examples:

- Error rates: Percentage of inaccurate or inconsistent data points

- Precision: The ratio of relevant data to retrieved data

- Consistency checks: Number of records that pass/fail consistency validation against predefined rules

- Completeness checks: The percentage of complete records or attributes within a data set

- Staleness: Age of the data or the time since the last update

- Time-to-detection: Average time taken to identify a data issue or discrepancy

- Time-to-resolution: Average time taken to resolve a data issue or discrepancy after it’s identified

- Duplicate records: Number or percentage of records that are redundant or repeated

- Missing values: Number or percentage of records with missing or null attributes

- Failed ETL jobs: Number or percentage of ETL processes that did not complete successfully

- Data downtime: The number of data incidents multiplied by the average time-to- detection plus the average time-to-resolution.

Some organizations evaluate data quality metrics based on statistical analysis, conducting a comprehensive review of data patterns and trends. Other teams use sampling techniques that evaluate a sample of a dataset and make inferences about the remaining data. And some teams leverage automated validation processes, which use technology to automatically ensure data quality (more on that in a minute).

Ownership of measurements

Different teams can play different roles in measuring data quality, but you’ll want to be sure clear accountability is assigned and good communication channels are in place.

For example, while data engineers may excel at measuring technical aspects of data quality, business analysts can bring their understanding of organizational objectives to assess whether data is aligned with business goals and supports decision-making. This collaborative approach helps teams better understand the full scope of data quality, balancing technical intricacies with its broader strategic relevance.

Data quality standards for governance

Data governance is the process of maintaining the availability, usability, privacy, and security of data. Today’s modern approach to data governance is incredibly complex, with governance teams needing to oversee increasing volumes of data ingested from diverse sources, dispersed storage across cloud-based infrastructures, and an appetite for democratized access across the organization.

Data quality standards are an integral piece of the governance puzzle, and seamlessly integrate with governance strategies by establishing a structured process for data collection, transformation, and validation. In many organizations, data governance teams often take the lead on setting data quality standards.

Leveraging data observability platforms to enforce data quality standards

Of course, setting data quality standards is just the first step. Enforcing them is equally important. And this is where technology can help.

Data observability platforms and strategies use automation to monitor and measure data quality across key dimensions. When data teams adopt observability tooling, they receive real-time insights into data pipelines and notifications when anomalies, errors, or inconsistencies occur — across the entire data lifecycle.

Data observability capabilities and features

A good data observability platform includes a broad range of features to support holistic data health, but a few core capabilities are essential to enforcing data standards at scale:

- Automated monitoring: Data quality tests occur automatically, at scale, across the entire data lifecycle. The tests are based on both automated rules set by machine learning algorithms, based on historic patterns in your datasets, as well as custom thresholds you can set to support specific business requirements.

- Intelligent alerting: When issues occur, notifications are sent to the relevant teams in their preferred channels (such as Slack, email, or PagerDuty). This helps drastically improve time-to-detection.

- End-to-end lineage: A visual map of the relationships between upstream and downstream dependencies in your data pipelines — ideally, down to the field-level. Lineage is extremely helpful at speeding up time-to-resolution and mitigating the impacts of data downtime, since you’ll see at a glance which assets and stakeholders are affected.

Additionally, data observability platforms provide robust reporting that should make it easy to track and share how your organization is performing against your data quality standards and SLAs.

Bottom line: data observability makes it possible to monitor, measure, and improve data quality standards at scale.

Achieving data reliability with the help of data quality standards

Organizations today are ingesting more data, making it more accessible to more users, and under ever-increasing pressure to make use of their data with emerging AI capabilities. That’s why it’s never been more important to develop and implement a structured approach to data quality standards.

Getting there requires a lot of collaboration, alignment, and the right set of tools. If you’d like to learn how data observability and the Monte Carlo platform can help your team unlock the full potential of your data quality, let’s talk!

Product demo.

Product demo.  What is data observability?

What is data observability?  What is a data mesh--and how not to mesh it up

What is a data mesh--and how not to mesh it up  The ULTIMATE Guide To Data Lineage

The ULTIMATE Guide To Data Lineage