Logistic Regression vs Linear Regression in Machine Learning

Understand when and why you should use linear vs logistic regression when working on real-world, hands-on machine learning and data science projects.

This blog introduces the critical differences between logistic regression and linear regression. We first introduce the two machine learning algorithms in detail and then move on to their practical applications to answer questions like when to use linear regression vs logistic regression.

Linear Regression vs Logistic Regression - How are they related?

Machine learning, as the name suggests, is about training a machine to learn hidden patterns in a dataset through mathematical algorithms. Often, these patterns are revealed by predicting the value of a target variable from the information (attributes) contained in the dataset. But predicting a target variable is not always the end goal. We often come across datasets that do not have a target variable (the setting of unsupervised learning); there, the objective is to find patterns in the dataset and group together objects that follow a similar trend.

In this blog, we will restrict ourselves to problems that require you to predict a target variable. Such problems fall into the category of supervised machine learning problems. The target variable usually takes one of two kinds of values: continuous or discrete. Based on the kind of value the target variable takes, these machine learning problems can be classified into two categories:

-

Regression Problems

-

Classification Problems

Let us now explore the two types of machine learning problems in detail.

Regression Problems

Regression problems are those problems where the variable to be predicted can take continuous values. Let us look at a few practical examples that will help you understand this category of problems.

Example 1: Predicting house prices from features like location, area, etc., where the prices take continuous values. Popular datasets for this problem are the Boston Housing dataset and the Zillow dataset.

Example 2: Forecasting the demand for rental bikes in the Capital Bikeshare program of Washington, D.C. You can use the Bike Sharing Dataset from the UCI Machine Learning Repository for this problem.

Example 3: Predicting the sales of food stores using data collected by a meal delivery company, to help the stores with inventory planning. You can use the Demand Forecasting for a store Data Set from the UCI Machine Learning Repository for this problem.

All the examples above require predicting variables that take continuous values, and data science offers various models to solve such problems. A few of them are:

-

Linear Regression

-

Decision Tree

-

Random Forests

-

Ridge and Lasso Regression

Classification Problems

When exploring machine learning projects, you will encounter problems that require you to assign a label to each set of feature variables (or row) in the given dataset. These kinds of problems are called classification problems.

Let us discuss a few examples of classification problems to enhance your understanding.

Example 1: Predicting the quality of wines in the Wine Quality Data Set from the UCI Machine Learning Repository by labelling each wine with a score from 0 to 10, where 0 indicates the poorest quality and 10 the best.

Example 2: Distinguishing fake news articles that spread false information from real news articles, using the Fake News dataset.

Example 3: Classifying chest X-ray images to predict whether a patient is suffering from pneumonia, using the Chest X-Ray Images (Pneumonia) Dataset.

The popular algorithms widely used to solve classification problems are:

-

Logistic Regression

-

Naive Bayes Classifier

-

Random Forest

-

K-Nearest Neighbour

Now that you understand the two basic types of machine learning problems, let us explore two of the simplest and most widely used machine learning algorithms: linear regression and logistic regression.

Introduction to Linear Regression

Linear regression is a supervised machine learning algorithm used to solve regression problems in data science. It assumes a simple linear relationship between the feature variable (X) and the target variable (Y). Mathematically, this means

Y ≈ aX + b

where a represents the slope and b the intercept of the model. We write "approximately equal to" (≈) because the model's predictions (Ŷ = aX + b) are not exact: real data rarely lie perfectly on a straight line. The model first learns the coefficients a and b from the dataset and then uses the same coefficients to predict Ŷ.
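As a minimal sketch of "learn a and b, then predict Ŷ", the snippet below fits a single-feature model in pure Python. It uses the closed-form ordinary least-squares solution rather than the gradient descent method described next; the function names and the toy data are hypothetical, chosen only for illustration.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (a, b) minimising the squared error of Y ~ aX + b (ordinary least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: covariance of X and Y divided by the variance of X
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sxy / sxx
    # Intercept: the fitted line passes through the point of means
    b = mean_y - a * mean_x
    return a, b


def predict(a, b, xs):
    """Use the learned coefficients to compute Y_hat = aX + b for each input."""
    return [a * x + b for x in xs]


# Hypothetical toy data that roughly follows y = 2x + 1 with a little noise
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]
a, b = fit_simple_linear_regression(xs, ys)
print(round(a, 2), round(b, 2))  # -> 1.97 1.06
print(predict(a, b, [5.0]))
```

The same two learned numbers are reused for every new input, which is exactly the "learn once, then predict" pattern described above.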

Gradient Descent Method

To estimate the coefficients of the linear regression algorithm, we often use the gradient descent method. In this method, the gradient of an error function is evaluated and used to move the coefficients step by step toward the error function's minimum.

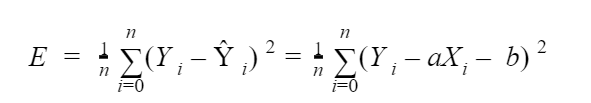

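The error function is typically defined as the average of the squared differences between the observed values of the target variable and the model's predictions, taken over all the observations, where n denotes the number of observations in the dataset.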
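Assuming the error function is the mean squared error (a common choice; some texts add a factor of 1/2, which does not change where the minimum lies), it can be written as follows, with x_i and y_i denoting the i-th observation:

```latex
E(a, b) = \frac{1}{n} \sum_{i=1}^{n} \bigl( y_i - (a x_i + b) \bigr)^2
```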

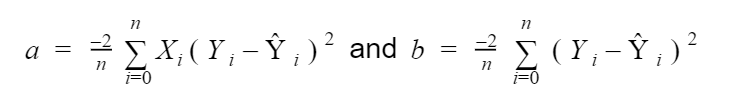

We then evaluate the gradient of this error function with respect to the coefficients a and b, and correct each coefficient by moving it a small step in the direction opposite to its gradient. The size of this step is set by the learning rate, a parameter that controls how much the coefficients are increased or decreased in each iteration.
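Under the mean-squared-error assumption, the gradients are ∂E/∂a = -(2/n) Σ x_i (y_i - (a x_i + b)) and ∂E/∂b = -(2/n) Σ (y_i - (a x_i + b)), and each update subtracts the learning rate times the gradient. The sketch below implements that loop in pure Python; the learning rate of 0.05, the iteration count, the function name and the toy data are illustrative assumptions, not tuned values.

```python
def gradient_descent(xs, ys, learning_rate=0.05, n_iters=2000):
    """Fit Y ~ aX + b by gradient descent on the mean squared error."""
    n = len(xs)
    a, b = 0.0, 0.0  # start from zero coefficients
    for _ in range(n_iters):
        # Residuals y_i - (a * x_i + b) under the current coefficients
        residuals = [y - (a * x + b) for x, y in zip(xs, ys)]
        # Gradients of E(a, b) = (1/n) * sum(residual ** 2)
        grad_a = -2.0 / n * sum(x * r for x, r in zip(xs, residuals))
        grad_b = -2.0 / n * sum(residuals)
        # Step against the gradient, scaled by the learning rate
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
    return a, b


# Hypothetical toy data that roughly follows y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]
a, b = gradient_descent(xs, ys)
print(round(a, 2), round(b, 2))  # -> 1.97 1.06
```

On this toy data the loop converges to the same coefficients as the closed-form least-squares fit (about a = 1.97, b = 1.06); a learning rate that is too large would make the updates overshoot and diverge.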

Introduction to Logistic Regression

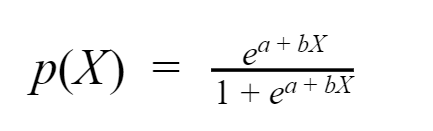

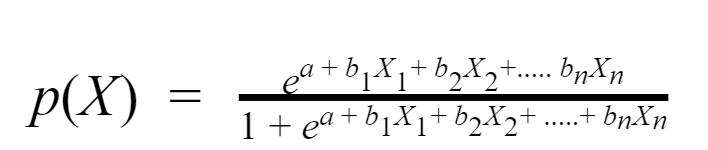

Although logistic regression has the word "regression" in its name, it is a supervised machine learning algorithm used to solve classification problems. It can do this because it uses the logistic function, whose output always lies between 0 and 1. Therefore, we can use logistic regression to predict the probability that an observation with feature variable X belongs to a particular category of Y. To compute this probability, logistic regression passes the linear expression aX + b through the logistic function, where a and b are the algorithm coefficients.
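Written out, and assuming the standard form of the logistic function (the original figures may arrange it equivalently), the predicted probability for a single feature variable X is:

```latex
p(X) = \Pr(Y = 1 \mid X) = \frac{e^{aX + b}}{1 + e^{aX + b}} = \frac{1}{1 + e^{-(aX + b)}}
```

For any real value of aX + b, p(X) lies strictly between 0 and 1.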

Maximum Likelihood

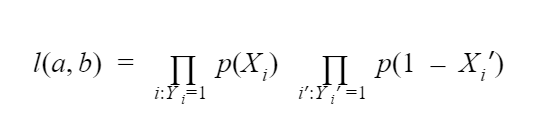

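The coefficients of the logistic regression algorithm are computed by maximising the likelihood function, that is, by choosing the values of a and b that make the observed class labels most probable under the model.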
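For binary labels coded as y_i ∈ {0, 1} (an assumption made here for concreteness), with p(x_i) the predicted probability for the i-th of n observations, the likelihood and the log-likelihood that is maximised in practice are:

```latex
\ell(a, b) = \prod_{i=1}^{n} p(x_i)^{y_i} \bigl(1 - p(x_i)\bigr)^{1 - y_i}
\qquad
\log \ell(a, b) = \sum_{i=1}^{n} \Bigl[ y_i \log p(x_i) + (1 - y_i) \log\bigl(1 - p(x_i)\bigr) \Bigr]
```

Taking the logarithm turns the product into a sum without moving the maximum, which makes the optimisation numerically easier.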

Linear Regression vs Logistic Regression - Understanding the Differences

Now that you understand both algorithms, let us compare linear regression vs logistic regression in more depth.

Logistic vs Linear Regression - Mathematical Significance

In this section, we compare linear and logistic regression to understand how they differ mathematically. A fundamental difference is that the two use different equations to compute the output value for the target variable. Below we list other, less obvious differences.

-

The linear regression model directly predicts the value of the target variable. However, the logistic regression algorithm predicts the probability that an observation with the given feature variables belongs to a particular category.

-

The linear regression algorithm finds the best-fitting straight line relating the feature variables to the target variable. On the other hand, logistic regression passes a linear expression of the inputs through the logistic function, producing a value between 0 and 1 that is typically thresholded (for example, at 0.5) to assign a class.

-

The linear regression coefficients can be estimated with the gradient descent approach, where we adjust the intercept and slope to make the error as small as possible (a closed-form least-squares solution also exists). For logistic regression, we use the maximum likelihood method, which is widely used for fitting various non-linear models.

-

To test the null hypothesis that there is no relationship between a feature variable and the target variable, linear regression uses the t-statistic, whereas logistic regression uses the z-statistic. A large absolute value of the t-statistic or z-statistic is evidence against the null hypothesis, that is, it suggests that a relationship does exist.
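To make the maximum likelihood point concrete, here is a minimal pure-Python sketch that fits a single-feature logistic regression by gradient ascent on the average log-likelihood, whose gradients are (1/n) Σ (y_i - p_i) x_i for a and (1/n) Σ (y_i - p_i) for b. Production libraries typically use more sophisticated optimisers, so treat the learning rate, iteration count, function names and toy data as illustrative assumptions.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(xs, ys, learning_rate=0.5, n_iters=10000):
    """Fit p(X) = sigmoid(aX + b) by gradient ascent on the average log-likelihood."""
    n = len(xs)
    a, b = 0.0, 0.0
    for _ in range(n_iters):
        # Predicted probabilities of class 1 under the current coefficients
        ps = [sigmoid(a * x + b) for x in xs]
        # Gradient of the average log-likelihood with respect to a and b
        grad_a = sum((y - p) * x for x, y, p in zip(xs, ys, ps)) / n
        grad_b = sum(y - p for y, p in zip(ys, ps)) / n
        # Step *up* the gradient, because we are maximising the likelihood
        a += learning_rate * grad_a
        b += learning_rate * grad_b
    return a, b


# Hypothetical binary data; the classes overlap, so the maximum likelihood estimate is finite
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0, 0, 1, 0, 1, 1]
a, b = fit_logistic_regression(xs, ys)
probs = [sigmoid(a * x + b) for x in xs]
labels = [1 if p >= 0.5 else 0 for p in probs]  # threshold probabilities at 0.5
print(labels)  # -> [0, 0, 0, 1, 1, 1]
```

Unlike the linear model, every output here is a probability between 0 and 1, and the class label comes from thresholding it.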

Multiple Linear Regression vs Multiple Logistic Regression

We have introduced equations of linear regression and logistic regression that deal with only one feature variable. However, in real-world problems you will likely have more than one feature variable to deal with. We can extend both models to handle multiple feature variables. In this section, we discuss how this extension works mathematically.

Multiple Linear Regression

For linear regression, one might initially expect that fitting a separate model on each feature variable is a good idea. But it is not, because it is challenging to combine the separate models into a single output. Furthermore, each fitted equation ignores the effect of the other feature variables on the target variable.

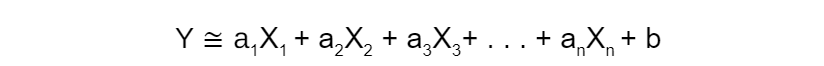

So, the better approach is a single equation that incorporates all the feature variables. We can accomplish this by assigning a separate slope coefficient to each feature variable. Thus, if we have n different feature variables X1, X2, ..., Xn, the linear regression model predicts the target variable as a1X1 + a2X2 + ... + anXn + b.

The coefficients ai act as weights for the feature variables, quantifying how strongly each feature variable is related to the target variable when the others are held fixed.
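A minimal sketch of fitting all the slopes jointly: the hypothetical helper below, written in pure Python for small problems only, solves the least-squares normal equations (X^T X) w = X^T y, where a column of ones is prepended so that the intercept b is estimated alongside a1, ..., an. This is a swapped-in closed-form technique rather than gradient descent; for real datasets a numerical library's least-squares solver would be the usual choice.

```python
def fit_multiple_linear_regression(rows, ys):
    """Least-squares fit of Y ~ b + a1*X1 + ... + an*Xn; returns [b, a1, ..., an]."""
    # Prepend a constant 1 to every row so the intercept b is estimated like a slope
    X = [[1.0] + [float(v) for v in row] for row in rows]
    m, k = len(X), len(X[0])
    # Augmented matrix [X^T X | X^T y] of the normal equations
    aug = [
        [sum(X[r][i] * X[r][j] for r in range(m)) for j in range(k)]
        + [sum(X[r][i] * ys[r] for r in range(m))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    # The matrix part is now diagonal, so each weight is a single division
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Hypothetical toy data generated exactly from Y = 1 + 2*X1 - 3*X2
rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
ys = [1, 3, -2, 0, 2]
weights = fit_multiple_linear_regression(rows, ys)
print([round(w, 6) for w in weights])  # -> [1.0, 2.0, -3.0]
```

Because all the coefficients are solved for together, each slope reflects its feature's contribution with the other features accounted for, which separate one-feature fits cannot do.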

Multiple Logistic Regression

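For logistic regression, we will consider binary classification problems, where the target variable can take only two values. Multiple logistic regression for such cases extends the single-variable model in the same way as multiple linear regression: the linear expression passed through the logistic function gets one slope coefficient per feature variable, so the predicted probability is p(X) = 1 / (1 + e^-(a1X1 + a2X2 + ... + anXn + b)), where n denotes the number of feature variables.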

Linear vs Logistic Regression - Use Cases

Now comes the answer to the most interesting question of this blog, which will help you understand how to apply the two algorithms we have been discussing. Comparing the applications of linear regression vs logistic regression is fairly straightforward, mainly because of the mathematical form of their equations.

When to use linear regression vs logistic regression?

The linear regression algorithm is meant for problems that expect a quantitative response as the output. Thus, it is best suited for regression problems where the target variable takes continuous values. On the other hand, the logistic regression algorithm is meant for classification problems where the output takes discrete values, like 0 or 1. Thus, it is best suited for assigning labels to a set of feature variables.

Can we use Linear Regression for classification?

A question commonly asked in interviews is why we do not use linear regression to solve classification problems. The answer is that if we encode classes with numbers like 1, 2, 3, linear regression treats those codes as ordered, equally spaced quantities. If we interchange which label gets which number, the fitted model and its predictions change even though the data have not, so the conclusions depend on an arbitrary choice of encoding.

However, for binary classification, one can still use linear regression, provided the outputs are interpreted as crude estimates of probabilities; some predictions may even fall below 0 or above 1.
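The sketch below illustrates that caveat on made-up data: it fits an ordinary least-squares line to 0/1 labels and then evaluates the line at new inputs. The helper name and the data are hypothetical.

```python
def fit_least_squares(xs, ys):
    """Ordinary least-squares fit of Y ~ aX + b; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sxy / sxx
    return a, mean_y - a * mean_x


# Hypothetical binary labels (0/1) treated as if they were a continuous target
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0, 0, 1, 0, 1, 1]
a, b = fit_least_squares(xs, ys)
new_xs = [-2.0, 8.0]
crude_probs = [a * x + b for x in new_xs]
print([round(p, 2) for p in crude_probs])  # -> [-0.4, 1.6]
```

Neither output is a valid probability. Logistic regression avoids the problem by construction, because the logistic function never leaves the interval (0, 1).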

Linear vs Logistic Regression - Understanding the Similarities

Here are a few points that highlight the common properties of both algorithms.

-

Linear regression and logistic regression are both supervised machine learning algorithms. Thus, the coefficients of both algorithms are estimated from known values of the target variable.

-

Both algorithms can be extended to problems that have more than one feature variable.

-

Both are parametric models, as they both rely on a linear combination of the feature variables to make predictions (logistic regression then passes it through the logistic function).

Linear Regression vs Logistic Regression - ML Project Ideas

It is one thing to discuss the applications of linear regression vs logistic regression, and another to work on hands-on machine learning projects that help you understand them. Below you will find a list of projects that will deepen your understanding of the two algorithms and help you tell them apart.

Machine Learning Projects on Linear Regression

1) Avocado Price Prediction Machine Learning Project:

Avocado toast is enjoyed by people of all age groups. But some people have never even tasted it because they find avocados too expensive. An analysis of avocado prices can show such people the months in which prices are usually lower.

For this project, you can use the Avocado Dataset, and use the linear regression algorithm to predict the prices of avocados based on the features mentioned in the dataset.

2) Rossmann Store Sales Machine Learning Project:

Rossmann operates 3,000+ drug stores in at least seven European countries, with about 50,000+ employees. Kaggle hosted a competition with data from these stores about six years ago, and the Rossmann stores dataset is still available and popular among beginners in data science.

For this project, you can use the dataset and the linear regression algorithm to predict store sales. As an additional exercise, try to analyze which factors play a vital role in sales fluctuations.

Machine Learning Projects on Logistic Regression

1) Credit Card Fraud Detection Data Science Project:

Credit card companies take plenty of steps to ensure their card owners do not fall prey to fraudulent transactions. Yet some customers still fall victim to them, so the companies try to identify such transactions to protect their customers.

For this project, we recommend using the Credit Card dataset by Université Libre de Bruxelles and logistic regression to identify fraudulent transactions in the dataset. If you want to try other machine learning algorithms, you could use Support Vector Machines and K-Means.

2) Predicting survival on the Titanic Data Science Project:

Titanic is one of the most famous romantic movies of all time, and millennials thoroughly enjoy it. The movie's end showed the ship's staff preparing a list of all the people who survived the sinking. It is widely believed that women and children were evacuated on lifeboats first, but was that actually the case?

Kaggle has the Titanic Dataset, which you can use to find answers to such questions. If you are new to data science, this project is a must, as it is one of the most straightforward datasets available for beginners. The task is to use the dataset to understand which factors influenced a person's survival on the ship. Additionally, you can use the logistic regression algorithm to predict each passenger's chance of survival.

If you like the above project ideas and want to see more exciting, beginner-friendly projects with source code in data science and big data, check out the ProjectPro repository of Big Data projects and Data Science projects. These solved end-to-end projects will train you on the latest tools and techniques used in the industry.

About the Author

Manika

Manika Nagpal is a versatile professional with a strong background in both Physics and Data Science. As a Senior Analyst at ProjectPro, she leverages her expertise in data science and writing to create engaging and insightful blogs that help businesses and individuals stay up-to-date with the