20+ Splunk Interview Questions and Answers For Data Experts

Ace Your Big Data Interview With These Commonly-Asked Splunk Interview Questions and Answers | ProjectPro

Over 3 billion monthly searches, 2,400+ unique apps and add-ons, and 1,000+ unique data integrations make SPLUNK ‘the big data solution for the hybrid world’!

With over 18,000 customers worldwide, Splunk is the most popular option for businesses seeking to improve productivity, profitability, competitiveness, and security. Splunk is an outstanding tool for exploring, monitoring, analyzing, and acting on your data. Market-leading, purpose-built solutions from Splunk enable integrated security and observability. These solutions help technology teams accomplish their goals efficiently and collaborate with other teams when necessary by sharing data and using shared work surfaces. From monitoring and searching through big data to generating alerts, reports, and visualizations, Splunk offers many features that help businesses achieve their goals. This shows how important it is for data engineers to be familiar with the Splunk platform if they want to succeed in the big data industry. This blog presents some of the most useful Splunk interview questions and answers that data enthusiasts should know to ace their big data interviews.

Splunk Interview Questions and Answers for Freshers

This section covers some fundamental Splunk interview questions and answers for beginner-level data engineers.

-

What are the two types of Splunk forwarders?

There are two types of forwarders in Splunk: universal forwarders and heavy forwarders. The universal forwarder is typically the best choice because it includes everything needed to forward data, uses far fewer hardware resources, and is automatically scalable. A heavy forwarder is necessary when data must be parsed before it is sent, or when data must be routed based on criteria such as the source or type of event.

-

How do you compress data in Splunk?

When sending over the TLS protocol, forwarders and HTTP Event Collectors compress data. The ratio of compression varies depending on the content and is typically between 8:1 and 12:1.
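To see why the ratio depends on content, here is a minimal Python sketch. It uses `zlib` purely as a stand-in (the answer above does not say which codec Splunk uses), and the log lines are made up for illustration. Repetitive log text compresses far better than random bytes would.

```python
import zlib

# Hypothetical sample: repetitive log lines compress well; random bytes would not.
log_lines = [
    f"2024-01-01T00:00:{i % 60:02d} host=web01 status=200 action=purchase"
    for i in range(1000)
]
raw = "\n".join(log_lines).encode("utf-8")

# zlib is only a stand-in here; it is not claimed to be Splunk's codec.
compressed = zlib.compress(raw)
ratio = len(raw) / len(compressed)
print(f"raw={len(raw)} bytes, compressed={len(compressed)} bytes, ratio={ratio:.1f}:1")
```

Swapping in less repetitive input should lower the ratio, which is the behavior the answer describes.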

-

What do you mean by the Cloud Monitoring Console (CMC)?

The Cloud Monitoring Console (CMC) is an application included with your Splunk Cloud Platform setup. It replaces the Monitoring Console used by Splunk Enterprise and lets you thoroughly monitor your Splunk Cloud Platform environment. For example, the CMC app lets you view daily data ingestion details.

-

Give a brief overview of some of Splunk's maintenance responsibilities and tasks.

Here are some of the tasks that Splunk does on behalf of its users-

-

When you submit a support ticket, Splunk enables features or changes configurations that cannot be modified without Splunk's assistance.

-

By default, Splunk-initiated Service Updates will provide you with the most recent version of the Splunk Cloud Platform and a compatible version of any Premium App subscriptions. The platform will also be optimized on your behalf, including boosting the daily ingestion limit, adding storage, enabling Premium App subscriptions, and enabling Encryption at Rest.

-

To guarantee uptime and availability, Splunk constantly monitors the state of your Splunk Cloud Platform setup. It examines several system health and performance aspects, including the ability to sign in, ingest data, use Splunk Web, and conduct searches.

Splunk Admin Interview Questions and Answers

This section lists some of the Splunk interview questions and answers related to Splunk admin concepts.

-

How do you manage authentication tokens in the Splunk Cloud Platform?

The ACS API requires a JSON Web Token (JWT) to authenticate ACS endpoint calls. These authentication tokens can be generated using the ACS API directly, enabling automated workflows incorporating ACS API requests. When you send a request to the tokens endpoint, ACS allows you to target a particular search head (including standalone and premium search heads) or search head cluster by adding the search head prefix to the stack URL.

-

What is an ephemeral authentication token?

You can create an ephemeral JWT using the ACS tokens endpoint, which supports an optional type request parameter. Ephemeral tokens are well suited to scenarios where you don't want to maintain long-lived credentials, such as running an automated CI/CD pipeline or working with contractors or other third parties who need only brief access to a deployment. Ephemeral tokens expire after 6 hours by default; you can use the expiresOn parameter to set a shorter expiration, but not one longer than 6 hours.
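The 6-hour rule can be expressed as a small client-side check to run before requesting a token. This is only a sketch: `validate_ephemeral_lifetime` is a hypothetical helper, not part of the ACS API, and it does not call any endpoint.

```python
from datetime import timedelta

# Default and maximum lifetime stated in the answer above.
MAX_EPHEMERAL_LIFETIME = timedelta(hours=6)


def validate_ephemeral_lifetime(requested=None):
    """Return the lifetime to request, or raise if it breaks the 6-hour cap.

    Hypothetical client-side helper; not part of the ACS API.
    """
    if requested is None:
        return MAX_EPHEMERAL_LIFETIME  # fall back to the default
    if requested <= timedelta(0):
        raise ValueError("lifetime must be positive")
    if requested > MAX_EPHEMERAL_LIFETIME:
        raise ValueError("ephemeral tokens cannot live longer than 6 hours")
    return requested


print(validate_ephemeral_lifetime(timedelta(hours=2)))
```

Rejecting an over-long value instead of silently clamping it is a design choice of this sketch, so that a pipeline fails loudly when it asks for more than the cap.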

-

What are the steps to configure the ACS CLI?

The initial ACS CLI configuration workflow is summarized below, along with the relevant commands for each step:

-

Add a deployment (acs config add-stack )

-

Set the current deployment (acs config use-stack )

-

Log in/create authentication token (acs login)

-

Run ACS CLI operations (acs )

-

Mention some of the useful ACS CLI commands.

Here are some of the useful ACS CLI commands-

-

acs ip-allowlist- Create, specify, and remove IP allow lists to give certain network subnets access to Splunk Cloud Platform capabilities.

-

acs outbound-port- Create, remove, list, and define outgoing ports for your deployment.

-

acs token- Create, remove, define, and list JWT authentication tokens.

Splunk Scenario-based Interview Questions

Here are a few Splunk interview questions and answers based on Splunk real-time scenarios.

-

How will you add/update 'Website URL' and 'Organization name' in Splunk Answers user profile?

Here are the steps to add/update 'Website URL' and 'Organization name' in the Splunk Answers user profile-

-

After logging in, click on your user name.

-

You will find a Preferences button on the right side.

-

You can add your details by clicking the button.

-

Consider that you have three fields: group, TimeBlock, and ticketNumber. Every ticket number has a group to which it has been assigned and a time block to which it belongs; for instance, ticket number 2 with group IT2 and assigned within 1 Hour. To chart the groups against the TimeBlocks, you need to first group the Tickets with their groups. How will you achieve that?

Use the following command:

| chart count(ticketNumber) over TimeBlock by group
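For readers who want to see the shape of the result, the following Python sketch reproduces the same pivot on a few made-up events: one row per TimeBlock, one column per group, and a ticket count in each cell. The sample data is hypothetical, and the code only imitates what the chart command produces.

```python
from collections import Counter

# Hypothetical events with the three fields from the question.
events = [
    {"ticketNumber": 1, "group": "IT1", "TimeBlock": "1 Hour"},
    {"ticketNumber": 2, "group": "IT2", "TimeBlock": "1 Hour"},
    {"ticketNumber": 3, "group": "IT2", "TimeBlock": "1 Hour"},
    {"ticketNumber": 4, "group": "IT1", "TimeBlock": "4 Hours"},
]

# Count tickets per (TimeBlock, group) pair.
counts = Counter((e["TimeBlock"], e["group"]) for e in events)

# Pivot: one row per TimeBlock, one column per group, zero when absent.
groups = sorted({e["group"] for e in events})
table = {
    block: {g: counts[(block, g)] for g in groups}
    for block in sorted({e["TimeBlock"] for e in events})
}
for block, row in table.items():
    print(block, row)
```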

-

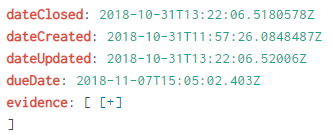

Suppose you ingest the following typical log, and you allow Splunk to assign _time to the time that this record is ingested. But this leads to issues with utilizing the date/time picker as it only looks at the _time field. What is the best way to resolve this?

Use the following command:

index="myindex"

| addinfo

| eval createdEpoch = strptime(dateCreated, "%Y-%m-%dT%T")

| where createdEpoch >= info_min_time
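The same idea in a minimal Python sketch: parse the timestamp string into epoch seconds, then keep only records at or after the lower bound of the selected time range. The records and the bound are made up, and treating the timestamps as UTC is an assumption of this sketch. In SPL, `%T` is shorthand for `%H:%M:%S`, which is spelled out here.

```python
from datetime import datetime, timezone


def to_epoch(date_created):
    # SPL's %T is shorthand for %H:%M:%S; UTC is an assumption of this sketch.
    parsed = datetime.strptime(date_created, "%Y-%m-%dT%H:%M:%S")
    return parsed.replace(tzinfo=timezone.utc).timestamp()


# Hypothetical records; dateCreated is the field name used in the search above.
records = [
    {"id": "a", "dateCreated": "2024-03-01T08:30:00"},
    {"id": "b", "dateCreated": "2024-03-05T12:00:00"},
]

# Stand-in for info_min_time, the lower time bound that addinfo attaches.
info_min_time = to_epoch("2024-03-03T00:00:00")

kept = [r for r in records if to_epoch(r["dateCreated"]) >= info_min_time]
print([r["id"] for r in kept])
```

A fuller version would probably also compare against the upper bound (`info_max_time`), which the search above does not do.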

-

Suppose you use the following search to separate data with several multi-value fields into one line at a time.

| eval reading=mvzip(multi_value_field1, multi_value_field2) | eval reading=mvzip(reading, multi_value_field3) | eval reading=mvzip(reading, multi_value_field4) | mvexpand reading | makemv reading delim="," | eval field1=mvindex(reading, 0) | eval field2=mvindex(reading, 1) | eval field3=mvindex(reading, 2) | eval field4=mvindex(reading, 3) | ...

The warning "Field 'reading' does not exist in the data" appears in the result. How will you avoid this warning?

Use the following command-

| eval myFan=mvrange(0,mvcount(field1))

| mvexpand myFan

| eval field1=mvindex(field1,myFan)

| eval field2=mvindex(field2,myFan)

| eval field3=mvindex(field3,myFan)

| eval field4=mvindex(field4,myFan)
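The index-based fan-out is easier to follow on a concrete event. This Python sketch imitates the search above: build a range of indexes from the length of field1, emit one row per index, and pick the matching value from each field. The sample event is hypothetical.

```python
# Hypothetical event whose four fields are parallel multi-value lists.
event = {
    "field1": ["a1", "a2", "a3"],
    "field2": ["b1", "b2", "b3"],
    "field3": ["c1", "c2", "c3"],
    "field4": ["d1", "d2", "d3"],
}


def fan_out(ev):
    names = ["field1", "field2", "field3", "field4"]
    rows = []
    # mvrange(0, mvcount(field1)) -> indexes 0..n-1; mvexpand -> one row per index.
    for i in range(len(ev["field1"])):
        # mvindex(fieldN, i) picks the i-th value of each field (None if missing).
        rows.append({n: ev[n][i] if i < len(ev[n]) else None for n in names})
    return rows


rows = fan_out(event)
for row in rows:
    print(row)
```

Because no intermediate zipped field is created and then split again, there is no temporary `reading` field for a later command to miss.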

Splunk Developer Interview Questions and Answers

This section presents some Splunk interview questions and answers for those pursuing the role of a Splunk Developer.

-

What do you mean by the Search Summary view?

The Search Summary view includes common elements available on other views, such as the Applications menu, the Splunk bar, the Apps bar, the Search bar, and the Time Range Picker. The How to Search and the Search History panels, located below the Search bar, are unique elements of the Search Summary view. Both Splunk Enterprise and Splunk Cloud Platform have nearly identical Search Summary views.

-

What are Lookup files? What are the steps to enable field lookups?

Lookup files hold data that changes infrequently, such as data on users, products, employees, and equipment.

To enable field lookups, follow these steps:

-

Upload the lookup file.

-

Share the uploaded file with the applications.

-

Write a lookup definition.

-

Share the lookup definition with the applications.

-

What are the steps to create and customize a chart using the timechart command and chart options?

Here are the required steps to create a custom chart-

-

Initiate a new search.

-

Select All Time as the new time frame.

-

Launch the following search-

sourcetype=access_* | timechart count(eval(action="purchase")) by productName usenull=f useother=f

-

Navigate to the Visualization tab.

-

Select a line chart as the new chart type.

-

To create the chart, format the X-Axis, Y-Axis, and Legend using the Format drop-down menu.

-

Choose Report after clicking Save As.

-

Click on Save.

-

Click View in the confirmation dialog box to view the report.

-

How will you save a search as a dashboard panel?

Here are a few steps to save a search as a dashboard panel-

-

Initiate a new search.

-

Set the time range to the previous week.

-

Launch the following search.

sourcetype=access_* status=200 action=purchase | top categoryId

-

Navigate to the Visualization tab. The display shows a line chart.

-

Convert the line chart to a pie chart.

-

After clicking Save As, choose Dashboard Panel.

-

Set up a new dashboard and dashboard panel.

-

Click on Save.

-

Click View Dashboard in the confirmation dialog box.
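The pie chart in these steps is driven by the output of `top categoryId`, which lists the most common values with a count and a percentage. A rough Python equivalent on made-up category values is shown below; the data is hypothetical and the code only approximates what the command returns.

```python
from collections import Counter

# Hypothetical categoryId values from successful purchase events.
category_ids = ["STRATEGY", "ARCADE", "STRATEGY", "TEE", "STRATEGY", "ARCADE"]

counts = Counter(category_ids)
total = sum(counts.values())

# Like top, list values by descending count with their share of all events.
rows = [
    {"categoryId": cid, "count": n, "percent": round(100 * n / total, 2)}
    for cid, n in counts.most_common(10)
]
for row in rows:
    print(row)
```

Each row corresponds to one slice of the pie, sized by its percent value.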

Splunk Interview Questions and Answers for Experienced

Here are some important Splunk interview questions and answers for experienced data professionals looking to move up the career ladder.

-

Mention some of the features of Splunk Infrastructure Monitoring.

Some of the features of Splunk Infrastructure Monitoring include-

-

Real-Time Streaming Analytics- Using a streaming pub/sub bus, Splunk Infrastructure Monitoring applies analytics to real-time metrics.

-

Real-Time Interactive Visualizations- You can engage with all of your data in real-time using high-definition, user-friendly dashboards.

-

Alerting Built for Action- Reduce mean time to detect (MTTD) significantly by being proactive and aware of what matters.

-

Best-in-class Kubernetes Monitoring- The most cutting-edge approach to managing and monitoring Kubernetes deployments.

-

Why do we use Ingest Actions (IA)?

There are several reasons why sometimes you might want to index only some of the data supplied to your Splunk instance. This is often done to reduce noise from specific log sources (to make search time exploration a bit easier) or as a cost-saving technique (through storage or ingest license costs). With Ingest Actions (IA), sampling may be set up with a user interface that makes it easier to create the sampling logic and deploy the changes so that they are immediately effective at the tier you want to sample.
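Conceptually, sampling means thinning out one noisy source while leaving everything else untouched. The Python sketch below illustrates that logic only: Ingest Actions is configured through its user interface, and `sample_events`, the sourcetype names, and the 1-in-5 rate are all hypothetical.

```python
def sample_events(events, noisy_sourcetype, keep_every):
    """Keep all events except those from noisy_sourcetype, which are thinned
    to one in keep_every. Hypothetical helper for illustration only."""
    kept, seen = [], 0
    for ev in events:
        if ev["sourcetype"] != noisy_sourcetype:
            kept.append(ev)  # other sources pass through unchanged
            continue
        if seen % keep_every == 0:
            kept.append(ev)  # keep the 1st, (keep_every + 1)th, ... noisy event
        seen += 1
    return kept


events = [{"sourcetype": "debug", "n": i} for i in range(10)]
events.append({"sourcetype": "audit", "n": 10})
kept = sample_events(events, "debug", keep_every=5)
print(len(kept))
```

A deterministic every-Nth rule is used here so the result is reproducible; random sampling would be an equally valid choice.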

-

What do you mean by the Log Observer?

You can run codeless queries on logs with Log Observer to find the origin of faults in your systems. Infinite Logging S3 buckets may be used to store data for future use, and you can also extract fields from logs to create log processing rules and modify your data as it comes in.

-

What is the importance of the Splunk Data Stream Processor?

The Splunk Data Stream Processor is a data stream processing system that gathers data in real-time, processes it, and delivers it to one or more destinations of your choice at least once. You can enhance, manipulate, and analyze your data as a Splunk Data Stream Processor user throughout the processing stage. The Splunk Data Stream Processor can also be used to create unique data pipelines that transform data and distribute it across a number of data sources and destinations, even if those sources and destinations support various data formats.

Splunk Scenario-Based Interview Questions and Answers

-

You are given a Splunk search query that takes a long time to complete. How will you optimize this query?

The first step is to identify any unnecessary fields, terms, or subsearches that can be removed. The next step is to check whether the search is running against the most suitable index and whether summary indexing would help. Finally, look for opportunities to parallelize the search.

-

Imagine you have a large dataset and are required to perform a complex search that requires aggregating and analyzing data across multiple fields. How would you approach this task?

You can start by breaking the search down into smaller, manageable steps and using subsearches or summary indexing to aggregate the data. Then use Splunk's statistical and visualization tools to analyze the data and create charts or dashboards that help visualize the insights. Finally, optimize the search for performance by limiting the search space, parallelizing the search, and using efficient search commands.

Practicing these interview questions is not enough if you want to master Splunk as a big data tool. Get hands-on experience with some advanced-level Splunk projects to stay a step ahead of your competitors. ProjectPro offers various industry-level Big Data and Data Science projects to help you master trending big data tools and technologies.

About the Author

Daivi

Daivi is a highly skilled Technical Content Analyst with over a year of experience at ProjectPro. She is passionate about exploring various technology domains and enjoys staying up-to-date with industry trends and developments. Daivi is known for her excellent research skills and ability to distill