Innovation in Big Data Technologies Aids Hadoop Adoption

Innovations in big data technologies have enabled enterprise adoption of Hadoop. Big data tools like Apache Pig, Hive and Spark make implementing Hadoop much easier.

Scott Gnau, CTO of Hadoop distribution vendor Hortonworks, said:

"It doesn't matter who you are — cluster operator, security administrator, data analyst — everyone wants Hadoop and related big data technologies to be straightforward. Things like a single integrated developer experience or a reduced number of settings or profiles will start to appear across the board in 2016."

Apache Hadoop is gaining a mainstream presence among organizations, not just because it is cheap but because it drives innovation. Innovations in big data technologies and Hadoop, i.e. the Hadoop big data tools, let you pick the right ingredients from the data store, organise them, and mix them. These tools are not just for professional chefs (hard-core programmers); diners (data warehousing professionals, ETL professionals, etc.) can use them too. For an organization to set up a big data application and configure its options, it has to use these innovations in the right combinations. That's how Hadoop will make a delicious enterprise main course for a business.

Earlier, implementing Hadoop required skilled programmers, which made Hadoop adoption cumbersome and costly for many enterprises. Now, thanks to a number of open source big data technology innovations, Hadoop implementation has become much more affordable. Curious to know about these Hadoop innovations? Here's a look at the most prominent big data tools, based on innovations in the Hadoop ecosystem, that operate collectively as “Hadoop” in the big data world.

Sparkling new innovations are easy to find in the big data world. With the need to simplify big data technologies, various open source innovations have been developed on top of Hadoop to handle mission-critical workloads. As Hadoop gained a mainstream presence, including in processing data streams in real time, several promising innovations popped up over the years, making the technology a collection of 48+ components.

A lot of innovations have been tagged along with the tiny toy elephant, and they work together if an organization knows how to make these big data tools work. Each tool within the Hadoop ecosystem is a technology developed by a group of contributors in the open source community. These tools are driving innovation in the industry, whilst many organizations are still figuring out how the complete big data and Hadoop landscape can be used to derive maximum business value at minimal cost.

Each of these innovations on Hadoop is packaged either as a cloud service or into a distribution, and it is up to the organization to figure out the best way to integrate the Hadoop components that solve the business use case at hand. For big data projects that have a limited scope and are monitored by skilled teams, this is not a concern. However, as big data projects grow within an organization, there is a need to operationalize and maintain these systems effectively. Following are some of the innovations in the Hadoop stack that help tackle data complexity, multi-tenancy, scalability, security and management issues quickly:

Apache Pig

We have seen incredible strides in processing big data over the past few years. Today Facebook, LinkedIn, Yahoo and many other technology giants run MapReduce jobs on thousands of machines, as MapReduce makes parallelizable operations extremely scalable. Because MapReduce runs on low-cost commodity hardware, it reduces the overall cost of a computing cluster, but coding MapReduce jobs is not easy and requires knowledge of Java programming. Some of the challenges with using MapReduce for processing data in Hadoop are:

- Hadoop developers have to write custom Java-based MapReduce code for operations like joins, filters and projections.

- It is difficult to manage n-stage jobs with Hadoop MapReduce.

- Many professionals from a non-programming background cannot easily grasp the “map” and “reduce” paradigm.
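
To make the first challenge concrete, here is a minimal pure-Python sketch (not Hadoop's Java API) of a reduce-side join written the MapReduce way: mappers tag each record with its source, a simulated shuffle groups values by key, and the reducer pairs up the tagged records. The `users` and `clicks` datasets and all function names are hypothetical.

```python
from collections import defaultdict
from itertools import product

# Hypothetical inputs: (user_id, name) and (user_id, url) records.
users = [(1, "alice"), (2, "bob")]
clicks = [(1, "/home"), (1, "/cart"), (2, "/home"), (3, "/faq")]

def map_users(record):
    user_id, name = record
    # Tag each value with its source so the reducer can tell the sides apart.
    yield user_id, ("U", name)

def map_clicks(record):
    user_id, url = record
    yield user_id, ("C", url)

def shuffle(mapped_pairs):
    # Simulates Hadoop's shuffle/sort phase: group all values by key.
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))

def reduce_join(key, values):
    names = [v for tag, v in values if tag == "U"]
    urls = [v for tag, v in values if tag == "C"]
    # Inner join: emit every (name, url) combination that shares this key.
    for name, url in product(names, urls):
        yield key, name, url

def run_join(users, clicks):
    mapped = [pair for r in users for pair in map_users(r)]
    mapped += [pair for r in clicks for pair in map_clicks(r)]
    return [row for key, vals in shuffle(mapped).items()
            for row in reduce_join(key, vals)]

print(run_join(users, clicks))
```

In Pig Latin, the equivalent logic is roughly a single `JOIN users BY user_id, clicks BY user_id;` statement, which is the gap in development effort that motivated Pig.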

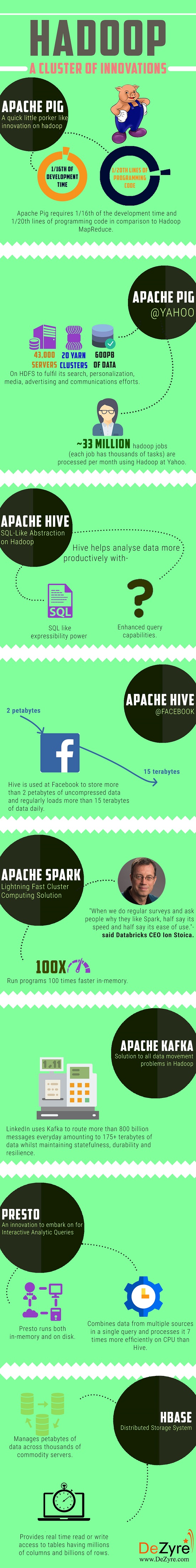

These challenges opened the road to an efficient high-level language for Hadoop, Pig Latin, which was developed at Yahoo. Apache Pig, a quick little porker of an innovation on Hadoop, requires 1/16th of the development time and 1/20th of the lines of code compared to Hadoop MapReduce. Yahoo runs 43,000 servers in 20 YARN clusters, with 600PB of data on HDFS, to fulfil its search, personalization, media, advertising and communications efforts. Approximately 33 million Hadoop jobs (each with thousands of tasks) are processed per month at Yahoo. Pig dominates the big data infrastructure at Yahoo, as 60% of the processing happens through Apache Pig scripts.

In the data preparation phase (popularly known as the ETL phase), where raw data is cleaned to produce datasets that users can consume for analysis, Apache Pig together with a workflow system like Oozie can be a good choice, because users in the data preparation phase are usually researchers, data specialists or engineers.
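
A Pig script describes a dataflow of named relations, each derived from the previous one: LOAD, then FILTER, then FOREACH ... GENERATE, then GROUP. The sketch below mirrors that shape for a small data-preparation job using plain Python generators. The raw log format and field names are hypothetical, and the Pig Latin keywords appear only in comments for orientation.

```python
from collections import defaultdict

# Hypothetical raw log lines: day,user,url,http_status
RAW = [
    "2016-01-04,alice,/home,200",
    "2016-01-04,bob,/cart,500",
    "malformed line",
    "2016-01-05,alice,/cart,200",
]

def load(lines):
    # Roughly like LOAD ... USING PigStorage(','): split lines into fields.
    for line in lines:
        yield line.split(",")

def clean(records):
    # Like FILTER: drop malformed rows and failed requests.
    for rec in records:
        if len(rec) == 4 and rec[3] == "200":
            yield rec

def project(records):
    # Like FOREACH ... GENERATE: keep only the fields downstream users need.
    for day, user, url, _status in records:
        yield {"day": day, "user": user, "url": url}

def group_by_user(records):
    # Like GROUP ... BY user followed by COUNT.
    counts = defaultdict(int)
    for rec in records:
        counts[rec["user"]] += 1
    return dict(counts)

print(group_by_user(project(clean(load(RAW)))))
```

Because each step is a generator, records stream through the pipeline one at a time, loosely echoing how Pig compiles a script into a chain of stages rather than materializing every intermediate relation by hand.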

Apache Hive

Using Hadoop was not easy for end users, particularly for people who were not from a programming background. To perform simple tasks like computing an average or a count, users had to write complex Java-based MapReduce programs. Hadoop developers spent hours or even days writing MapReduce programs for basic analysis tasks, as Hadoop lacked the expressive power of SQL.

The team at Facebook recognized this roadblock, which led to an open source innovation, Apache Hive, in 2008. Since then, Hive has been used extensively by Hadoop users for their data processing needs. Apache Hive helps analyse data more productively with enhanced query capabilities. Today, Hive is used at Facebook to store more than 2 petabytes of uncompressed data, and it regularly loads more than 15 terabytes of data daily. Hive is used extensively at Facebook for BI, machine learning and simple summarization jobs.

Apache Hive works well during the data presentation phase, as it provides a SQL-like abstraction: the data warehouse stores the results, and users come and select the appropriate ones from the shelves. Users of Apache Hive are usually decision makers, analysts or engineers using the data for their systems.
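
The average and count that once needed a custom MapReduce program become a single declarative query. The sketch below uses Python's built-in `sqlite3` module as a local stand-in for HiveQL, whose syntax is very similar for a query this simple. The `page_views` table and its columns are hypothetical.

```python
import sqlite3

def summarize(rows):
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE page_views (country TEXT, load_ms INTEGER)")
    conn.executemany("INSERT INTO page_views VALUES (?, ?)", rows)
    # One declarative statement replaces a hand-written mapper and reducer.
    result = conn.execute(
        "SELECT country, COUNT(*), AVG(load_ms) "
        "FROM page_views GROUP BY country ORDER BY country"
    ).fetchall()
    conn.close()
    return result

rows = [("IN", 120), ("US", 80), ("IN", 180), ("US", 100)]
print(summarize(rows))  # [('IN', 2, 150.0), ('US', 2, 90.0)]
```

In Hive, a query like this would be compiled into distributed jobs over data in HDFS; the point here is only how much shorter the declarative form is than the equivalent Java MapReduce code.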

Apache Spark

The need to process big data at higher velocities than Hadoop MapReduce allows has paved the path for myriad open source innovations, which have moved from incubator status to top-level projects at the Apache Software Foundation. Apache Spark, the new data darling both in the headlines and in real-world enterprise adoption, is at the forefront of innovative developments on Hadoop in 2016: it was developed in response to the limitations of Hadoop MapReduce, to process large volumes of data faster.

For people who run Hadoop 2.0: would you like your programs to run up to 10 times faster on disk, or up to 100 times faster in memory, than with MapReduce? Most would pick 100 times faster in memory, and that is the kind of speedup the lightning-fast cluster computing framework Apache Spark is built to deliver.

"When we do regular surveys and ask people why they like Spark, half say it's speed and half say it's ease of use," said Databricks CEO Ion Stoica.

Apache Spark is for:

- Users who want to access and process all their data from the existing Hadoop environment at a faster pace.

- Users who want to write applications quickly in Scala, Python, R or Java, building parallel applications that make the most of a distributed environment.

- Users who want to combine SQL, machine learning, streaming, complex analytics and graph processing all in the same application.
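
Much of Spark's ease of use comes from chaining high-level transformations such as `flatMap`, `map` and `reduceByKey` over distributed datasets. The sketch below imitates that style with plain Python on a single machine. The `MiniRDD` class is hypothetical and is not PySpark, which would need a local Spark installation or a cluster.

```python
from collections import defaultdict
from functools import reduce

class MiniRDD:
    """Hypothetical single-process imitation of a few RDD-style operations."""

    def __init__(self, items):
        self._items = list(items)  # kept in memory, like a cached dataset

    def flat_map(self, fn):
        return MiniRDD(x for item in self._items for x in fn(item))

    def map(self, fn):
        return MiniRDD(fn(item) for item in self._items)

    def reduce_by_key(self, fn):
        groups = defaultdict(list)
        for key, value in self._items:
            groups[key].append(value)
        return MiniRDD((k, reduce(fn, vs)) for k, vs in groups.items())

    def collect(self):
        return list(self._items)

lines = MiniRDD(["spark is fast", "spark is easy"])
counts = (lines.flat_map(str.split)
               .map(lambda word: (word, 1))
               .reduce_by_key(lambda a, b: a + b))
print(sorted(counts.collect()))
```

Unlike real Spark, these operations run eagerly on one machine; Spark records transformations lazily and only executes them, in parallel across the cluster, when an action such as `collect` is called.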

Apache Kafka

With increasing workloads in the mobile, gaming and IoT sectors, Hadoop developers have been on the hunt for an innovative technology that can help them consume data easily in a reliable and comprehensible manner. Spark's kissing cousin Apache Kafka was developed at LinkedIn to solve data movement problems among Hadoop clusters. LinkedIn uses Kafka to route more than 800 billion messages every day, amounting to 175+ terabytes of data, whilst maintaining statefulness, durability and resilience.

As the need for a hyper-fast, partitioned, distributed and replicated commit log service grows, organizations are figuring out how to use Apache Kafka alongside Hadoop for operational metrics, stream processing, messaging, log aggregation and website activity tracking.
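
"Partitioned commit log" is Kafka's core abstraction. The minimal single-process sketch below shows how messages with the same key land in the same partition and receive increasing offsets, while consumers read sequentially from an offset they track themselves. It assumes a simple hash-of-key partitioner: Kafka's default Java producer uses murmur2 hashing, and `zlib.crc32` is swapped in here only to stay dependency-free. Distribution and replication are left out, and `PartitionedLog` is hypothetical.

```python
import zlib

class PartitionedLog:
    """Hypothetical in-memory model of a partitioned, append-only log."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Same key -> same partition, which preserves per-key ordering.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def append(self, key, value):
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        offset = len(self.partitions[p]) - 1  # offsets grow within a partition
        return p, offset

    def read(self, partition, from_offset):
        # Consumers track their own offset and read forward from it.
        return self.partitions[partition][from_offset:]

log = PartitionedLog(num_partitions=3)
p1, o1 = log.append("user-42", "login")
p2, o2 = log.append("user-42", "click")
print(p1 == p2, o2 == o1 + 1)  # True True
print(log.read(p1, o1))
```

Keeping ordering per partition rather than globally is what lets this design scale: partitions can be spread across brokers and consumed in parallel.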

Presto

For Hadoop developers, data analysts, data scientists and data engineers who crunch data to derive valuable business insights, query performance against the data warehouse is critical. To address this concern, engineers at Facebook developed Presto, which helped them run more queries and get results faster, improving their productivity.

Presto is an open source distributed SQL query engine in the Hadoop ecosystem for running interactive analytic queries on petabytes of data. Presto can run simple queries on Hadoop in just a few milliseconds, with complex queries taking only a few minutes. Its ability to combine data from multiple sources in a single query, and to process it 7 times more efficiently on CPU than Hive, makes Presto an innovation worth embracing in the Hadoop arena.
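
Combining data from multiple sources in a single query can be illustrated, very loosely, with SQLite's `ATTACH DATABASE`, which lets one statement join tables that live in separate databases. This is a stand-in, not Presto: Presto reaches each source through a connector and addresses tables as `catalog.schema.table`. The database and table names below are hypothetical.

```python
import sqlite3

def cross_source_report():
    conn = sqlite3.connect(":memory:")                 # plays "source A"
    conn.execute("ATTACH DATABASE ':memory:' AS crm")  # plays "source B"
    conn.execute("CREATE TABLE main.orders (customer_id INTEGER, amount REAL)")
    conn.execute("CREATE TABLE crm.customers (id INTEGER, region TEXT)")
    conn.executemany("INSERT INTO main.orders VALUES (?, ?)",
                     [(1, 30.0), (2, 45.5), (1, 20.0)])
    conn.executemany("INSERT INTO crm.customers VALUES (?, ?)",
                     [(1, "EMEA"), (2, "APAC")])
    # A single query joins data held in two separate databases.
    rows = conn.execute(
        "SELECT c.region, SUM(o.amount) "
        "FROM main.orders AS o JOIN crm.customers AS c ON o.customer_id = c.id "
        "GROUP BY c.region ORDER BY c.region"
    ).fetchall()
    conn.close()
    return rows

print(cross_source_report())  # [('APAC', 45.5), ('EMEA', 50.0)]
```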

HBase

To provide timely search results across the Internet, Google has to cache the web. This paved the way for Bigtable, a distributed storage system for managing structured data that can search that huge cache quickly and scale to petabytes of data across thousands of commodity servers. Mike Cafarella then released open source code for a Bigtable implementation, which became popularly known as the Hadoop Database (HBase). HBase is a NoSQL database on top of Hadoop that provides real-time read and write access to very large tables with billions of rows and millions of columns.
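
HBase's data model, inherited from Bigtable, is a sparse, sorted map: row key, then column (family:qualifier), then timestamp, then value, where only cells that are actually written take up space. The in-memory sketch below models that structure. `SparseTable` and the sample row keys are hypothetical; real applications go through the HBase client API instead.

```python
import bisect

class SparseTable:
    """Hypothetical model of a Bigtable/HBase-style sparse, versioned table."""

    def __init__(self):
        self._rows = {}          # row key -> {column -> [(ts, value), ...]}
        self._sorted_keys = []   # row keys kept sorted to support range scans

    def put(self, row, column, value, ts):
        if row not in self._rows:
            self._rows[row] = {}
            bisect.insort(self._sorted_keys, row)
        versions = self._rows[row].setdefault(column, [])
        versions.append((ts, value))
        versions.sort(reverse=True)  # newest version first

    def get(self, row, column):
        # Latest version of the cell, or None if it was never written.
        versions = self._rows.get(row, {}).get(column)
        return versions[0][1] if versions else None

    def scan(self, start, stop):
        # Sorted row keys make a range scan a slice; stop is exclusive.
        lo = bisect.bisect_left(self._sorted_keys, start)
        hi = bisect.bisect_left(self._sorted_keys, stop)
        return self._sorted_keys[lo:hi]

t = SparseTable()
t.put("user#001", "info:name", "alice", ts=1)
t.put("user#001", "info:name", "alicia", ts=2)  # newer version, same cell
t.put("user#002", "stats:visits", "7", ts=1)    # rows can use different columns
print(t.get("user#001", "info:name"))  # alicia
print(t.get("user#002", "info:name"))  # None
print(t.scan("user#000", "user#002"))  # ['user#001']
```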

HDFS, MapReduce and YARN (the core modules of Apache Hadoop), together with the top-level Apache projects HBase, Hive, Pig, Spark and ZooKeeper, are supported by all the popular Hadoop distributions: Cloudera, MapR, Amazon, Hortonworks, Pivotal and IBM. These are just some of the top-level innovations on Hadoop; there are many others around it, like Accumulo, Solr, Ambari, Mahout, Parquet, Sqoop, Flume, Cascading, Ganglia, Impala, Knox, Tez, Drill, Ranger, Sentry, and the list goes on.

Apart from the top-level Apache projects, social media giants like Twitter, LinkedIn and Facebook have consistently taken the lead with big data technology innovations:

- Twitter has more than 300 petabytes of data and faced a serious problem with namespaces. Managing complex namespace configurations was problematic at Twitter, which led to the development of TwitterViewFS, an extension of Hadoop's ViewFS. TwitterViewFS presents all user, temp and log files under a single virtual namespace that avoids mapping overheads.

- Dr. Elephant, an open source tool developed at LinkedIn, is a performance tuning and monitoring tool that helps improve cluster efficiency and developer productivity. Dr. Elephant analyses Hadoop and Spark jobs through rule-based heuristics that surface insights on a job's performance and suggest how the job can be tuned to run more efficiently.
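
The namespace problem Twitter tackled builds on Hadoop's ViewFS, which works as a client-side mount table: each path prefix in one logical namespace is mapped to a directory on a particular HDFS cluster. The sketch below shows longest-prefix resolution under that assumption. The mount points and `hdfs://` URIs are hypothetical, and this is not Twitter's implementation.

```python
def resolve(path, mount_table):
    """Map a logical path to a physical URI using the longest matching mount."""
    best = None
    for mount_point in mount_table:
        prefix = mount_point.rstrip("/") + "/"
        if path == mount_point or path.startswith(prefix):
            if best is None or len(mount_point) > len(best):
                best = mount_point
    if best is None:
        raise KeyError(f"no mount point for {path}")
    return mount_table[best] + path[len(best):]

# Hypothetical mount table: one logical namespace spanning several clusters.
MOUNTS = {
    "/user": "hdfs://nn-users.example:8020/user",
    "/tmp": "hdfs://nn-scratch.example:8020/tmp",
    "/logs": "hdfs://nn-logs.example:8020/logs",
    "/logs/archive": "hdfs://nn-cold.example:8020/archive",
}

print(resolve("/user/alice/data", MOUNTS))
print(resolve("/logs/archive/2015/app.log", MOUNTS))
```

The longest match wins, so `/logs/archive/...` goes to the cold-storage cluster even though `/logs` also matches. Users see one namespace while the mount table decides which cluster serves each path.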

Today, Hadoop can be referred to as a cluster of innovations rather than just HDFS and MapReduce. It is interesting to see the go-to big data technology extend beyond its foundation and spawn several open source projects that are set to enhance or replace core Hadoop components. Innovations on Hadoop are growing and evolving at a rapid pace, and dedication and expertise can help organizations make the most of Hadoop adoption in the enterprise. The social media giants are proving that, with great engineering effort and vision, the pace of innovation on Hadoop will only increase with time.

As big data gets bigger every day, with data flowing in from IoT and mobile devices, and as organizations look for novel ways to leverage it, enterprises over the next 5 years will rely less on Hadoop and MapReduce and more on the innovative tools around Hadoop, like Pig, Hive, Spark, Presto and Kafka, to meet the scaling big data demands of the future.

What next? We will just have to wait and watch.

About the Author

ProjectPro
