Monte Carlo Announces Support for Kafka and Vector Databases at IMPACT 2023

Monte Carlo’s third annual IMPACT: the Data Observability Summit just wrapped, and impact was certainly the name of the game.

IMPACT 2023 brought together some of the best and brightest in data and AI for one jam-packed day of content, including insights from industry luminaries like Tristan Handy of dbt Labs and Nga Phan of Salesforce AI.

But premium content wasn’t the only thing on the menu this year. Amid the hustle and bustle of speakers, Monte Carlo dropped a couple of surprises as well.

From new cost optimization tools to support for vector databases and the AI stack, here’s everything that was announced at IMPACT 2023.

Kafka and Vector Database support

According to Databricks’ State of Data and AI report, the number of companies using SaaS LLM APIs has grown more than 1,300% since November 2022, with a nearly 411% increase in the number of AI models put into production during that same period.

And yet, there’s no question that the rollout of AI en masse hasn’t been without its share of hiccups.

From hallucinations to regulatory and IP snafus, these problems are a reminder that AI is only as good as the data that powers it.

In fact, according to a 2023 Wakefield Research survey, data and AI teams spent double the amount of time and resources on data downtime year-over-year, owing to an increase in data volume, pipeline complexity, and organization-wide data use cases, with time-to-resolution up 166% on average.

As AI continues its meteoric rise, Monte Carlo is committed to delivering reliable data at scale for data and AI teams. And within the complex infrastructure of AI, two critical components form the backbone of enterprise-ready AI: unstructured streaming data and vector databases.

“To unlock the potential of data and AI, especially large language models (LLMs), teams need a way to monitor, alert to, and resolve data quality issues in both real-time streaming pipelines powered by Apache Kafka and vector databases powered by tools like Pinecone and Weaviate,” said Lior Gavish, co-founder and CTO of Monte Carlo.

To facilitate scalable trust in AI products, we decided to bring data observability to both.

Apache Kafka

Apache Kafka is undoubtedly one of the most popular open-source stream-processing platforms, delivering high-throughput, low-latency, real-time data feeds for cloud-based data and AI products.

Monte Carlo’s new Apache Kafka integration through Confluent Cloud will enable data teams to ensure that the historically difficult-to-monitor real-time data feeding their AI and ML models is both accurate and reliable for business use cases.

Vector Databases

Equally critical to delivering quality real-time data is the ability to reliably query the vector databases used for retrieval-augmented generation (RAG) and fine-tuning pipelines.

To support end-to-end data quality coverage for critical AI products, Monte Carlo also announced that it would become the first data observability platform to support trust and reliability for vector databases like Pinecone.

Together, vector database support and real-time streaming support mark two of the first and most intentional steps toward facilitating trust in the world of enterprise-ready AI.

“Our new integration with Confluent Cloud gives data teams confidence in the reliability of the real-time data streams powering these critical services and applications, from event processing to messaging. Simultaneously, our forthcoming integrations with major vector database providers will help teams proactively monitor and alert to issues in their LLM applications.”

Expanding end-to-end coverage across batch, streaming, and RAG pipelines enables organizations to realize the full potential of their AI initiatives with trusted, high-quality data.

Both integrations will be available in early 2024. Keep an eye on the Monte Carlo blog for updates!

New tools to operationalize data observability at scale

As more organizations look to incorporate generative AI for both internal and external use, the need to build and refine the underlying data pipelines becomes all the more pressing.

To help companies deliver trustworthy data products at scale, Monte Carlo also announced the release of two new operational features to help data teams easily track the health of their most critical assets.

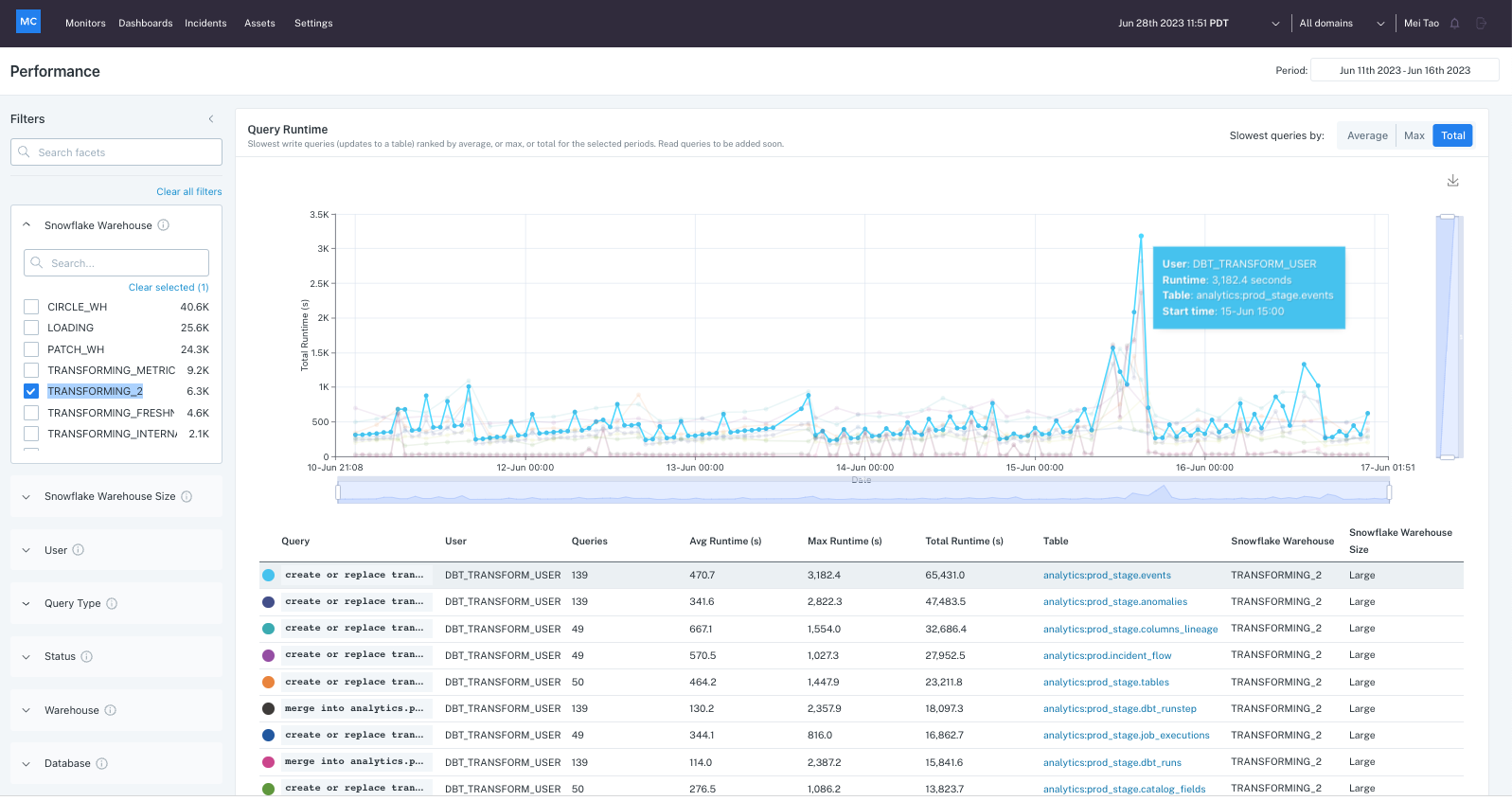

- Performance Monitoring – Efficiency and cost monitoring are critical considerations for everything from data product design to adoption. Our new Performance dashboard allows Monte Carlo users to optimize cost and reduce inefficiencies by detecting and resolving slow-running data and AI pipelines at a glance. With this new feature, users can easily filter queries by DAGs, users, dbt models, warehouses, or datasets; drill down to spot issues and trends; and determine how performance was impacted by changes in code, data, and even warehouse configuration.

- Data Product Dashboard – Speed is critical to maintaining reliable data products. With the new Data Product Dashboard, Monte Carlo users can easily define data products to monitor, track product health, and even report on reliability directly to business stakeholders through Slack, Teams, and more. And if an incident is detected, users can quickly identify which data assets feed a particular data or AI product, and unify detection and resolution for relevant data incidents in a single view.

With the ability to track key reliability SLAs at the individual data product level, Monte Carlo is the first data observability platform to expand reliability beyond the pipeline to enable data observability at the organizational, domain, and data product levels. And we couldn’t be more excited to share it with you!

Learn more about Performance Monitoring and Data Product Dashboard via our docs.

The future of data observability at Monte Carlo

When it comes to data and AI, change is truly the only constant.

As the data landscape evolves and AI enters to take its seat at the table, we’re committed to providing the tools and resources you need to deliver reliable data and AI products at any scale.

We can’t say we’ll always know what’s right around the corner. But whatever it is, we’ll be ready to evolve right along with it. Bad data never sleeps, so we don’t either.
