A Comprehensive Guide to Ensemble Learning Methods

Data Science often borrows from human behavior: we have designed machine learning to imitate how we learn as humans. Think of a model in Data Science as one way to learn. Every person has biases when making a choice, and the way one person lives their life cannot be scaled across the human race. When many people share their experiences and learnings, however, it becomes possible to develop a more generalized approach. It is the same with machine learning models in Data Science. One model can do a good job on a machine learning problem, but a set of models will often do a better job, because the combined model generalizes better, with lower bias, lower variance, or both.

Let's say you are trying to get fit by controlling your diet. Your mother might suggest eating smaller portions while making sure that you still eat breakfast, lunch, and dinner. Some friends might say that intermittent fasting is the best way to go about it. Another friend might suggest a keto diet to optimize the macronutrients your body is getting. In theory, each of these is a possible model that might work for your use case. The question is, are you going to trust just one model? A better approach might be some combination of these suggestions that works best for you. That is the concept of ensemble learning.

Note that the models in an ensemble do not all need to be the best individual ML models. What matters is that the combination works for the problem we are trying to solve.

Some of the best models in Kaggle data science competitions are ensemble models. Read this blog all the way through to understand the A-Z of ensemble machine learning models. If you are getting started on your journey to becoming a data scientist or a machine learning engineer, explore ProjectPro's real-world data science and machine learning projects to perfect the tools that data scientists and machine learning engineers use in the industry.

What is Ensemble Learning?

Ensemble Learning is the process where multiple machine learning models are combined to get better results. The concepts that we will discuss are easy to grasp. From the introduction, we have an intuition about Ensemble models. The core idea is that the result obtained from a combination of models can be more accurate than any individual machine learning model in the group.

Let's go back to the previous example: what is the best way to get fit by controlling our diet? Essentially, all of the suggested methods are ways to optimize calorie intake. Each takes a different yet effective route to good results, and combining them in the right way helps you get the most out of the entire exercise.

Imagine a model to be a person who analyzes patterns and suggests what you can do next. In our example, the advice from each person, based on their previous experience, can be considered a model that indicates the best way to get fit. Models are also called learners. Each learner on its own is considered "weak". However, when multiple weak learners are combined in some way, they form a robust model, which we will call an ensemble model. The models should be as diverse as possible, since each model might perform well on different portions of the data. When diverse models are combined, they can offset each other's errors, provided those errors are not strongly correlated. This idea of strength in multiple models over a single one is the premise of ensemble models.
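To make "weak learners combined form a robust model" concrete, here is a small sketch that computes how often a majority vote is correct when each of n classifiers is right with probability p. It assumes the classifiers' errors are completely independent, which real models rarely achieve, so treat the numbers as an upper bound on the benefit rather than a prediction. The function name is illustrative.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent classifiers,
    each correct with probability p, votes for the correct class.

    Assumes n is odd (so there are no ties) and that the classifiers'
    errors are fully independent.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of classifiers to avoid ties")
    k_min = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


for n in (1, 5, 25):
    # Each classifier is only 60% accurate on its own.
    print(n, round(majority_vote_accuracy(0.6, n), 3))  # n=5 gives about 0.683
```

With p above 0.5, adding more independent voters pushes the ensemble's accuracy up; when the models make correlated mistakes, the gain shrinks, which is why diversity matters.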

Why use Ensemble Learning?

In short, we use ensemble learning models because they tend to give better results. It's unfair to put the pressure of getting the best possible result on a single model. Even if the model provides good results on a particular dataset, we might see varied performance on other datasets.

Go back to college. You had some great teachers and some who were not. Now, let's say that you are trying to maximize your marks in discrete mathematics. There are three teachers: one who teaches best and has the best video lectures, one who hints at the questions that will come in exams, and one who gives the best notes. Choosing any one of these teachers has advantages and disadvantages. The best approach is to mix what all three teachers offer to maximize your marks in discrete mathematics. Ensemble models work the same way. You now understand why we use ensemble learning and why it is preferred over individual models in many scenarios.

All right, it's been fun understanding the ins and outs of ensemble learning. Now, let's get to business. Even though I am not a fan of learning data science using jargon, it is the language the world prefers and understands.

Ensemble Methods in Machine Learning

Let's go over the different types of ensemble learning methods in Python. First, we will go over the fundamental methods, followed by the more intricate ensemble learning techniques used across machine learning. Let's begin!

Fundamental Ensemble Methods in Machine Learning

The sections below cover the max voting, averaging, and weighted average methods. These are fundamental yet extremely powerful techniques. It's fun to read about Data Science in theory, but the best way to learn it is by doing it. To help with this, the iPython notebook that accompanies this blog is available for download so that you can work alongside us and see what happens.

1) Max Voting

Intuition

How does voting work? Voting works because the opinion of the majority carries more weight than the vote of an individual. Max voting is used for classification problems, where the models vote among discrete options (classes). Each machine learning model casts a vote, and the option with the most votes is the ensemble's decision.

Business Understanding

You decide to go out with your friends, and any cuisine and restaurant can be chosen. Everyone votes, and the option with the most votes is where you go in the end. This democratic idea is the premise of the max voting ensemble learning method. Simple, yet effective.

Ensemble Learning Method Python - Max Voting Implementation

There are two types of max voting – hard and soft. Let's go through examples of both hard and soft.

Imagine we have three classifiers, 1, 2, and 3, and two classes A and B that we are trying to predict. After training the classifier models, we are trying to predict the class of a single point.

- Hard Voting

Predictions:

Classifier 1 predicts Class A

Classifier 2 predicts Class B

Classifier 3 predicts Class B

Two of the three classifiers predict Class B.

Thus, Class B is the ensemble decision.

- Soft Voting

The use case is identical to the example above, but each classifier's choice is now expressed as a probability. Since the problem is binary, only the probability of Class A is shown; the probability of Class B is 1 minus that value.

Predictions:

Classifier 1 predicts Class A with the probability of 99%

Classifier 2 predicts Class A with the probability of 49%

Classifier 3 predicts Class A with the probability of 49%

The average probability of belonging to Class A is (99 + 49 + 49) / 3 = 65.67%.

Thus, Class A is the ensemble decision.

In the above scenario, hard voting and soft voting would give different decisions. Soft voting considers how confident each voter is, rather than just a binary input from the voter.
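The worked example can be checked in a few lines of plain Python. This sketch encodes each classifier's probability for Class A from the example, then applies hard voting (one vote per classifier) and soft voting (average the probabilities). The 0.5 threshold used to turn a probability into a vote is an assumption for this binary case.

```python
from collections import Counter

# Probability of Class A from each classifier (the worked example above).
# In a binary problem, P(Class B) = 1 - P(Class A).
prob_a = {"Classifier 1": 0.99, "Classifier 2": 0.49, "Classifier 3": 0.49}


def hard_vote(probs_a):
    # Each classifier casts one vote for the class it considers more likely.
    votes = ["A" if p > 0.5 else "B" for p in probs_a.values()]
    return Counter(votes).most_common(1)[0][0]


def soft_vote(probs_a):
    # Average the predicted probabilities, then pick the more likely class.
    mean_a = sum(probs_a.values()) / len(probs_a)
    return ("A" if mean_a > 0.5 else "B"), mean_a


print(hard_vote(prob_a))  # B  (two of three votes)
print(soft_vote(prob_a))  # ('A', ~0.657): the average P(A) is about 65.67%
```

Classifier 1's strong confidence outweighs two barely-decided votes under soft voting, which is exactly why the two schemes disagree here.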

In the accompanying notebook, sklearn's VotingClassifier performs max voting over three models: a Support Vector Machine, XGBoost, and a Random Forest classifier. We used soft voting with equal weights for all the models. You can try it out using the code in the iPython Notebook.
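Since the notebook code is not reproduced here, the following is a minimal sketch of soft voting with scikit-learn's `VotingClassifier`. Assumptions: the data is synthetic (`make_classification`), and `GradientBoostingClassifier` stands in for XGBoost so the example needs only scikit-learn; `xgboost.XGBClassifier` follows the scikit-learn estimator interface and could be dropped into the same slot if installed.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    GradientBoostingClassifier,
    RandomForestClassifier,
    VotingClassifier,
)
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic binary classification data, for illustration only.
X, y = make_classification(n_samples=500, n_features=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

voting = VotingClassifier(
    estimators=[
        # probability=True lets the SVM output probabilities, which soft voting needs.
        ("svm", SVC(probability=True, random_state=42)),
        ("gb", GradientBoostingClassifier(random_state=42)),  # stand-in for XGBoost
        ("rf", RandomForestClassifier(random_state=42)),
    ],
    voting="soft",  # average the predicted class probabilities
    weights=None,  # None means every model gets equal weight
)
voting.fit(X_train, y_train)
print(f"Soft-voting accuracy: {voting.score(X_test, y_test):.3f}")
```

Switching `voting="soft"` to `voting="hard"` gives the majority-vote variant; hard voting does not need `probability=True` on the SVM.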

2) Averaging

Intuition

For a data point that we are trying to predict, multiple predictions are made by various models. The average of the model predictions is the final prediction that we consider.

Business Understanding

Let's say you are watching a game of cricket on television with your friends, and everyone tries to predict India's final score after considering multiple factors. Of course, everyone will have a different guess. A good estimate is the average of everyone's predictions. The stronger the individual guesses, the better the overall ensemble. The averaging ensemble model works by taking exactly this kind of simple average.

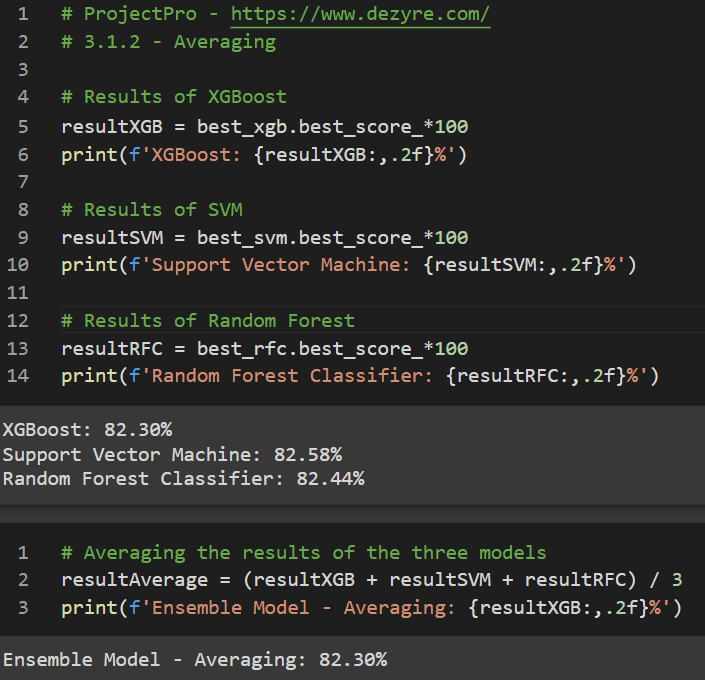

Ensemble Learning Method Python - Averaging Implementation

Once the models we are going to use are trained, the logic is simple: we take each model's prediction and average the results.

The code in the notebook takes the predictions of three models and returns the average of their outputs.
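As an illustration of simple averaging, this sketch trains three scikit-learn regressors on synthetic data and averages their predictions point by point. The choice of models and the `make_regression` data are assumptions, not the notebook's exact setup.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor

# Synthetic regression data, for illustration only.
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

models = [LinearRegression(), DecisionTreeRegressor(random_state=0), KNeighborsRegressor()]
for model in models:
    model.fit(X_train, y_train)

# One row per model, one column per test point.
predictions = np.array([model.predict(X_test) for model in models])

# Simple averaging: every model contributes equally to the final prediction.
ensemble_prediction = predictions.mean(axis=0)
```

`ensemble_prediction` has one value per test point, just like a single model's output, so it can be evaluated with the same metrics.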

3) Weighted Average

Intuition

A weighted average ensemble lets each model contribute to the prediction in proportion to how good it is. If a model performs better on the dataset in general, we give it a higher weight. Stronger models then have more influence while weaker ones still contribute, which can improve overall performance.

Business Understanding

Let's take the same example of watching a cricket match and predicting the score as accurately as possible. Say one friend has been watching cricket religiously for 20 years. We can take a weighted average in which this experienced friend is given a higher weight than somebody new to cricket.

Ensemble Learning Method Python - Weighted Average Implementation

Weights are assigned to each model based on certain factors, such as how well each model performs on held-out data. In the notebook, we assign weights to the models and get a weighted average as the output.
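One way to pick the weights is to measure each model on a validation set. The sketch below weights each regressor by the inverse of its validation mean squared error and normalizes the weights to sum to 1; that weighting rule, the models, and the synthetic data are all assumptions chosen for illustration, and hand-picked weights work the same way.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=400, n_features=5, noise=10.0, random_state=0)
# Hold out a validation set to judge each model, and a test set for the ensemble.
X_train, X_temp, y_train, y_temp = train_test_split(X, y, test_size=0.4, random_state=0)
X_val, X_test, y_val, y_test = train_test_split(X_temp, y_temp, test_size=0.5, random_state=0)

models = [LinearRegression(), DecisionTreeRegressor(random_state=0), KNeighborsRegressor()]
for model in models:
    model.fit(X_train, y_train)

# Assumed weighting rule: inverse validation MSE, normalized to sum to 1,
# so models with smaller errors get larger weights.
val_mse = np.array([mean_squared_error(y_val, m.predict(X_val)) for m in models])
weights = (1.0 / val_mse) / np.sum(1.0 / val_mse)

# Weighted average of the test-set predictions (one row per model).
test_preds = np.array([m.predict(X_test) for m in models])
weighted_prediction = np.average(test_preds, axis=0, weights=weights)
print("Weights:", np.round(weights, 3))
```

On this linear synthetic data, linear regression has the smallest validation error, so it receives the largest weight, much like the experienced cricket fan.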

4) Bagging

Intuition

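Bagging stands for Bootstrap Aggregating. In everyday usage, bootstrapping means making the best of a situation using existing resources; in statistics, it means creating new samples from the data you already have by sampling with replacement. Aggregating means combining the outputs of several models into one result. Bagging has two main steps: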

- Bootstrapping: use the existing training data to create multiple new training sets by sampling with replacement, so the same training sample can appear more than once.

- Aggregating: train one model on each bootstrapped set, then aggregate the models' outputs to get the final result.

Business Understanding

Let's say you are teaching kids in fifth grade, and you have 50 True or False questions that you would like them to answer. You decide to teach (train) the kids on 40 questions and test them on ten. In this scenario, the 50 questions are the entire dataset, the 40 questions are the training data, and the ten questions are the test data.

Let's say you have 25 children. One option is to teach them all together and then test them all together. This makes full use of the 40 training questions, but every child has learned in exactly the same way.

A more innovative approach is to give each of the 25 children a random set of questions drawn from the 40 training questions, where the same question can be picked more than once. Because the draws are random, each child sees a somewhat different set, and some questions are left out for each child. Making the best use of the resources at hand in this way is called bootstrapping.

Next, the kids try to answer the ten test questions. Each child answers true or false for each of the ten questions, and for each question we take a max vote over all 25 answers to get the final result. This is called aggregating.

In conclusion, we bootstrap the train data we have, make multiple models, and aggregate the results. The combination of bootstrapping and aggregating is called bagging.
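The classroom analogy maps directly onto code. This from-scratch sketch (synthetic data, decision trees as the base model; both are assumptions) draws 25 bootstrap samples, like the 25 children, trains one tree per sample, and takes a majority vote on the test set.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=10, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=1)

rng = np.random.default_rng(1)
n_models = 25  # like the 25 children in the example
models = []
for _ in range(n_models):
    # Bootstrapping: sample row indices with replacement, so rows can repeat.
    idx = rng.integers(0, len(X_train), size=len(X_train))
    models.append(DecisionTreeClassifier(random_state=0).fit(X_train[idx], y_train[idx]))

# Aggregating: majority (max) vote across models for each test point.
all_preds = np.array([m.predict(X_test) for m in models])  # shape (n_models, n_test)
# Labels are 0/1 here, so the majority vote is "mean of the votes > 0.5";
# n_models is odd, so there are no ties.
bagged_pred = (all_preds.mean(axis=0) > 0.5).astype(int)
print(f"Bagged accuracy: {np.mean(bagged_pred == y_test):.3f}")
```

Each tree sees a different resampled training set, so their mistakes differ, and the vote smooths them out.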

Ensemble Learning Method Python - Bagging

Let's get on with a bit of jargon. First, remember what you have learned, and then try to read the official definition of Bagging.

Bagging fits multiple learners on random samples of the training data drawn with replacement (bootstrapping) and then combines their predictions by voting or averaging (aggregation). The combination of both steps is called bagging.

The sklearn library provides BaggingClassifier, and more details can be found in the official scikit-learn documentation. The notebook's code performs a grid search over the bagging classifier's hyperparameters to find the best possible model.
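A minimal sketch of a grid search over `BaggingClassifier` follows. The parameter grid is illustrative, not the notebook's, and the data is synthetic. No base estimator is passed, so scikit-learn's default (a decision tree) is used, which avoids the `base_estimator`/`estimator` argument rename across scikit-learn versions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = make_classification(n_samples=500, n_features=10, random_state=7)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=7)

# Illustrative grid: number of bagged models and bootstrap sample size.
param_grid = {
    "n_estimators": [10, 50],
    "max_samples": [0.5, 1.0],  # fraction of training rows drawn for each model
}
search = GridSearchCV(BaggingClassifier(random_state=7), param_grid, cv=5)
search.fit(X_train, y_train)
print("Best parameters:", search.best_params_)
print(f"Test accuracy: {search.score(X_test, y_test):.3f}")
```

`GridSearchCV` refits the best combination on the full training set, so `search` can be used directly for predictions afterwards.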

5) Boosting

Intuition

This one is fun. Boosting does what its name says. First, a model is trained and its results are obtained. Some points will be classified correctly and the rest incorrectly. We then give more weight to the incorrectly classified points and train the next model. What happens? Because those points now carry a higher weight, the next model focuses more on getting them right. We keep repeating this process, creating a sequence of models in which each one tries to correct the errors of the previous ones.
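The reweighting loop described above is the core of discrete AdaBoost. This from-scratch sketch uses depth-1 decision trees ("stumps") as weak learners and synthetic data; the number of rounds and the clipping constant are assumptions made for the example. After each round, misclassified points gain weight and correctly classified points lose weight.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

X, y01 = make_classification(n_samples=400, n_features=8, random_state=3)
y = np.where(y01 == 1, 1, -1)  # discrete AdaBoost uses labels in {-1, +1}

n_rounds = 30
weights = np.full(len(X), 1.0 / len(X))  # start with equal weight on every point
stumps, alphas = [], []

for _ in range(n_rounds):
    # Fit a weak learner (a depth-1 tree) that pays more attention to heavy points.
    stump = DecisionTreeClassifier(max_depth=1).fit(X, y, sample_weight=weights)
    pred = stump.predict(X)
    err = np.sum(weights[pred != y])  # weighted error; the weights sum to 1
    err = np.clip(err, 1e-10, 1 - 1e-10)  # avoid division by zero and log(0)
    alpha = 0.5 * np.log((1 - err) / err)  # how much this learner counts in the vote
    # Misclassified points (y * pred == -1) are scaled up, correct ones scaled down.
    weights *= np.exp(-alpha * y * pred)
    weights /= weights.sum()
    stumps.append(stump)
    alphas.append(alpha)


def boosted_predict(X_new):
    # Final prediction: sign of the alpha-weighted sum of the weak learners' votes.
    scores = sum(a * s.predict(X_new) for a, s in zip(alphas, stumps))
    return np.where(scores >= 0, 1, -1)


print(f"Training accuracy: {np.mean(boosted_predict(X) == y):.3f}")
```

Each stump alone is a weak learner; the alpha-weighted vote of all 30 forms the strong learner.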

Business Understanding

Say you are teaching a kid a topic in mathematics. They make mistakes as they start, and with each session, you highlight their error so that they do not repeat it. After many sessions, it is clear to the kid how best to differentiate between right and wrong. This gradual improvement was made by highlighting (boosting) their mistakes (like misclassifications, as stated in our previous example).

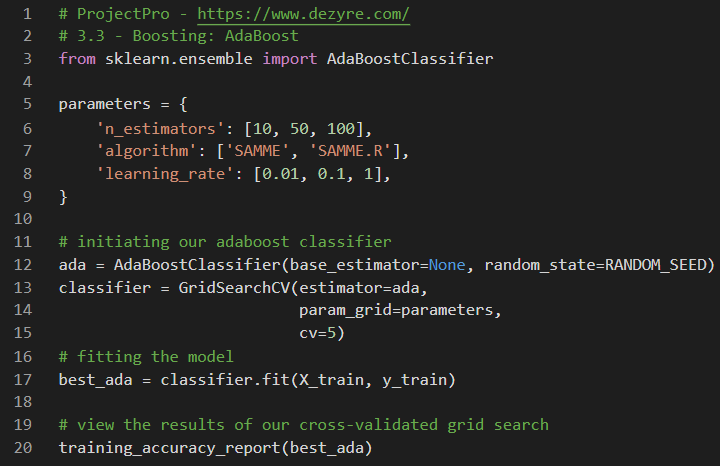

Ensemble Learning Method Python - Boosting

Each model in the sequence is usually simple and, on its own, only somewhat better than random guessing, which is why it is called a weak learner. The boosting algorithm combines several weak learners to form a strong learner. There are different kinds of boosting algorithms, and popular ones include XGBoost and AdaBoost.

The two popular boosting models, XGBoost and AdaBoost, are implemented with code in the downloadable iPython Notebook for Ensemble Learning mentioned at the beginning of the blog. We use XGBClassifier() and AdaBoostClassifier() to implement the models.
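For readers without the notebook, here is a minimal sketch comparing two boosting classifiers on synthetic data. It uses scikit-learn's `AdaBoostClassifier` and, as a swapped-in stand-in for `XGBClassifier`, scikit-learn's `GradientBoostingClassifier`, so only scikit-learn is required. `xgboost.XGBClassifier` also follows the scikit-learn fit/score interface and can be added to the dictionary if the `xgboost` package is installed.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=500, n_features=10, random_state=5)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=5)

models = {
    "AdaBoost": AdaBoostClassifier(n_estimators=100, random_state=5),
    # Gradient boosting from scikit-learn, standing in for XGBoost here.
    "Gradient boosting": GradientBoostingClassifier(n_estimators=100, random_state=5),
}
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # test-set accuracy
    print(f"{name}: {scores[name]:.3f}")
```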

6) Stacking

Intuition

Stacking is an advanced ensemble learning technique, so it may be worth reading this topic more than once. The idea of stacking is that we first make predictions on the train and test datasets with a few different models. For instance, we run a Random Forest model on the train and test datasets and get its predictions. Then, we run a support vector machine on the train and test data and get its outputs too.

Here's the critical part. We no longer use the original train and test features. Instead, the random forest and support vector machine outputs on the train data become the new training data, and their outputs on the test data become the new test data. A final model, the meta-model, is trained on this new data.
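The procedure above can be sketched by hand. One adjustment, an assumption beyond the description: the meta-model's training features are built with out-of-fold predictions (`cross_val_predict`), because predictions a model makes on its own training rows are overly optimistic and would leak into the meta-model. The data is synthetic and logistic regression is an assumed choice of meta-model.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.svm import SVC

X, y = make_classification(n_samples=500, n_features=10, random_state=11)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=11)

base_models = [RandomForestClassifier(random_state=11), SVC(probability=True, random_state=11)]

# New training data: out-of-fold P(class 1) from each base model, so the
# meta-model never sees predictions a base model made on its own training rows.
meta_train = np.column_stack([
    cross_val_predict(m, X_train, y_train, cv=5, method="predict_proba")[:, 1]
    for m in base_models
])
# New test data: base models refit on all training rows, then applied to the test set.
meta_test = np.column_stack([
    m.fit(X_train, y_train).predict_proba(X_test)[:, 1] for m in base_models
])

# The meta-model learns how to combine the base models' outputs.
meta_model = LogisticRegression().fit(meta_train, y_train)
print(f"Stacked accuracy: {meta_model.score(meta_test, y_test):.3f}")
```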

Business Understanding

The figure below helps illustrate this. The essence of stacking is that we rely on the predictions of models trained on the base data to make the final predictions. If the base models were similar, their outputs would be similar too, and stacking would add little. So we deliberately choose different models, since each may have learned certain parts of the data better than the others.

Ensemble Learning Method Python - Stacking

Stacking typically uses heterogeneous weak learners and combines them by training a meta-model to output predictions based on the multiple predictions returned by these weak learners.

Here, we use the StackingClassifier() from sklearn to implement stacking of random forest and support vector machine.
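A minimal sketch of the same idea with scikit-learn's `StackingClassifier`, stacking a random forest and a support vector machine. The synthetic data and the logistic regression meta-model (`final_estimator`) are assumptions for the example. `StackingClassifier` builds the meta-model's training data from cross-validated base-model predictions internally.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = make_classification(n_samples=500, n_features=10, random_state=13)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=13)

stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(random_state=13)),
        ("svm", SVC(random_state=13)),  # its decision_function output feeds the meta-model
    ],
    final_estimator=LogisticRegression(),  # the meta-model
    cv=5,  # base-model predictions for the meta-model come from 5-fold CV
)
stack.fit(X_train, y_train)
print(f"Stacking accuracy: {stack.score(X_test, y_test):.3f}")
```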

7) Blending

Intuition

Blending follows an approach similar to stacking. The main difference is that blending uses a holdout validation set to produce the meta-model's training data. The base models' predictions on the validation set are used to train the meta-model, and their predictions on the test set are used to test it.

Business Understanding

In other words, the base models are trained on the training set. Their predictions on the validation set become the training data of the meta-model, and their predictions on the test set become its test data. These predictions are stacked side by side as features for the final model.

Ensemble Learning Method Python - Blending

The sklearn library does not natively support blending, so we use custom code to implement what we have discussed so far.

The notebook's code implements blending using the train, validation, and test sets together with a Logistic Regression model.
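Because blending needs custom code, here is one possible sketch. Assumptions: synthetic data, a 60/20/20 train/validation/test split, a random forest and k-nearest neighbors as base models, and logistic regression as the meta-model. The base models see only the training set; the meta-model is fit on their validation-set predictions and evaluated on their test-set predictions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=600, n_features=10, random_state=17)
# Three-way split: train (base models), validation (meta-model), test (final check).
X_train, X_temp, y_train, y_temp = train_test_split(X, y, test_size=0.4, random_state=17)
X_val, X_test, y_val, y_test = train_test_split(X_temp, y_temp, test_size=0.5, random_state=17)

base_models = [RandomForestClassifier(random_state=17), KNeighborsClassifier()]
for m in base_models:
    m.fit(X_train, y_train)


def base_features(X_part):
    # Each base model's P(class 1) becomes one feature column for the meta-model.
    return np.column_stack([m.predict_proba(X_part)[:, 1] for m in base_models])


# Validation-set predictions train the meta-model; test-set predictions evaluate it.
meta_model = LogisticRegression().fit(base_features(X_val), y_val)
print(f"Blended accuracy: {meta_model.score(base_features(X_test), y_test):.3f}")
```

Compared with stacking, this avoids cross-validation but leaves fewer rows for the meta-model, since the validation set is held out from the base models.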

Can Ensemble Learning be used for both regression and classification problems?

The short answer

Yes, ensemble learning can be used for both regression and classification problems.

The detailed answer

Some ensemble learning methods, such as max voting, apply to classification problems, while averaging plays the same role for regression. Techniques such as Gradient Boosting (GBM) work for both classification and regression problems. The examples above include instances of both regression and classification. Ensemble models work by reducing bias, variance, or both, to improve the accuracy of models.
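To show the regression side, this sketch uses scikit-learn's `VotingRegressor`, which averages the predictions of its regressors, the regression counterpart of voting. The choice of regressors and the synthetic data are assumptions for the example.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor, VotingRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=21)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=21)

# VotingRegressor averages the regressors' predictions for each sample.
reg = VotingRegressor([
    ("lr", LinearRegression()),
    ("rf", RandomForestRegressor(random_state=21)),
    ("gb", GradientBoostingRegressor(random_state=21)),
])
reg.fit(X_train, y_train)
print(f"R^2 on test set: {reg.score(X_test, y_test):.3f}")
```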

How do these ensemble learning techniques help improve the performance of the machine learning model?

The short answer

Ensemble learning improves model results by combining what many models have learned, rather than relying on a single model. The example at the very beginning of the blog highlights this.

The detailed answer

Ensemble models work by combining multiple base learners into a single strong learner. Depending on the method, this can decrease bias (as in boosting), decrease variance (as in bagging), or both, which improves predictions.

There are two groups of ensemble models, depending on how the base learners are trained:

- Sequential ensemble models: The logic is to leverage the dependence between the base learners. The mistakes made by the first model are corrected by the second model, and so on, which mainly reduces bias. For example, the AdaBoost ensemble model is sequential.

- Parallel ensemble models: The logic is to leverage the independence between the base learners. The mistakes made by one model tend to differ from those made by another independently trained model, which lets the ensemble average out the errors and mainly reduces variance. For example, the Random Forest model is a parallel ensemble of independent Decision Trees.

An interesting point to note here is that combining very similar learners does not make much of a difference, because they tend to make the same mistakes. Combining a variety of heterogeneous or weak learners is what helps improve generalization.

Key Takeaways

If you have spent time and gone through the entire blog and the code iPython Notebook, you have learned the theory and implementation of Ensemble Learning Models in Machine Learning.

In this article, ensemble models have been demystified. We analyzed the fundamental ensemble models used in production as well as the ensemble models popular in Kaggle competitions, such as Bagging, Boosting, Blending, and Stacking. With detailed explanations and code, this article aims to be your one-stop solution for understanding ensemble models.

Here is a quick recap of the key takeaways from this long but fun blog on ensemble learning models:

- Some of the best models implemented across many use cases are ensemble models, so knowing how ensemble models work can help you substantially improve model performance.

- Ensemble Learning is the process wherein multiple models (weak learners) are combined to make a single integrated model (strong learner)

- Fundamental ensemble models can give well-generalized results without adding much complexity, which makes them useful for machine learning in production (MLOps)

- Complex ensemble models such as bagging and boosting tend to yield the best performance, but at the cost of increased complexity and computing power

- Ensemble models can be used for a plethora of problem types. They can be used for classification and regression problems

- Ensemble models can be divided into two broad groups of sequential ensemble models and parallel ensemble models

- The best way to learn Data Science is to DO Data Science!

Congratulations on making it all the way to the end! You are now ready to take on a new dataset and attempt to create an ensemble model of your own.

Author

Anish Mahapatra

Senior Data Scientist
