Don’t Do Your POC In A Sandbox. Here’s Why.

This article was written in collaboration with Michael Segner, Product Marketing Manager at Monte Carlo and Bob Manfreda, Account Executive at Monte Carlo.

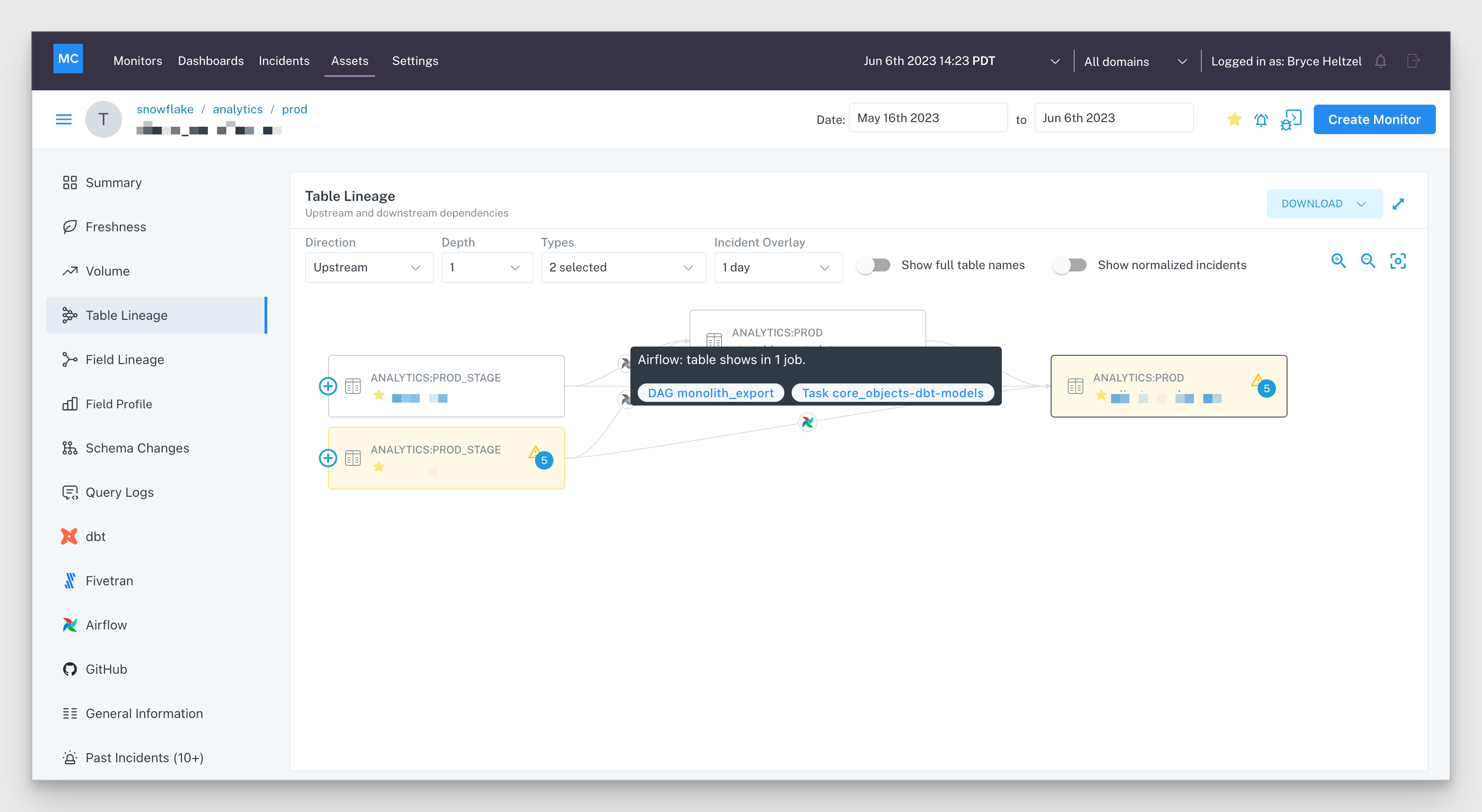

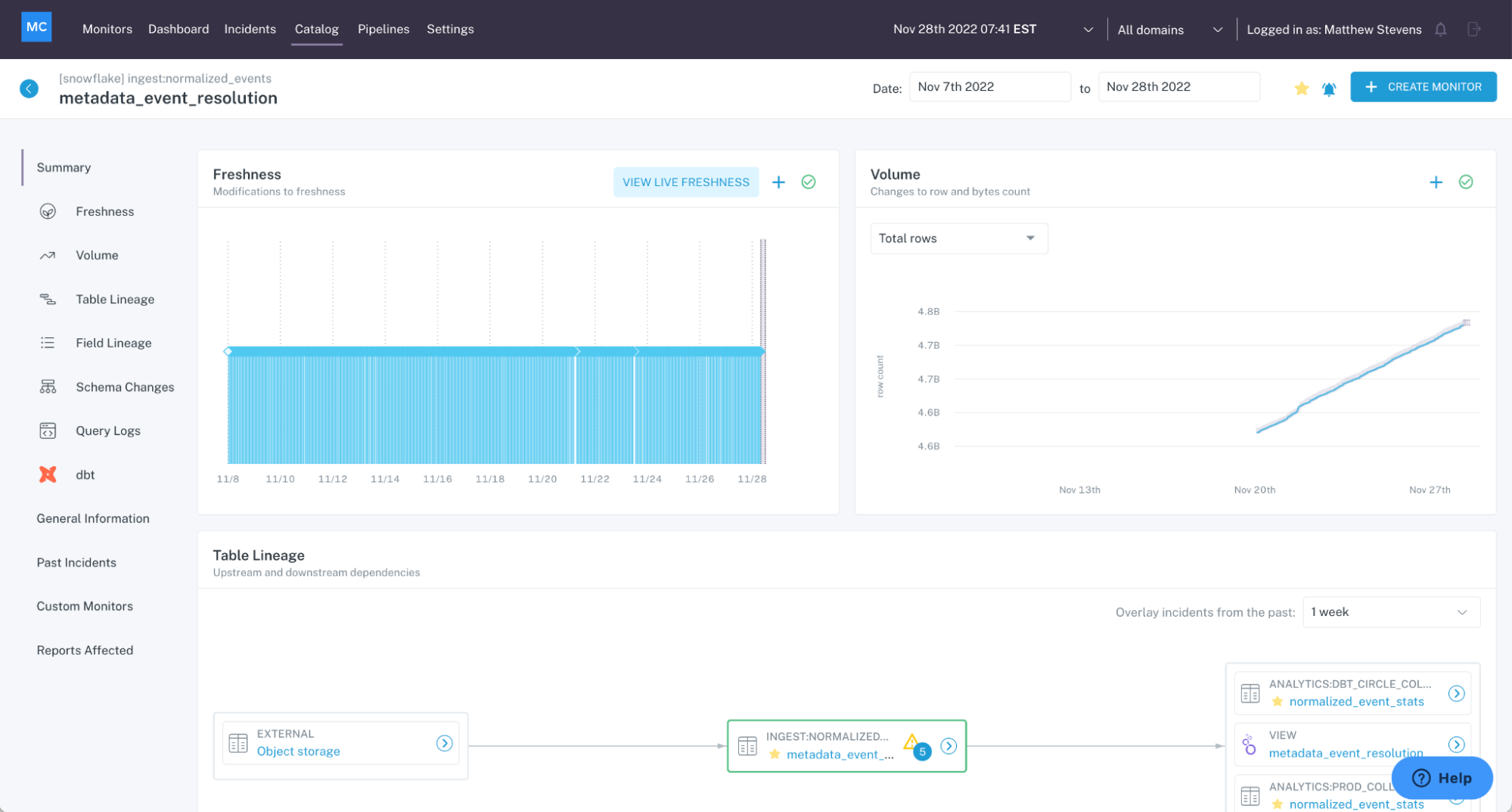

I maintain a sandbox environment for Monte Carlo’s solution engineers to demo our data observability solution. I take a lot of pride in creating a realistic environment that mirrors the architecture our customers use to deliver data every day.

I use Mockaroo to simulate source data. It travels from transactional databases through real pipelines orchestrated with modern tooling before landing in the data warehouses and lakehouses most commonly used by data teams. There it moves through raw, silver, and gold layers as it takes the shape of common data products like financial reporting or feature tables for machine learning applications.

This sandbox is a very helpful tool for quickly showing how our solution works and its value. If a picture is worth a thousand words, then a demo is often worth a thousand pitch decks.

It can even be helpful for teams moving with urgency that are quickly checking a box to determine technical feasibility.

But I’m under no illusion that a sandbox environment, even one as well fleshed out as this one, can help prospects with their most detailed analyses during a proof-of-concept. I will even go so far as to suggest that any vendor that says otherwise is aware their solution is not performant in production environments.

So let’s dive in and discuss the limitations a sandbox environment has in evaluating a solution across the dimensions of technology, process, and people. This will be tailored to data observability solutions specifically, but these principles apply to all data solutions.

Technology

A well-made sandbox environment can tell you a lot about a solution’s technical feasibility and usability. Things like:

- Does it integrate with our key data tools and databases? How easily?

- Can my team get a sense of the ease of use of the tool? Is it easy to configure key elements such as monitors?

- What does an alert look like?

- Do I like the way they report on data reliability levels? Is it a nice-looking dashboard?

The problem is this: the data isn’t your data. It is going to feel foreign to your team. If you want to understand whether the solution will work for you, then you need to get reps applying monitors to the things you care about.

And of course a sandbox has never seen an edge case, and it’s definitely never seen your edge cases. Just to cite a few examples from an infinite pool of possibilities:

- In the sandbox environment, all tables follow a pristine hourly load cadence using only straightforward incremental loads. In your environment, load patterns range from hourly merges with complex CDC and soft deletes to weekly truncate-and-reloads, and you need to ensure that your tool of choice is compatible with your primary method of moving data (Monte Carlo is).

- In the sandbox environment, Delta table monitoring went smoothly. But your environment actually contains Delta Live Tables that might be unsupported by your vendor (Monte Carlo has your back).

- In a sandbox environment, you will rarely get to evaluate monitoring of not just the tables, but also the relationships between the specific segments of the data that are important to you.
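To make the load-cadence example concrete, here is a minimal sketch of a freshness check that learns each table's cadence from its own load history rather than assuming everything arrives hourly. It is an illustration only, not how Monte Carlo or any other vendor implements freshness monitoring; the function names, the median-gap heuristic, and the `tolerance` parameter are all assumptions made for this example.

```python
from datetime import datetime, timedelta
from statistics import median


def typical_interval(load_times):
    """Infer a table's usual gap between loads from its own history."""
    ordered = sorted(load_times)
    gaps = [(later - earlier).total_seconds()
            for earlier, later in zip(ordered, ordered[1:])]
    if not gaps:
        raise ValueError("need at least two load timestamps")
    return timedelta(seconds=median(gaps))


def is_stale(load_times, now, tolerance=1.5):
    """True when the time since the last load exceeds tolerance x the usual gap."""
    return now - max(load_times) > typical_interval(load_times) * tolerance


# Hypothetical load histories: one hourly table, one weekly truncate-and-reload.
start = datetime(2024, 1, 1)
hourly_loads = [start + timedelta(hours=h) for h in range(4)]
weekly_loads = [start + timedelta(weeks=w) for w in range(3)]

# 100 minutes after the last hourly load: past the 90-minute allowance.
hourly_stale = is_stale(hourly_loads, hourly_loads[-1] + timedelta(minutes=100))
# Three days after the last weekly load: well within the 10.5-day allowance.
weekly_stale = is_stale(weekly_loads, weekly_loads[-1] + timedelta(days=3))
```

A fixed "alert after 90 minutes" rule tuned on the sandbox's hourly tables would page someone about the weekly table almost every day. That kind of mismatch only shows up when the check runs against your real load patterns.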

Edge cases aren’t the only, or even most important, limitation of executing a POC in a sandbox environment. The hard truth is that no sandbox is going to operate at the scale and complexity of your production environment. For example, in production:

- There are more pipelines delivering larger volumes of data at higher velocities;

- The dependencies between systems and jobs are much more complex;

- Real source data is more nuanced and varied than mock or synthetic data; and

- There are overarching layers like security and governance influencing the platform.

The number one reason we see vendors advocate a sandbox proof-of-concept is to avoid the scale of production…and the amount of compute their solution adds to your bill as a result. The second is to obscure the amount of time it takes to configure their solution at scale.

Our perspective is that there is no such thing as a successful POC that is blind to the maintenance levels required or the total cost of ownership (and I think your CFO would agree).
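As a back-of-the-envelope illustration of why scale matters for cost, the sketch below multiplies out a simple monitoring workload. Every number in it (table counts, monitors per table, run frequency, cost per run) is a made-up placeholder, not a benchmark or any vendor's pricing, and real cost models are rarely this linear.

```python
def daily_monitor_cost(tables, monitors_per_table, runs_per_day, cost_per_run):
    """Rough daily warehouse spend from monitor queries (all inputs are illustrative)."""
    return tables * monitors_per_table * runs_per_day * cost_per_run


# Hypothetical sandbox: 50 tables, 2 monitors each, hourly runs, $0.01 per run.
sandbox = daily_monitor_cost(tables=50, monitors_per_table=2,
                             runs_per_day=24, cost_per_run=0.01)

# Hypothetical production: same settings, but 5,000 tables.
production = daily_monitor_cost(tables=5000, monitors_per_table=2,
                                runs_per_day=24, cost_per_run=0.01)

print(f"sandbox: ${sandbox:,.2f}/day, production: ${production:,.2f}/day")
```

Even in this simplified model, the same per-run cost is negligible in the sandbox and a real budget line in production, which is why the total cost of ownership can only be measured at production scale.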

People and Process

Data observability isn’t just a technology, it’s an operational process. As JetBlue’s Senior Manager of Data Engineering says, “An observability product without an operational process to back it is like having a phone line for 911 without any operators to receive the calls.”

One of the biggest challenges with evaluating data observability solutions is gathering key requirements. For most teams, this will be the first time that they are hands-on with a tool in the category, and it is hard to define critical capabilities upfront. The initial focus is typically on requirements that answer the question “How can we catch everything that may break in our data?” as opposed to “How can we identify and take action on business critical data incidents?”

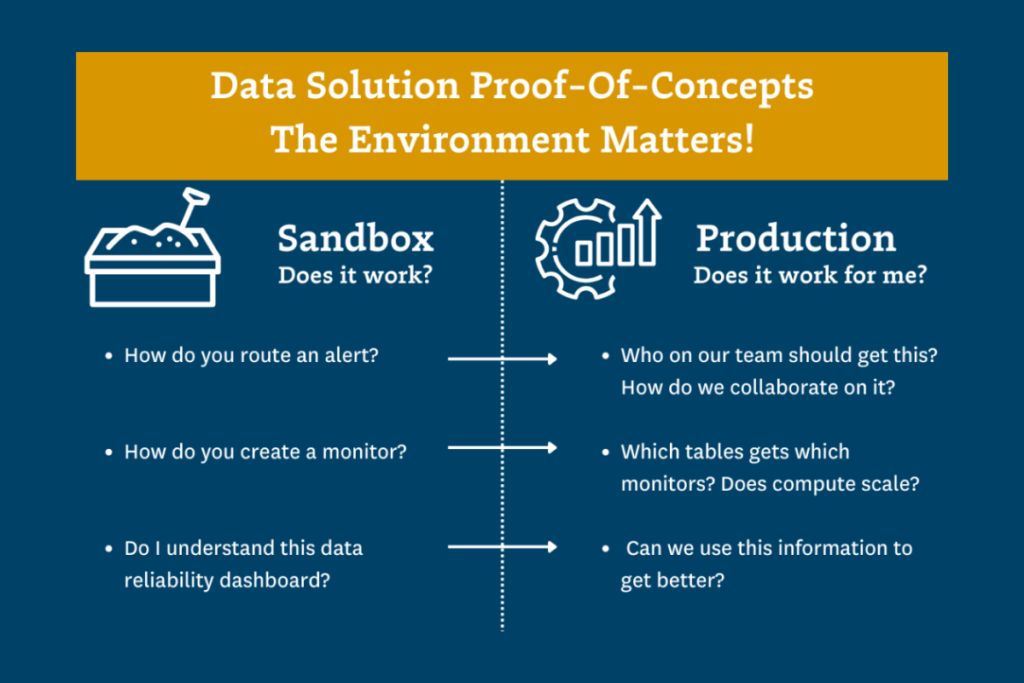

Sandbox environments exacerbate this challenge. They are great at highlighting key product workflows like:

- What does an incident look like?

- What types of monitoring are available?

- How is an alert routed?

- How can I see all open incidents?

…but they aren’t great at highlighting whether those key product workflows will work for your team.

That’s because your team doesn’t hang out in a sandbox environment and will inevitably not spend the time to simulate responding to synthetic incidents. The other teams you need to work with–the data producers making source data changes or the data consumers that need to be warned–aren’t in the sandbox either.

Without familiarity with the data in question, the evaluation team is missing out on a potential “aha” moment in uncovering data incidents with real business implications.

But when a monitor catches a real incident in a real environment and sends a real alert to real people? Now you can see how your operational response is transformed (or not) by the solution under evaluation. And as a bonus, you are starting to work out a new organizational muscle.

Now, you can answer questions one level deeper like:

- Which team should be routed which alert?

- How can our data producers receive alerts on relevant incidents downstream they may have inadvertently caused?

- How should teams respond once they receive an alert?

- How will hand-offs happen between teams? What needs to be documented where to ensure smooth collaboration?

- What consumers need to be alerted of what type and severity of incident?

- Does this data quality dashboard provide the information teams need to improve?
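One way to reason about the routing questions above is to write the policy down as data. The sketch below is hypothetical: the `Incident` and `RoutingPolicy` classes, the channel names, and the severity scale are invented for illustration and do not correspond to any vendor's API. It routes each incident to the owning team, loops in the upstream data producer when one is known, and only notifies consumers once the severity crosses a threshold.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Incident:
    table: str
    domain: str
    severity: int  # assumed scale: 1 = low, 3 = business critical
    upstream_owner: Optional[str] = None  # producer team's channel, if known


@dataclass
class RoutingPolicy:
    owners: dict[str, str]  # domain -> owning team's channel
    consumers: dict[str, list[str]] = field(default_factory=dict)
    consumer_min_severity: int = 3
    fallback: str = "#data-platform"

    def route(self, incident: Incident) -> list[str]:
        """Return the channels to notify, in order, without duplicates."""
        recipients = [self.owners.get(incident.domain, self.fallback)]
        if incident.upstream_owner:
            recipients.append(incident.upstream_owner)
        if incident.severity >= self.consumer_min_severity:
            recipients.extend(self.consumers.get(incident.domain, []))
        return list(dict.fromkeys(recipients))  # dedupe, keep first-seen order


policy = RoutingPolicy(
    owners={"finance": "#finance-data"},
    consumers={"finance": ["#fp-and-a"]},
)

critical = policy.route(Incident("gold.revenue", "finance", 3, "#billing-eng"))
minor = policy.route(Incident("gold.revenue", "finance", 1, "#billing-eng"))
unowned = policy.route(Incident("raw.clickstream", "marketing", 2))
```

Writing the rules down this way tends to surface the gaps quickly: domains with no owner, producers nobody knows how to reach, and consumers who were never asked what severity they care about. Those gaps are only visible when real incidents reach real teams.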

Not to mention that when data observability is operationalized in a real environment you also get to evaluate how the vendors will support your implementation and ensure your success. That’s important too.

Nothing acts like prod, but prod

All of this isn’t to say sandboxes are worthless or you should never use them to deepen your understanding of how a solution works. For example, an abbreviated sandbox evaluation is a great option when producing a short list of solutions that your team believes may work for you with the added benefit of shifting that initial cost burden off of your shoulders.

But if you communicate that a detailed, comprehensive, and accurate evaluation is important to you and a vendor points you to a sandbox POC, you need to start asking questions. While integrating with production for a proof-of-concept is often a more involved process, it dramatically reduces the risk of acquiring new technology.

Interested in hearing more about data observability and what a proof-of-concept might look like? Talk to us by filling out the form below!
