Enterprise Data Quality: 3 Quick Tips from Data Leaders

It’s 2024, and the data estate has changed. Data systems are more diverse. Architectures are more complex. And with the acceleration of AI, that’s not changing any time soon.

But even though the data landscape is evolving, many enterprise data organizations are still managing data quality the “old” way: with simple data quality monitoring.

The basics haven’t changed: high-quality data is still critical to successful business operations. But data quality monitoring alone is no longer enough to consistently deliver and maintain high-quality data at scale.

So, what are enterprise data teams doing to turn the data quality tide?

We spoke with the data teams at JetBlue, Fox, and Credit Karma about their top tips for data quality in the post-modern data stack. Here’s what they had to say.

Tip #1: Leverage automated data lineage for resolution and KPIs to measure success

Just knowing that an issue occurred isn’t enough. Enterprise data teams need to know the table, the column, and the row where it happened.

Airline behemoth JetBlue supports 3,400 analyst-facing tables and views across the company, totaling 5 petabytes of data.

With a data organization of that scale, and an evolving AI strategy on deck, the team needed visibility into their data pipelines to mitigate issues faster and build data trust downstream.

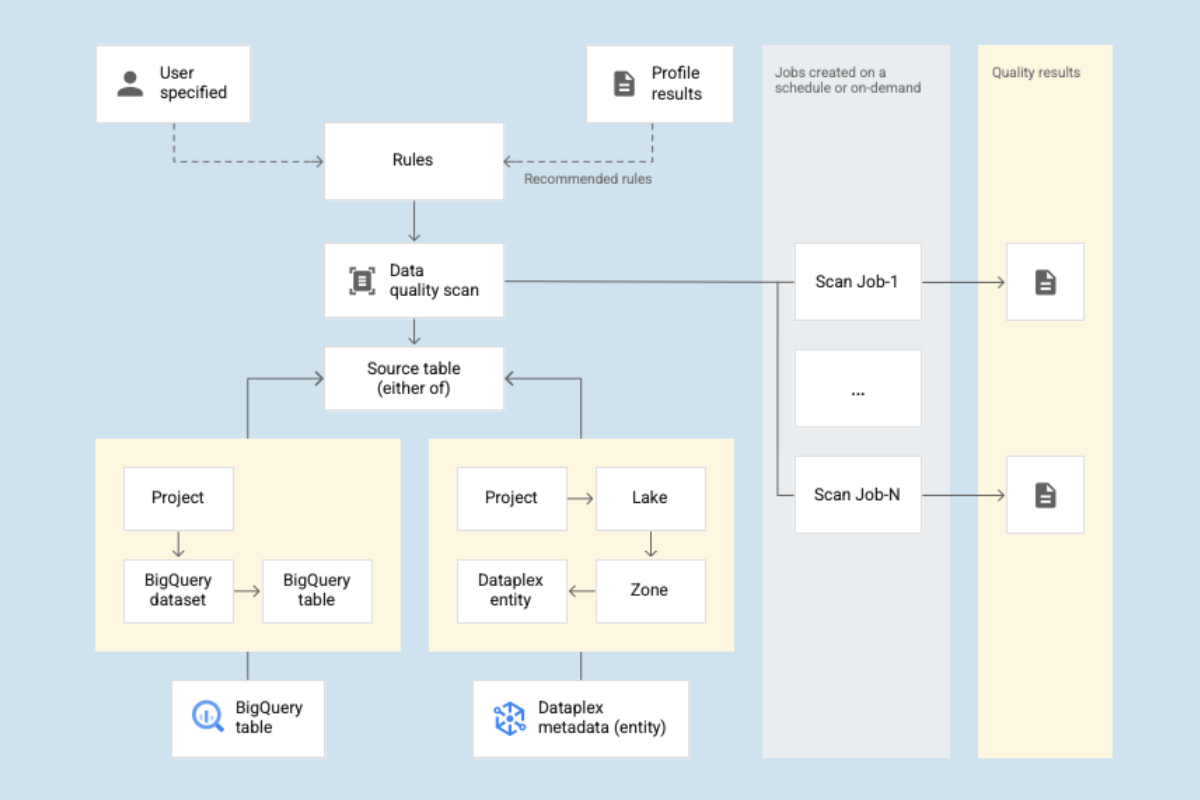

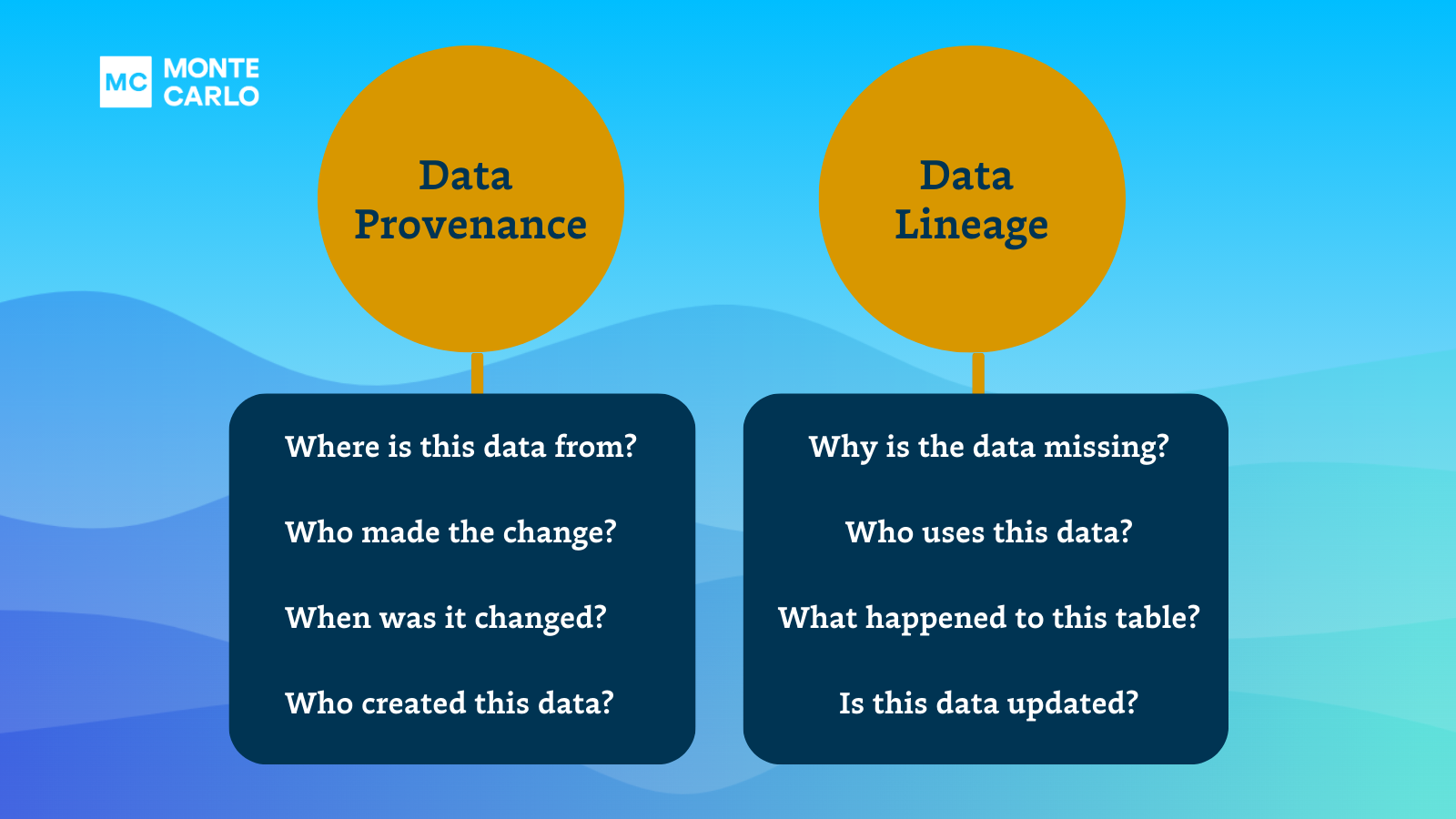

After leveraging data observability for automated volume, freshness, and schema change monitors, the JetBlue team took their data quality strategy a step further, operationalizing automated data lineage and key asset scores to gain critical operational context for data quality alerts.

This enables the team to triage and resolve incidents faster, and to assign ownership and accountability through their primary channels. For JetBlue’s data engineering team, that’s Microsoft Teams.
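To make the idea of lineage-driven triage concrete, here is a minimal sketch of how a lineage graph can answer "what is affected downstream of this table?" The table names, the `LINEAGE` mapping, and the `downstream_assets` helper are all hypothetical and invented for illustration; this is not JetBlue’s setup or Monte Carlo’s API.

```python
from collections import deque

# Hypothetical lineage graph: each table maps to the tables that read from it.
# All names are made up for illustration.
LINEAGE = {
    "raw.bookings": ["staging.bookings"],
    "staging.bookings": ["analytics.daily_revenue", "analytics.load_factor"],
    "analytics.daily_revenue": ["reports.exec_dashboard"],
    "analytics.load_factor": [],
    "reports.exec_dashboard": [],
}


def downstream_assets(lineage, table):
    """Return every asset reachable downstream of `table`, breadth-first."""
    seen = []
    queue = deque(lineage.get(table, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already visited via another path
        seen.append(current)
        queue.extend(lineage.get(current, []))
    return seen


# If an alert fires on staging.bookings, these are the assets to check next.
impacted = downstream_assets(LINEAGE, "staging.bookings")
print(impacted)
```

In practice the graph would be derived automatically from query logs or pipeline metadata rather than written by hand; the traversal itself stays the same.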

“Before we onboarded Monte Carlo, the data operations team’s primary focus was to just monitor pipeline runs that failed,” said Ashley Van Name, Senior Manager of Data Engineering at JetBlue.

“But now, they’ve got this extra level of insight where they are able to say ‘something is wrong with this table’ rather than just ‘something is wrong with this pipeline.’ And, in some cases, we see that the pipeline works fine and everything is healthy, but the data in the table itself is incorrect. Monte Carlo helps us see these issues, which we did not previously have a way to easily visualize.”

But being able to do something and actually doing it aren’t the same thing. So, with the ability to detect and resolve data quality incidents more easily, the JetBlue team turned to a set of internal KPIs to measure their success.

Their metrics included:

- Percentage of incidents classified

- Time to resolution

- Number of incidents overall

- Number of incidents per dataset

- User engagement

- Percentage of healthy data overall
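As a rough illustration, several of these KPIs can be computed from a simple incident log. The sketch below assumes a hypothetical record shape (`dataset`, `classified`, `opened`, `resolved`) and uses made-up sample data; it is not JetBlue’s actual data or tooling.

```python
from collections import Counter
from datetime import datetime

# Made-up incident log; the field names are assumptions for this sketch.
incidents = [
    {"dataset": "bookings", "classified": True,
     "opened": datetime(2024, 1, 1, 8, 0), "resolved": datetime(2024, 1, 1, 12, 0)},
    {"dataset": "bookings", "classified": False,
     "opened": datetime(2024, 1, 2, 9, 0), "resolved": datetime(2024, 1, 2, 11, 0)},
    {"dataset": "crew", "classified": True,
     "opened": datetime(2024, 1, 3, 10, 0), "resolved": None},  # still open
    {"dataset": "fares", "classified": True,
     "opened": datetime(2024, 1, 4, 7, 0), "resolved": datetime(2024, 1, 4, 13, 0)},
]


def incident_kpis(incidents):
    """Compute a few incident KPIs from a list of incident records."""
    total = len(incidents)
    classified = sum(1 for i in incidents if i["classified"])
    # Time to resolution only makes sense for incidents that are closed.
    hours = [
        (i["resolved"] - i["opened"]).total_seconds() / 3600
        for i in incidents
        if i["resolved"] is not None
    ]
    return {
        "incidents_total": total,
        "pct_classified": 100 * classified / total if total else 0.0,
        "mean_hours_to_resolution": sum(hours) / len(hours) if hours else None,
        "incidents_per_dataset": dict(Counter(i["dataset"] for i in incidents)),
    }


kpis = incident_kpis(incidents)
print(kpis)
```

User engagement and the percentage of healthy data depend on how a team defines them, so they are left out of this sketch.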

Tip #2: Exercise controlled freedom with stakeholders

As data—and more importantly, its use-cases—continue to grow, enabling self-service for stakeholders becomes an increasingly attractive proposition.

Domain-driven architecture certainly isn’t for everyone…but it’s definitely for someone—and that someone tends to be enterprise organizations with the domain talent and scale to make the best use of it.

Of course, while self-service certainly has its benefits for enterprise teams that can wield it, it’s not without its risks. More data access means more opportunities. And those opportunities can be good or bad depending on how they’re managed.

The team at media giant Fox Networks solved this problem by creating a centralized data team to control a few key areas: how data is ingested, how data is kept secure, and how data is optimized into the best format before being published to standard executive reports.

When the centralized team can ensure data sources are trustworthy, data is secure, and the company is using consistent metrics and definitions for high-level reporting, it gives data consumers the confidence to freely access and leverage data within that framework.

Alex Tverdohleb, VP of Data Services at Fox Networks, calls this level of self-service “controlled freedom.”

“Everything else, especially within data discovery and your ad-hoc analytics, should be free,” said Alex. “We give you the source of the data and guarantee it’s trustworthy. We know that we’re watching those pipelines multiple times every day, and we know that the data inside can be used for X, Y, and Z, so just go ahead and use it how you want. I believe this is the way forward: striving towards giving people trust in the data platforms while supplying them with the tools and skill sets they need to be self-sufficient.”

Instead of siloing data and causing bottlenecks with the data team, the Fox team created a framework by setting parameters around how data is ingested, how data is secured, and how data is published in the best format for data consumers.

The best of both worlds.

Tip #3: Invest in data quality before you invest in AI

Seemingly every CEO and board director wants an AI initiative in the next 12 months—and that’s all well and good if they’ve got the use-case and requisite data quality to support it.

The problem is—quite simply—many of them don’t.

For any GenAI initiative to be successful, it needs to drive business value – and that comes from augmenting or fine-tuning an LLM with reliable business context. But that reliable business context doesn’t come without intentional effort.

Credit Karma is one team that’s doubled down on generative AI the right way. While the team is busy leveraging GenAI to provide differentiated and personalized experiences for users, quality, security, and access within their LLMs remain top-of-mind.

“[For example] we don’t want the LLM to randomly give some made-up number when someone asks for their credit score. And we want to make sure that if someone is asking for their credit score, they can only ask for their credit score — not someone else’s,” said Vishnu Ram, VP of Engineering at Credit Karma.

So, that means the Credit Karma team needs visibility into the data feeding the model, how the model is working, and how changes to the data might affect the output, as well as into any additional data sources, such as those retrieved through a RAG architecture, that enrich the model. And data observability is the key to making sure that’s all working as expected.

“We don’t have any choice — we need to be able to observe the data,” Vishnu said. “We need to understand what data we’re putting into the LLM, and if the LLM is coming up with its own thing, we need to know that — and then know how to deal with that situation. If you don’t have observability of what goes into the LLM and what comes out, you’re screwed.”

Data quality – and data observability – are foundational to GenAI initiatives that deliver real business value.

If an enterprise organization is unable to consistently deliver reliable, high-quality data, it is even further from delivering reliable enterprise AI.

Data observability is the key to enterprise data quality management

For enterprise data teams, data quality monitoring is a great start—but it’s not enough.

Successful enterprise data leaders need visibility into the entire pipeline – at the data, systems, and code level – to enable truly reliable data at scale.

In the next era of data reliability, enterprise data teams need more than data quality monitoring: they need data observability.

Ready to learn how data observability can power your next-gen data quality strategy? Give us a call!
