What is ETL Pipeline? Process, Considerations, and Examples

Understand the purpose of an ETL pipeline and how it differs from a data pipeline, with an example of building an end-to-end ETL pipeline from scratch.

If you are into Data Science or Big Data, you must be familiar with an ETL pipeline. If not, then don't worry. This guide provides definitions, a step-by-step tutorial, and a few best practices to help you understand ETL pipelines and how they differ from data pipelines.

The crux of all data-driven solutions or business decision-making lies in how well businesses collect, transform, and store data. When working on real-world business problems, data scientists build models using various Machine Learning or Deep Learning algorithms. But before building models, the first and most significant task is to acquire correct and meaningful data to draw insights from. You might have heard the famous saying: Garbage In, Garbage Out (GIGO).


GIGO means that incomplete, imprecise, or flawed input data produces flawed results. On computers that follow predefined logic, a wrong input can result in unrecognizable output (garbage). For example, suppose you build a calculator program that asks for an integer input, but the user enters a string or alphanumeric text. You may get an unexpected result. A well-written program avoids producing garbage output by not accepting bad input in the first place, using techniques like exception handling. Now that we understand how significant a role data plays, a few more questions arise:

- How do we acquire or extract raw data from the source? Can the source be single or multiple?

- How do we transform this data to get valuable insights from it?

- Where do we finally store or load the transformed data?

That's where the ETL (Extract, Transform, and Load) pipeline comes into the picture! In extract-transform-load (ETL), data is obtained from multiple sources, transformed, and stored in a single data warehouse, where data analysts, data scientists, and business analysts can access it for data visualization, statistical analysis, model building, forecasting, etc.

Table of Contents

- What is ETL Pipeline?

- ETL Pipeline vs. Data Pipeline - The Differences

- ETL Pipeline Architecture

- ETL Pipeline Tutorial - How to Build an ETL Pipeline?

- ETL Pipeline Python

- ETL Pipeline Tools

- Best Practices on How To Build ETL Pipelines

- ETL Pipeline Airflow Best Practices

- ETL Pipeline SQL Best Practices

- ETL Pipeline Azure Best Practices

- ETL Pipeline AWS Best Practices

- Spark ETL Pipeline Best Practices

- Snowflake ETL Pipeline Best Practices

- ETL Pipeline Example

- Getting Started with Building an ETL Pipeline

- FAQs on ETL Pipeline

What is ETL Pipeline?

An ETL (Extract, Transform, and Load) pipeline extracts data from multiple sources like transaction databases, APIs, or other business systems, transforms it, and loads it into a cloud-hosted database or a cloud data warehouse for deeper analytics and business intelligence. Before looking at ETL data pipelines in more detail, let us first understand data pipelines through a straightforward layman's example.

In the good old days, people walked miles to fetch water for their basic needs from water sources like wells, rivers, ponds, etc. It was a manual process and very challenging as well.


As times changed, fetching water the traditional way became impractical. Our needs also increased; we wanted more water for various purposes, and we wanted it delivered automatically and more frequently. The invention of the pipeline system automated the delivery of water to homes via pipes from various water sources. The concept of data pipelines is no different from the water pipeline. We have data sources and data consumers, and to connect them, we use data pipelines.

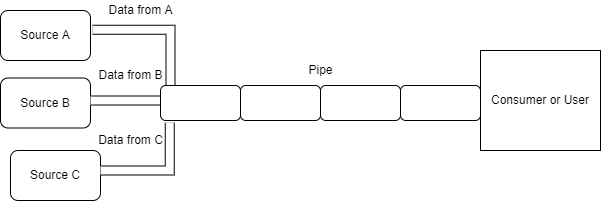

Simple Architecture of Data Pipelines

The example and diagram above describe data pipelines in general. The natural next question is: what is the difference between data pipelines and ETL pipelines, and how do you decide which one to use?

ETL Pipeline vs. Data Pipeline - The Differences

It is common to interchangeably refer to ETL (Extract, Transform, and Load) pipelines and data pipelines. Although both terms refer to processes for moving data from various sources to a single data warehouse, they are not the same. Here are three key differences between ETL pipelines and data pipelines:


1) ETL Pipeline vs. Data Pipeline: ETL Pipelines are Subsets of the Data Pipeline

The ETL (Extract, Transform, and Load) pipeline concludes by loading data into a database or data warehouse. A Data Pipeline doesn't always end with loading data. Instead, loading data can trigger new processes and flows in a Data Pipeline by triggering webhooks in other systems.

2) ETL Pipeline vs. Data Pipeline: ETL Pipelines Always Involve Transformation

A Data Pipeline involves moving data between different systems but does not necessarily include transforming it. Data is extracted from a source, transformed, and then loaded into its output destination using ETL, as its acronym suggests.

3) ETL Pipeline vs. Data Pipeline: ETL Pipelines are Typically Batch-Processed, While Data Pipelines Can Also Run in Real Time

Furthermore, ETL pipelines typically move data in batches at regular intervals; the pipeline might run twice per day or at a time when system traffic is low. In contrast, a data pipeline can also run as a real-time process involving streaming computations and continuously updating data.

ETL Pipeline Architecture

ETL (Extract, transform, and Load) pipeline architecture delineates how your ETL data pipeline processes will run from start to finish. It contains information on data flow from a source to a target location and its transformation steps.

Let’s understand each stage of ETL data pipelines in more detail.

Extract

The "Extract" stage of ETL data pipelines involves gathering data from multiple data sources; this data eventually appears as rows and columns in your analytics database. Historically, data extraction meant retrieving information from files like Excel, CSV, and text files. Extracting from such raw files is still prevalent, as they were long the primary sources of customer information. But now, with the increase in web and mobile application usage, most businesses find valuable information for their data pipelines on web pages, through web scraping, or via APIs. For example, we can gather Amazon product reviews to do sentiment analysis and provide recommendations to buyers, or scrape job postings from different company career pages and build a job portal using this data.

There are three commonly used extraction approaches:

- Full Extraction: The entire data set is extracted from the source and pushed into the data pipeline.

- Incremental Extraction: Each time a data extraction process (such as an ETL pipeline) runs, only new data and data that has changed since the last run are collected, for example, when collecting data through an API.

- Source-Driven Extraction: The source notifies the ETL system when data changes, triggering the ETL pipeline to extract the new data.
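The difference between full and incremental extraction can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical `orders` table with an `updated_at` column and using Python's built-in `sqlite3` module as a stand-in for a real source system; the table, column names, and timestamps are invented for the example.

```python
import sqlite3


def extract_full(conn):
    """Full extraction: return every row from the source table."""
    return conn.execute(
        "SELECT id, amount, updated_at FROM orders ORDER BY id"
    ).fetchall()


def extract_incremental(conn, last_run):
    """Incremental extraction: return only rows changed after the previous run."""
    return conn.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY id",
        (last_run,),
    ).fetchall()


# Hypothetical source table, built in memory for illustration only.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, amount REAL, updated_at TEXT)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2023-01-01T08:00:00"),
        (2, 25.5, "2023-01-02T09:30:00"),
        (3, 40.0, "2023-01-03T11:15:00"),
    ],
)

full_rows = extract_full(source)
# The watermark is the timestamp saved at the end of the previous run.
new_rows = extract_incremental(source, "2023-01-01T23:59:59")
```

The incremental version relies on the source recording when each row last changed; ISO-formatted timestamps are used here so that plain string comparison orders them correctly.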

Transform

Most data science professionals are familiar with the phrase "Garbage in, garbage out." We have already seen how important it is to feed good-quality data into any solution. To improve data quality, we apply data transformation techniques. This is a critical step when building ETL data pipelines, as the value of our data depends on how well we transform it to fit our needs.

Let us look at some examples of transformation steps taken to process the data.

- Basic Cleaning: Converting data into a suitable format as per our requirement. For example, suppose some date input doesn't have any specific format. In that case, the user can provide it differently, like DD/MM/YY or DD/MM/YYYY or MM/DD/YYYY, etc. We can convert it into a standard format as per our needs.

- Join Tables: If our source is RDBMS or SQL tables, we might need to join or merge multiple data tables.

- Filtering: Sometimes, we only need a subset of the data, and we can filter the relevant data and discard everything else. It will, in turn, also increase the speed of our data transformation process.

- Aggregation: To summarize all rows within a group defined by one or more columns, apply aggregate functions such as average, minimum, maximum, median, percentile, and sum. For example, we can compute total sales revenue by region, store, or product.
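The four transformation steps above can be sketched with pandas. This is only an illustration under stated assumptions: the `sales` and `stores` tables, their column names, and their values are hypothetical, and the date strings are assumed to be in DD/MM/YYYY format.

```python
import pandas as pd

# Hypothetical sales and store tables used only for illustration.
sales = pd.DataFrame({
    "store_id": [1, 1, 2, 2, 3],
    "sale_date": ["05/01/2023", "06/01/2023", "05/01/2023", "07/01/2023", "08/01/2023"],
    "revenue": [100.0, 150.0, 200.0, 50.0, 75.0],
})
stores = pd.DataFrame({
    "store_id": [1, 2, 3],
    "region": ["North", "South", "North"],
})

# Basic cleaning: parse DD/MM/YYYY strings into a standard datetime column.
sales["sale_date"] = pd.to_datetime(sales["sale_date"], format="%d/%m/%Y")

# Join tables: attach the region of each store to its sales.
joined = sales.merge(stores, on="store_id", how="left")

# Filtering: keep only sales on or after 6 January 2023.
recent = joined[joined["sale_date"] >= pd.Timestamp(2023, 1, 6)]

# Aggregation: total revenue by region.
revenue_by_region = joined.groupby("region")["revenue"].sum()
```

Passing an explicit `format` to `pd.to_datetime` avoids the DD/MM versus MM/DD ambiguity mentioned in the cleaning step.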

You may encounter the following data issues while transforming data.

Duplicates: This is a frequently occurring issue. It is crucial to understand whether identical records are duplicated by mistake or are legitimate. For instance, a person may genuinely have bought the same product twice on the same day at the same store. If the identical records are indeed duplicates, we can safely remove them.

Outliers: In manually entered databases, data entry errors are possible. For instance, someone might enter a person's age as 150 or 200 due to a typo.

Missing Data: Data values that are not recorded for a variable in an observation are called missing data or missing values in statistics. Missing data is a common problem that can affect the conclusions drawn from the data. We can drop the affected records if enough data points remain for our analysis after removing them. Otherwise, we can return to the extraction step and see if an additional data set can fill in the gaps, or impute the missing values using the mean, median, mode, regression-based imputation, etc.

Corrupted Text: When we extract data stored in upstream systems via APIs or scraping, the data may look messy. For example, address data may have misspelled street names or incorrect zip codes, and mobile numbers may have special symbols and country codes prepended to them. We might need to discuss these issues with the data administration team and check how to resolve them. To fix address-related issues, we can use a geocoding service such as the Google Geocoding API to obtain well-formatted location data.
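As a rough sketch of how these issues might be handled in pandas, the example below works on a small hypothetical `customers` table. The plausible age range of 0 to 120 and the choice of median imputation are assumptions made for illustration, not rules from the text.

```python
import pandas as pd

# Hypothetical customer records with a duplicate, an implausible age,
# a missing age, and inconsistently formatted phone numbers.
customers = pd.DataFrame({
    "customer_id": [1, 2, 2, 3, 4],
    "age": [34.0, 28.0, 28.0, 150.0, None],
    "phone": ["+1 (555) 010-1234", "555.010.5678", "555.010.5678",
              "5550109999", "555-010-0000"],
})

# Duplicates: drop rows that are identical in every column.
cleaned = customers.drop_duplicates().copy()

# Outliers: treat ages outside the assumed plausible range as missing.
cleaned.loc[~cleaned["age"].between(0, 120), "age"] = float("nan")

# Missing data: impute missing ages with the median of the remaining values.
cleaned["age"] = cleaned["age"].fillna(cleaned["age"].median())

# Corrupted text: keep only the digits of each phone number.
cleaned["phone"] = cleaned["phone"].str.replace(r"\D", "", regex=True)
```

Whether to drop, impute, or re-extract is a judgment call that depends on the data set; the sketch only shows the mechanics of each option.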

Load

The final step of creating ETL data pipelines is to load data, and the final destination of the processed data could vary based on the business requirement and analysis required of the data. The following are examples of target databases or data warehouses where you can store your processed data.

Flat Files:

CSV, TXT, and Excel spreadsheets are standard text file formats for storing data. Nontechnical users can easily access these data formats without installing data science software.

SQL RDBMS:

A SQL database is a popular data store into which we can load our processed data.

Cloud:

Technology advancements, information security threats, faster internet speeds, and a push to prevent data loss have contributed to the move toward cloud-native storage and processing. Cloud storage is often a good option for storing processed data: it is secure and easily accessible, and you do not need to maintain your own infrastructure. It is usually the most feasible option when the data size is huge.
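The first two load targets can be sketched with Python's standard library alone. This is a minimal example, assuming hypothetical transformed rows of (region, total revenue) and using an in-memory SQLite database as a stand-in for a production RDBMS; the file and table names are invented.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical transformed rows: (region, total_revenue).
rows = [("North", 325.0), ("South", 250.0)]


def load_to_csv(rows, path):
    """Write the rows to a flat CSV file with a header line."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["region", "total_revenue"])
        writer.writerows(rows)


def load_to_sql(rows, conn):
    """Append the rows to a table in a SQL database."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS revenue (region TEXT, total_revenue REAL)"
    )
    conn.executemany("INSERT INTO revenue VALUES (?, ?)", rows)
    conn.commit()


# Flat file target, written to a temporary directory.
csv_path = Path(tempfile.mkdtemp()) / "revenue.csv"
load_to_csv(rows, csv_path)

# SQL target, using an in-memory database for the example.
conn = sqlite3.connect(":memory:")
load_to_sql(rows, conn)
loaded = conn.execute(
    "SELECT region, total_revenue FROM revenue ORDER BY region"
).fetchall()
```

Loading to cloud storage follows the same pattern but goes through the provider's own client library, which is outside the scope of this sketch.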


ETL Pipeline Tutorial - How to Build an ETL Pipeline?

Here’s a brief ETL pipeline tutorial presenting a step-by-step approach to building an end-to-end ETL data pipeline with batch processing -

Step 1. Create reference data:

Reference data contains the possible values for your data based on static references. For instance, it can specify the list of country codes allowed in a country data field. Reference data can be helpful when transforming data from a source to an output destination in your ETL data pipelines. However, this step is optional and can be omitted.

Step 2. Connectors to Extract data from sources and standardize data:

For extracting structured or unstructured data from various sources, we will need to define tools or establish connectors that can connect to these sources. The data sources can be an RDBMS or some file formats like XLSX, CSV, JSON, etc., API or web pages, and sometimes a combination of all these sources together! We need to extract data from all the sources and convert it into a single format for standardized processing.

Step 3. Validate data:

Validating the data after extraction is essential to ensure it matches the expected range, and to reject it if it does not. For example, if you are extracting the data for the past 24 hours, you would reject records older than 24 hours.

Step 4. Transform Data

Once the data is validated, we apply transformations such as removing duplicates, cleansing, standardization, applying business rules, data integrity checks, data governance checks, aggregations, and more.

Step 5. Stage Data

Transformed data is stored in this layer. Loading transformed data directly into a data warehouse or other target system is not recommended. Instead, the data first lands in a staging layer, which allows you to roll back easily if something goes wrong. Additionally, the staging layer helps generate audit reports for analysis, diagnosis, or regulatory compliance.

Step 6. Load to Data Warehouse

The data is pushed from the staging layer to the target data warehouse. You can overwrite or append the existing information each time the ETL pipeline loads a new batch.

Step 7. Scheduling

This is the last and most critical part of automating the ETL pipeline. You can schedule it to run daily, weekly, monthly, or at any custom interval, depending on your needs. We can store timestamps along with the loaded data to identify when each batch was loaded.
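Steps 1 through 6 can be strung together in a small batch pipeline. The sketch below is illustrative only: the `extract` function returns hard-coded hypothetical records in place of real connectors, the allowed country codes are invented reference data, and SQLite stands in for both the staging layer and the data warehouse.

```python
import sqlite3
from datetime import datetime, timedelta

# Step 1: reference data (hypothetical list of allowed country codes).
ALLOWED_COUNTRIES = {"US", "IN", "DE"}


def extract():
    """Step 2: stand-in for connectors; returns records in one standard format."""
    return [
        {"id": 1, "country": "us", "amount": "10.50", "ts": "2023-01-02T10:00:00"},
        {"id": 2, "country": "IN", "amount": "20.00", "ts": "2023-01-02T11:00:00"},
        {"id": 2, "country": "IN", "amount": "20.00", "ts": "2023-01-02T11:00:00"},
        {"id": 3, "country": "XX", "amount": "5.00", "ts": "2023-01-02T12:00:00"},
        {"id": 4, "country": "DE", "amount": "7.25", "ts": "2022-12-30T09:00:00"},
    ]


def validate(records, now):
    """Step 3: keep records from the past 24 hours with an allowed country code."""
    cutoff = now - timedelta(hours=24)
    return [
        r for r in records
        if datetime.fromisoformat(r["ts"]) >= cutoff
        and r["country"].upper() in ALLOWED_COUNTRIES
    ]


def transform(records):
    """Step 4: remove duplicate ids and standardize types and casing."""
    seen, rows = set(), []
    for r in records:
        if r["id"] not in seen:
            seen.add(r["id"])
            rows.append((r["id"], r["country"].upper(), float(r["amount"])))
    return rows


def stage_and_load(rows, conn):
    """Steps 5 and 6: write to a staging table, then append to the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS staging (id INTEGER, country TEXT, amount REAL)")
    conn.execute("CREATE TABLE IF NOT EXISTS warehouse (id INTEGER, country TEXT, amount REAL)")
    conn.execute("DELETE FROM staging")
    conn.executemany("INSERT INTO staging VALUES (?, ?, ?)", rows)
    conn.execute("INSERT INTO warehouse SELECT * FROM staging")
    conn.commit()


def run_pipeline(conn, now):
    """Run one batch end to end."""
    stage_and_load(transform(validate(extract(), now)), conn)


conn = sqlite3.connect(":memory:")
run_pipeline(conn, now=datetime(2023, 1, 2, 18, 0, 0))
loaded = conn.execute("SELECT * FROM warehouse ORDER BY id").fetchall()
```

For step 7, a scheduler such as cron or a workflow manager would call `run_pipeline` at the chosen interval; passing `now` in as a parameter keeps each run reproducible and easy to test.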

ETL Pipeline Python

Although Python is a simple and easy-to-understand language, building an ETL pipeline in Python requires specific skills. If your business is small and you don't have a data engineering team, you may find it challenging to build complex data pipelines from the ground up unless you are an expert in the language. However, several tools are now available that significantly simplify the creation of Python ETL data pipelines. These include self-contained toolkits like pygrametl, workflow managers like Apache Airflow and Luigi, and pandas for data movement and processing.

How to Build ETL Pipeline in Python?

This section will help you understand how to build a simple ETL pipeline using Python. Here are a few quick and easy steps of an ETL Pipeline Python example.

-

To build this ETL pipeline, you must request records from The Movie Database (TMDB) API. To submit queries, you must have an API key. Once you have your key, be careful not to insert it directly into your source code. One simple approach is to store the key in a file named config.py that you create in the same directory as your ETL script. If you plan to publish your code somewhere, you should list config.py in a .gitignore file or a similar file to prevent it from being pushed to any remote repository. Create a file called tmdb.py and import the necessary elements into that file.

-

Extract: After sending a single GET request to the API, you will receive a JSON record for the movie id you specified. In this example, you will send six movie requests with movie_ids 550 to 555. The next step is to construct a loop that makes an individual query for each movie and adds the result to a list. With the help of from_dict(), create a pandas dataframe from the records.

-

Transform: You will create a list of column names called df_columns, enabling you to choose the necessary columns from the main dataframe. Some columns, such as genres, hold a list of internal records in each row, and you can expand such a column to see and use those records. One approach is to turn the list column into one-hot categorical columns: each categorical value gets its own column, with the row value set to 1 if the movie falls within that category and 0 otherwise. Create a temporary column called genres_all that contains lists of genres, which you can later expand into individual columns for each genre.

-

Load: You will have three tables for the tmdb schema, named movies, genres, and datetimes. You can export the tables by writing them to files. The directory your script is in will then contain three .csv files.
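The transform step described above can be sketched as follows. To keep the example runnable without an API key, the records in `response_list` are hypothetical stand-ins for the JSON responses; their field names and values, and the choice of columns in `df_columns`, are assumptions made for illustration.

```python
import pandas as pd

# Hypothetical records standing in for the JSON responses from the API.
# Field names and values are assumptions made for illustration.
response_list = [
    {"id": 550, "title": "Movie A", "genres": [{"id": 18, "name": "Drama"}]},
    {"id": 551, "title": "Movie B", "genres": [{"id": 28, "name": "Action"},
                                               {"id": 18, "name": "Drama"}]},
    {"id": 552, "title": "Movie C", "genres": []},
]

# Build the main dataframe from the list of records.
df = pd.DataFrame.from_dict(response_list)

# Columns to keep from the main dataframe.
df_columns = ["id", "title"]

# Temporary column: for each movie, the list of its genre names.
df["genres_all"] = df["genres"].apply(lambda items: [g["name"] for g in items])

# One-hot encoding: one column per genre, 1 if the movie has it, else 0.
all_genres = sorted({name for names in df["genres_all"] for name in names})
for genre in all_genres:
    df[genre] = df["genres_all"].apply(lambda names: int(genre in names))

movies = df[df_columns + all_genres]
```

For the load step, a call such as `movies.to_csv("movies.csv", index=False)` would write the table next to the script.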

ETL Pipeline Tools

Here are some popular tools for building ETL (Extract, transform, and Load) data pipelines.

-

AWS Glue

The AWS ecosystem offers AWS Glue, a fully managed, cloud-based ETL service that makes it easier to get your data ready for analysis. The serverless platform has a wide range of features for additional tasks, such as the AWS Glue Data Catalog for identifying data across the organization and AWS Glue Studio for visually creating, executing, and managing ETL data pipelines.

AWS Glue is simple to use; you can create and execute an ETL job in the AWS Management Console with a few clicks. All you need to do is point AWS Glue at your AWS-stored data. AWS Glue then discovers your data and stores the associated metadata in the AWS Glue Data Catalog. Once this process is over, your data is immediately searchable and available for the ETL data pipeline.

-

Talend

One of the most significant data integration ETL tools on the market is Talend Open Studio (TOS). From the initial ETL design to the execution of the ETL data load, TOS allows you to manage every step involved in the ETL data pipeline process. You can quickly map structured or unstructured data from the source to the target systems using the graphical interface that Talend Open Studio offers. You simply drag and drop the necessary components from the palette into the workspace, set them up, and then link them. It also gives you access to a metadata library where you can quickly reuse and repurpose your work.

-

Apache Hive

Apache Hive is a data warehousing and ETL tool developed on top of the Hadoop platform for large-scale data summarization, analysis, and querying. Large data management and querying are easier with the Apache Hive data warehouse software. It offers a technique for transforming semi-structured and unstructured data into useful schema-based data. Hive makes it easier for those familiar with SQL and who work with standard RDBMS databases to access and modify data in Hadoop.

Best Practices on How To Build ETL Pipelines

Extraction, transformation, and loading (ETL) processes are the foundation of every organization's data management strategy. Implementing a set of best practices for managing ETL data pipelines improves the reliability and efficiency of these processes. Let's explore some of the ETL best practices that businesses adopt.

-

ETL Logging

One of the best practices for maintaining ETL data pipelines is ETL logging, which entails recording all events before, during, and after an ETL process. Every organization is different and needs its own solution, so an ETL process cannot be chosen using a predefined method. Maintaining appropriate logs makes it easier to make this decision and to customize your ETL data pipeline process.

-

Improve the Quality of Your Data

If you want faster, reliable data outputs, ensure the data you feed into your ETL processes is as accurate as possible. Automated data quality solutions can help with this task by finding missing and inconsistent data in your data sets.

-

Reduce Data Input

Avoid running ETL processes serially where possible. Instead, you can minimize time-to-value by running as many ETL integrations in parallel as your architecture supports. Your outputs will also be faster and cleaner if you feed the ETL process less data, which is why you should remove any unnecessary data as early as possible in the ETL data pipeline process.

-

Recovery Checkpoint

Setting up checkpoints during the ETL data pipeline process is a good idea. When transferring massive amounts of data, unexpected mistakes or failures are common. Checkpoints help record where an issue happened so that the data pipeline process does not need to be restarted from scratch. Checkpointing is therefore considered one of the best practices for ETL data pipelines.
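Logging and recovery checkpoints from the list above can be combined in a short sketch. It assumes a hypothetical batch loader and a JSON checkpoint file whose layout is invented for this example; a production pipeline would more likely rely on the checkpointing features of its orchestration tool.

```python
import json
import logging
import tempfile
from pathlib import Path

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")


def load_checkpoint(path):
    """Return the index of the last completed batch, or -1 if none is recorded."""
    if path.exists():
        return json.loads(path.read_text())["last_batch"]
    return -1


def save_checkpoint(path, batch_index):
    """Record the index of the batch that just completed."""
    path.write_text(json.dumps({"last_batch": batch_index}))


def run(batches, checkpoint_path, process):
    """Process batches in order, skipping those finished in an earlier run."""
    start = load_checkpoint(checkpoint_path) + 1
    log.info("Starting at batch %d of %d", start, len(batches))
    for i in range(start, len(batches)):
        try:
            process(batches[i])
        except Exception:
            log.exception("Batch %d failed; rerun to resume from here", i)
            return False
        save_checkpoint(checkpoint_path, i)
        log.info("Batch %d loaded", i)
    return True


# Demonstration with a hypothetical loader that fails once on the second batch.
checkpoint = Path(tempfile.mkdtemp()) / "checkpoint.json"
loaded, failed_once = [], []


def flaky_load(batch):
    if batch == "b" and not failed_once:
        failed_once.append(True)
        raise RuntimeError("simulated failure")
    loaded.append(batch)


first_ok = run(["a", "b", "c"], checkpoint, flaky_load)
second_ok = run(["a", "b", "c"], checkpoint, flaky_load)
```

The second call resumes at the failed batch instead of reloading the first one, and the log records where the failure happened.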

ETL Pipeline Airflow Best Practices

Here are some best practices for maintaining an Airflow ETL pipeline.

-

Regular Updates for Workflow are Necessary.

Airflow workflows are defined in Python code, and developers must keep that code current for the Airflow processes to function properly. To accomplish this, Airflow ETL professionals should sync the workflow files with a GitHub repository. It is advisable to sync the directory using the BashOperator and pull requests, and starting a workflow with a pull is a sensible habit.

-

The Right Goals For DAG Should Be Set Up.

Define and properly analyze the DAG's purpose before developing it. It is crucial to properly prepare each component, including the DAG's input, output, triggers, data integration, and third-party tools. It is necessary to reduce the DAG complexity to make maintenance simpler. A well-defined mission statement for DAG is essential, such as updating models or exporting data warehouses.

-

Choose the Best Provider of Airflow ETL Services.

The main cause of data pipeline project failure is a lack of technical skills. Apache Airflow for ETL data pipeline optimization demands strong technical proficiency. To successfully complete such a data pipeline project, you must have individuals with relevant tech expertise.

ETL Pipeline SQL Best Practices

Here are some best practices for maintaining a SQL ETL pipeline.

-

Automation Choices

Consider data pipeline tools that integrate easily with your business data sources. The ideal option would be to create a routine without using any code (drag-and-drop components into your ETL data flow). The ETL data pipeline process shouldn't require much (if any) technical attention to keep loaded data relevant and up-to-date once connected.

-

A Complex Data Transformation Toolkit

The best ETL tools include prewritten SQL statements that may be used to convert data into valuable insights. Additionally, you can discover connectors with external tools that provide powerful data transformations.

-

Automatic Adoption of Regulations

Compliance with regional rules and regulations is necessary even when you alter data. This applies broadly, from nonprofit organizations to high-volume retail enterprises.

ETL Pipeline Azure Best Practices

Here are some best practices for maintaining an Azure Databricks ETL pipeline.

-

Use Azure Databricks Notebooks to Update ETL data pipelines.

You can speed up your ETL processes with Azure Databricks by parallelizing operations across scalable compute clusters. This approach is ideal if you expect a rapid increase in the volume, velocity, and variety of data in your ETL data pipelines. Using Delta Lake, you can use Databricks notebooks and your SQL expertise to query your data lake.

-

Validate and Migrate Your ETL Workflow

When you are ready to initiate your ETL migration, start by moving your SSIS logic to Databricks notebooks so you can dynamically test and perform data transformations. Create ETL data pipelines in Azure Data Factory after the notebooks function well to automate your ETL processes. Review the data in your target data lake or data warehouse, look over logs for anomalies, schedule ETL processes, and set up notifications to examine the outcomes of the transfer from SSIS to Databricks. Moving your ETL workloads and processes to the cloud data warehouse facilitates your ability to accelerate outcomes, reduce costs, and improve dependability.

-

Migrate SSIS Data Pipelines to the Cloud

Customers have successfully transferred numerous on-premises ETL software products to Azure. There are a few options, and which one you select will depend on a few factors:

-

Data pipeline's complexity (number and types of input and target data source).

-

Access to the data repositories at the source and destination data warehouse.

-

Applications that depend on the source data repository in terms of both number and type.

ETL Pipeline AWS Best Practices

Here are some best practices for maintaining an AWS ETL pipeline.

-

Ensure Consistent ETL Runtimes

One of the best strategies for keeping ETL runtimes consistent is to configure the ETL queue with a small number of slots. ETL processes are commit-heavy, so fewer slots reduce the wait time in the commit queue. In Amazon Redshift, an ETL job can claim the extra memory available in its queue by setting wlm_query_slot_count. This accelerates the COPY process and enables concurrent execution of downstream ETL tasks. Once the ETL process is finished, the memory can be released for use by the reporting queue.

-

Integrate Multiple Steps

It is essential to complete several jobs in a single transaction to streamline the ETL data pipeline and improve the AWS data pipeline. This approach improves performance and minimizes commit costs by doing one commit at the end of the execution of the transformation logic.

-

Tracking ETL Performance

Users should keep track of the condition of their ETL data pipeline process using monitoring scripts. For instance, you can use the commit_stats.sql script to review past commit queue lengths and durations. This can reveal whether execution times are longer than usual or whether several ETL processes are running simultaneously.

When regular COPY executions take longer than expected, the copy_performance.sql script can analyze incoming datasets and offer insight into their growth.
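The "Integrate Multiple Steps" practice above, running several steps and committing once at the end, can be illustrated generically. The sketch below uses Python's built-in `sqlite3` module as a stand-in for the warehouse, so it shows only the transaction pattern and not any AWS-specific behavior; the table names and rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging (id INTEGER, amount REAL)")
conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany(
    "INSERT INTO staging VALUES (?, ?)",
    [(1, 10.0), (2, -5.0), (3, 7.5)],
)
conn.commit()


def run_steps(conn):
    """Run several ETL steps as one transaction with a single commit."""
    # The connection context manager commits once if every statement
    # succeeds and rolls everything back if any statement raises.
    with conn:
        # Step 1: clean the staged data.
        conn.execute("DELETE FROM staging WHERE amount < 0")
        # Step 2: load the cleaned rows into the target table.
        conn.execute("INSERT INTO target SELECT id, amount FROM staging")
        # Step 3: clear the staging table for the next batch.
        conn.execute("DELETE FROM staging")


run_steps(conn)
target_rows = conn.execute("SELECT id, amount FROM target ORDER BY id").fetchall()
staging_count = conn.execute("SELECT COUNT(*) FROM staging").fetchone()[0]
```

Besides reducing the number of commits, grouping the steps this way means a failure in any step leaves the target table unchanged.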

Spark ETL Pipeline Best Practices

Apache Spark is a powerful Big Data platform that simplifies building ETL data pipelines. You can easily load and process petabytes of data by establishing a cluster of multiple nodes. You can also build an ETL pipeline using PySpark because it enables you to integrate Python and Spark features into your ETL workflow.

Here are some best practices for maintaining a Spark ETL pipeline.

-

Workload Balancing Through Randomization

The fundamentals of Spark memory management include the number of cores and memory size for executors. There is a lengthy list of options, including overhead memory size, the number of default partitions, and others. Data engineers often experience issues with various sizes of applications in the pipeline, such as a long-tail pipeline (one final application takes a very long time), larger applications hindering smaller applications, and other issues. Although they can use delicate engineering to solve these problems, randomization is a more efficient solution. In addition to distributing the workload evenly (facilitating cluster-level resource provisioning), randomization of the application also effectively mixes various application sizes (less idleness).

-

Use Window Functions Instead of Joins

Data engineers often build intermediate tables before moving data to the data warehouse, because each intermediate table holds different business logic, and the tables are then combined to generate a final table. This pattern works fine. However, as the data manipulation chain gets longer, a straightforward join between intermediate tables later in the process can easily result in a memory issue. Even familiar methods like persist(), which caches intermediate data, may not help.

Because of these memory issues, data engineers often prefer Window functions over joins for adding new columns. This not only minimizes memory issues but also improves performance: a Window function operates on only a few columns of one table, whereas a join involves two tables. Writing Window functions is more complex than writing joins, but it makes the computation graph simpler.

-

Reorganize Pipeline Output

The most popular and effective method for performance optimization in a data pipeline is to minimize the amount of data used in your application: ingest the minimal amount of raw data, and keep intermediate data frames small. You can see a performance boost of as much as 50% if you swap out a raw data source for one with a lower level of granularity.

If alternate data sources are unavailable, you can use input = spark.read.parquet("fs://path/file.parquet").select(...) to restrict reading to only the relevant columns. Your application will run faster if it reads less data into memory. Reducing the amount of output in your target directory will also boost performance. Cleaning up your data pipeline output extends the performance benefits throughout the entire system and business, especially considering the cost of data storage and of downstream operations that must handle additional rows.


Snowflake ETL Pipeline Best Practices

Here are some best practices for maintaining a Snowflake ETL pipeline.

-

Consider Data Staging

Both Snowflake and external cloud storage such as Azure or S3 support stage references via paths. It is a best practice to partition typical data sets into logical paths before beginning Snowflake ETL. These paths can include the date the data was written and details like source identifiers or geographic location.

After doing so, you can copy files by the path with a single command. This will let you run concurrent COPY statements that match a subset of files, allowing you to benefit from Snowflake's parallel operations.

-

Data Storage

When storing data, Snowflake offers a wide range of options. All Snowflake accounts receive Continuous Data Protection (CDP), which comes with Fail-Safe and Time Travel, at no extra charge. This does not eliminate the possibility that CDP will affect your storage prices. All information in databases, tables, and schemas created by your Snowflake Architecture for your target data warehouse will be charged to your account. CDP affects the cost of storage based on the amount of data stored and how long it is kept in the data warehouse.

The Snowflake ETL pipeline process has a feature called Stages, where files with the data to be loaded are staged for loading data into tables. A "snapshot" of any schema, table, or database can be easily taken using Snowflake's zero-copy cloning functionality. A derived copy of that object is created using this feature, initially sharing the underlying storage of the data warehouse. When making instant backups, this can be useful.

-

Integrity Constraints and Clustering Keys

Referential integrity constraints should be declared in Snowflake. Snowflake does not enforce most of them, but they offer useful metadata that users can use to understand the schema and the stated relationships between tables. Clustering is a useful strategy that enhances query performance for Big Data sets. Automatic tuning and micro-partitioning features are available in Snowflake. Users typically use the Snowflake ETL process to load data into a cloud data warehouse, where it is divided into micro-partitions by datestamps or timestamps, and then query it along the same dimension.
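The data staging advice above, partitioning files into logical paths by source, location, and write date, can be sketched in plain Python. The path layout, source identifiers, regions, and file names below are all hypothetical; the example only shows how files that share a path prefix can be grouped so that each prefix can be loaded in one operation.

```python
from collections import defaultdict
from datetime import date


def stage_path(source, region, written_on, filename):
    """Build a logical path from source identifier, region, and write date."""
    return f"{source}/{region}/{written_on:%Y/%m/%d}/{filename}"


# Hypothetical files to be staged.
files = [
    ("crm", "emea", date(2023, 1, 5), "part-0.csv"),
    ("crm", "emea", date(2023, 1, 5), "part-1.csv"),
    ("crm", "apac", date(2023, 1, 5), "part-0.csv"),
]

paths = [stage_path(*f) for f in files]

# Group files by path prefix; each prefix could be the target of one load command,
# and different prefixes could be loaded concurrently.
by_prefix = defaultdict(list)
for p in paths:
    prefix, _, name = p.rpartition("/")
    by_prefix[prefix].append(name)
```

Putting the date components last in the path keeps all files for one source and region together, which is one reasonable layout among several.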

ETL Pipeline Example

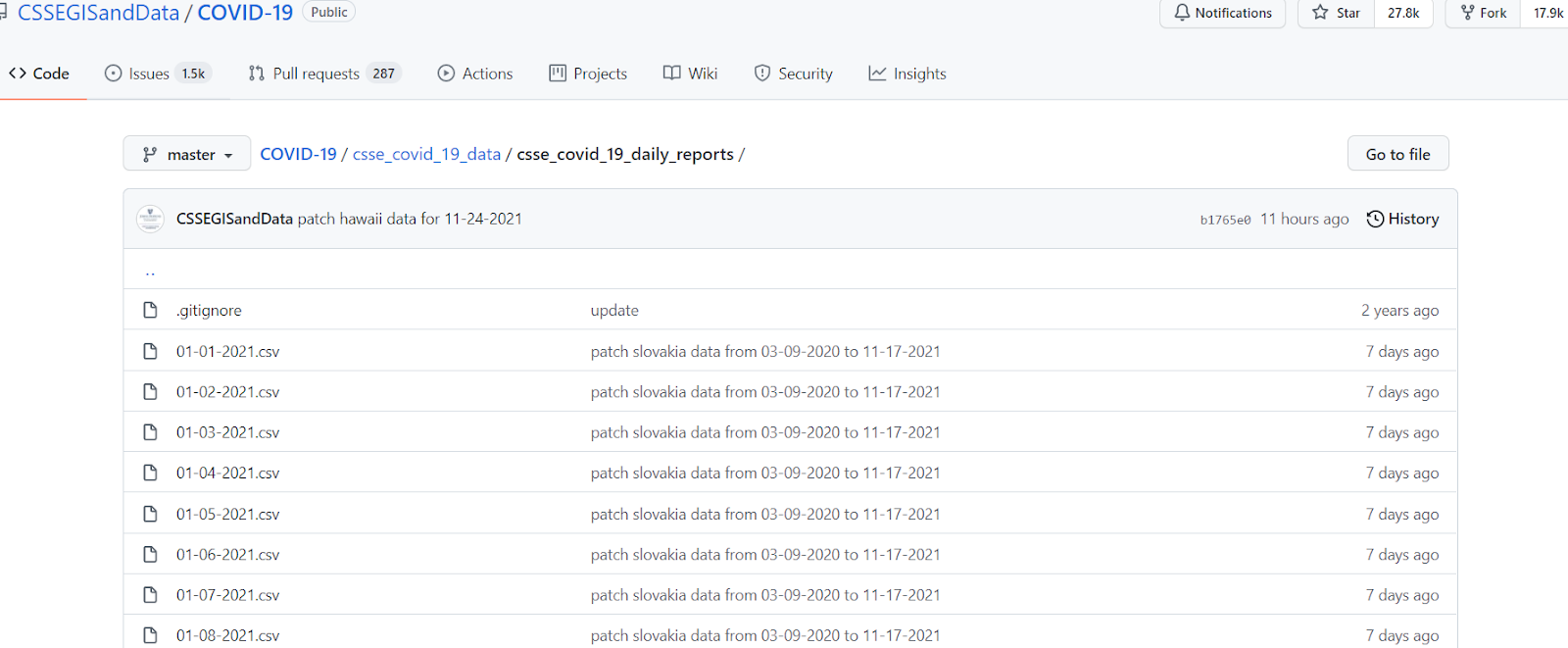

Let's take a real-world example where we can use ETL data pipelines. The spread of the COVID-19 virus that began in the initial months of 2020 still influences several parts of the world, and the vaccine has undoubtedly helped to control the spread of the virus. Johns Hopkins University monitors and updates the country-specific case count, death count, vaccination count, etc.

The above image shows the overall COVID-19 case count, total deaths, and total vaccine doses administered by country/region. At the center of the image is a world map with red spots highlighting the most adversely affected areas. You can create a dashboard like the one shown above and include more plots and graphs as you wish!

-

Data Extraction

The data source is a GitHub repository updated daily between 04:45 and 05:15 GMT. The data is present in CSV file format.

To extract data from the GitHub CSV files, we can use Python's requests library and read the data directly from the URL using the below code.

import requests

url = 'https://github.com/CSSEGISandData/COVID-19'  # repository root; replace with the URL of the raw CSV file you need
raw_string = requests.get(url).content  # response body as bytes
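
The downloaded bytes still have to be parsed before they can be transformed. The following is a minimal sketch of that step, assuming the URL you pass in points at a raw CSV file. The helper names `fetch_csv_bytes` and `csv_bytes_to_frame` are hypothetical, and the sample bytes are made up for illustration rather than taken from the repository.

```python
import io

import pandas as pd
import requests


def fetch_csv_bytes(url: str, timeout: float = 30.0) -> bytes:
    """Download a file and return its raw bytes, failing loudly on HTTP errors."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # raise on 4xx/5xx instead of parsing an error page
    return response.content


def csv_bytes_to_frame(raw: bytes) -> pd.DataFrame:
    """Parse CSV bytes into a DataFrame without writing a temporary file."""
    return pd.read_csv(io.BytesIO(raw))


# Offline demonstration with made-up sample bytes (not real case counts).
sample = (
    b"Province_State,Country_Region,1/22/20,1/23/20\n"
    b"Alabama,US,0,1\n"
    b"Alaska,US,0,0\n"
)
sample_frame = csv_bytes_to_frame(sample)
print(sample_frame.shape)  # (2, 4)
```

In a real run you would call `csv_bytes_to_frame(fetch_csv_bytes(url))`. Keeping the download and the parsing in separate functions makes it possible to test the parsing without network access.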

-

Data Transformation

Depending on our dashboard or what analysis we need to perform, we can de-pivot, join, or union some of the columns in the data. For example, in the code below, Pandas pd.melt() unpivots a DataFrame from wide to long format.

import pandas as pd

# confirmed_us is the DataFrame parsed from the CSV file; dates is the list of its date column names
us_confirmed_melt = pd.melt(
    confirmed_us,
    id_vars=['Country_Region', 'Province_State', 'Admin2', 'Lat', 'Long_'],
    value_vars=dates,
).rename(columns={
    'Country_Region': 'Country/Region',
    'Province_State': 'Province/State',
    'Lat': 'Latitude',
    'Long_': 'Longitude',
    'variable': 'date',
    'value': 'Confirmed',
})

In this pipeline, the pandas DataFrame can be considered the staging area.
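
To see what the wide-to-long reshaping does, here is a small self-contained sketch on a made-up two-row table. It assumes that every column that is not an identifier is a date column, and it uses the `var_name` and `value_name` parameters of `pd.melt()` to name the new columns directly. The values are illustrative only.

```python
import pandas as pd

# Made-up wide-format sample: one row per location, one column per date.
confirmed_us = pd.DataFrame(
    {
        "Country_Region": ["US", "US"],
        "Province_State": ["Alabama", "Alaska"],
        "1/22/20": [0, 0],
        "1/23/20": [1, 0],
    }
)

id_columns = ["Country_Region", "Province_State"]
# Assumption: every column that is not an identifier is a date column.
dates = [column for column in confirmed_us.columns if column not in id_columns]

# Unpivot: each (location, date) pair becomes its own row.
long_frame = pd.melt(
    confirmed_us,
    id_vars=id_columns,
    value_vars=dates,
    var_name="date",
    value_name="Confirmed",
)
# Convert the date labels from strings to timestamps for sorting and plotting.
long_frame["date"] = pd.to_datetime(long_frame["date"], format="%m/%d/%y")

print(long_frame)
```

The two-row, two-date table becomes four rows, one per location and date, which is the shape most dashboard tools expect for time series.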

-

Data Loading

Our final output is a dashboard. We can load the transformed data into a visualization tool like Tableau or Power BI to create a report or dashboard. We can also use Python visualization libraries like matplotlib, seaborn, plotly, etc.
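
One simple way to hand the transformed data to a visualization tool is to write it to a CSV file, which both Tableau and Power BI can read. The sketch below assumes that approach. The file name and the sample rows are hypothetical, and a real pipeline might load into a database or data warehouse instead.

```python
import tempfile
from pathlib import Path

import pandas as pd

# Made-up long-format data standing in for the transformed output.
transformed = pd.DataFrame(
    {
        "Province/State": ["Alabama", "Alabama"],
        "date": pd.to_datetime(["2020-01-22", "2020-01-23"]),
        "Confirmed": [0, 1],
    }
)

# Write to a temporary directory here; a real pipeline would use a shared location.
output_dir = Path(tempfile.mkdtemp())
output_path = output_dir / "covid_confirmed_long.csv"  # hypothetical file name
transformed.to_csv(output_path, index=False)  # index=False avoids an extra unnamed column

# Read the file back to confirm that what was loaded matches what was produced.
reloaded = pd.read_csv(output_path, parse_dates=["date"])
print(reloaded.shape)  # (2, 3)
```

Reading the file back right after writing it is a cheap check that the load step did not drop rows or columns.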

The ETL data pipeline can run daily after 05:15 GMT, once the data has been updated in the GitHub repository. The dashboard you create will then be refreshed each time the pipeline loads new data from the data sources!
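
The daily schedule can be sketched with the standard library alone. The example below computes the next run time in UTC, assuming a 05:30 start as a safety margin after the 05:15 update window. Both the margin and the function name `next_run` are assumptions for illustration. In practice a scheduler such as cron or a workflow orchestrator would usually trigger the job.

```python
from datetime import datetime, time, timedelta, timezone

# Assumed safety margin: start at 05:30 UTC, after the 05:15 GMT update window.
RUN_AT_UTC = time(hour=5, minute=30)


def next_run(now: datetime) -> datetime:
    """Return the next scheduled run at or after `now` (timezone-aware), in UTC."""
    now_utc = now.astimezone(timezone.utc)
    candidate = datetime.combine(now_utc.date(), RUN_AT_UTC, tzinfo=timezone.utc)
    if candidate < now_utc:
        # Today's slot has already passed, so schedule for tomorrow.
        candidate += timedelta(days=1)
    return candidate


print(next_run(datetime(2021, 3, 1, 4, 0, tzinfo=timezone.utc)))  # 2021-03-01 05:30:00+00:00
print(next_run(datetime(2021, 3, 1, 6, 0, tzinfo=timezone.utc)))  # 2021-03-02 05:30:00+00:00
```

Working in UTC throughout avoids surprises from daylight saving changes on the machine that runs the pipeline.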

Getting Started with Building an ETL Pipeline

We know you are certainly excited about some hands-on project examples for building an end-to-end ETL data pipeline. Explore this end-to-end project on building an ETL Data Pipeline on an AWS EMR Cluster using a sales dataset. Technologies like Amazon S3, EMR, and Tableau are used to derive metrics from the data. An AWS Big Data Pipeline is built to provide batch ingestion to various consumers based on their requirements. It involves creating a fully working, scalable, reliable, and secure data pipeline on AWS EMR from scratch, including data collection, analysis, and visualization.

The industry demand for ETL developers is very high, and so is the competition in the job market. Given the rising adoption of big data across industries, gaining hands-on experience working with big data and implementing an ETL data pipeline architecture is essential. If you have experience implementing end-to-end projects using big data technologies like Hive, Kafka, Sqoop, etc., along with ETL, you will have what we call a competitive edge. As a starter, here is a big data project that uses AWS Quicksight, Druid, and Hive to simulate a complex real-world ETL data pipeline based on messaging.

Keep reading, keep learning and keep practicing!

FAQs on ETL Pipeline

-

Is ETL the same as a pipeline?

ETL is not the same as a pipeline. The term "ETL" describes a specific set of steps: extract data from a system, transform it, and load it into a target system. A data pipeline is the broader term for any set of operations that moves data from one system to another, with or without transforming it along the way.

-

What is an ETL pipeline example?

An ETL pipeline example is processing and analyzing movie review data for an online movie streaming platform.

-

What does ETL stand for?

ETL stands for Extract, Transform, Load.

About the Author

Daivi

Daivi is a highly skilled Technical Content Analyst with over a year of experience at ProjectPro. She is passionate about exploring various technology domains and enjoys staying up-to-date with industry trends and developments. Daivi is known for her excellent research skills and ability to distill