Hadoop Ecosystem Components and Its Architecture

Understand how the hadoop ecosystem works to master Apache Hadoop skills and gain in-depth knowledge of big data ecosystem and hadoop architecture.

The demand for Big data Hadoop training courses has increased after Hadoop made a special showing in various enterprises for big data management in a big way.Big data hadoop training course that deals with the implementation of various industry use cases is necessary Understand how the hadoop ecosystem works to master Apache Hadoop skills and gain in-depth knowledge of big data ecosystem and hadoop architecture.However, before you enroll for any big data hadoop training course it is necessary to get some basic idea on how the hadoop ecosystem works.Learn about the various hadoop components that constitute the Apache Hadoop architecture in this article.

Web Server Log Processing using Hadoop in Azure

Downloadable solution code | Explanatory videos | Tech Support

Start ProjectAll the components of the Hadoop ecosystem, as explicit entities are evident. The holistic view of Hadoop architecture gives prominence to Hadoop common, Hadoop YARN, Hadoop Distributed File Systems (HDFS) and Hadoop MapReduce of the Hadoop Ecosystem. Hadoop common provides all Java libraries, utilities, OS level abstraction, necessary Java files and script to run Hadoop, while Hadoop YARN is a framework for job scheduling and cluster resource management. HDFS in Hadoop architecture provides high throughput access to application data and Hadoop MapReduce provides YARN based parallel processing of large data sets.

In our earlier articles, we have defined “What is Apache Hadoop” .To recap, Apache Hadoop is a distributed computing open source framework for storing and processing huge unstructured datasets distributed across different clusters. The basic principle of working behind Apache Hadoop is to break up unstructured data and distribute it into many parts for concurrent data analysis. Big data applications using Apache Hadoop continue to run even if any of the individual cluster or server fails owing to the robust and stable nature of Hadoop.

Table of Contents

- Big Data Hadoop Training Videos- What is Hadoop and its popular vendors?

- Defining Architecture Components of the Big Data Ecosystem

- Core Hadoop Components

- 3) MapReduce- Distributed Data Processing Framework of Apache Hadoop

- MapReduce Use Case:

- >4)YARN

- Key Benefits of Hadoop 2.0 YARN Component-

- YARN Use Case:

- >Data Access Components of Hadoop Ecosystem- Pig and Hive

- >Data Integration Components of Hadoop Ecosystem- Sqoop and Flume

- >Data Storage Component of Hadoop Ecosystem –HBase

- >Monitoring, Management and Orchestration Components of Hadoop Ecosystem- Oozie and Zookeeper

- What’s new in Hadoop 3.x?

Big Data Hadoop Training Videos- What is Hadoop and its popular vendors?

Hadoop as defined by Apache Foundation-

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high-availability, the library itself is designed to detect and handle failures at the application layer, so delivering a highly available service on top of a cluster of computers, each of which may be prone to failures.

Learn Hadoop to become a Microsoft Certified Big Data Engineer.

With big data being used extensively to leverage analytics for gaining meaningful insights, Apache Hadoop is the solution for processing big data. Apache Hadoop architecture consists of various hadoop components and an amalgamation of different technologies that provides immense capabilities in solving complex business problems.

Get FREE Access to Data Analytics Example Codes for Data Cleaning, Data Munging, and Data Visualization

All the components of the Hadoop ecosystem, as explicit entities are evident. The holistic view of Hadoop architecture gives prominence to Hadoop common, Hadoop YARN, Hadoop Distributed File Systems (HDFS), and Hadoop MapReduce of Hadoop Ecosystem. Hadoop common provides all java libraries, utilities, OS level abstraction, necessary java files, and scripts to run Hadoop, while Hadoop YARN is a framework for job scheduling and cluster resource management. HDFS in Hadoop architecture provides high throughput access to application data and Hadoop MapReduce provides YARN-based parallel processing of large data sets.

Let us, deep-dive, into the Hadoop architecture and its components to build the right solutions to given business problems.

Image Credit: mssqpltips.com

New Projects

Defining Architecture Components of the Big Data Ecosystem

Core Hadoop Components

The Hadoop Ecosystem comprises of 4 core components –

1) Hadoop Common-

Apache Foundation has pre-defined set of utilities and libraries that can be used by other modules within the Hadoop ecosystem. For example, if HBase and Hive want to access HDFS they need to make of Java archives (JAR files) that are stored in Hadoop Common.

Here's what valued users are saying about ProjectPro

Gautam Vermani

Data Consultant at Confidential

Ameeruddin Mohammed

ETL (Abintio) developer at IBM

Not sure what you are looking for?

View All Projects2) Hadoop Distributed File System (HDFS) -

The default big data storage layer for Apache Hadoop is HDFS. HDFS is the “Secret Sauce” of Apache Hadoop components as users can dump huge datasets into HDFS and the data will sit there nicely until the user wants to leverage it for analysis. HDFS component creates several replicas of the data block to be distributed across different clusters for reliable and quick data access. HDFS comprises of 3 important components-NameNode, DataNode and Secondary NameNode. HDFS operates on a Master-Slave architecture model where the NameNode acts as the master node for keeping a track of the storage cluster and the DataNode acts as a slave node summing up to the various systems within a Hadoop cluster.

Image Credit: slidehshare.net

HDFS Use Case-

Nokia deals with more than 500 terabytes of unstructured data and close to 100 terabytes of structured data. Nokia uses HDFS for storing all the structured and unstructured data sets as it allows processing of the stored data at a petabyte scale.

3) MapReduce- Distributed Data Processing Framework of Apache Hadoop

MapReduce is a Java-based system created by Google where the actual data from the HDFS store gets processed efficiently. MapReduce breaks down a big data processing job into smaller tasks. MapReduce is responsible for the analysing large datasets in parallel before reducing it to find the results. In the Hadoop ecosystem, Hadoop MapReduce is a framework based on YARN architecture. YARN based Hadoop architecture, supports parallel processing of huge data sets and MapReduce provides the framework for easily writing applications on thousands of nodes, considering fault and failure management.

The basic principle of operation behind MapReduce is that the “Map” job sends a query for processing to various nodes in a Hadoop cluster and the “Reduce” job collects all the results to output into a single value. Map Task in the Hadoop ecosystem takes input data and splits into independent chunks and output of this task will be the input for Reduce Task. In The same Hadoop ecosystem Reduce task combines Mapped data tuples into smaller set of tuples. Meanwhile, both input and output of tasks are stored in a file system. MapReduce takes care of scheduling jobs, monitoring jobs and re-executes the failed task.

MapReduce framework forms the compute node while the HDFS file system forms the data node. Typically in the Hadoop ecosystem architecture both data node and compute node are considered to be the same.

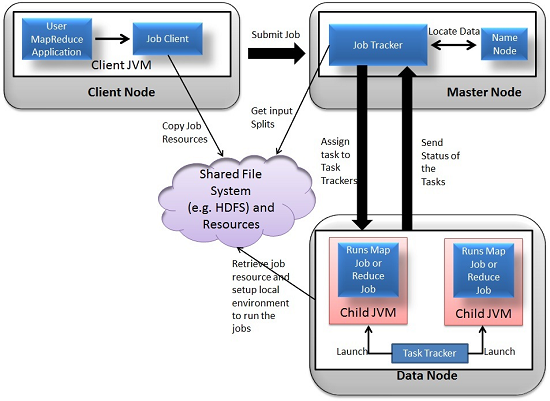

The delegation tasks of the MapReduce component are tackled by two daemons- Job Tracker and Task Tracker as shown in the image below –

Image Credit: saphanatutorial.com

MapReduce Use Case:

Skybox has developed an economical image satellite system for capturing videos and images from any location on earth. Skybox uses Hadoop to analyse the large volumes of image data downloaded from the satellites. The image processing algorithms of Skybox are written in C++. Busboy, a proprietary framework of Skybox makes use of built-in code from java based MapReduce framework.

>4)YARN

YARN forms an integral part of Hadoop 2.0.YARN is great enabler for dynamic resource utilization on Hadoop framework as users can run various Hadoop applications without having to bother about increasing workloads.

Image Credit: slidehshare.net

Key Benefits of Hadoop 2.0 YARN Component-

- It offers improved cluster utilization

- Highly scalable

- Beyond Java

- Novel programming models and services

- Agility

Get More Practice, More Big Data and Analytics Projects, and More guidance.Fast-Track Your Career Transition with ProjectPro

YARN Use Case:

Yahoo has close to 40,000 nodes running Apache Hadoop with 500,000 MapReduce jobs per day taking 230 compute years extra for processing every day. YARN at Yahoo helped them increase the load on the most heavily used Hadoop cluster to 125,000 jobs a day when compared to 80,000 jobs a day which is close to 50% increase.

The above listed core components of Apache Hadoop form the basic distributed Hadoop framework. There are several other Hadoop components that form an integral part of the Hadoop ecosystem with the intent of enhancing the power of Apache Hadoop in some way or the other like- providing better integration with databases, making Hadoop faster or developing novel features and functionalities. Here are some of the eminent Hadoop components used by enterprises extensively -

>Data Access Components of Hadoop Ecosystem- Pig and Hive

-

Pig-

​Apache Pig is a convenient tools developed by Yahoo for analysing huge data sets efficiently and easily. It provides a high level data flow language Pig Latin that is optimized, extensible and easy to use. The most outstanding feature of Pig programs is that their structure is open to considerable parallelization making it easy for handling large data sets.

Pig Use Case-

The personal healthcare data of an individual is confidential and should not be exposed to others. This information should be masked to maintain confidentiality but the healthcare data is so huge that identifying and removing personal healthcare data is crucial. Apache Pig can be used under such circumstances to de-identify health information.

-

Hive-

​ Hive developed by Facebook is a data warehouse built on top of Hadoop and provides a simple language known as HiveQL similar to SQL for querying, data summarization and analysis. Hive makes querying faster through indexing.

Hive Use Case-

Hive simplifies Hadoop at Facebook with the execution of 7500+ Hive jobs daily for Ad-hoc analysis, reporting and machine learning.

Get More Practice, More Big Data and Analytics Projects, and More guidance.Fast-Track Your Career Transition with ProjectPr

>Data Integration Components of Hadoop Ecosystem- Sqoop and Flume

-

Sqoop

​​Sqoop component is used for importing data from external sources into related Hadoop components like HDFS, HBase or Hive. It can also be used for exporting data from Hadoop o other external structured data stores. Sqoop parallelized data transfer, mitigates excessive loads, allows data imports, efficient data analysis and copies data quickly.

Sqoop Use Case-

Online Marketer Coupons.com uses Sqoop component of the Hadoop ecosystem to enable transmission of data between Hadoop and the IBM Netezza data warehouse and pipes backs the results into Hadoop using Sqoop.

-

Flume-

​Flume component is used to gather and aggregate large amounts of data. Apache Flume is used for collecting data from its origin and sending it back to the resting location (HDFS).Flume accomplishes this by outlining data flows that consist of 3 primary structures channels, sources and sinks. The processes that run the dataflow with flume are known as agents and the bits of data that flow via flume are known as events.

Flume Use Case –

Twitter source connects through the streaming API and continuously downloads the tweets (called as events). These tweets are converted into JSON format and sent to the downstream Flume sinks for further analysis of tweets and retweets to engage users on Twitter.

Become a Hadoop Developer By Working On Industry Oriented Hadoop Projects

>Data Storage Component of Hadoop Ecosystem –HBase

HBase –

HBase is a column-oriented database that uses HDFS for underlying storage of data. HBase supports random reads and also batch computations using MapReduce. With HBase NoSQL database enterprise can create large tables with millions of rows and columns on hardware machine. The best practice to use HBase is when there is a requirement for random ‘read or write’ access to big datasets.

HBase Use Case-

Facebook is one the largest users of HBase with its messaging platform built on top of HBase in 2010.HBase is also used by Facebook for streaming data analysis, internal monitoring system, Nearby Friends Feature, Search Indexing and scraping data for their internal data warehouses.

>Monitoring, Management and Orchestration Components of Hadoop Ecosystem- Oozie and Zookeeper

-

Oozie-

​Oozie is a workflow scheduler where the workflows are expressed as Directed Acyclic Graphs. Oozie runs in a Java servlet container Tomcat and makes use of a database to store all the running workflow instances, their states ad variables along with the workflow definitions to manage Hadoop jobs (MapReduce, Sqoop, Pig and Hive).The workflows in Oozie are executed based on data and time dependencies.

Build an Awesome Job Winning Project Portfolio with Solved End-to-End Big Data Projects

Oozie Use Case:

The American video game publisher Riot Games uses Hadoop and the open source tool Oozie to understand the player experience.

-

Zookeeper-

​Zookeeper is the king of coordination and provides simple, fast, reliable and ordered operational services for a Hadoop cluster. Zookeeper is responsible for synchronization service, distributed configuration service and for providing a naming registry for distributed systems.

Zookeeper Use Case-

Found by Elastic uses Zookeeper comprehensively for resource allocation, leader election, high priority notifications and discovery. The entire service of Found built up of various systems that read and write to Zookeeper.

Several other common Hadoop ecosystem components include: Avro, Cassandra, Chukwa, Mahout, HCatalog, Ambari and Hama. By implementing Hadoop using one or more of the Hadoop ecosystem components, users can personalize their big data experience to meet the changing business requirements. The demand for big data analytics will make the elephant stay in the big data room for quite some time.

>Apache AMBARI or Call it the Elephant Rider

The major drawback with Hadoop 1 was the lack of open source enterprise operations team console. As a result of this , the operations and admin teams were required to have complete knowledge of Hadoop semantics and other internals to be capable of creating and replicating hadoop clusters, resource allocation monitoring, and operational scripting. This big data hadoop component allows you to provision, manage and monitor Hadoop clusters A Hadoop component, Ambari is a RESTful API which provides easy to use web user interface for Hadoop management. Ambari provides step-by-step wizard for installing Hadoop ecosystem services. It is equipped with central management to start, stop and re-configure Hadoop services and it facilitates the metrics collection, alert framework, which can monitor the health status of the Hadoop cluster. Recent release of Ambari has added the service check for Apache spark Services and supports Spark 1.6.

- Regardless of the size of the Hadoop cluster, deploying and maintaining hosts is simplified with the use of Apache Ambari.

- Amabari monitors the health and status of a hadoop cluster to minute detailing for displaying the metrics on the web user interface.

Apache MAHOUT

Mahout is an important Hadoop component for machine learning, this provides implementation of various machine learning algorithms. This Hadoop component helps with considering user behavior in providing suggestions, categorizing the items to its respective group, classifying items based on the categorization and supporting in implementation group mining or itemset mining, to determine items which appear in group.

A distributed public-subscribe message developed by LinkedIn that is fast, durable and scalable.Just like other Public-Subscribe messaging systems ,feeds of messages are maintained in topics.

Apache Kafka Use Cases

- Spotify uses Kafka as a part of their log collection pipeline.

- Airbnb uses Kafka in its event pipeline and exception tracking.

- At FourSquare ,Kafka powers online-online and online-offline messaging.

- Kafka power's MailChimp's data pipeline.

What’s new in Hadoop 3.x?

Apache Hadoop 3.0.0 has incorporated quite a few significant changes over the previous major release line, Hadoop 2.x. Hadoop 3.0.0 was made generally available (GA) in December 2017. This means that it represents a point of API stability and is of quality that the Apache community considered production-ready. Since then, multiple versions of Hadoop 3.x have been released. Here are some notable changes of Hadoop 3.x over the previous version:

-

The minimum Java version supported by Hadoop 3.x is JDK 8.0. Oracle ended the use of JDK in 2015, and hence, in order to use Hadoop version 3.0 and above, users will have to have Java version 8 or above to compile and run Hadoop files.

-

Support for Erasure coding: Erasure coding is a method used to recover data if there is a failure on the company hard disk. Erasure coding is generally used in high-level RAID (Redundant Array of Independent Disks) technology to perform data recovery. RAID implements Erasure Coding through a method called striping, where logically sequential data gets divided into smaller units and stored as consecutive units on different blocks. For each stripe of the original cells which contain this data, a number of parity cells are calculated and used to store data. This process is known as encoding. Any errors on striping cells can be recovered using a decoding calculation based on data cells that are surviving or other parity cells. In Hadoop 2 and below, the data is divided into blocks and stored. Each data block has replicas that are stored across different nodes on the cluster. The number of replicas is determined by the replication factor, which is three by default. This creates an additional 200% overhead of storage. Erasure coding is used to provide fault tolerance like replication but without the additional overhead of storage.

-

Support for more than two NameNodes: Hadoop clusters have a master-slave architecture where the NameNode functions as the master and the DataNodes function as the slaves. The NameNode is the single point of failure in a single Hadoop cluster. The cluster has to wait for the NameNode to be up and running in order to function. Hadoop 2.x has support for a single active NameNode and a single standby NameNode. As of Hadoop 3.x, the edits are replicated to a quorum of three JournalNodes (JNs). In this manner, Hadoop 3.x is better able to handle fault tolerance than the previous versions.

-

Rewriting Shell Scripts: The shell scripts have been rewritten in Hadoop 3.x to fix many previously found bugs, resolve specific compatibility issues, and add new features. Some of the essential features added are:

-

-

All subsystems of Hadoop shell script will now execute Hadoop-env.sh so that all environment variables can be in one location.

-

The -daemon option has facilitated the movement of daemonization from *-daemon.sh to the bin commands. Hadoop 3 allows using the command “-daemon start” to start a daemon, “-daemon stop” to stop a daemon, and -daemon status to set the daemon’s status.

-

Operations that result in the triggering of ssh connections can be used in Hadoop 3 if pdsh is installed.

-

Shell scripts in Hadoop 3 report error messages for various log states and pid directories when daemons are started instead of unprotected shell errors displayed to users in previous versions.

-

${HADOOP_CONF_DIR} is maintained throughout the cluster without requiring any symlinking.

-

-

- YARN (Yet Another Resource Negotiator) Timeline Service v.2: This is used to address two significant challenges that were previously faced: improving the scalability and reliability of the Timeline Service and introducing flows and aggregation to enhance usability. In YARN, the Timeline service is responsible for storing and retrieving an application’s information. In Hadoop 1, the Timeline Service allowed users to only make a single instance of reader/writer and storage architecture. This could not be scaled further. Hadoop 2 used a distributed writer architecture in which data read, and writer operations could be separated. Here, distributed collectors were provided for every YARN application. Timeline service v.2 uses HBase for storage purposes and hence can be scaled to massive sizes and ensures that a good response time is provided for read and write operations.

There are two significant categories of information that are stored by Timeline service v.2:

-

-

Generic information related to the completed application: includes data such as user information, queue name, container information that will run for each application attempt, and the number of attempts made per application.

-

Information related to running and completed applications per framework: includes information on the counters, count of Map and Reduce tasks, and information that the developer broadcasted for the Timeline Server along with the Timeline client.

-

-

-

Default Ports of Multiple Services Have been changed: In Hadoop 2.x, the multiple service port for Hadoop had to be in the Linux ephemeral port range -> 32768-6100. This particular configuration sometimes led to conflicts with other applications, and as a result, the service could fail to bind to the ports. As a solution to this problem, in Hadoop 3.x, the ports causing conflicts have been moved out of the Linux ephemeral port range, and new ports have been assigned. As a result, the port numbers of multiple services, including the NameNode, Secondary NameNode, and the DataNode, have been changed.

-

Filesystem Connector Support: Hadoop 3 comes with support for integration with Microsoft Azure Data Lake and Aliyun Object Storage System as Hadoop-compatible filesystems.

-

Intra-DataNode Balancer: The DataNodes in a Hadoop cluster are used for storage purposes. A single DataNode handles writes to multiple disks. During a normal write operation, the disks get filled up with data evenly. However, the addition or removal of a disk can cause a significant skew within a DataNode. The existing HDFS-Balancer was unable to handle such a considerable skew since it was mainly responsible for inter- DN skew and not intra- DN skew. Hadoop version 3 introduces the latest intra-DataNode balancing feature, which can be invoked through the HDFS disk balancer CLI.

- Shaded Client Jars: Hadoop 3.x provides a new Hadoop-client API and Hadoop-client-runtime. They deliver hadoop dependencies to a single jar file or a single packet. Hadoop-client APIs have compile-time scope, and Hadoop-client-runtime has runtime scope in Hadoop 3. Both Hadoop-client API and Hadoop-client-runtime contain third-party dependencies that Hadoop-client provides.The Hadoop-client on Hadoop 2.x pulls Hadoop’s transitive dependencies onto a Hadoop application’s classpath. This could result in a problem if the versions of these transitive dependencies conflicted with any versions used by the application. In Hadoop 3, developers can easily bundle all the dependencies into a single jar file and test the jars for any version conflicts. In this manner, Hadoop dependencies on the application’s classpath can be avoided.

-

Task Heap and Daemon Management: Hadoop versions 3.x allow for easy configuration of Hadoop daemon heap sizes by making use of some new ways.

-

Heap sizes can now be auto-tuned based on the memory size of the host by getting rid of default heap sizes. The older default heap size can, however, be used by configuring HADOOP_HEAPSIZE_MAX in the Hadoop-env.sh file.

-

The HADOOP_HEAPSIZE variable has been replaced with two other variables, HADOOP_HEAPSIZE_MAX and HADOOP_HEAPSIZE_min. The internal variable JAVA_HEAP_SIZE has also been depreciated in the latest Hadoop version.

-

The configuration of heap sizes associated with the map and reduce tasks have been simplified. The heap size is no longer required to be specified in either the task configuration or as a Java option.

-

- Support for Distributed Scheduling and Opportunistic Containers: Opportunistic containers are a new ExecutionType that have been implemented in Hadoop 3. Opportunistic containers can be dispatched at a NodeManager for execution even if no resources are available at the time of scheduling.In this case, the containers get queued at the NodeManager while waiting for resources to be available. Since opportunistic containers have lower priority than the default Guaranteed containers, they are preempted if required in order to make room for Guaranteed containers. In this manner, cluster utilization can be improved. Opportunistic containers work similarly to the existing YARN containers and are allocated by the Capacity Scheduler. Once they are dispatched to a node, it is an indication that there are available resources to allow their execution to begin immediately. Opportunistic Containers run to completion, provided there are no failures. By default, these containers are allocated by the central Resource Manager, but they can also be allocated by an AMRMProtocol Interceptor, which is an implementation of a distributed scheduler.

- MapReduce Task-Level Native Optimization: Hadoop 3 has a native Java implementation added for the map output collector in MapReduce. This particular implementation of the map output collector has been shown to provide speed-ups for shuffle-intensive jobs by 30% or more.

About the Author

ProjectPro

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,