Rabbit in the Cloud

How we deployed RabbitMQ on AWS

In an effort to move away from our legacy monolithic service, we decided to take on the challenge of building a new communication platform based on a microservice architecture, which would be more focused and easier to manage. The challenge was big and exciting; we had to make crucial decisions early on, decisions we would have to live with for the foreseeable future. One of the most important was how to integrate our microservices once we had created them. The common, obvious choice would be REST APIs. That usually works well, but in certain parts of the platform we needed more speed: we were aiming for a fast platform, one that would suffer as little as possible from the inherent latency and connection limits of HTTP.

Our shiny new platform would need to process several million messages every day, guaranteeing that no message is lost and that every message can be accounted for throughout its journey.

To achieve all that, we decided to use RabbitMQ, because it offered reliable, guaranteed message delivery through transactions, flexible routing of messages based on routing keys and other conditions, support for high availability and clustering, and, most of all, the maturity of the project as a whole.

In this article, I will describe the challenges we encountered in bringing RabbitMQ to the cloud.

A brief background of RabbitMQ

RabbitMQ is message broker middleware, first released in 2007, that supports cross-system integration via message exchange. It implements the Advanced Message Queuing Protocol (AMQP), which differs from other standards such as JMS in that it standardizes the wire protocol rather than the development API.

RabbitMQ offers a highly flexible way to define exchanges and bind them to queues, with multiple routing options. In RabbitMQ, you publish messages to exchanges, and based on your configuration (the bindings between exchanges and queues), the messages are delivered to the correct queues. This allows for many interesting and useful scenarios.
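To make the routing idea concrete, here is a minimal plain-Python sketch of how a topic exchange decides which queues receive a message: routing keys are dot-separated words, `*` in a binding pattern matches exactly one word and `#` matches zero or more. This only illustrates the AMQP topic-exchange semantics; it is not RabbitMQ's implementation, and the queue names and routing keys are hypothetical.

```python
from typing import Iterable, List, Tuple


def topic_matches(pattern: str, routing_key: str) -> bool:
    """Return True if a topic binding pattern matches a routing key.

    Words are separated by '.'; '*' matches exactly one word and
    '#' matches zero or more words.
    """
    return _match(pattern.split("."), routing_key.split("."))


def _match(pattern: List[str], key: List[str]) -> bool:
    if not pattern:
        # Pattern exhausted: it matches only if the key is exhausted too.
        return not key
    head, rest = pattern[0], pattern[1:]
    if head == "#":
        # '#' either matches zero words here, or swallows one word and stays active.
        return _match(rest, key) or (bool(key) and _match(pattern, key[1:]))
    if not key:
        return False
    if head == "*" or head == key[0]:
        return _match(rest, key[1:])
    return False


def route(bindings: Iterable[Tuple[str, str]], routing_key: str) -> List[str]:
    """Return the queues whose binding pattern matches the routing key."""
    return sorted({queue for queue, pattern in bindings if topic_matches(pattern, routing_key)})


# Hypothetical (queue, binding pattern) pairs, for illustration only.
bindings = [
    ("email-sender", "message.email.*"),
    ("sms-sender", "message.sms.*"),
    ("audit-log", "message.#"),
]
print(route(bindings, "message.email.created"))  # ['audit-log', 'email-sender']
```

A single published message can therefore fan out to several queues (here, a sender and an audit log) without the producer knowing about either of them.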

RabbitMQ is built with Erlang, a functional programming language created by Ericsson back in 1986 to be fault-tolerant, distributed and capable of hot code swapping, designed for long-running and possibly non-stop applications. Running RabbitMQ requires no Erlang knowledge beyond the basic syntax used for defining system properties.

How hard could it be to bring it to the cloud?

Two words: “high availability”. When a system such as RabbitMQ is what integrates your microservices, high availability matters more than ever.

RabbitMQ is capable of running in high availability mode, but it requires its machines to have well-known host names. Unfortunately, that proves difficult on EC2, where instances come and go all the time, each with a new IP address (unless you reserve those IPs). In our case, that was a limitation we needed to work around.

We run our cluster on AWS, which by default limits each account to five Elastic IP addresses per region, and in our case most of those are already used by other services. Hence, we opted to identify the cluster members dynamically by building a sidekick service that runs alongside the RabbitMQ server.

The sidekick service queries the AWS API for the healthy instances running in the same CloudFormation stack with the same version, and designates the oldest of them as the master node. It keeps querying the cluster to detect whether the current master has died, in which case the oldest remaining node is promoted, and so on.
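The election rule itself fits in a few lines. The sketch below assumes the sidekick has already turned the AWS API response (for example, from EC2 `DescribeInstances`, whose instances carry a `LaunchTime` and the `aws:cloudformation:stack-name` tag) into simple records; the `Instance` type, the `version` field and the health flag are hypothetical stand-ins for however the real service tracks them.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass(frozen=True)
class Instance:
    """Minimal, hypothetical view of an EC2 instance as the sidekick sees it."""
    instance_id: str
    private_dns_name: str
    launch_time: datetime
    stack_name: str  # e.g. taken from the aws:cloudformation:stack-name tag
    version: str     # hypothetical tag carrying the deployed cluster version
    healthy: bool


def elect_master(instances: Iterable[Instance], stack_name: str,
                 version: str) -> Optional[Instance]:
    """Return the oldest healthy instance of the same stack and version, or None."""
    candidates = [
        i for i in instances
        if i.healthy and i.stack_name == stack_name and i.version == version
    ]
    if not candidates:
        return None
    # Oldest launch time wins; the instance ID breaks ties so every sidekick
    # evaluating the same data reaches the same answer.
    return min(candidates, key=lambda i: (i.launch_time, i.instance_id))
```

Because the result depends only on the instance list, the sidekick can simply re-run it on every polling cycle: when the current master disappears from the list or turns unhealthy, the next call returns the next-oldest node, which matches the promotion behaviour described above.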

We use Pivotal’s (now deprecated) rabbitmq-clusterer plugin. Updating the configuration file read by this plugin forces the cluster to re-form according to the changes.
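The clusterer's configuration is an Erlang term, which is where the basic Erlang syntax mentioned earlier comes in. The sketch below renders such a file from Python, based on our reading of the plugin's README: a `version` number, the list of nodes with their `disc` or `ram` type, and a `gospel` node whose state the others adopt. Treat the exact term shape as an assumption to check against the plugin version you run; using the elected master as the gospel node is likewise only one plausible choice, and the node names are hypothetical.

```python
from typing import Dict

VALID_KINDS = {"disc", "ram"}


def render_clusterer_config(version: int, nodes: Dict[str, str], gospel: str) -> str:
    """Render a rabbitmq-clusterer style config (assumed shape, see lead-in).

    `nodes` maps a node name such as 'rabbit@ip-10-0-0-1' to 'disc' or 'ram'.
    """
    if gospel not in nodes:
        raise ValueError(f"gospel node {gospel!r} must be one of the cluster nodes")
    for name, kind in nodes.items():
        if kind not in VALID_KINDS:
            raise ValueError(f"node {name!r} has invalid type {kind!r}")
    # Node names contain '-' and '.', so they must be quoted as Erlang atoms.
    node_terms = ", ".join(f"{{'{name}', {kind}}}" for name, kind in sorted(nodes.items()))
    return (
        f"[{{version, {version}}},\n"
        f" {{nodes, [{node_terms}]}},\n"
        f" {{gospel, {{node, '{gospel}'}}}}].\n"
    )


# Hypothetical usage: the node elected as master becomes the gospel node.
print(render_clusterer_config(
    version=2,
    nodes={"rabbit@ip-10-0-0-1": "disc", "rabbit@ip-10-0-0-2": "disc"},
    gospel="rabbit@ip-10-0-0-1",
))
```

Bumping `version` on every change is deliberate: as far as we understand the plugin, it only adopts a configuration that is newer than the one it is currently running.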

There is also the problem of cluster upgrades. As mentioned earlier, RabbitMQ is built using Erlang and is only backwards compatible at the minor version level, so if the major version of RabbitMQ and/or Erlang changes, the old and new nodes are not compatible with each other, and all hell breaks loose!
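The rule above boils down to a small decision the deployment tooling can make before rolling out new instances. This sketch encodes it as stated (a major version change of RabbitMQ or Erlang means the new nodes cannot join the running cluster); the `components` parameter is our own addition so the check can be tightened to major.minor if the release notes of the versions you run say so, and the strategy names are hypothetical.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """'3.6.10' -> (3, 6, 10). Assumes purely numeric, dot-separated versions."""
    return tuple(int(part) for part in version.split("."))


def same_series(old: str, new: str, components: int = 1) -> bool:
    """True if the first `components` version parts match (1 = major only)."""
    return parse_version(old)[:components] == parse_version(new)[:components]


def upgrade_strategy(old_rabbit: str, new_rabbit: str,
                     old_erlang: str, new_erlang: str,
                     components: int = 1) -> str:
    """Decide whether new nodes can join the running cluster."""
    if (same_series(old_rabbit, new_rabbit, components)
            and same_series(old_erlang, new_erlang, components)):
        return "join-existing-cluster"
    # Incompatible versions: bring up a separate cluster and migrate to it.
    return "new-cluster-with-federation"


print(upgrade_strategy("3.6.10", "3.6.15", "19.3", "20.3"))  # new-cluster-with-federation
```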

Dealing with cluster upgrades

So, we opted for a simple solution: enable RabbitMQ’s federation plugin as soon as a new cluster shows up in our AWS account. With this plugin, we’d set up a federation link between the two clusters, with the old cluster acting as the upstream of the new one. We’d then have all our services reconnect to the new cluster, forcing the remaining messages to be transferred from the old cluster to the new one. Once we were sure the old cluster had been drained, we’d shut it down without losing any messages.
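In RabbitMQ federation, the downstream broker is the one that declares its upstreams and pulls messages from them, so moving messages from the old cluster to the new one means declaring the old cluster as an upstream on the new cluster. The sketch below only builds the two requests for the new cluster's management HTTP API (a `federation-upstream` parameter and a policy that federates matching queues) plus a drain check; it sends nothing. The upstream name, policy name, URI and queue pattern are hypothetical, and the queue depths are assumed to come from the old cluster's `GET /api/queues` (its `messages` field).

```python
import json
from typing import Dict, List, Tuple
from urllib.parse import quote

Request = Tuple[str, str, str]  # (HTTP method, path, JSON body)


def federation_requests(old_cluster_uri: str, vhost: str = "/",
                        upstream_name: str = "old-cluster",
                        queue_pattern: str = ".*") -> List[Request]:
    """Management-API requests for the NEW cluster: declare the old cluster
    as a federation upstream and federate queues matching `queue_pattern`."""
    v = quote(vhost, safe="")  # the default vhost "/" must be sent as %2F
    name = quote(upstream_name, safe="")
    upstream = (
        "PUT",
        f"/api/parameters/federation-upstream/{v}/{name}",
        json.dumps({"value": {"uri": old_cluster_uri}}),
    )
    policy = (
        "PUT",
        f"/api/policies/{v}/federate-from-{name}",
        json.dumps({
            "pattern": queue_pattern,
            "definition": {"federation-upstream": upstream_name},
            "apply-to": "queues",
        }),
    )
    return [upstream, policy]


def drained(queue_depths: Dict[str, int]) -> bool:
    """True once every queue on the old cluster reports zero messages."""
    return all(depth == 0 for depth in queue_depths.values())


for method, path, body in federation_requests("amqp://user:secret@old-cluster.internal"):
    print(method, path, body)
```

Federated queues only move messages when the downstream queue has consumers, which is why reconnecting the services to the new cluster is what actually pulls the remaining messages across; `drained` is the signal that the old cluster can be shut down.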

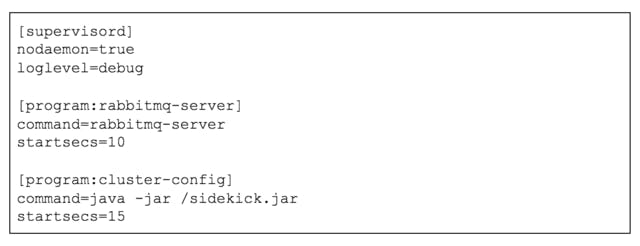

Finally, all of this runs within Docker containers on EC2 instances, and to have the sidekick and the RabbitMQ server run alongside each other, we opted to use supervisord to start and supervise both processes.

Where do we go from here?

Well, so far this setup has proved useful to us, and we would like to take it even further. One thing is for sure: we want to get rid of the deprecated plugin and move to a more stable one that performs node discovery automatically based on AWS Auto Scaling groups.

In essence, we would like to reduce the duties of the sidekick service to the bare minimum and run it independently from the cluster, so that we can update it without having to redeploy the broker, while still maintaining a state-of-the-art RabbitMQ cluster in the cloud.

We would also like persisted queues to outlive the RabbitMQ cluster itself, so that they survive a total failure of all instances; this is currently another shortcoming we have to worry about.

And finally, we would like to make the upgrade from one version to another as easy as just deploying the new cluster and then removing the old one. This would help our team perform more seamless deployments and upgrades of the RabbitMQ cluster.

Final Words

We are really satisfied with the features we get out of using RabbitMQ. We have invested enough time to make it work for us in the cloud. We ended up learning a lot along the way, and we’d like to continue to use it while making it easier to maintain.

We're hiring! Do you like working in an ever-evolving organization such as Zalando? Consider joining our teams as a Software Engineer!