Big Data Timeline- Series of Big Data Evolution

"Big data is at the foundation of all of the megatrends that are happening today, from social to mobile to the cloud to gaming."- said Chris Lynch, the ex CEO of Vertica.

“Information is the oil of the 21st century, and analytics is the combustion engine”- said Peter Sondergaard, Gartner Analyst

“Hiding within those mounds of data is knowledge that could change the life of a patient or change the world.”- Atul Butte, Stanford

With all the hype around big data, it is often described as the fuel of the 21st century, driving much of what we do. Big data is among the most talked-about subjects in the tech world because it promises to predict the future by analysing massive amounts of data. It may be the hot topic in business right now, but its roots run deep. Here’s a look at important milestones, tracking the evolution of how data has been collected, stored, managed and analysed:

- 1926 - Nikola Tesla predicted that humans would one day be able to access and analyse huge amounts of data using a device small enough to fit in a pocket.

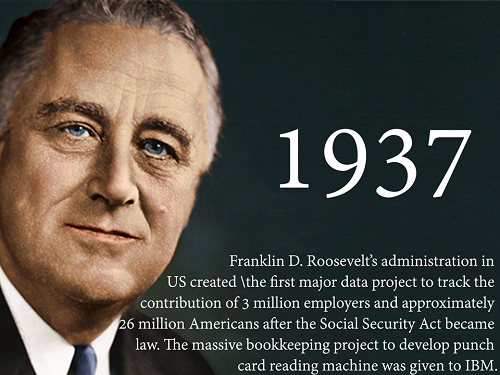

- 1937 - Franklin D. Roosevelt’s administration in the US created the first major data project, to track the contributions of nearly 3 million employers and 26 million Americans after the Social Security Act became law. The massive bookkeeping project, which required developing punch card reading machines, was awarded to IBM.

- 1940s-1950s - Electronic computing was developed to perform high-speed calculations.

- 1944 - An early warning sign appeared of the coming data storage and retrieval problem, driven by the exponential growth of data. Fremont Rider, a Wesleyan University librarian, estimated that libraries in the US were doubling in size every 16 years. At that growth rate, he predicted that the Yale library would hold 200,000,000 volumes by 2040, requiring a cataloguing staff of more than 6,000 people and approximately 6,000 miles of shelves.

- 1949 - The “Father of Information Theory”, Claude Shannon, researched the storage capacity of various items such as photographic data and punch cards. The largest item on his list was the Library of Congress, which he measured at 100 trillion bits of data.

- 1960- Data warehousing became cheaper.

- 1996 - Digital data storage became more cost-effective than paper, according to R.J.T. Morris and B.J. Truskowski.

- 1997 - The term “big data” was used for the first time. A paper on visualization published by David Ellsworth and Michael Cox of NASA’s Ames Research Center described the challenges of working with large unstructured data sets on the computing systems of the time. “We call this the problem of big data,” they wrote, and the term was coined in this setting.

- 1998 - Carlo Strozzi developed an open source relational database and named it NoSQL. It was only about 10 years later, however, that NoSQL databases gained momentum with the need to process large unstructured data sets.

- 1999 - Hal R. Varian and Peter Lyman at UC Berkeley quantified information in computer storage terms. According to their study, titled “How Much Information?”, the world produced approximately 1.5 exabytes of information in 1999.

- 1999- The term Internet of Things (IoT) was used for the very first time by Kevin Ashton in a business presentation at P & G.

- 2001 - The dimensions of big data, i.e. the 3Vs, were defined by Gartner analyst Doug Laney in a research paper titled “3D Data Management: Controlling Data Volume, Velocity, and Variety”.

- 2005 - The term Big Data may have been popularised by O’Reilly Media in 2005, but the use of big data and the need to analyse it had been recognised well before then.

- 2005- The tiny toy elephant Hadoop was developed by Doug Cutting and Mike Cafarella to handle the big data explosion from the web. Hadoop is an open source solution for storing and processing large unstructured data sets.

- 2005 - The National Science Board suggested that the National Science Foundation should create a career path to produce a sufficient number of high-quality data scientists to handle the exponentially growing volume of digital data.

- 2006 - According to IDC, 161 exabytes of data was produced in 2006, a figure expected to increase roughly sixfold to 988 exabytes within 4 years. This was the first study to estimate and forecast the growth of information.

- 2007 - North Carolina State University established the Institute for Advanced Analytics, which offered the first Master’s degree in Analytics.

- 2008 - With the continuous explosion of data, George Gilder and Bret Swanson predicted that by the end of 2015 US IP traffic would reach 1 zettabyte and US internet usage would be 50 times what it was in 2006.

- 2008 - The world’s CPUs processed 9.57 trillion gigabytes of data.

- 2008 - According to a survey by the Global Information Industry Center, Americans consumed approximately 1.3 trillion hours of information in 2008, i.e. an average of 12 hours of information per day. The survey estimates that an average person consumes 34 gigabytes of information and 100,500 words in a single day, bringing total consumption to 10,845 trillion words and 3.6 zettabytes.

- 2008-Google processed 20 petabytes of data in a single day.

- 2009- According to a Gartner report, Business Intelligence (BI) became a top priority for the Chief Information Officers in 2009.

- 2009 - A McKinsey report estimated that, on average, a US company with 1,000 employees stores more than 200 TB of data.

- 2011 - 1.8 zettabytes of data was generated from the dawn of civilization to the year 2003. In 2011, it took only 2 days to generate 1.8 zettabytes of information.

- 2011 - A McKinsey report on Big Data highlighted a looming shortage of analytics talent in the US: by 2018, the US alone would face a shortage of 1.5 million analysts and managers and 140,000 to 190,000 skilled professionals with deep analytical skills.

- 2011 - IBM’s supercomputer Watson analyses 200 million pages (approximately 4 TB of data) in seconds.

- 2011 - According to a Ventana Research survey, 94% of Hadoop users run big data analytics on massive amounts of unstructured data, which was not possible earlier; 88% analyse the unstructured data in great detail; and 82% can now store more of their data for analysis at a later point in time.

- 2012 - Harvard Business Review calls Data Scientist the “Sexiest Job of the 21st Century”.

- 2012 - There are 500,000+ data centres across the world, which together occupy the space of 5,955 football fields.

- 2012 - The Obama administration announced the Big Data Research and Development Initiative, which consisted of 84 big data programs to address the problems the government faced with the growing influx of data. The US government invested $200 million in big data research projects. Big data analysis also played a crucial part in Obama’s 2012 re-election campaign.

- 2012 - According to an Information Week report, 52% of big data companies say that it will be difficult to find analytics experts in the next 2 years.

- 2013 - According to the annual Digital Universe Study by EMC, 4.4 × 10^21 bytes, i.e. 4.4 zettabytes, of data was produced in the digital universe in 2013. The study estimates that the volume of data will grow exponentially to 44 zettabytes by the end of 2020.

- 2013- According to Gartner, 72% of the organizations plan to increase their spending on big data analytics but 60% actually stated that they lacked personnel with required deep analytical skills.

- 2013- Only 22% of the data produced has semantic value, of which only 5% of the data is actually leveraged for analysis by businesses. EMC study estimates that by 2020, 35% of the data produced will hold semantic value.

- 2014- According to IDC, by end of 2020 business transactions on the web i.e. B2B and B2C transactions will exceed 450 billion every day.

- 2014- By 2020, the number of IT administrators tracking the data growth will increase by 1.5 times. There will be demand for 75x more files and 10x more servers.

- 2014 - By the end of 2020, one third of the data produced in the digital universe will live in or pass through the cloud.

- 2014 - Gartner predicts that 4.9 billion things will be connected to the Internet by the end of 2015, a number expected to reach 25 billion by 2020.

- 2014 - Decoding the human genome originally took 10 years; with the advent of big data analytics, it can now be done far faster.

- 2015 - Research estimates suggest that 2.5 quintillion bytes of data is produced every day, i.e. 2.5 × 10^18 bytes (25 followed by 17 zeros).

- 2015 - Google is the largest big data company in the world: it stores 10 billion gigabytes of data and processes approximately 3.5 billion requests every day.

- 2015 - Amazon is the company with the largest number of servers: the 1,000,000,000 gigabytes of big data produced by Amazon from its 152 million customers is stored on more than 1,400,000 servers in various data centres.
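The timeline mixes gigabytes, exabytes, zettabytes and raw byte counts, which makes the figures hard to compare at a glance. The short Python sketch below puts a few of them on a common scale. It is only an illustration: it assumes decimal (SI) units, where 1 GB is 10^9 bytes, and the helper names `convert` and `growth_factor` are made up for this example rather than taken from any of the cited studies.

```python
# Decimal (SI) byte units. This is an assumption: binary units (GiB, TiB, ...)
# would give slightly different numbers.
UNITS = {"B": 1, "GB": 10**9, "TB": 10**12, "PB": 10**15, "EB": 10**18, "ZB": 10**21}


def convert(value, from_unit, to_unit):
    """Convert a quantity from one decimal byte unit to another."""
    return value * UNITS[from_unit] / UNITS[to_unit]


def growth_factor(start, end):
    """Return how many times larger `end` is than `start`."""
    return end / start


# 2015: 2.5 quintillion bytes per day, expressed in exabytes.
daily_eb = convert(2.5 * 10**18, "B", "EB")

# 2015: Amazon's 1,000,000,000 gigabytes, expressed in exabytes.
amazon_eb = convert(1_000_000_000, "GB", "EB")

# 2006: IDC's 161 exabytes growing to 988 exabytes.
idc_growth = growth_factor(161, 988)

# 2013: EMC's 4.4 zettabytes growing to 44 zettabytes by 2020.
emc_growth = growth_factor(4.4, 44)

print(f"Daily data production: {daily_eb:.1f} EB")
print(f"Amazon data: {amazon_eb:.1f} EB")
print(f"IDC forecast growth: {idc_growth:.2f}x")
print(f"EMC forecast growth: {emc_growth:.1f}x")
```

Seen this way, the IDC forecast works out to slightly more than a sixfold increase, and the EMC forecast to a tenfold increase between 2013 and 2020.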

With the dawn of a new era founded entirely on data, opportunities in the big data industry for computer programmers, investors, entrepreneurs and other IT professionals are growing as big data grows exponentially. Companies that deploy a disruptive business model with a strong focus on data will be “The Next Big Thing” in the market.

Do you think that the big data predictions about the future are accurate? Do you have any amazing big data statistics or facts to share? Let us know in comments below!

About the Author

ProjectPro

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,